Enhancing Assessment with Online Quizzes: Strategies and Insights

Explore the effective use of online quizzes for summative assessment in academia, with insights on question designing, risk mitigation strategies, and optimizing student engagement. Discover the impact of continuous assessment practices, literature reviews, and research evidence in enhancing student learning outcomes.

Presentation Transcript

Online quizzes for summative assessment Presenters: Huw Morgan [huw.morgan@manchester.ac.uk]

Ice Breaker: Ambiguity. Laurel/Yanny? Brainstorm or Green-needle? Professor David Alais from the University of Sydney's school of psychology says the Yanny/Laurel sound is an example of a perceptually ambiguous stimulus such as the Necker cube or the face/vase illusion. "They can be seen in two ways, and often the mind flips back and forth between the two interpretations. This happens because the brain can't decide on a definitive interpretation," Alais says. "If there is little ambiguity, the brain locks on to a single perceptual interpretation ... All of this goes to highlight just how much the brain is an active interpreter of sensory input, and thus that the external world is less objective than we like to believe." https://www.theguardian.com/technology/2018/may/16/yanny-or-laurel-sound-illusion-sets-off-ear-splitting-arguments

Session Objectives
- Appreciate the research findings on using online quizzes in summative, continuous assessment
- Be able to create basic quiz questions in Blackboard
- Be aware of how Blackboard can minimise the risks of student collusion when quizzes are offered outside University PC clusters:
  - Use of quiz pools, sets, and randomising questions
  - Setting timescales and time limits for each quiz
- Consider question design in your discipline, and the possibility of using partial credit for more advanced aspects
- Be aware of the support that is available to help you!

BMAN10621A/B/M: Fundamentals in Financial Reporting
- 854 students over 3 cohorts
- Single-semester, first-year UG course; diverse student background; 80% exam; broad syllabus extending from accounting principles to preparation/analysis of financial statements
- Assessment in 2015-16: single online assignment for 20% in week 8, with minimal feedback on answers and quite late in the course
- Anecdotal evidence of lack of student preparation before workshops
- Challenge of monitoring progress in large cohorts
- We wanted to encourage active and early participation
- The subject requires a continuous building up of knowledge and understanding so that by the end the student is able to prepare a set of financial statements

Literature Review
- JISC Project on assessment design (Ferrell 2012)
- Review of Continuous Assessment in HE (Hernández 2012)
- Learning-oriented assessment: Feed Forward v Feedback
- Aspects of cognitive load (Halabi 2006)
- Research Evidence on Computer-based assessment

Effecting Sustainable Change in Assessment Practice and Experience: ESCAPE Project
A typical example of assessment (timeline diagram across the semester; key: low, medium, and high stakes assessment):
- Formative or low stakes assessment: week 5
- High stakes assessment (formal examination): end of semester
Possible consequences:
- Student workload not distributed
- Teachers not seeing student conceptions until too late
- Examination too high stakes
http://jiscdesignstudio.pbworks.com/w/page/12458419/ESCAPE%20Project

Assessment Design: Some better patterns?
Pattern (timeline diagram across the semester):
- Formative or low stakes assessment: week 3
- Two separate low / medium stakes assessments: weeks 7 & 12
Possible consequences:
- Engages students early with the curriculum
- Student workload reasonably well spread out
- Not reliant on high stakes assessment activity
- No (limited?) opportunity for feedback after 3rd assessment
http://jiscdesignstudio.pbworks.com/w/page/12458419/ESCAPE%20Project

Assessment Design: Some better patterns?
Pattern (timeline diagram across the semester):
- Low stakes assessment weekly / fortnightly across all of the semester
Possible consequences:
- Engages students early with the curriculum
- Student workload is evenly distributed across the entire semester
- All assessments are low stakes
- Could be demanding in staff / student time
- Assessment tasks could assess specific concepts / parts of the curriculum
- Staff gain early / regular feedback on student performance and concept understanding
- Tasks could be computer-based assessment
http://jiscdesignstudio.pbworks.com/w/page/12458419/ESCAPE%20Project

Assessment Design: Some better patterns?
Pattern (timeline diagram across the semester):
- Low stakes assessment fortnightly for most of the semester
- Medium/high stakes assessment (integrating? / overview of previous tasks?), tutor marked
Possible consequences:
- Engages students early with the curriculum
- Student workload is evenly distributed across the entire semester
- Low stakes continuous assessment building to medium/high stakes assessment (exam)
- Could be demanding in staff / student time (although BBD quizzes will assist)
- Tasks could assess specific concepts, building upon previous tasks and aiding cognitive load
- Staff gain early / regular feedback on student performance and concept understanding
- Tasks could be computer-based assessment
http://jiscdesignstudio.pbworks.com/w/page/12458419/ESCAPE%20Project

Computer Assisted Assessment (JISC)
Requirements:
- A QuestionMark Perception (QMP) account
Benefits (students and staff):
- Formative and summative assessment online
- The possibility of immediate and detailed feedback to students
- More detailed and immediate analysis of student performance on each question, enabling greater fine tuning of assessment for future use
- The ability to question the student right across the curriculum, addressing the full range of learning outcomes
- Greater efficiency and reliability, as a large number of papers can be marked quickly and consistently
Tips:
- The literature shows that gradual introduction (e.g. a few short formative tests, and building a bank of tested questions over a period of time before moving to summative assessment) is lower risk and less stressful for staff

Continuous Assessment in HE?
- Define: summative assessment v formative assessment
- Continuous assessment generally combines both:
  - Assessment tasks that provide feedback that students learn from, and provide part of their grade, would meet both definitions
- Learning-oriented assessment aims to encourage and support students' learning (Carless 2007): focus on the learning process and provide effective feedback; promote students' autonomy and responsibility for monitoring and managing their own learning
Hernández, R. (2012) "Does continuous assessment in higher education support student learning?" Higher Education 64(4): 489-502.
Carless, D. (2007) "Learning oriented assessment: conceptual bases and practical implications." Innovations in Education and Teaching International 44(1): 57-66.

Continuous Assessment in HE?
Refocus on effective - and timely - feedback to support students' learning, and how eLearning techniques can facilitate this: in this case, in the context of a large UG first year cohort.
Brown (1999) suggests effective feedback requires:
1. A clear statement of what will be assessed
2. A judgement of the students' work
3. Feedback to address the gap between what they know and what's expected of them.
Such description of advice and support to improve learning may be missing or mistimed: best described by the term feed-forward: how learners respond to feedback.
Brown, S. (1999). "Institutional strategies for assessment." Assessment matters in higher education: Choosing and using diverse approaches: 3-13

Feedback v Feed Forward (JISC) While feedback focuses on current performance (and may simply justify the grade awarded), feed forward looks ahead to the next assignment. Feed forward offers constructive guidance on how to improve. A combination of feedback and feed forward ensures that assessment has an effective developmental impact on learning (provided the student has the opportunity and support to develop their own evaluative skills in order to use the feedback effectively) https://www.jisc.ac.uk/guides/feedback-and-feed-forward

Quality of Feedback in online tests Halabi (2006): Applied cognitive load theories in Accounting Education: Rich feedback (elaboration: cues to guide the learner toward the correct answer, in addition to basic feedback, i.e. verification: whether correct or incorrect) was significantly more useful for students with no prior knowledge. Providing rich feedback minimises extraneous cognitive load and aids easier construction by student of Schema (organizing and integrating new information) Halabi, A. K. (2006). "Applying an instructional learning efficiency model to determine the most efficient feedback for teaching introductory accounting." Global Perspective on Accounting Education 3(93): 113.

Quality of Feedback in online tests
Attali (2015): Maths: varied feedback to investigate learning transfer:
- Solving open-ended problems resulted in larger transfer than multiple-choice.
- Where the answer was correct, providing no feedback showed a similar transfer effect to correct-response feedback.
- Multiple-try feedback showed a larger transfer effect than single-try feedback.
- Hint feedback showed the largest transfer effect.
Attali, Y. (2015). "Effects of multiple-try feedback and question type during mathematics problem solving on performance in similar problems." Computers & Education 86: 260-267.

Quality of Feedback in online tests
Attali and van der Kleij (2017): Maths: feedback elaboration and timing in practice tests (pairs of isomorphic items in tests):
- For incorrect (first) responses:
  - Elaborated feedback: more effective
  - Immediate feedback: more effective than delayed
- For correct responses:
  - Delayed feedback was more effective than immediate
Attali, Y. and F. van der Kleij (2017). "Effects of feedback elaboration and feedback timing during computer-based practice in mathematics problem solving." Computers & Education 110: 154-169.

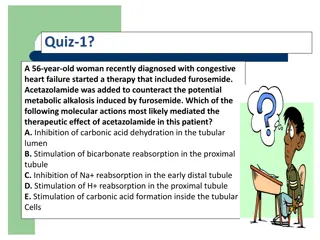

Blackboard basics: Test Question Types
- Calculated Formula (Maths) / Calculated Numeric
- Fill in the Blank / Multiple Blanks; Jumbled Sentence
- Essay / Short Answer / File Response
- Multiple Choice / Multiple Answer
- Matching; Ordering
- Either/Or; True/False
- Opinion / Likert
- Hot Spot (anatomy, geography, languages)
- Quiz Bowl

MCQ and online Quiz design: advice from Literature
STEM:
- Present a single, definite statement to be completed or answered by a set of homogeneous choices
- Avoid unnecessary / irrelevant material
- Use clear, straightforward language
- If negatives must be used: highlight them
- Put as much of the question as possible in the stem: don't duplicate it in each option (Gronlund 1988)
- Avoid clues to the correct answer, e.g. "called a/an:" is better than "called an:"
- Avoid ALWAYS and NEVER: test-wise students rule out universal statements
KEY:
- For single response MCQs, ensure that there is only one correct response!
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml

MCQ and online Quiz design: advice from Literature
DISTRACTERS:
- Use only plausible and attractive alternatives as distracters
- Distracters based on common student errors/misconceptions: very effective
- Correct statements that don't answer the question: often strong distracters
- Avoid distracters that are very close to the correct answer: this confuses students. "Distracters should differ substantially, not just in some minor nuance of phrasing or emphasis." (Isaacs 1994)
- Provide enough alternatives: a 1987 study suggests three choices are sufficient (Brown 1997), provided they are of equal difficulty
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml

MCQ and online Quiz design: advice from Literature
Matching Questions assess understanding of relationships.
- Test application of knowledge: a match of:
  - Examples & terms
  - Functions & parts
  - Classifications & structures
  - Applications & postulates
  - Problems & principles
- Test knowledge: require a match of:
  - Definitions & terms
  - Historical events & dates
  - Achievements & people
  - Statements & postulates
  - Descriptions & principles
- If you are writing MCQs sharing the same answer choices: group the questions into a matching item
- Provide clear directions
- Keep the information in each column as homogeneous as possible
- Allow the responses to be used more than once
- Arrange the list of responses systematically if possible (chronological, alphabetical, numerical)
- Include more responses than stems to help prevent answering by process of elimination
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml

MCQ and online Quiz design: advice from Literature
True-false questions:
- Efficient in testing material in a short time; combine within MCQ for complex Assertion-Reason questions
- 1 in 2 chance of guessing the correct answer to a question: risk of guessing
- Challenge: to write an unambiguously true or false statement for complex material
- Less good at testing students of different abilities
Suggestions for writing true-false questions:
- Include only one main idea in each item and avoid negatives
- Combine with other material (graphs, maps, writing) to test advanced learning outcomes
- Use statements which are unequivocally true or false
- Avoid lifting statements directly from course materials, so that a correct answer cannot come from recall alone
- Avoid absolutes and qualifiers:
  - "none", "never", "always", "all", "impossible" tend to be false
  - "usually", "generally", "sometimes", "often" tend to be true
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml
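The guessing risk can be put in numbers. The following is a minimal Python sketch, assuming blind guessing with an equal chance per option; the 10-question quiz and the pass mark of 6 are hypothetical figures chosen for illustration, not values from the slides.

```python
from math import comb


def prob_at_least(n_questions: int, pass_mark: int, n_options: int = 2) -> float:
    """Probability of getting at least `pass_mark` answers right by blind guessing.

    Each question is assumed to have `n_options` equally likely choices,
    so the number of correct guesses follows a binomial distribution.
    """
    p = 1 / n_options
    return sum(
        comb(n_questions, k) * p**k * (1 - p) ** (n_questions - k)
        for k in range(pass_mark, n_questions + 1)
    )


if __name__ == "__main__":
    # Compare true/false (2 options) with 3- and 4-option MCQs.
    for options in (2, 3, 4):
        chance = prob_at_least(n_questions=10, pass_mark=6, n_options=options)
        print(f"{options} options: {chance:.3f}")
```

Under these assumptions the chance of reaching the pass mark by guessing falls as options are added, which is one reason to mix true-false items with other question types.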

MCQ and online Quiz design: advice from Literature
Assertion-Reason Questions combine elements of multiple choice and true/false question types: they test more complicated issues and require a higher level of learning.
The question has two statements, an assertion and a reason:
- Are both statements true?
- If yes: does the reason correctly explain the assertion?
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml

MCQ and online Quiz design: advice from Literature
Text/Numerical Response/Match Questions:
- Student supplies the answer or completes a blank within text (words or numbers)
- Supplying the correct answer rather than identifying or choosing it: lower risk of guessing
- Difficult to phrase in such a way that only a single correct answer is possible
- Spelling errors may disadvantage students who know the right answer
- Blackboard allows numerous permutations of the correct answer for which the student will be awarded full marks, e.g. "United States", "US", "USA" and "United States of America"
http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml
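The idea of accepting several permutations of a correct text answer can be illustrated in a few lines of Python. This is only a sketch of the principle, not Blackboard's own matching logic: the `normalise` and `is_correct` helpers and the normalisation rules (ignore case, punctuation, and extra spaces) are assumptions made for the example.

```python
import re


def normalise(answer: str) -> str:
    """Lower-case the answer, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^\w\s]", "", answer.lower())
    return " ".join(cleaned.split())


# Accepted permutations, taken from the example on the slide.
ACCEPTED = {
    normalise(a)
    for a in ("United States", "US", "USA", "United States of America")
}


def is_correct(response: str) -> bool:
    """Full marks if the normalised response matches any accepted permutation."""
    return normalise(response) in ACCEPTED


if __name__ == "__main__":
    for attempt in ("U.S.A.", "united  states", "Untied States"):
        print(attempt, "->", is_correct(attempt))
```

Note that the misspelt "Untied States" is still rejected, so a list of permutations reduces but does not remove the spelling risk described above.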

MCQ and online Quiz design: advice from Literature Other Question Types Sore finger questions (language teaching and computer programming): a word, code or phrase is out of keeping with the rest of a passage. It could be presented as a 'hot spot' or text input type of question. Ranking questions require the student to relate items in a column to one another and can be used to test the knowledge of sequences, order of events, level of gradation. Sequencing questions require the student to position text or graphic objects in a given sequence. These are particularly good for testing methodology. Field simulation questions offer simulations of real problems or exercises. http://www.caacentre.ac.uk/resources/objective_tests/anatomy.shtml

Case Study: BMAN10621A/B/M
- Write tests: based on workshop areas, set a week before
- Sets of questions (similar area/difficulty)
- Transfer to Blackboard: Question Pools
- Create a Test with Question Sets
- Test the tests (using the teaching team to trial questions)
- Set up Groups for students with additional time
- Set Test Options and Adaptive Release
- Involve students in the process, e.g. student reps (time limits etc.)
- Item analysis on each test: question statistics to identify weaker questions
Please refer to the Handout for detail on each step of the process.
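The effect of question pools and question sets can be sketched in Python: each student receives a random draw from pools of similar questions, so neighbouring students are unlikely to see the same paper. This is an illustration of the principle only, not how Blackboard implements it; the pool names, question IDs, and draw sizes below are all hypothetical.

```python
import random

# Hypothetical pools: each holds questions of similar area and difficulty.
POOLS = {
    "double_entry": ["DE1", "DE2", "DE3", "DE4", "DE5"],
    "depreciation": ["DP1", "DP2", "DP3", "DP4"],
    "ratios": ["RA1", "RA2", "RA3", "RA4", "RA5", "RA6"],
}

# Hypothetical question sets: how many questions to draw from each pool.
DRAW = {"double_entry": 2, "depreciation": 1, "ratios": 2}


def build_test(student_id: str, test_name: str) -> list[str]:
    """Draw one student's paper; the same student and test give the same paper."""
    rng = random.Random(f"{test_name}:{student_id}")
    paper = []
    for pool, count in DRAW.items():
        paper.extend(rng.sample(POOLS[pool], count))
    rng.shuffle(paper)  # randomise question order as well as selection
    return paper


if __name__ == "__main__":
    for student in ("student-001", "student-002"):
        print(student, build_test(student, "Test 1"))
```

Seeding the generator from the student and test name is a design choice for the sketch: it makes each paper reproducible if a query arises later.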

Points for discussion/consideration
- Sharing Test Banks: share the burden!
- Purchasing Test Banks (and amending as needed)
- Uploading from Excel or Respondus
- Consider an essay for some element (but: marking time...)
- Setting up student groups: advice available from your school's eLearning support
- Chasing up low scorers and non-submissions: email via BBD

Blackboard Item Analysis
The question statistics table on the Item Analysis page gives item analysis statistics for each question in the test. Questions that are recommended for your review are indicated with red circles so you can quickly scan for questions that might need revision.
Discrimination: how well a question differentiates students' knowledge. With a good discriminating question, students who answer it correctly also do well on the test overall.
- Good: greater than 0.3
- Fair: between 0.1 and 0.3
- Poor: less than 0.1 (for review)
- Cannot Calculate: difficulty is 100% or all students received the same score
Difficulty: percentage of students who answered the question correctly.
- Easy: > 80% correct (for review)
- Medium: 30% - 80%
- Hard: < 30% correct (for review)
https://help.blackboard.com/Learn/Instructor/Tests_Pools_Surveys/Item_Analysis
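The bands above can be expressed as a small Python sketch, which may be handy when reviewing exported question statistics outside Blackboard. The function names are hypothetical, and how values exactly on a boundary (0.1, 0.3, 30%, 80%) are treated is an assumption here: the slide only gives "greater than", "between", and "less than".

```python
from typing import Optional


def classify_difficulty(percent_correct: float) -> str:
    """Difficulty band from the percentage of students answering correctly."""
    if percent_correct > 80:
        return "Easy"
    if percent_correct < 30:
        return "Hard"
    return "Medium"


def classify_discrimination(value: Optional[float]) -> str:
    """Discrimination band; pass None when no value could be calculated."""
    if value is None:
        return "Cannot Calculate"
    if value > 0.3:
        return "Good"
    if value < 0.1:
        return "Poor"
    return "Fair"


def flagged_for_review(percent_correct: float, discrimination: Optional[float]) -> bool:
    """Easy, Hard, and Poor questions are the ones marked for review."""
    return (
        classify_difficulty(percent_correct) in {"Easy", "Hard"}
        or classify_discrimination(discrimination) == "Poor"
    )


if __name__ == "__main__":
    # Hypothetical question statistics: (label, % correct, discrimination).
    for label, pct, disc in [("Q1", 92.0, 0.12), ("Q2", 55.0, 0.41), ("Q3", 48.0, 0.05)]:
        print(label, classify_difficulty(pct), classify_discrimination(disc),
              "review" if flagged_for_review(pct, disc) else "ok")
```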

BBD Item Analysis Example: Test 3
BMAN10621B has a higher number of easy questions (this cohort tends to score higher as it includes UG2s). Discrimination is mainly good, which means most students who score well on a question also score well in the test overall.
For 10621A and 10621M: mainly medium-scoring questions, which is what BBD is looking for, with 8-9 easy questions.

Feedback from BMAN10621B students: 2017 and 2018
Q1. The on-line tests pushed me to study more regularly as I progressed.

| Response | 2018 | 2017 |
| --- | --- | --- |
| Strongly Agree | 26 (45%) | 24 (45%) |
| Agree | 20 (34%) | 19 (36%) |
| Somewhat Agree | 4 (7%) | 5 (9%) |
| Neutral | 2 (3%) | 0 (0%) |
| Somewhat Disagree | 0 (0%) | 1 (2%) |
| Disagree | 3 (5%) | 0 (0%) |
| Strongly Disagree | 3 (5%) | 4 (8%) |
| Total | 58 (100%) | 53 (100%) |
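The percentages for Q1 follow directly from the response counts on the slide. The short Python sketch below reproduces them; it assumes the dashes in the slide's table stand for zero responses, and the combined "agreeing" share (the first three categories) is an added summary rather than a figure from the slides.

```python
LABELS = [
    "Strongly Agree", "Agree", "Somewhat Agree", "Neutral",
    "Somewhat Disagree", "Disagree", "Strongly Disagree",
]

# Q1 response counts from the slide, in LABELS order (dashes read as 0).
Q1_COUNTS = {
    2018: [26, 20, 4, 2, 0, 3, 3],
    2017: [24, 19, 5, 0, 1, 0, 4],
}


def percentages(counts: list[int]) -> list[int]:
    """Each count as a whole-number percentage of all responses."""
    total = sum(counts)
    return [round(100 * c / total) for c in counts]


def share_agreeing(counts: list[int]) -> float:
    """Percentage choosing Strongly Agree, Agree, or Somewhat Agree."""
    return 100 * sum(counts[:3]) / sum(counts)


if __name__ == "__main__":
    for year, counts in Q1_COUNTS.items():
        print(year, dict(zip(LABELS, percentages(counts))),
              f"agreeing: {share_agreeing(counts):.0f}%")
```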

Feedback from BMAN10621B students: 2017 and 2018
Q2. The regular tests helped me to gain a better understanding of course materials.

| Response | 2018 | 2017 |
| --- | --- | --- |
| Strongly Agree | 17 (29%) | 16 (27%) |
| Agree | 19 (32%) | 22 (37%) |
| Somewhat Agree | 7 (12%) | 11 (19%) |
| Neutral | 8 (14%) | 3 (5%) |
| Somewhat Disagree | 0 (0%) | 2 (3%) |
| Disagree | 2 (3%) | 0 (0%) |
| Strongly Disagree | 6 (10%) | 5 (8%) |
| Total | 59 (100%) | 59 (100%) |

Feedback from BMAN10621B students: 2017 and 2018
Q3. I think it is a good idea to have regular tests and I would like to see this on other courses.

| Response | 2018 | 2017 |
| --- | --- | --- |
| Strongly Agree | 18 (32%) | 15 (26%) |
| Agree | 13 (23%) | 9 (16%) |
| Somewhat Agree | 11 (19%) | 9 (16%) |
| Neutral | 3 (5%) | 10 (18%) |
| Somewhat Disagree | 1 (2%) | 3 (5%) |
| Disagree | 1 (2%) | 2 (4%) |
| Strongly Disagree | 10 (18%) | 9 (16%) |
| Total | 57 (100%) | 57 (100%) |

Feedback from BMAN10621B students: 2017 and 2018
Q4. I prefer one mid-term test rather than several tests through a semester.

| Response | 2018 | 2017 |
| --- | --- | --- |
| Strongly Agree | 3 (5%) | 1 (2%) |
| Agree | 2 (3%) | 2 (3%) |
| Somewhat Agree | 2 (3%) | 2 (3%) |
| Neutral | 3 (5%) | 5 (9%) |
| Somewhat Disagree | 8 (13%) | 4 (7%) |
| Disagree | 16 (27%) | 13 (22%) |
| Strongly Disagree | 26 (43%) | 31 (53%) |
| Total | 60 (100%) | 58 (100%) |

Feedback from BMAN10621B students: 2017 and 2018
Comments via Turning Point Message:
- I would like feedback after online tests [2018]
- Could there be more questions for every quiz? [2017]
- Could you please give us more time for each quiz? [2017]
- Please provide more exercises before each quiz [2017]
Comments via Course Evaluations:
- Having small tests throughout the course helps (forces) you to keep up with revision and reading so you don't fall behind and takes the stress slightly off having one large exam at the end of the course. There is loads of support and extra work on blackboard for you to do if the lecture isn't enough. [2017]
- I liked the fact that the assessments were split into 5 smaller tests as it reduced my workload during reading week and meant I could revise in smaller chunks for each test. [2017]

Feedback from BMAN10621B students: 2017 and 2018
Comments via Course Evaluations:
- Online test is a good choice for examining students. [2017]
- Weekly online tests are a fantastic idea to encourage constant revision of material. I feel I understand the course a lot more thanks to these. [2017]
- I found the online test very helpful as they kept me up to date with the course. [2017]
- Also, there are practice quizzes online and I like the weekly online assignments, which promotes me review the knowledge in a regular time and I won't fall behind. [2018]
- I most valued the amount of additional materials that the lecturers provided in order for us to get a better understanding of the unit (workshops, preview videos, homework questions, practice quizzes, additional exercises, self tests, online assignments). Because of all these, I found the course extremely well organised, in such a way that the students were continuously helped in their learning process. [2018]
- Online tests were a nice addition, keeping me motivated to read the course material in time, rather just a few weeks before the main exam. [2018]

Closing the feedback Loop The third element of Learning-Oriented Assessment: student action on feedback: Email and chase non-participants Discussion Board Retest: exam (large question) Retest: second attempt?

Some evidence from literature Huw Morgan [huw.morgan@manchester.ac.uk]

A brief review of literature on Summative Quizzes and Continuous Assessment Marriott and Lau (2008): Accounting Education Qualitative study on a series of online summative assessments introduced into a first-year financial accounting course. Student feedback (an evaluative survey and focus group interviews) suggested that students perceived a beneficial impact on learning, motivation, and engagement. Marriott, P. and A. Lau (2008). "The use of on-line summative assessment in an undergraduate financial accounting course." Journal of Accounting Education 26(2): 73-90.

A brief review of literature on Summative Quizzes and Continuous Assessment Nnadi and Rosser (2014): Accounting Education Developed individualised questions to reduce risk of collusion and enable coursework assessments to contribute to final grades. Incentivised students to do them in their own time, drawing on teaching materials and helping them to develop competency. Specific individualised feedback provided, and found to encourage students to work independently. Nnadi, M. and M. Rosser (2014). "The Individualised Accounting Questions Technique: Using Excel to Generate Quantitative Exercises for Large Classes with Unique Individual Answers." Accounting Education 23(3): 193-202.
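The individualised-question idea can be sketched briefly. Nnadi and Rosser describe generating the questions in Excel; the Python below is a swapped-in illustration of the same principle, not their method. The question topic (straight-line depreciation), the parameter ranges, and all names are hypothetical choices for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class DepreciationQuestion:
    cost: int        # purchase cost in pounds
    residual: int    # estimated residual value in pounds
    life_years: int  # useful life in years

    @property
    def text(self) -> str:
        return (
            f"A machine costs £{self.cost:,} and has a residual value of "
            f"£{self.residual:,} after {self.life_years} years. "
            "Calculate the annual straight-line depreciation charge."
        )

    @property
    def answer(self) -> float:
        # Straight-line depreciation: (cost - residual value) / useful life.
        return (self.cost - self.residual) / self.life_years


def question_for(student_id: str) -> DepreciationQuestion:
    """Derive a student's figures from their ID, so they are unique but repeatable."""
    rng = random.Random(student_id)
    return DepreciationQuestion(
        cost=rng.randrange(20_000, 60_001, 1_000),
        residual=rng.randrange(1_000, 5_001, 500),
        life_years=rng.choice([4, 5, 8, 10]),
    )


def mark(student_id: str, response: float, tolerance: float = 0.5) -> bool:
    """Check a response against that student's own answer, allowing for rounding."""
    return abs(response - question_for(student_id).answer) <= tolerance


if __name__ == "__main__":
    q = question_for("1234567")
    print(q.text)
    print("Correct answer accepted:", mark("1234567", q.answer))
```

Because each student's figures differ, copying a neighbour's numerical answer is unlikely to earn the mark, while the marking rule stays the same for everyone.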

A brief review of literature on Summative Quizzes and Continuous Assessment Einig (2013): Accounting Education MCQs perceived as useful (questionnaire) and used in different ways (accommodating different learning styles?). Statistically significant correlation between regular MCQ usage and higher final exam performance (controlling for past subject experience, gender, nationality), although limitation of self-selection. Quotes Kibble (2007): significant performance improvement for students using optional MCQs, but effect disappeared when significant incentives were offered and nearly all students completed them. Einig, S. (2013). "Supporting Students' Learning: The Use of Formative Online Assessments." Accounting Education 22(5): 425-444. Kibble, J. (2007). "Use of unsupervised online quizzes as formative assessment in a medical physiology course: effects of incentives on student participation and performance." Advances in physiology education 31(3): 253-260.

Brief review of literature on Summative Quizzes and Continuous Assessment: Other disciplines
Where MCQs are used as compulsory assessment, research suggests improved performance in the final examination for the whole group when compared to:
- Previous year's grade results:
  - Psychology: Buchanan (2000)
  - Geography: Charman (1998)
- A parallel control group:
  - Maths: McSweeney and Weiss (2003)
  - Natural sciences: Klecker (2007)
No self-selection bias, but comparing different student groups introduces alternative biases (e.g. cohort ability, changes in delivery or staff).
Quoted in Einig, S. (2013). "Supporting Students' Learning: The Use of Formative Online Assessments." Accounting Education 22(5): 425-444.

Brief review of literature on Summative Quizzes and Continuous Assessment: Cumulative Testing
Domenech, Blazquez et al. (2015): Microeconomics
Frequent, cumulative testing provides opportunities for students to receive regular feedback and to increase their motivation. It also gives the instructor valuable information on how the course is progressing, so that any problems encountered can be resolved before it is too late.
Students who were assessed by a frequent, cumulative testing approach largely outperformed those assessed with a single final exam.
Domenech, J., et al. (2015). "Exploring the impact of cumulative testing on academic performance of undergraduate students in Spain." Educational Assessment, Evaluation and Accountability 27(2): 153-169.

Brief review of literature on Summative Quizzes and Continuous Assessment: Single v Dual Attempts
Rhodes and Sarbaum (2013)
Considered student behaviour under single and dual attempt online homework settings.
Multiple attempts lead to gaming behaviour (and grade inflation), potentially demotivating students in the final exam.
However: students got information on their online score and which questions they specifically missed, then re-took the same assignment in full, with each question appearing one at a time in exactly the same order.
Randomisation of pooled questions would mitigate the risk of gaming.
Marriott, P. and A. Lau (2008). "The use of on-line summative assessment in an undergraduate financial accounting course." Journal of Accounting Education 26(2): 73-90.
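How far randomised pools limit gaming on a second attempt can be estimated with a short Python sketch. It assumes each attempt is an independent random draw of the same number of questions from one pool; the pool size of 20 and draw of 5 are hypothetical. Under that assumption, each question from the first attempt reappears with probability drawn/pool_size, so the expected number of repeated questions is drawn² / pool_size.

```python
import random


def expected_overlap(pool_size: int, drawn: int) -> float:
    """Expected number of questions repeated between two independent draws."""
    return drawn * drawn / pool_size


def simulated_overlap(pool_size: int, drawn: int,
                      trials: int = 20_000, seed: int = 0) -> float:
    """Estimate the same quantity by simulating pairs of attempts."""
    rng = random.Random(seed)
    pool = range(pool_size)
    repeated = 0
    for _ in range(trials):
        first = set(rng.sample(pool, drawn))
        second = rng.sample(pool, drawn)
        repeated += sum(q in first for q in second)
    return repeated / trials


if __name__ == "__main__":
    print("Expected repeats:", expected_overlap(20, 5))
    print("Simulated repeats:", round(simulated_overlap(20, 5), 2))
```

With no pool at all (every question drawn every time), all questions repeat, which matches the setting Rhodes and Sarbaum studied; a larger pool relative to the draw reduces the repeats.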