Efficient Rule Management for Data Centers

This research discusses scalable rule management for data centers. Data centers use rules, each an action applied to ranges of flow fields, to implement management policies such as access control, rate limiting, traffic measurement, and traffic engineering. The work reviews current practice, highlights the limited resources of the machines that store rules, and anticipates that future data centers will need to handle a vast number of fine-grained rules efficiently.

Presentation Transcript

Scalable Rule Management for Data Centers Masoud Moshref, Minlan Yu, Abhishek Sharma, Ramesh Govindan 4/3/2013

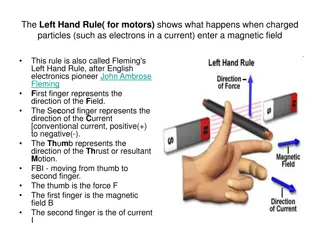

Introduction: Definitions. Datacenters use rules to implement management policies: access control, rate limiting, traffic measurement, and traffic engineering. A rule is an action on a set of ranges on flow fields. Flow field examples: Src IP / Dst IP, protocol, Src port / Dst port. Action examples: deny, accept, enqueue.

Introduction: Definitions. A rule is an action on a set of ranges on flow fields. Example: R1: Accept, SrcIP 12.0.0.0/7, DstIP 10.0.0.0/8; R2: Deny, SrcIP 12.0.0.0/8, DstIP 8.0.0.0/6. [Figure: R1 and R2 drawn as overlapping rectangles in the Src IP × Dst IP plane.]
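The two example rules can be modeled in a minimal Python sketch. It assumes each field is an IPv4 prefix and that list order encodes priority (first match wins); the slides do not say how overlaps are resolved, and `Rule`, `make_rule`, `overlaps`, and `classify` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An action applied to a set of ranges (here IPv4 prefixes) on two flow fields."""
    name: str
    action: str
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network

    def matches(self, src_ip: ipaddress.IPv4Address, dst_ip: ipaddress.IPv4Address) -> bool:
        return src_ip in self.src and dst_ip in self.dst


def make_rule(name: str, action: str, src: str, dst: str) -> Rule:
    return Rule(name, action, ipaddress.IPv4Network(src), ipaddress.IPv4Network(dst))


def overlaps(a: Rule, b: Rule) -> bool:
    """Two rules overlap if their ranges intersect on every field."""
    return a.src.overlaps(b.src) and a.dst.overlaps(b.dst)


def classify(rules: list, src: str, dst: str):
    """Return (name, action) of the first matching rule; list order = priority (assumption)."""
    s, d = ipaddress.IPv4Address(src), ipaddress.IPv4Address(dst)
    for rule in rules:
        if rule.matches(s, d):
            return rule.name, rule.action
    return None, None  # no rule matched


R1 = make_rule("R1", "Accept", "12.0.0.0/7", "10.0.0.0/8")
R2 = make_rule("R2", "Deny", "12.0.0.0/8", "8.0.0.0/6")

print(overlaps(R1, R2))                            # True
print(classify([R1, R2], "12.1.2.3", "10.0.0.1"))  # ('R1', 'Accept')
print(classify([R2, R1], "12.1.2.3", "10.0.0.1"))  # ('R2', 'Deny')
```

A packet from 12.1.2.3 to 10.0.0.1 falls inside both rules, so its verdict depends on which rule wins; any scheme that moves rules between devices has to preserve that outcome.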

Current practice: Rules are saved on predefined, fixed machines, either on hypervisors or on switches. [Figure: the same rules (R0, R3, R4) stored on every hypervisor in one case and on the ToR and aggregation switches in the other.]

Machines have limited resources: Top-of-Rack switches, Network Interface Cards, and software switches on servers.

Future datacenters will have many fine-grained rules: a VLAN per server for traffic management (NetLord, SPAIN) needs 1M rules; per-flow decisions for flow measurement in traffic engineering (MicroTE, Hedera) need 10M to 100M rules; regulating VM-pair communication for access control (CloudPolice) and bandwidth allocation (Seawall) needs 1B to 20B rules.

Rule location trade-off (resource vs. bandwidth usage): Storing rule R0 at the hypervisor incurs CPU overhead. Alternatively, move the rule to the ToR switch and forward the traffic there, which costs bandwidth instead.

Rule location trade-off: Rules (here R1) can also be offloaded to other servers.
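The bandwidth side of this trade-off can be sketched with a toy cost model. The assumption here, not taken from the slides, is that the overhead of placing a flow's rule on some device is the number of extra links the flow traverses to visit that device, times its volume. The topology is a hypothetical version of the slides' example (three ToRs with two servers each, every ToR linked to both aggregation switches).

```python
from collections import deque

# Hypothetical topology: servers S1..S6 under ToR1..ToR3, each ToR linked to Agg1 and Agg2.
EDGES = [
    ("S1", "ToR1"), ("S2", "ToR1"),
    ("S3", "ToR2"), ("S4", "ToR2"),
    ("S5", "ToR3"), ("S6", "ToR3"),
] + [(tor, agg) for tor in ("ToR1", "ToR2", "ToR3") for agg in ("Agg1", "Agg2")]


def build_graph(edges):
    """Undirected adjacency sets."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def hops(graph, src, dst):
    """Shortest-path hop count via breadth-first search."""
    seen, frontier = {src: 0}, deque([src])
    while frontier:
        node = frontier.popleft()
        if node == dst:
            return seen[node]
        for nxt in graph[node]:
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                frontier.append(nxt)
    raise ValueError(f"{dst} unreachable from {src}")


def detour_overhead(graph, src, dst, device, volume):
    """Extra links traversed (times volume) when the flow must visit `device`."""
    direct = hops(graph, src, dst)
    via = hops(graph, src, device) + hops(graph, device, dst)
    return volume * (via - direct)


G = build_graph(EDGES)
print(detour_overhead(G, "S1", "S2", "S1", 10))    # 0: rule at the source hypervisor
print(detour_overhead(G, "S1", "S2", "ToR1", 10))  # 0: ToR1 is already on the path
print(detour_overhead(G, "S1", "S2", "S3", 10))    # 60: offloaded to a server in another rack
```

Placing the rule at the source or on a switch already on the path costs no extra bandwidth, while offloading it to a distant server does; the resource side (CPU or table space) would be modeled separately.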

Challenges: A concrete example. [Figure: a tree topology (Agg1, Agg2; ToR1 to ToR3; servers S1 to S6 hosting VM0 to VM7) and the corresponding rule space, a Src IP × Dst IP grid (0 to 7 on each axis) covered by rules R0 to R6.]

Challenges: Overlapping rules. Source placement: saving rules on the source machine means minimum traffic overhead. [Figure: the example topology and rule space, with the rules for VM2 and VM6 stored at their source servers.]

Challenges: Overlapping rules. What if source placement is not feasible? [Figure: the same example topology and rule space for VM2 and VM6, with rules R0 to R3 placed away from the source servers.]

Challenges: Preserve the semantics of overlapping rules; respect resource constraints on heterogeneous devices; minimize traffic overhead; handle dynamics (traffic changes, rule changes, VM migration).

Contribution: vCRIB, a Virtual Cloud Rule Information Base. vCRIB is a proactive rule placement abstraction layer that optimizes traffic given resource constraints and changes. [Figure: vCRIB takes the rules and the network state as input and places rules R1 to R4 across the devices (Agg1, Agg2, ToR1 to ToR3).]

vCRIB design: To handle overlapping rules, vCRIB first applies source-based partitioning with replication to the rules, producing partitions. These partitions, together with the topology and routing, are then used to compute a minimum-traffic feasible placement.

Partitioning with replication: Smaller partitions have more flexibility, but cutting causes rule inflation, because a rule that crosses a cut must be replicated in every partition it overlaps. [Figure: a rule space with rules R0 to R8 cut into partitions P1, P2, P3; rules crossing the cuts, such as R0, R1, and R3, appear in more than one partition.]
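The flexibility/inflation tension can be seen in a small sketch. It assumes rules are inclusive rectangles on an 8×8 integer grid (like the slides' figures) and cuts only along the source axis; the rule coordinates and the names `Rect`, `partition_by_source`, and `inflation` are hypothetical.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """A rule as an inclusive rectangle on (src, dst) integer ranges."""
    name: str
    src_lo: int
    src_hi: int
    dst_lo: int
    dst_hi: int


def partition_by_source(rules, cuts):
    """Copy every rule into each source slice it overlaps (replication).

    cuts: list of inclusive (lo, hi) source ranges covering the space.
    Returns one list of rule names per slice.
    """
    return [[r.name for r in rules if r.src_lo <= hi and r.src_hi >= lo]
            for lo, hi in cuts]


def inflation(rules, partitions):
    """Rules stored across all partitions minus the number of original rules."""
    return sum(len(p) for p in partitions) - len(rules)


# Hypothetical rule space; coordinates are invented for illustration.
RULES = [
    Rect("R0", 0, 7, 0, 1),  # spans the whole source axis
    Rect("R1", 2, 5, 4, 5),
    Rect("R2", 0, 1, 2, 3),
    Rect("R3", 6, 7, 6, 7),
]
coarse = partition_by_source(RULES, [(0, 3), (4, 7)])
fine = partition_by_source(RULES, [(0, 1), (2, 3), (4, 5), (6, 7)])
print(coarse, inflation(RULES, coarse))  # [['R0', 'R1', 'R2'], ['R0', 'R1', 'R3']] 2
print(fine, inflation(RULES, fine))      # [['R0', 'R2'], ['R0', 'R1'], ['R0', 'R1'], ['R0', 'R3']] 4
```

The finer cut yields partitions of at most 2 rules (easier to fit anywhere) but stores 4 extra rule copies instead of 2.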

Partitioning with replication: Introduce the concept of similarity to mitigate inflation: $\mathrm{similarity}(P_2, P_3) = |P_2 \cap P_3| = |\{R_0, R_1, R_3\}| = 3$. Partitions P1, P2, and P3 hold 5 rules each; because P2 and P3 share three rules, placing them on the same device requires only 5 + 5 - 3 = 7 rules instead of 10.
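Similarity and the resulting device load can be written directly as set operations. In this sketch, P2 and P3 share R0, R1, and R3 as on the slide; their remaining members are hypothetical, chosen only so that each partition has 5 rules.

```python
def similarity(p, q):
    """Number of rules two partitions share."""
    return len(set(p) & set(q))


def rules_on_device(partitions):
    """Distinct rules a device must store when these partitions share it."""
    stored = set()
    for p in partitions:
        stored |= set(p)
    return len(stored)


# Shared rules R0, R1, R3 come from the slide; the other members are hypothetical.
P2 = {"R0", "R1", "R3", "R5", "R6"}
P3 = {"R0", "R1", "R3", "R2", "R8"}
print(similarity(P2, P3))         # 3
print(rules_on_device([P2, P3]))  # 7, i.e. 5 + 5 - 3
```

Co-locating similar partitions lets replicated rules be stored once per device, which is how similarity offsets the inflation caused by cutting.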

Per-source partitions: Limited resources for forwarding; no need for replication to approximate source placement; closer partitions are more similar. [Figure: the example rule space (Src IP × Dst IP, 0 to 7) with rules R0 to R6.]

vCRIB design: Placement. Given the partitions and the topology and routing, vCRIB places partitions on devices so that resource constraints are respected and traffic overhead is kept low, yielding a minimum-traffic feasible placement.

vCRIB design: Placement is split into two steps. Resource-aware placement finds a feasible placement under the resource constraints; traffic-aware refinement then reduces the traffic overhead of that placement to reach a minimum-traffic feasible placement.

FFDS (First Fit Decreasing Similarity): 1. Put a random partition on an empty device. 2. Add the most similar partitions to the initial partition until the device is full. We find a lower bound on the optimal solution (in number of rules) and prove that the algorithm is a 2-approximation of this lower bound.
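The two FFDS steps can be sketched as follows. This is an illustrative reading, not the authors' implementation: it assumes identical devices whose capacity is a count of distinct rules, measures similarity against the device's initial partition (per the slide wording), treats "full" as "no remaining partition fits", and breaks ties by name. It does not reproduce the approximation proof.

```python
import random


def ffds(partitions, capacity, seed=0):
    """Sketch of First Fit Decreasing Similarity (FFDS).

    partitions: dict mapping partition name -> frozenset of rule names
    capacity: distinct rules one device can hold (assumption: identical devices)
    Returns a list of devices, each a list of partition names.
    """
    rng = random.Random(seed)
    unplaced = set(partitions)
    devices = []
    while unplaced:
        # Step 1: put a random unplaced partition on an empty device.
        first = rng.choice(sorted(unplaced))
        if len(partitions[first]) > capacity:
            raise ValueError(f"{first} is larger than a device")
        unplaced.remove(first)
        placed, stored = [first], set(partitions[first])
        # Step 2: keep adding the partition most similar to the initial one,
        # as long as the device's distinct-rule count stays within capacity.
        while True:
            fitting = sorted(p for p in unplaced if len(stored | partitions[p]) <= capacity)
            if not fitting:
                break  # device is full
            best = max(fitting, key=lambda p: len(partitions[first] & partitions[p]))
            unplaced.remove(best)
            placed.append(best)
            stored |= partitions[best]
        devices.append(placed)
    return devices


parts = {
    "P1": frozenset({"R0", "R1", "R2"}),
    "P2": frozenset({"R0", "R1", "R3"}),
    "P3": frozenset({"R7", "R8", "R9"}),
}
print(ffds(parts, capacity=4))
```

With a capacity of 4 rules, P1 and P2 (which share two rules) end up on one device and P3 on another, whichever partition is drawn first.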

vCRIB design: Heterogeneous resources. Resource heterogeneity across devices is captured by a resource usage function, which resource-aware placement uses to find a feasible placement.
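One way to picture a resource usage function is as a per-device mapping from the number of rules to resources consumed, checked against that device's capacity. Both functions below are hypothetical (a switch spending one table entry per rule, a hypervisor whose CPU cost is a fixed base plus a per-rule term); the slides do not give vCRIB's actual functions or units.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Device:
    name: str
    capacity: float                # in the device's own resource unit
    usage: Callable[[int], float]  # resource usage function: #rules -> resource consumed

    def fits(self, n_rules: int) -> bool:
        return self.usage(n_rules) <= self.capacity


# Hypothetical functions: table entries on a switch, CPU percent on a hypervisor.
tor = Device("ToR1", capacity=4000, usage=lambda n: n)
server = Device("S1", capacity=100.0, usage=lambda n: 10.0 + 0.02 * n)

print(tor.fits(3500), server.fits(3500))  # True True
print(tor.fits(4200), server.fits(4200))  # False True  (server: 10 + 84 = 94 <= 100)
print(tor.fits(5000), server.fits(5000))  # False False (server: 10 + 100 = 110 > 100)
```

Because placement only calls `fits`, devices with entirely different resource models can be mixed in the same feasibility check.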

vCRIB design: Traffic-aware refinement. Starting from the feasible placement found by resource-aware placement (using the resource usage function), traffic-aware refinement reduces traffic overhead to reach a minimum-traffic feasible placement.

Traffic-aware refinement: Overhead-greedy approach: 1. Pick the partition with the maximum traffic overhead. 2. Move it to the location that minimizes its overhead while maintaining feasibility. [Figure: partitions P2 and P4, serving VM2 and VM4, in the example topology.]

Traffic-aware refinement: The problem with the overhead-greedy approach is that it can get stuck in local minima. Our approach: benefit greedy. [Figure: P2 and P4 placed next to VM2 and VM4.]
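The slides do not spell out benefit greedy. One plausible reading, assumed in this sketch, is to repeatedly apply the single feasible move (partition, new location) with the largest reduction in traffic overhead, stopping when no move helps. It also assumes partition loads simply add up, which ignores the rule sharing between similar partitions; the costs and names in the example are hypothetical.

```python
def benefit_greedy(placement, locations, overhead, load, capacity, max_moves=100):
    """Repeatedly apply the feasible move that most reduces traffic overhead.

    placement: dict partition -> current location (assumed feasible)
    locations: list of candidate locations
    overhead(partition, location): traffic overhead of hosting the partition there
    load: dict partition -> resource units it uses (assumption: loads simply add up)
    capacity: dict location -> resource units available
    """
    placement = dict(placement)
    used = {loc: 0 for loc in locations}
    for part, loc in placement.items():
        used[loc] += load[part]
    for _ in range(max_moves):
        best = None  # (benefit, partition, target location)
        for part, cur in placement.items():
            for loc in locations:
                if loc == cur or used[loc] + load[part] > capacity[loc]:
                    continue  # not a move, or would break feasibility
                benefit = overhead(part, cur) - overhead(part, loc)
                if benefit > 0 and (best is None or benefit > best[0]):
                    best = (benefit, part, loc)
        if best is None:
            break  # no feasible move lowers the overhead
        _, part, loc = best
        used[placement[part]] -= load[part]
        used[loc] += load[part]
        placement[part] = loc
    return placement


# Hypothetical costs: P2 serves VM2 (assumed on S2), P4 serves VM4 (assumed on S5).
COST = {("P2", "S2"): 0, ("P2", "S5"): 6, ("P2", "ToR2"): 2,
        ("P4", "S2"): 6, ("P4", "S5"): 0, ("P4", "ToR2"): 2}
final = benefit_greedy(
    placement={"P2": "S5", "P4": "S2"},
    locations=["S2", "S5", "ToR2"],
    overhead=lambda p, loc: COST[(p, loc)],
    load={"P2": 1, "P4": 1},
    capacity={"S2": 1, "S5": 1, "ToR2": 1},
)
print(final)  # {'P2': 'S2', 'P4': 'S5'}
```

Here both servers start full with the "wrong" partition; the search moves P2 to the free ToR2, which frees S5 for P4, which in turn frees S2 for P2, ending with zero overhead.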

vCRIB design: Dynamics. Rule and VM dynamics are handled by resource-aware placement, and major traffic changes are handled by traffic-aware refinement.

vCRIB design (summary): Source-based partitioning with replication turns the rules into partitions and handles overlapping rules. Resource-aware placement handles resource constraints, resource heterogeneity (through the resource usage function), and rule/VM dynamics, producing a feasible placement. Traffic-aware refinement reduces traffic overhead and handles major traffic changes, producing a minimum-traffic feasible placement.

Evaluation: comparing vCRIB vs. source placement; parameter sensitivity analysis (rules in partitions, traffic locality, VMs per server, different memory sizes); where is the traffic overhead added?; traffic-aware refinement for online scenarios; heterogeneous resource constraints; switch-only scenarios.

Simulation setup: 1k servers with 20 VMs per server in a fat-tree network; 200k rules generated by ClassBench, with random actions; IPs are assigned in two ways, Random and Range; flow sizes follow a long-tail distribution; local traffic matrix (0.5 same rack, 0.3 same pod, 0.2 inter-pod).
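The local traffic matrix can be sketched as a destination sampler that first picks a locality class with the stated probabilities (0.5 same rack, 0.3 same pod, 0.2 inter-pod) and then a uniform destination server in that class. The numbering scheme (consecutive server ids share a rack, consecutive racks share a pod), the small sizes, and the function name are assumptions; VMs and long-tail flow sizes are not modeled.

```python
import random
from collections import Counter


def make_destination_sampler(n_servers, servers_per_rack, racks_per_pod,
                             weights=(0.5, 0.3, 0.2), seed=0):
    """Return sample(src) -> (locality class, destination server).

    weights: probabilities of same rack, same pod (other rack), and another pod.
    Assumes consecutive server ids share a rack and consecutive racks share a pod.
    """
    rng = random.Random(seed)

    def rack(s):
        return s // servers_per_rack

    def pod(s):
        return rack(s) // racks_per_pod

    def sample(src):
        kind = rng.choices(["rack", "pod", "interpod"], weights=weights)[0]
        if kind == "rack":
            cands = [s for s in range(n_servers) if rack(s) == rack(src) and s != src]
        elif kind == "pod":
            cands = [s for s in range(n_servers) if pod(s) == pod(src) and rack(s) != rack(src)]
        else:
            cands = [s for s in range(n_servers) if pod(s) != pod(src)]
        return kind, rng.choice(cands)

    return sample


sample = make_destination_sampler(n_servers=64, servers_per_rack=4, racks_per_pod=4)
counts = Counter(kind for kind, _ in (sample(0) for _ in range(20000)))
print({k: round(v / 20000, 2) for k, v in counts.items()})  # roughly 0.5 / 0.3 / 0.2
```

Each class needs at least one candidate server, so the sketch requires more than one server per rack, rack per pod, and pod.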

Comparing vCRIB vs. source placement: The maximum load on a server is 5K rules while capacity is 4K, so source placement cannot fit all rules; with Random IP assignment, even the average load (4.2K) exceeds capacity. [Figure: traffic overhead ratio (0 to 0.3) for Range and Random IP assignment, for server memory_switch memory configurations 4k_0, 4k_4k, and 4k_6k.] vCRIB finds a low-traffic feasible solution. Range performs better because similar partitions come from the same source. Adding more resources helps vCRIB reduce traffic overhead.

Parameter sensitivity analysis: Rules in partitions. [Figure: average similarity vs. average partition size (both axes 0 to 2000 rules) for vCRIB and source placement; the total space is defined by the maximum load on a server.]

Parameter sensitivity analysis: Rules in partitions. Traffic overhead is lower for smaller partitions and for more similar ones. [Figure: the same plot, marking region A, where vCRIB's traffic overhead is below 10%.]

Conclusion and future work. Conclusion: vCRIB allows operators and users to specify rules, and manages their placement in a way that respects resource constraints and minimizes traffic overhead. Future work: support reactive placement by adding the controller in the loop; break a partition when a VM has a large number of rules; test on other rulesets.
