Assessing via Traditional Cognitive Tests and Aptitude Tests

Terminology, standardization, interview testing, domain-referenced vs. norm-referenced testing, discrimination, generalization, and examples of traditional cognitive tests like GATB and GMA are discussed in Chapter 10.

Uploaded on Mar 01, 2025 | 0 Views

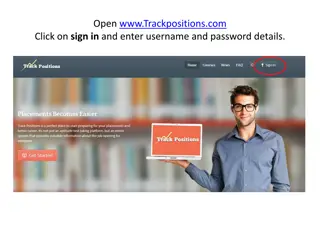


Presentation Transcript

Part III: Choosing the Right Method. Chapter 10: Assessing via Tests. Topics: Traditional Employment Tests; Work Samples; Situational Judgment Tests (SJT); Technology; Global Testing. chapter 10 Assessing via Tests 1

Terminology. Test: an objective and standardized procedure for measuring a psychological construct using a sample of behavior. What are some examples? Standardization: controlling the conditions and procedures of test administration, keeping them constant or unvarying. Construct: a fairly well-developed idea of a trait; most KSAOs are constructs. Kerlinger's definitions: give an I-O-related example of a construct and an operational definition using Kerlinger's definitions.

More terms. Standardization: controlling conditions and procedures so that scores among different people are comparable. What makes an interview a test rather than just an interview?

NORM-REFERENCED AND DOMAIN-REFERENCED TESTING. What is the difference between the two? (Domain-referenced is also called criterion-referenced.) What are some examples (or situations) where the purpose would require one or the other? (Thought question.) Hint: consider cases where criticality of performance is important, or where characteristics of the applicant pool may vary geographically or over time.
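The distinction can be made concrete with a small sketch. It assumes a common reading of the two approaches: a norm-referenced score is a percentile rank within a comparison group, while a domain-referenced score is the proportion of the content domain answered correctly, judged against a fixed standard. The function names, the two norm groups, and the 80% mastery standard are all hypothetical and chosen only for illustration.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score, norm_scores):
    """Norm-referenced: percent of the norm group scoring below `score`,
    counting ties as half (a common convention, assumed here)."""
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def meets_standard(items_correct, items_total, mastery_proportion):
    """Domain-referenced: compare proportion correct to a fixed standard."""
    return items_correct / items_total >= mastery_proportion


# Two hypothetical applicant pools (e.g. different regions or years).
norm_group_a = [18, 20, 22, 24, 26, 28, 30, 32, 34, 36]
norm_group_b = [28, 30, 32, 34, 36, 38, 40, 42, 44, 46]

raw_score = 32  # items correct out of a hypothetical 50-item test

# The same raw score ranks differently depending on the pool...
print(percentile_rank(raw_score, norm_group_a))  # 75.0
print(percentile_rank(raw_score, norm_group_b))  # 25.0

# ...but the domain-referenced decision does not depend on the pool.
print(meets_standard(raw_score, 50, 0.8))  # False
```

This illustrates the hint on the slide: when the applicant pool shifts, a percentile moves with it, whereas a fixed standard stays put, which matters when a minimum level of performance is critical.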

TRADITIONAL COGNITIVE TESTS. Discrimination and generalization, i.e., recognizing or discovering relationships. Knowing, perceiving, remembering, understanding, cognitive manipulation. Problem solving, evaluation of ideas. Compare and contrast: IQ, GMA, cognitive ability. These can also be considered aptitude and/or achievement tests, e.g., the WPT with NFL players (do you think scores are related to performance?).

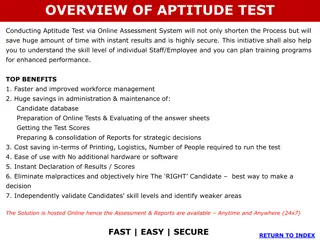

Traditional Cognitive Tests (Aptitude Tests). What is the difference between an achievement test and an aptitude test? Should you make one or buy one? GATB (General Aptitude Test Battery): GMA, Verbal Aptitude, Numerical, Spatial, Form Perception, Clerical Perception, Motor/Coordination, Finger Dexterity, Manual Dexterity.

Cognitive Ability. Off-the-shelf tests: WPT; Watson-Glaser Critical Thinking Appraisal (WGCTA); DAT (Differential Aptitude Tests). What is a job you would use each of them for? What are the relative advantages and disadvantages of developing homemade tests vs. using commercially available ones?

Work Samples and Performance Tests. How can they be both criteria and/or predictors? Give examples. Work samples and simulations: a standardized abstraction of the work. Give hypothetical examples of a high- and a low-fidelity simulation (using an example from the Boeing 737 MAX MCAS). What level of abstraction would you use? Developing work samples to measure proficiency: for a criterion, which tasks would be measured? For selection, which ones would be omitted?

Work Samples and Performance Tests (cont.). Situational judgment tests: can be either multiple choice or video (higher fidelity). They can ask what one should do (McDaniel & Nguyen, 2001) or what one would do (Ployhart & Ehrhart, 2003). They show incremental validity over GMA, personality, and job experience (Chan & Schmitt, 2002). If situational judgment is used as a construct for good judgment, then it holds much promise (Brooks & Highhouse, 2006).

Non-Cognitive Performance. Physical abilities (a series of distinct physical activities): it is sometimes difficult to set valid cut scores. Make sure the cut score does not discriminate unfairly by gender (can the job be redesigned?). Fitness testing (measures strength, agility, etc.): a two-edged sword, both for setting standards and for not doing so. What is the legal conundrum here? Should fitness testing be required for some jobs on a daily basis? Should drug testing be performed for some jobs and not others? Sensory and psychomotor proficiencies (GATB?).

Technology and Testing. What is a stenographer? Where did they go? Computerization of tests: high fidelity is more possible with CAT (SJT, etc.); collecting and storing data is made easier (a lot of data!). Computer adaptive tests: what's the difference between linear testing and branching algorithms? How can IRT help?
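One possible answer to the IRT question can be sketched in code. In a linear test every examinee sees the same items in the same order; in an adaptive test the next item depends on the responses so far. The sketch below assumes a two-parameter logistic (2PL) IRT model, picks the unused item with the most information at the current ability estimate, and re-estimates ability with a simple grid-based posterior mean under a standard normal prior. The item bank, its parameter values, and all function names are hypothetical, and operational CAT systems use more elaborate rules (exposure control, stopping criteria).

```python
import math


def p_correct(theta, a, b):
    """2PL model: probability of a correct response at ability theta,
    for an item with discrimination a and difficulty b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item at ability theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def estimate_theta(responses, items):
    """Posterior-mean ability on a grid from -4 to 4, with a standard
    normal prior. `responses` is a list of (item_index, was_correct)."""
    grid = [g / 10.0 for g in range(-40, 41)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for idx, correct in responses:
            a, b = items[idx]
            p = p_correct(theta, a, b)
            w *= p if correct else (1.0 - p)
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)


def next_item(theta, items, used):
    """Branching step: choose the unused item most informative at theta."""
    candidates = [i for i in range(len(items)) if i not in used]
    return max(candidates, key=lambda i: item_information(theta, *items[i]))


# Hypothetical item bank: (discrimination a, difficulty b), easy to hard.
item_bank = [(1.0, -2.0), (1.0, -1.0), (1.0, 0.0), (1.0, 1.0), (1.0, 2.0)]

first = next_item(0.0, item_bank, set())  # start at average ability
theta_after_correct = estimate_theta([(first, True)], item_bank)
theta_after_wrong = estimate_theta([(first, False)], item_bank)

print(first)                                              # 2
print(next_item(theta_after_correct, item_bank, {first}))  # 3 (harder)
print(next_item(theta_after_wrong, item_bank, {first}))    # 1 (easier)
```

The branching shows up in the last two lines: a correct answer routes the examinee to a harder item and an incorrect answer to an easier one, whereas a linear test would present item 3 next regardless.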

Technology and Testing: Proctored vs. Un-proctored. Proctored vs. un-proctored testing (a range). Proctored testing: 1. limits distraction; 2. verifies user identity; 3. monitors time (also possible with un-proctored testing); 4. prevents unauthorized substitutes; 5. prevents cheating.

Technology and Testing: Proctored vs. Un-proctored. Use of mobile devices for testing online (Arthur et al., 2014). Un-proctored internet-based tests (UIT): can they be trusted for personality? For cognitive ability? See the two articles in the files directory (Arthur et al., 2009 & 2010). AOE Science

Technology and Testing: Simulations, Games, Gamification. Gamification: game elements introduced into traditional testing, such as SJTs or cognitive assessments; personality assessed with facial recognition in online interviews or in in-basket exercises. These vary in levels of fidelity, e.g., online interviews with avatars or video interaction with actors. IRT may be implemented here.

Global Testing: Standardization across Countries. Legal issues across countries: definitions of protected (or minority) groups vary (examples?); ethical behavior is viewed differently (examples?); rights of workers vary (examples?). These issues are amplified in a global environment.

Global Issues: Translation and Equivalence Issues. Cross-cultural testing is a challenge: different approaches to testing, test administration problems, score equivalence. Considerations: use IRT to establish item equivalence; develop global measures (Schmitt, Kihm & Robie, 2000); always use translation followed by back-translation.

Global Testing: Standardization across Countries. Translation and equivalence: score equivalence is unattainable with literal translation. Words don't mean the same thing in different cultures. Some constructs may not exist in some cultures. E.g., on the island of Bali, direction terms are geocentric: they describe where the speaker is located relative to an object (e.g., a volcano). Speakers of the Guugu Yimidhirr language do not use left and right; they always know where north is, so they would say: "a spider is crawling up your southeastern arm."

Tests and Controversy. Setting cut scores is rarely recommended but often necessary: civil service; licenses and certification; cyclical hiring (needs forecasting estimates, e.g., teachers). Predicted Yield Model (Thorndike, 1949): candidate qualifications fluctuate; availability of openings varies; depends upon accurate forecasting (history and research). Regression-based methods.
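The slide names the two approaches without giving procedures, so the following is only a simplified sketch of how each could work. For the predicted yield idea it assumes three forecast inputs: the scores expected in the applicant pool, the number of openings, and the share of offers that will be accepted; the cut score is then the lowest score that still receives an offer. For the regression-based idea it assumes a fitted straight-line prediction of job performance from the test score, and solves for the test score that predicts minimally acceptable performance. All names and numbers are hypothetical.

```python
import math


def predicted_yield_cut(expected_scores, openings, acceptance_rate):
    """Cut score = score of the last applicant who must receive an offer
    in order to fill `openings`, given the forecast acceptance rate."""
    offers_needed = math.ceil(openings / acceptance_rate)
    ranked = sorted(expected_scores, reverse=True)
    if offers_needed > len(ranked):
        raise ValueError("forecast pool is too small to fill the openings")
    return ranked[offers_needed - 1]


def regression_cut(intercept, slope, minimum_criterion):
    """Solve minimum_criterion = intercept + slope * score for score.
    Assumes a positive slope (higher scores predict better performance)."""
    return (minimum_criterion - intercept) / slope


# Hypothetical forecast: ten expected applicant scores, three openings,
# and three out of every four offers expected to be accepted.
forecast_scores = [55, 60, 62, 65, 70, 72, 75, 80, 85, 90]
print(predicted_yield_cut(forecast_scores, openings=3, acceptance_rate=0.75))  # 75

# Hypothetical regression line: predicted performance = 10 + 0.5 * score,
# with 40 taken as the minimally acceptable performance rating.
print(regression_cut(intercept=10.0, slope=0.5, minimum_criterion=40.0))  # 60.0
```

Both functions are only as good as their inputs, which is the slide's point about dependence on accurate forecasting: a wrong pool forecast or acceptance rate moves the first cut score, and a poorly estimated regression line moves the second.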

Tests and Controversy. Do we need licensing tests to establish credentials? Do we need educational proficiency exams? We will always assess, either with tests (more objective) or with subjective judgment.