Neural Network and Variational Autoencoders

Covers the concepts of neural networks and variational autoencoders, including decision-making, knowledge representation, simplification using equations, activation functions, and the limitations of a single perceptron.

4 views • 28 slides
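
The limitation of a single perceptron can be seen with the classic perceptron learning rule. This is a minimal Python/NumPy sketch: the function names `train_perceptron` and `accuracy` are illustrative and do not come from the slides. It learns AND, but it cannot fit XOR because XOR is not linearly separable.

```python
import numpy as np

def train_perceptron(X, y, epochs=20, lr=1.0):
    """Classic perceptron rule with a step activation; returns weights and bias."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            # Weights change only on mistakes, moving the boundary toward the target.
            w += lr * (target - pred) * xi
            b += lr * (target - pred)
    return w, b

def accuracy(X, y, w, b):
    preds = (X @ w + b > 0).astype(int)
    return float(np.mean(preds == y))

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

w, b = train_perceptron(X, y_and)
print("AND accuracy:", accuracy(X, y_and, w, b))
w, b = train_perceptron(X, y_xor)
print("XOR accuracy:", accuracy(X, y_xor, w, b))
```

AND reaches an accuracy of 1.0. XOR stays below 1.0 however many epochs you run, because no single line separates its two classes. A hidden layer removes that limitation.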

Comprehensive Overview of Autoencoders and Their Applications

Autoencoders (AEs) are neural networks trained using unsupervised learning to copy input to output, learning an embedding. This article discusses various types of autoencoders, topics in autoencoders, applications such as dimensionality reduction and image compression, and related concepts like embe

7 views • 86 slides
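
The idea of training a network to copy its input to its output through a narrow bottleneck can be sketched with a purely linear autoencoder trained by gradient descent on mean squared error. This is a minimal NumPy sketch, not the article's method. The toy data, the names `W_enc`/`W_dec`, the 2-D code size, and the learning rate are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 points in 5-D that lie close to a 2-D subspace.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 5))
X -= X.mean(axis=0)

d_in, d_code = X.shape[1], 2
W_enc = 0.1 * rng.normal(size=(d_in, d_code))  # encoder: 5 -> 2
W_dec = 0.1 * rng.normal(size=(d_code, d_in))  # decoder: 2 -> 5
lr = 0.02

def loss(X, W_enc, W_dec):
    """Mean squared reconstruction error."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial = loss(X, W_enc, W_dec)
for step in range(3000):
    Z = X @ W_enc        # embedding (code)
    X_hat = Z @ W_dec    # reconstruction
    err = X_hat - X
    # Gradients of the mean squared error with respect to each weight matrix.
    grad_dec = 2 * Z.T @ err / err.size
    grad_enc = 2 * X.T @ (err @ W_dec.T) / err.size
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

final = loss(X, W_enc, W_dec)
print(f"reconstruction MSE: {initial:.4f} -> {final:.4f}")
```

With linear layers and squared error, the learned 2-D code spans the same subspace as the top two principal components. Nonlinear activations and deeper encoders are what let practical autoencoders go beyond PCA.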

Deep Generative Models in Probabilistic Machine Learning

This content explores various deep generative models such as Variational Autoencoders and Generative Adversarial Networks used in Probabilistic Machine Learning. It discusses the construction of generative models using neural networks and Gaussian processes, with a focus on techniques like VAEs and

10 views • 18 slides

Improving Qubit Readout with Autoencoders in Quantum Science Workshop

Dispersive qubit readout, standard models, and the use of autoencoders for improving qubit readout in quantum science are discussed in the workshop led by Piero Luchi. The workshop covers topics such as qubit-cavity systems, dispersive regime equations, and the classification of qubit states through

3 views • 22 slides

Telco Data Anonymization Techniques and Tools

Explore the sensitive data involved in telco anonymization, techniques such as GANs and Autoencoders, and tools like Microsoft's Presidio and Python libraries for effective data anonymization in the telecommunications field.

1 views • 9 slides

Classical Mechanics: Variational Principle and Applications

Classical Mechanics explores the Variational Principle in the calculus of variations, offering a method to determine the extremum (maximum or minimum) values of quantities that depend on functions. This principle, rooted in the wave function, aids in finding parameter values such as expectation values independently of the coor

2 views • 16 slides

Autoencoders: Applications and Properties

Autoencoders play a crucial role in supervised and unsupervised learning, with applications ranging from image classification to denoising and watermark removal. They compress input data into a latent space and reconstruct it to produce valuable embeddings. Autoencoders are data-specific, lossy, and

1 views • 18 slides

Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) in Machine Learning

Introduction to Generative Models with Latent Variables, including Gaussian Mixture Models and the general principle of generation in data encoding. Exploring the creation of flexible encoders and the basic premise of variational autoencoders. Concepts of VAEs in practice, emphasizing efficient samp

0 views • 19 slides
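
The general principle of generation with latent variables can be shown with a Gaussian Mixture Model. First draw a discrete latent component z, then draw the observation x from that component's Gaussian. This is a minimal NumPy sketch of that ancestral sampling. The mixture weights, means, and standard deviations are made-up illustrative values.

```python
import numpy as np

def sample_gmm(n, weights, means, stds, rng):
    """Ancestral sampling: draw the latent component z, then x given z."""
    z = rng.choice(len(weights), size=n, p=weights)   # latent variable
    x = rng.normal(loc=means[z], scale=stds[z])       # observation given z
    return x, z

rng = np.random.default_rng(42)
weights = np.array([0.3, 0.7])
means = np.array([-2.0, 3.0])
stds = np.array([0.5, 1.0])

x, z = sample_gmm(10_000, weights, means, stds, rng)
print("empirical mean:", x.mean(), "expected:", weights @ means)
```

A VAE keeps the same two-step recipe. It replaces the discrete z with a continuous latent vector and the fixed Gaussian means with a neural-network decoder.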

Principal Components Analysis (PCA) and Autoencoders in Neural Networks

Principal Components Analysis (PCA) is a technique that extracts important features from high-dimensional data by finding orthogonal directions of maximum variance. It aims to represent data in a lower-dimensional subspace while minimizing reconstruction error. Autoencoders, on the other hand, are n

2 views • 35 slides
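
PCA as described here (orthogonal directions of maximum variance, and a low-dimensional projection that minimizes reconstruction error) can be computed from the singular value decomposition of the centered data. This is a minimal NumPy sketch. The helper name `pca` and the toy data are illustrative assumptions.

```python
import numpy as np

def pca(X, k):
    """Project centered data onto its top-k principal directions via SVD."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are orthonormal directions ordered by decreasing variance.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    codes = Xc @ components.T            # low-dimensional representation
    X_rec = codes @ components + mean    # reconstruction from k components
    explained_var = S[:k] ** 2 / (len(X) - 1)
    return components, codes, X_rec, explained_var

rng = np.random.default_rng(1)
X = rng.normal(size=(100, 3)) @ np.array([[3.0, 0.0, 0.0],
                                          [1.0, 1.0, 0.0],
                                          [0.0, 0.0, 0.1]])
components, codes, X_rec, var = pca(X, k=2)
print("reconstruction MSE with 2 components:", np.mean((X - X_rec) ** 2))
```

Keeping all three components reconstructs the data exactly. Dropping the low-variance third direction loses very little, which is the trade-off PCA makes explicit.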

Neural Quantum States and Symmetries in Quantum Mechanics

This article delves into the intricacies of anti-symmetrized neural quantum states and the application of neural networks in solving for the ground-state wave function of atomic nuclei. It discusses the setup using the Rayleigh-Ritz variational principle, neural quantum states (NQSs), variational pa

3 views • 15 slides
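
The Rayleigh-Ritz variational principle states that the energy expectation of any trial state is an upper bound on the ground-state energy. In a finite-dimensional setting this means the Rayleigh quotient never falls below the smallest eigenvalue. This is a minimal NumPy check. It uses a random real symmetric matrix as a stand-in Hamiltonian and random trial vectors, not a neural quantum state.

```python
import numpy as np

def rayleigh_quotient(H, psi):
    """Energy expectation <psi|H|psi> / <psi|psi> for a real trial vector."""
    return (psi @ H @ psi) / (psi @ psi)

rng = np.random.default_rng(0)
A = rng.normal(size=(6, 6))
H = (A + A.T) / 2                  # toy real symmetric "Hamiltonian"
E0 = np.linalg.eigvalsh(H)[0]      # exact ground-state energy (smallest eigenvalue)

# Every trial state gives an energy at or above E0.
trial_energies = [rayleigh_quotient(H, rng.normal(size=6)) for _ in range(1000)]
print("E0 =", E0, " best trial energy =", min(trial_energies))
```

A variational method improves on random guessing by adjusting the trial state's parameters, for example a network's weights, to push this quotient down toward E0.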

Stacked RBMs for Deep Learning

Explore the concept of stacking Restricted Boltzmann Machines (RBMs) to learn hierarchical features in deep neural networks. By training layers of features directly from pixels and iteratively learning features of features, we can improve the variational lower bound on the log probability of generating

0 views • 39 slides

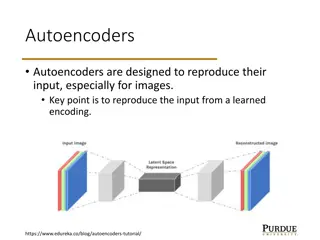

Variational Autoencoders (VAE) in Machine Learning

Autoencoders are neural networks designed to reproduce their input; Variational Autoencoders (VAEs) add a probabilistic aspect to the encoding and decoding process. A VAE uses an encoder and a decoder that work together to learn probability distributions over the latent variables, enabli

8 views • 11 slides
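
Two pieces make the probabilistic encoding trainable. The first is the reparameterization trick, which samples the latent vector as a deterministic function of the encoder outputs plus independent noise. The second is the closed-form KL divergence between the encoder's diagonal Gaussian and a standard normal prior. This is a minimal NumPy sketch of both. The `mu` and `log_var` values are hypothetical encoder outputs, and the networks themselves are omitted.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I), so z is differentiable in mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims."""
    return -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=-1)

rng = np.random.default_rng(0)
mu = np.array([[0.5, -1.0]])       # hypothetical encoder outputs for one input
log_var = np.array([[0.0, -2.0]])
z = reparameterize(mu, log_var, rng)
print("latent sample:", z, "KL:", kl_to_standard_normal(mu, log_var))
```

In a full VAE, the training loss adds this KL term to a reconstruction term computed by passing `z` through the decoder.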

Generative AI Training | Generative AI Course in Hyderabad

Visualpath's Generative AI Training covers key technologies like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer models such as GPT. Attend a Free Demo Call At 91-9989971070. Visit our Blog: //vis

1 views • 2 slides

Evolution of Lexical Categories: A Cognitive Sociolinguistics Perspective

The lecture series at the University of Leuven explores Diachronic Prototype Semantics and its implications for Variational Linguistics. It delves into semasiological, conceptual onomasiological, and formal onomasiological variation in linguistic meaning, emphasizing the role of variability in the e

2 views • 67 slides

Hybrid Variational/Ensemble Data Assimilation for NCEP GFS

Hybrid Variational/Ensemble Data Assimilation combines features from the Ensemble Kalman Filter and Variational assimilation methods to improve the NCEP Global Forecast System. It incorporates ensemble perturbations into the variational cost function, leading to more accurate forecasts. The approach

1 views • 22 slides

Evidence for Hydrometeor Storage and Advection Effects in DYNAMO Budget Analyses of the MJO

Variational constraint analyses (VCA) were conducted for DYNAMO in two regions to compare observed radar rainfall data with conventional budget method results, examining differences and composite analyses of MJO events. The study utilized input data including Gridded Product Level 4 sounding data an

0 views • 13 slides

GENAI Training In Hyderabad | GENAI Course In Hyderabad

Gen AI Training - Visualpath offers the best Generative AI Training and teaches key technologies like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer models such as GPT. Our Generative AI Online Training is avai

0 views • 3 slides

Gen AI Online Training Institute | Gen AI Training

Gen AI Online Training Institute - Visualpath offers the best Generative AI Training and teaches key technologies like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer models such as GPT. Our Generative AI Online

0 views • 10 slides

Machine Learning and Generative Models in Particle Physics Experiments

Explore the utilization of machine learning algorithms and generative models for accurate simulation in particle physics experiments. Understand the concepts of supervised, unsupervised, and semi-supervised learning, along with generative models like Variational Autoencoder and Gaussian Mixtures. Le

1 views • 15 slides

Probabilistic Graphical Models Part 2: Inference and Learning

This segment delves into various types of inferences in probabilistic graphical models, including marginal inference, posterior inference, and maximum a posteriori inference. It also covers methods like variable elimination, belief propagation, and junction tree for exact inference, along with appro

1 views • 33 slides
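
Variable elimination for marginal inference can be shown on a tiny chain A → B → C. You sum out one variable at a time instead of building the full joint table. This is a minimal NumPy sketch. The conditional probability tables are hypothetical numbers chosen for illustration, and the brute-force version is included only as a check.

```python
import numpy as np

# Hypothetical chain A -> B -> C over binary variables.
p_a = np.array([0.6, 0.4])                 # P(A)
p_b_given_a = np.array([[0.7, 0.3],        # P(B | A=0)
                        [0.2, 0.8]])       # P(B | A=1)
p_c_given_b = np.array([[0.9, 0.1],        # P(C | B=0)
                        [0.4, 0.6]])       # P(C | B=1)

def marginal_c_by_elimination():
    """Eliminate A, then B, keeping each intermediate factor small."""
    tau_b = p_a @ p_b_given_a              # sum_a P(a) P(b|a): a factor over B
    return tau_b @ p_c_given_b             # sum_b tau(b) P(c|b): P(C)

def marginal_c_brute_force():
    """Build the full joint table P(a, b, c) and sum out A and B."""
    joint = p_a[:, None, None] * p_b_given_a[:, :, None] * p_c_given_b[None, :, :]
    return joint.sum(axis=(0, 1))

print(marginal_c_by_elimination(), marginal_c_brute_force())
```

Both routes give P(C) = [0.65, 0.35]. On a chain of n variables, elimination keeps every intermediate factor small. The joint table, by contrast, grows exponentially with n.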

Generative AI Online Training | Generative AI Training

Gen AI Online Training - Visualpath offers the best Generative AI Training and teaches key technologies like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer models such as GPT. Our Generative AI Online Training

0 views • 10 slides

Nonlinear Proton Dynamics in the IOTA Ring: Advancements in Beam Acceleration

Probing the frontier of proton acceleration, this research delves into nonlinear dynamics within the IOTA ring, showcasing integrable optics and innovative technologies. Collaborations with Fermilab drive advancements in accelerator science, supported by the US DOE. The study explores variational as

2 views • 21 slides

Riemannian Normalizing Flow on Variational Wasserstein Autoencoder for Text Modeling

This study explores the use of Riemannian Normalizing Flow on Variational Wasserstein Autoencoder (WAE) to address the KL vanishing problem in Variational Autoencoders (VAE) for text modeling. By leveraging Riemannian geometry, the Normalizing Flow approach aims to prevent the collapse of the poster

1 views • 20 slides

Insights into Small Strain Elasto-Plasticity Variational Viewpoint

Explore the variational viewpoint on old and new results in small strain elasto-plasticity, discussing deformation, frame indifference, linear elasticity, crystalline plasticity, stress equilibration, thermodynamics, Von Mises flow rule, existence theory, and variational evolution in a nutshell.

0 views • 13 slides

Tricks of the Trade II - Deep Learning and Neural Nets

Dive into the world of deep learning and neural networks with "Tricks of the Trade II" from Spring 2015. Explore topics such as perceptron, linear regression, logistic regression, softmax networks, backpropagation, loss functions, hidden units, and autoencoders. Discover the secrets behind training

0 views • 27 slides

Terrain-Resolving Scheme for Doppler Radar Analysis System

This study focuses on developing a unique terrain-resolving scheme for the forward model and its adjoint in the Four-Dimensional Variational Doppler Radar Analysis System (VDRAS) in 2018. The research delves into enhancing the capabilities of radar analysis systems to improve weather foreca

0 views • 14 slides

Variational Knowledge Graph Reasoning with Generative Models

In this study, the concept of variational inference is applied to resolve intractable objectives in knowledge graph completion tasks. The research outlines methods, experimental results, and concluding remarks, along with exploring existing knowledge graph completion techniques and problem formulati

0 views • 23 slides

Cutting-edge Techniques in Graph Regression using Graph Autoencoders

Explore the latest advancements in graph regression using Graph Autoencoders as discussed in the IAPR Joint International Workshops on Statistical Techniques in Pattern Recognition (SPR 2022) and Structural and Syntactic Pattern Recognition (SSPR 2022). The methodology involves a 2-step approach wit

0 views • 15 slides

Unsupervised Learning and Neural Networks: A Comprehensive Guide

Explore the world of unsupervised learning in neural networks, including concepts like clustering, autoencoders, and more. Discover the key differences between supervised and unsupervised learning approaches and their applications in solving complex data problems.

0 views • 13 slides

Cutting-Edge Prosody Modeling for Expressive Speech Synthesis

Explore the latest research on discourse-level prosody modeling using variational autoencoders for non-autoregressive expressive speech synthesis. The study delves into innovative methods to address the one-to-many mapping challenge in synthesizing expressive speech, presenting a detailed analysis o

0 views • 22 slides

Advanced Medical Imaging Applications for Brain MR Analysis

Explore cutting-edge applications in medical imaging, including image segmentation, age regression, sex classification, and representation learning using advanced neural networks. Dive into the world of multi-sequence brain MR analysis, from predicting brain tissues to reconstructing images with dee

0 views • 10 slides

Few-Shot Link Prediction Using Variational Heterogeneous Attention Networks

Explore a novel approach for few-shot link prediction leveraging variational heterogeneous attention networks to enhance representations of high-frequency and few-shot entities. Addressing challenges of long-tailed distributions, tail entity importance variations, and information bottlenecks, the mo

0 views • 10 slides

Molecular Structure: Hückel Approximation and Molecular Orbitals

Explore the concepts of Hückel approximation, Hartree-Fock equations, and Density Functional Theory in molecular structure studies. Learn about molecular orbitals, variational methods, overlap integrals, and more through examples of homonuclear and heteronuclear molecules.

0 views • 22 slides
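
In the Hückel approximation, each carbon contributes one p orbital. The Hamiltonian has α on the diagonal and β between bonded neighbours, and the overlap is neglected, so the π-orbital energies are α + xβ, where x runs over the eigenvalues of the connectivity matrix. This is a minimal NumPy sketch. It reports energies in units where α = 0 and β = -1 (β is negative), a common textbook convention assumed here.

```python
import numpy as np

def huckel_energies(adjacency, alpha=0.0, beta=-1.0):
    """Hückel pi-orbital energies: H = alpha*I + beta*A, overlap taken as identity."""
    A = np.asarray(adjacency, dtype=float)
    H = alpha * np.eye(len(A)) + beta * A
    return np.linalg.eigvalsh(H)   # ascending; with beta < 0 the lowest is most bonding

# 1,3-butadiene: a chain of four carbon atoms C1-C2-C3-C4.
butadiene = [[0, 1, 0, 0],
             [1, 0, 1, 0],
             [0, 1, 0, 1],
             [0, 0, 1, 0]]
print(huckel_energies(butadiene))
```

For butadiene this gives the familiar levels α ± 1.618β and α ± 0.618β. For ethylene (a two-atom matrix) it gives α ± β.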

Understanding Computational Chemistry in Quantum Chemistry Studies

Explore the world of computational chemistry in quantum chemistry studies, covering topics like optimizing structures, basis set names, molecular orbitals, Hamiltonian principles, approximations, linear combinations of atomic orbitals, and the variational principle for finding optimal electronic str

0 views • 21 slides

AI CSI Compression Study with VQ-VAE Method

Explore the study on AI CSI compression using a new method based on the vector-quantized variational autoencoder (VQ-VAE), discussing existing works, performance evaluation, and future possibilities. The study focuses on reducing feedback overhead and improving throughput in wireless communication systems.

0 views • 10 slides
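
The step that makes a VQ-VAE "vector-quantized" replaces each continuous encoder output with its nearest entry in a learned codebook. Only the integer index of that entry then needs to be represented, which is how this kind of model can cut feedback overhead. This is a minimal NumPy sketch of the lookup alone. The codebook and encoder outputs are hypothetical, and the training details (straight-through gradients, codebook and commitment losses) are omitted.

```python
import numpy as np

def vector_quantize(z_e, codebook):
    """Replace each encoder output with its nearest codebook vector (Euclidean)."""
    # Squared distances between every encoder vector and every codebook entry.
    d = ((z_e[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    idx = d.argmin(axis=1)          # discrete codes: one integer per vector
    return codebook[idx], idx

codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])  # hypothetical 3-entry codebook
z_e = np.array([[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]])      # hypothetical encoder outputs
z_q, idx = vector_quantize(z_e, codebook)
print(idx)
```

With a codebook of K entries, each index costs about log2(K) bits. The decoder on the receiving side works from `codebook[idx]`.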

Decoupling Quantum Dynamics with Machine Learning

Explore variational decoupling of quantum dynamics using a proper basis and machine learning techniques to find efficient measurable cost functions and updates. Learn how computers can determine if a basis is proper for general dynamics.

0 views • 4 slides

Deep Similarity Learning for Multimodal Medical Images

Explore how deep neural networks are utilized to learn similarity metrics for uni-modal/multi-modal medical images in the context of image registration and clinical applications. Methods such as fully connected DNNs and stacked denoising autoencoders are discussed, emphasizing the importance of effe

0 views • 19 slides

International Conference on Particle Physics and Astrophysics - Resonant States of Muonic Three-Particle Systems

Explore the study of resonant states in muonic three-particle systems with lithium, helium, and hydrogen nuclei at the 7th International Conference on Particle Physics and Astrophysics. Discover the importance of low-energy interactions in atomic nuclei, computational variational methods, and stocha

0 views • 12 slides