Introduction to Deep Learning: Neural Networks and Multilayer Perceptrons

Explore the fundamentals of neural networks, including artificial neurons and activation functions, in the context of deep learning. Learn about multilayer perceptrons and their role in forming decision regions for classification tasks. Understand forward propagation and backpropagation as essential

3 views • 74 slides

Rainfall-Runoff Modelling Using Artificial Neural Network: A Case Study of Purna Sub-catchment, India

Rainfall-runoff modeling is crucial in understanding the relationship between rainfall and runoff. This study focuses on developing a rainfall-runoff model for the Upper Tapi basin in India using Artificial Neural Networks (ANNs). ANNs mimic the human brain's capabilities and have been widely used i

0 views • 26 slides

Understanding Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM)

Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) are powerful tools for sequential data learning, mimicking the persistent nature of human thoughts. These neural networks can be applied to various real-life applications such as time-series data prediction, text sequence processing,

15 views • 34 slides

Understanding Mechanistic Interpretability in Neural Networks

Delve into the realm of mechanistic interpretability in neural networks, exploring how models can learn human-comprehensible algorithms and the importance of deciphering internal features and circuits to predict and align model behavior. Discover the goal of reverse-engineering neural networks akin

6 views • 31 slides

Graph Neural Networks

Graph Neural Networks (GNNs) are a versatile form of neural networks that encompass various network architectures like NNs, CNNs, and RNNs, as well as unsupervised learning models such as RBM and DBNs. They find applications in diverse fields such as object detection, machine translation, and drug d

2 views • 48 slides

Understanding Keras Functional API for Neural Networks

Explore the Keras Functional API for building complex neural network models that go beyond sequential structures. Learn how to create computational graphs, handle non-sequential models, and understand the directed graph of computations involved in deep learning. Discover the flexibility and power of

1 view • 12 slides

Understanding LR Parsing and State Merging Techniques

The content discusses LR parsing techniques such as LR(0), SLR(1), LR(1), LALR(1), and their advantages in resolving shift-reduce and reduce-reduce conflicts. It also delves into state merging in LR parsing, highlighting how merging states can introduce conflicts and affect error detection in parser

0 views • 29 slides

Understanding Artificial Neural Networks From Scratch

Learn how to build artificial neural networks from scratch, focusing on multi-level feedforward networks like multi-level perceptrons. Discover how neural networks function, including training large networks in parallel and distributed systems, and grasp concepts such as learning non-linear function

1 view • 33 slides

Understanding Back-Propagation Algorithm in Neural Networks

Artificial Neural Networks aim to mimic brain processing. Back-propagation is a key method to train these networks, optimizing weights to minimize loss. Multi-layer networks enable learning complex patterns by creating internal representations. Historical background traces the development from early

1 view • 24 slides

A Deep Dive into Neural Network Units and Language Models

Explore the fundamentals of neural network units in language models, discussing computation, weights, biases, and activations. Understand the essence of weighted sums in neural networks and the application of non-linear activation functions like sigmoid, tanh, and ReLU. Dive into the heart of neural

0 views • 81 slides

Assistive Speech System for Individuals with Speech Impediments Using Neural Networks

Individuals with speech impediments face challenges with speech-to-text software, and this paper introduces a system leveraging Artificial Neural Networks to assist. The technology showcases state-of-the-art performance in various applications, including speech recognition. The system utilizes featu

1 view • 19 slides

Advancing Physics-Informed Machine Learning for PDE Solving

Explore the need for numerical methods in solving partial differential equations (PDEs), traditional techniques, neural networks' functioning, and the comparison between standard neural networks and physics-informed neural networks (PINN). Learn about the advantages, disadvantages of PINN, and ongoi

0 views • 14 slides

Exploring Neural Quantum States and Symmetries in Quantum Mechanics

This article delves into the intricacies of anti-symmetrized neural quantum states and the application of neural networks in solving for the ground-state wave function of atomic nuclei. It discusses the setup using the Rayleigh-Ritz variational principle, neural quantum states (NQSs), variational pa

1 view • 15 slides

Understanding Spiking Neurons and Spiking Neural Networks

Spiking neural networks (SNNs) are a new approach modeled after the brain's operations, aiming for low-power neurons, billions of connections, and high-accuracy training algorithms. Spiking neurons have unique features and are more energy-efficient than traditional artificial neural networks. Explor

5 views • 23 slides

Introduction to Neural Networks in IBM SPSS Modeler 14.2

This presentation provides an introduction to neural networks in IBM SPSS Modeler 14.2. It covers the concepts of directed data mining using neural networks, the structure of neural networks, terms associated with neural networks, and the process of inputs and outputs in neural network models. The d

0 views • 18 slides

Dynamic Oracle Training in Constituency Parsing

Policy gradient serves as a proxy for dynamic oracles in constituency parsing, helping to improve parsing accuracy by supervising each state with an expert policy. When dynamic oracles are not available, reinforcement learning can be used as an alternative to achieve better results in various natura

0 views • 20 slides

Understanding Shift-Reduce Parsing Example in Mr. Lupoli's F2012

This example explains shift-reduce parsing by tracing the input to the original start symbol. It demonstrates how shifting and reducing operations work in parsing mechanics, using the given original production and syntax rules for matching and reduction steps.

0 views • 16 slides

Neural Shift-Reduce Dependency Parsing in Natural Language Processing

This content explores the concept of Shift-Reduce Dependency Parsing in the context of Natural Language Processing. It describes how a Shift-Reduce Parser incrementally builds a parse without backtracking, maintaining a buffer of input words and a stack of constructed constituents. The process invol

0 views • 34 slides

Understanding Shift Registers in Sequential Logic Circuits

Shift registers are sequential logic circuits used for storing digital data. They consist of interconnected flip-flops that shift data in a controlled manner. This article explores different types of shift registers such as Serial In - Serial Out, Serial In - Parallel Out, Parallel In - Serial Out,

2 views • 9 slides

Understanding Advanced Classifiers and Neural Networks

This content explores the concept of advanced classifiers like Neural Networks which compose complex relationships through combining perceptrons. It delves into the workings of the classic perceptron and how modern neural networks use more complex decision functions. The visuals provided offer a cle

0 views • 26 slides

Understanding Advanced Parsing Techniques for NLP Evaluation

Delve into the realm of advanced parsing with a focus on evaluating natural language processing models. Learn about tree comparison, evaluation measures like Precision and Recall, and the use of corpora like Penn Treebank for standardized parsing evaluation. Gain insights on how to assess parser per

0 views • 50 slides

Analyzing Discourse Structures in Natural Language Processing

This content explores various aspects of NLP including discourse analysis, parsing, rhetorical relations, and argumentative zoning. It delves into understanding text structures, relationships, and the use of different rhetorical devices. Examples and illustrations are provided to aid comprehension a

0 views • 8 slides

Understanding Neural Processing and the Endocrine System

Explore the intricate communication network of the nervous system, from nerve cells transmitting messages to the role of dendrites and axons in neural transmission. Learn about the importance of insulation in neuron communication, the speed of neural impulses, and the processes involved in triggerin

0 views • 24 slides

Review of Quiz 2 Topics: Encoding in Python, Binary Representations, and Parsing Messages

Today's session covered a review of Quiz 2 topics focusing on Encoding in Python, Binary Representations, and Parsing Messages. Key points included understanding why different types of data cannot have unique types in Python, recognizing the significance of 0d0a in HTTP body, discussing exercises fr

0 views • 10 slides

Understanding Sentence Comprehension and Memory in Psycholinguistics

Sentence comprehension involves parsing, assigning linguistic categories, and utilizing syntactic, semantic, and pragmatic knowledge. The immediacy principle and wait-and-see approach play roles in the processing of sentences. Figurative language and the challenges in parsing sentences are also disc

0 views • 26 slides

Understanding Top-Down Parsing in Context-Free Syntax

Context-free syntax expressed with context-free grammar plays a key role in top-down parsing. This parsing method involves constructing parse trees from the root down to match an input string by selecting the right productions guided by the input. Recursive-descent parsing, Rule Sentential Forms, an

0 views • 17 slides

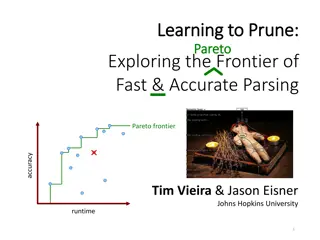

Exploring Fast & Accurate Parsing With Learning to Prune

In this informative content, the concept of learning to prune is discussed in the context of exploring the frontier of fast and accurate parsing. It delves into the optimization tradeoff between runtime and accuracy in end-to-end systems, showcasing a Pareto frontier of different system performances

0 views • 42 slides

Exploring Data Acquisition and Parsing Methods in Data Science

This lecture covers various aspects of obtaining and parsing data, including methods for extracting web content, basic pandas commands for data storage and exploration, and the use of libraries like Requests, BeautifulSoup, and pandas. The Data Science Process is highlighted, emphasizing the importa

0 views • 42 slides

Neural Network Control for Seismometer Temperature Stabilization

Utilizing neural networks, this project aims to enhance seismometer temperature stabilization by implementing nonlinear control to address system nonlinearities. The goal is to improve control performance, decrease overshoot, and allow adaptability to unpredictable parameters. The implementation of

0 views • 24 slides

Introduction to NLP Parsing Techniques and Algorithms

Delve into the world of Natural Language Processing (NLP) with a focus on parsing techniques like Cocke-Kasami-Younger (CKY) and Chart Parsing. Explore challenges such as left recursion and dynamic programming in NLP, along with detailed examples and explanations of the CKY Algorithm.

0 views • 42 slides

Understanding Batch Normalization in Neural Networks

Batch Normalization (BN) is a technique used in neural networks to improve training efficiency by reducing internal covariate shift. This process involves normalizing input data to specific ranges or mean and variance values, allowing for faster convergence in optimization algorithms. By standardizi

0 views • 18 slides

Machine Learning and Artificial Neural Networks for Face Verification: Overview and Applications

In the realm of computer vision, the integration of machine learning and artificial neural networks has enabled significant advancements in face verification tasks. Leveraging the brain's inherent pattern recognition capabilities, AI systems can analyze vast amounts of data to enhance face detection

0 views • 13 slides

Enhancing Name and Address Parsing for Data Standardization

Explore the project focused on improving the quality of name and address parsing using active learning methods at the University of Arkansas. Learn about the importance of parsing, entity resolution, and the token pattern approach in standardizing and processing unstructured addresses. Discover the

0 views • 11 slides

Understanding Neural Network Training and Structure

This text delves into training a neural network, covering concepts such as weight space symmetries, error back-propagation, and ways to improve convergence. It also discusses the layer structures and notation of a neural network, emphasizing the importance of finding optimal sets of weights and offs

0 views • 31 slides

Exploring Variability and Noise in Neural Networks

Understanding the variability of spike trains and sources of variability in neural networks, dissecting if variability is equivalent to noise. Delving into the Poisson model, stochastic spike arrival, and firing, and biological modeling of neural networks. Examining variability in different brain re

0 views • 71 slides

Understanding Neural Network Watermarking Technologies

Neural networks are being deployed in various domains like autonomous systems, but protecting their integrity is crucial due to the costly nature of machine learning. Watermarking provides a solution to ensure traceability, integrity, and functionality of neural networks by allowing imperceptible da

0 views • 15 slides

Understanding Bottom-Up and Top-Down Parsing in Computer Science

Bottom-up parsing and top-down parsing are two essential strategies in computer science for analyzing and processing programming languages. Bottom-up parsing involves constructing a parse tree starting from the leaves and moving towards the root, while top-down parsing begins at the root and grows t

0 views • 29 slides

Advances in Neural Semantic Parsing

Delve into the realm of neural semantic parsing with a focus on data recombination techniques, traditional parsers, and the shift towards domain-general models. Explore the application of sequence-to-sequence models and attention-based neural frameworks in semantic parsing tasks. Discover the evolvi

0 views • 67 slides

Utilizing Topic Modeling for Identifying Critical Log Lines in Research

By employing Topic Modeling, Vithor Bertalan, Robin Moine, and Prof. Daniel Aloise from Polytechnique Montréal's DORSAL Laboratory aim to extract essential log lines from log parsing research. The process involves building a log parser, identifying important log lines and symptoms, and establishi

0 views • 18 slides

Understanding Deep Generative Bayesian Networks in Machine Learning

Exploring the differences between Neural Networks and Bayesian Neural Networks, the advantages of the latter including robustness and adaptation capabilities, the Bayesian theory behind these networks, and insights into the comparison with regular neural network theory. Dive into the complexities, u

0 views • 22 slides