Dynamic Oracle Training in Constituency Parsing

Policy gradient can serve as a proxy for dynamic oracles in constituency parsing. Dynamic oracle training improves parsing accuracy by letting the parser explore at training time and supervising each visited state with an expert policy, typically chosen to maximize achievable F1. This addresses both exposure bias and the mismatch between the training loss and the evaluation metric. When no dynamic oracle is available for a given parser, reinforcement learning is a general-purpose alternative that has already helped in other tasks, including machine translation and CCG parsing.

Presentation Transcript

Policy Gradient as a Proxy for Dynamic Oracles in Constituency Parsing
Daniel Fried and Dan Klein

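Parsing by Local Decisions
Input $x$: The cat took a nap .
Parse $y$ as a sequence of local decisions: (S (NP The cat ) (VP ...
[Tree diagram with nodes S, NP, VP, NP over the sentence.]
$L(\theta) = \log p(y \mid x; \theta) = \sum_t \log p(y_t \mid y_{1:t-1}, x; \theta)$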
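The factorization on this slide can be checked numerically. The sketch below is only an illustration: the per-action probabilities are made-up numbers, not outputs of any parser, and `sequence_log_likelihood` is a hypothetical helper name.

```python
import math

def sequence_log_likelihood(step_probs):
    """log p(y | x) as the sum over t of log p(y_t | y_{1:t-1}, x)."""
    return sum(math.log(p) for p in step_probs)

# Actions of the partial parse "(S (NP The cat ) (VP" and made-up
# probabilities that a model might assign to each one in context.
actions = ["(S", "(NP", "The", "cat", ")", "(VP"]
step_probs = [0.9, 0.8, 0.95, 0.9, 0.85, 0.7]

log_likelihood = sequence_log_likelihood(step_probs)
joint_probability = math.prod(step_probs)  # product of the local decisions
```

Summing local log-probabilities gives the same value as taking the log of the product of the local probabilities, which is why training can supervise one decision at a time.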

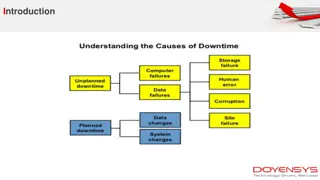

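Non-local Consequences
Loss-Evaluation Mismatch: training maximizes local log-likelihood, but evaluation uses the sequence-level cost $\Delta(y, \hat{y}) = -\mathrm{F1}(y, \hat{y})$.
Exposure Bias: training conditions on prefixes of the true parse $y$, e.g. (S (NP The cat, while at test time the parser conditions on its own prediction $\hat{y}$, e.g. (S (NP (VP.
[Tree diagrams of the true and predicted parses of "The cat took a nap ."]
[Ranzato et al. 2016; Wiseman and Rush 2016]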
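To make the cost concrete, here is a minimal sketch of labeled-bracket F1 over sets of (label, start, end) spans. The gold and predicted spans are assumptions chosen for illustration (the slide's trees are not recoverable from the transcript), and treating brackets as sets rather than multisets is a simplification of standard bracket scoring.

```python
def bracket_f1(gold, predicted):
    """F1 between two collections of (label, start, end) brackets."""
    gold, predicted = set(gold), set(predicted)
    matched = len(gold & predicted)
    if matched == 0:
        return 0.0
    precision = matched / len(predicted)
    recall = matched / len(gold)
    return 2 * precision * recall / (precision + recall)

# Tokens: The(0) cat(1) took(2) a(3) nap(4) .(5); spans are [start, end).
# Assumed gold tree: (S (NP The cat) (VP took (NP a nap)) .)
gold = {("S", 0, 6), ("NP", 0, 2), ("VP", 2, 5), ("NP", 3, 5)}
# A hypothetical wrong prediction that misplaces the VP and object NP.
predicted = {("S", 0, 6), ("NP", 0, 2), ("VP", 2, 4), ("NP", 4, 5)}

cost = -bracket_f1(gold, predicted)  # the sequence-level cost, negative F1
```

Two of the four predicted brackets match, so precision and recall are both 0.5 and the cost is -0.5. No single local decision carries this number, which is the mismatch the slide points at.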

Dynamic Oracle Training
Explore at training time. Supervise each state with an expert policy.
True parse $y$: (S (NP The cat
Prediction $\hat{y}$ (sample, or greedy): (S (NP (VP The ... (addresses exposure bias)
Oracle $y^*$: the expert's next action in each predicted state (addresses loss mismatch)
Choose $y^*_t$ to maximize achievable F1 (typically).
$L(\theta) = \sum_t \log p(y^*_t \mid \hat{y}_{1:t-1}, x; \theta)$
[Goldberg & Nivre 2012; Ballesteros et al. 2016; inter alia]
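The training recipe on this slide can be sketched as a loop: follow the model's own actions, but score the oracle's action in every state visited. Everything below is a hypothetical toy (a three-symbol copying task with a uniform policy and a Hamming-style oracle), not a parser; `policy`, `oracle`, `step`, and `is_final` are assumed callables introduced only for this illustration.

```python
import math
import random

def dynamic_oracle_loss(policy, oracle, step, is_final, state, rng, explore=True):
    """Negative log-likelihood of oracle actions along a rollout.

    With explore=True the rollout follows actions sampled from the model,
    so the oracle is queried in states the model actually reaches.
    With explore=False the rollout follows the oracle itself.
    """
    loss = 0.0
    while not is_final(state):
        probs = policy(state)              # dict: action -> probability
        best = oracle(state)               # expert action for this state
        loss -= math.log(probs[best])
        if explore:
            actions = list(probs)
            weights = [probs[a] for a in actions]
            action = rng.choices(actions, weights=weights)[0]
        else:
            action = best
        state = step(state, action)
    return loss

# Toy task: emit TARGET one symbol at a time. Under a per-position cost,
# the best next action is TARGET[t] regardless of earlier mistakes.
TARGET = (1, 0, 1)
policy = lambda state: {0: 0.5, 1: 0.5}
oracle = lambda state: TARGET[len(state)]
step = lambda state, action: state + (action,)
is_final = lambda state: len(state) == len(TARGET)

loss = dynamic_oracle_loss(policy, oracle, step, is_final, (), random.Random(0))
```

In a real parser the oracle would depend on the predicted prefix, since earlier mistakes change which brackets are still achievable. The toy oracle ignores the prefix only because its cost decomposes by position.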

Dynamic Oracles Help!
Expert Policies / Dynamic Oracles (mostly dependency parsing): Daumé III et al., 2009; Ross et al., 2011; Choi and Palmer, 2011; Goldberg and Nivre, 2012; Chang et al., 2015; Ballesteros et al., 2016; Stern et al. 2017
PTB Constituency Parsing F1 (static oracle vs. dynamic oracle):
Coavoux and Crabbé, 2016: 88.6 vs. 89.0
Cross and Huang, 2016: 91.0 vs. 91.3
Fernández-González and Gómez-Rodríguez, 2018: 91.5 vs. 91.7

What if we don't have a dynamic oracle? Use reinforcement learning.

Reinforcement Learning Helps! (in other tasks)
Auli and Gao, 2014; Ranzato et al., 2016; Shen et al., 2016; Xu et al., 2016; Wiseman and Rush, 2016; Edunov et al. 2017
Tasks covered: machine translation, CCG parsing, and several others, including dependency parsing.

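Policy Gradient Training
Minimize expected sequence-level cost:
$R(\theta) = \sum_{\hat{y}} p(\hat{y} \mid x; \theta) \, \Delta(\hat{y}, y)$
$\nabla R(\theta) = \sum_{\hat{y}} p(\hat{y} \mid x; \theta) \, \Delta(\hat{y}, y) \, \nabla \log p(\hat{y} \mid x; \theta)$
The sum weighted by $p(\hat{y} \mid x; \theta)$ addresses exposure bias (compute by sampling).
The cost $\Delta(\hat{y}, y)$ addresses loss mismatch (compute F1).
The term $\nabla \log p(\hat{y} \mid x; \theta)$ is computed in the same way as for the true tree.
[Tree diagrams of the true parse and a prediction for "The man had an idea."]
[Williams, 1992]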
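For readers who want the intermediate step, the gradient on this slide follows from the identity $\nabla p = p \, \nabla \log p$. The sampled approximation in the last line is the standard Monte Carlo estimate with $k$ samples; the symbol $k$ and the sample index $i$ are notation assumed here, not taken from this slide.

```latex
\begin{aligned}
\nabla R(\theta)
  &= \sum_{\hat{y}} \Delta(\hat{y}, y) \, \nabla p(\hat{y} \mid x; \theta) \\
  &= \sum_{\hat{y}} p(\hat{y} \mid x; \theta) \, \Delta(\hat{y}, y) \, \nabla \log p(\hat{y} \mid x; \theta) \\
  &\approx \frac{1}{k} \sum_{i=1}^{k} \Delta(\hat{y}_i, y) \, \nabla \log p(\hat{y}_i \mid x; \theta),
  \qquad \hat{y}_i \sim p(\cdot \mid x; \theta), \\
\log p(\hat{y} \mid x; \theta)
  &= \sum_t \log p(\hat{y}_t \mid \hat{y}_{1:t-1}, x; \theta).
\end{aligned}
```

The last identity is why the gradient term costs no more than ordinary likelihood training: it is the same sum of local log-probabilities, evaluated on a sampled tree instead of the true one.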

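Policy Gradient Training
$\nabla R(\theta) = \sum_{\hat{y}} p(\hat{y} \mid x; \theta) \, \Delta(\hat{y}, y) \, \nabla \log p(\hat{y} \mid x; \theta)$
Input $x$: The cat took a nap .
k candidates $\hat{y}$: [four candidate trees, rooted at S, S-INV, S, and S]
F1 of each candidate (the cost $\Delta(\hat{y}, y)$ is the negative F1): 89, 80, 80, 100
Gradient for each candidate: $\nabla \log p(\hat{y}_1 \mid x; \theta)$, $\nabla \log p(\hat{y}_2 \mid x; \theta)$, $\nabla \log p(\hat{y}_3 \mid x; \theta)$, $\nabla \log p(y \mid x; \theta)$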
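The numbers on this slide can be turned into a small worked example. The sketch below makes several assumptions that are not in the slides: the "policy" is a toy softmax over the four whole candidates rather than a parser that scores individual actions, each candidate counts as one sample, and the mean cost is subtracted as a baseline for variance reduction.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def risk_gradient(logits, costs, samples, baseline=0.0):
    """Sampled estimate of the risk gradient with respect to the logits.

    For a softmax policy, d log p_i / d logit_j = [i == j] - p_j.
    """
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for i in samples:
        weight = (costs[i] - baseline) / len(samples)
        for j, p in enumerate(probs):
            grad[j] += weight * ((1.0 if i == j else 0.0) - p)
    return grad

f1_scores = [89.0, 80.0, 80.0, 100.0]       # from the slide
costs = [-f / 100.0 for f in f1_scores]     # cost is negative F1
logits = [0.0, 0.0, 0.0, 0.0]               # toy policy, initially uniform
samples = [0, 1, 2, 3]                      # pretend each was drawn once

baseline = sum(costs[i] for i in samples) / len(samples)
grad = risk_gradient(logits, costs, samples, baseline)

learning_rate = 1.0
new_logits = [z - learning_rate * g for z, g in zip(logits, grad)]
new_probs = softmax(new_logits)
```

After one descent step the F1-100 candidate gains the most probability, the F1-89 candidate gains a little, and the two F1-80 candidates lose probability equally. That is the intended effect of weighting each candidate's log-probability gradient by its cost.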

Setup
Parsers: Span-Based [Cross & Huang, 2016]; Top-Down [Stern et al. 2017]; RNNG [Dyer et al. 2016]; In-Order [Liu and Zhang, 2017]
Training: static oracle; dynamic oracle; policy gradient
Each parser is combined with each training method.

English PTB F1
[Bar chart: F1 (axis from 90 to 93) for static oracle, policy gradient, and dynamic oracle training on the Span-Based, Top-Down, RNNG-128, RNNG-256, and In-Order parsers.]

Training Efficiency
[Line chart: PTB learning curves for the Top-Down parser. Development F1 (axis from 89 to 92) against training epoch (5 to 45) for static oracle, dynamic oracle, and policy gradient.]

French Treebank F1
[Bar chart: F1 (axis from 80 to 84) for static oracle, policy gradient, and dynamic oracle training on the Span-Based, Top-Down, RNNG-128, RNNG-256, and In-Order parsers.]

Chinese Penn Treebank v5.1 F1
[Bar chart: F1 (axis from 83 to 88) for static oracle, policy gradient, and dynamic oracle training on the Span-Based, Top-Down, RNNG-128, RNNG-256, and In-Order parsers.]

Conclusions
Local decisions can have non-local consequences: loss mismatch and exposure bias.
How to deal with the issues caused by local decisions?
Dynamic oracles: efficient, model specific.
Policy gradient: slower to train, but general purpose.

For Comparison: A Novel Oracle for RNNG
1. Close the current constituent if it's a true constituent or it could never be a true constituent.
2. Otherwise, open the outermost unopened true constituent at this position.
3. Otherwise, shift the next word.
[Each rule is illustrated on the slide with a partial parse beginning (S (NP The man ...]
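One possible reading of these three rules is sketched below. It is an interpretation under stated assumptions, not the authors' implementation: constituents are (label, start, end) spans, "could never be a true constituent" is taken to mean that no gold span with the same label and start ends later, an empty constituent is never closed, and parser-specific constraints (such as limits on consecutive opens, or unary chains over the same span) are ignored. The gold tree for "The man had an idea ." is assumed for illustration.

```python
def oracle_action(gold, stack, pos, n_words):
    """Pick the next RNNG action by the three rules, for any valid state.

    gold:  set of (label, start, end) true constituents, spans are [start, end)
    stack: open constituents as (label, start), outermost first
    pos:   number of words shifted so far
    """
    # Rule 1: close the current constituent if it is true, or can never be.
    if stack:
        label, start = stack[-1]
        if pos > start:  # assumption: an empty constituent cannot be closed
            is_true = (label, start, pos) in gold
            still_possible = any(
                l == label and s == start and e > pos for (l, s, e) in gold
            )
            if is_true or not still_possible:
                return ("CLOSE",)
    # Rule 2: open the outermost true constituent not yet opened here.
    pending = sorted((s for s in gold if s[1] == pos), key=lambda s: (-s[2], s[0]))
    for label, start in stack:
        if start == pos:
            for span in pending:
                if span[0] == label:
                    pending.remove(span)  # already opened at this position
                    break
    if pending:
        return ("OPEN", pending[0][0])
    # Rule 3: otherwise shift the next word.
    if pos < n_words:
        return ("SHIFT",)
    return ("CLOSE",)  # fallback for states the three rules do not cover

def follow_oracle(gold, n_words):
    """Roll out the oracle from the initial state until the root closes."""
    stack, pos, actions = [], 0, []
    while True:
        action = oracle_action(gold, stack, pos, n_words)
        actions.append(action)
        if action[0] == "OPEN":
            stack.append((action[1], pos))
        elif action[0] == "SHIFT":
            pos += 1
        else:
            stack.pop()
            if not stack:
                return actions

# Assumed gold tree: (S (NP The man) (VP had (NP an idea)) .)
gold = {("S", 0, 6), ("NP", 0, 2), ("VP", 2, 5), ("NP", 3, 5)}
gold_actions = follow_oracle(gold, 6)
# A state reached after a mistake: a VP was wrongly opened at position 0.
recovery = oracle_action(gold, [("S", 0), ("VP", 0)], 2, 6)
```

From the initial state the rollout reproduces the gold action sequence. In the mistaken state the oracle closes the spurious VP as soon as it is non-empty, because no true VP starts at position 0, which limits the damage to one extra bracket.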