Exploring Neural Quantum States and Symmetries in Quantum Mechanics

This article delves into the intricacies of anti-symmetrized neural quantum states and the application of neural networks in solving for the ground-state wave function of atomic nuclei. It discusses the setup using the Rayleigh-Ritz variational principle, neural quantum states (NQSs), variational parameters, and the optimization process. Additionally, it highlights the advantages and disadvantages of using neural networks in this context, shedding light on the challenges and benefits they bring to quantum mechanics. The article also explores symmetries in quantum mechanics, focusing on eigenstates, parity operators, and examples such as the 1D harmonic oscillator and the hydrogen atom.


Presentation Transcript

Anti-symmetrized Neural Quantum States. Javier Rozalén Sarmiento. Advisor: Arnau Rios Huguet

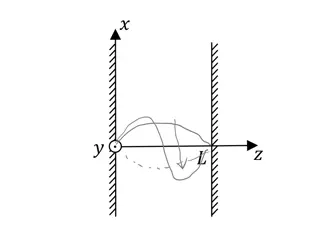

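Problem set-up

Goal: solve for the non-relativistic ground-state wave function of an atomic nucleus, $\Psi$.

Set-up: Rayleigh-Ritz variational principle,

$$E = \frac{\langle \Psi | \hat{H} | \Psi \rangle}{\langle \Psi | \Psi \rangle} \geq E_0, \qquad |\Psi\rangle = \int |p\rangle\, \Psi(p_1, p_2, \dots, p_N)\, d^{3N}p, \qquad \hat{H} = \hat{T} + \hat{V}.$$

Ansatz: a neural network, $\Psi_{\mathrm{NQS}}(p_1, p_2, \dots, p_N; \theta)$, with $\theta = \{W_i, b_i\}_{i=1}^{L}$.

[Diagram: feed-forward network mapping the input through the layers $(W_i, b_i)$ to the output $\Psi_{\mathrm{NQS}}$.]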

Neural Quantum States (NQSs)

Neural quantum state: $\Psi_{\mathrm{NQS}}(p_1, p_2, \dots, p_N; \theta)$.

Variational parameters: $\theta = \{W_i, b_i\}_{i=1}^{L}$, where $L$ is the number of layers.

Core idea: training (learning) amounts to running a numerical minimisation algorithm (the optimizer) on the loss function

$$\mathrm{Loss} = E(\theta) = \frac{\langle \Psi_{\mathrm{NQS}} | \hat{H} | \Psi_{\mathrm{NQS}} \rangle}{\langle \Psi_{\mathrm{NQS}} | \Psi_{\mathrm{NQS}} \rangle}.$$
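The variational loop above can be sketched in a few lines if the neural network is swapped for a much simpler trial function. The example below is only an illustrative stand-in, not the NQS of the talk: it assumes a one-parameter Gaussian $\psi_a(x) = e^{-a x^2/2}$ for the 1D harmonic oscillator in units $\hbar = m = \omega = 1$, and the names `energy` and `minimise`, the grid, and the learning rate are all hypothetical choices.

```python
import math

def energy(a, x_max=8.0, n=2001):
    """Rayleigh quotient <psi|H|psi>/<psi|psi> for psi(x) = exp(-a x^2 / 2).

    H = p^2/2 + x^2/2; the kinetic term is evaluated as (1/2) * integral of |psi'|^2.
    """
    dx = 2.0 * x_max / (n - 1)
    num = den = 0.0
    for k in range(n):
        x = -x_max + k * dx
        psi = math.exp(-0.5 * a * x * x)
        dpsi = -a * x * psi                      # analytic derivative of the trial function
        num += (0.5 * dpsi * dpsi + 0.5 * x * x * psi * psi) * dx
        den += psi * psi * dx
    return num / den

def minimise(a=0.3, lr=0.2, steps=200, eps=1e-5):
    """Plain gradient descent on the single variational parameter a."""
    for _ in range(steps):
        grad = (energy(a + eps) - energy(a - eps)) / (2.0 * eps)  # central difference
        a -= lr * grad
    return a, energy(a)
```

Calling `minimise()` should drive `a` towards 1 and the energy towards the exact ground-state value 1/2, since the Gaussian family happens to contain the true ground state. A neural-network ansatz replaces the single parameter `a` by the full set $\theta$ and the finite-difference gradient by automatic differentiation.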

Advantages

- Universal approximation: a neural network with enough hidden units can approximate any continuous function on a compact domain to arbitrary accuracy [1], [2].
- Space complexity: polynomial scaling of the number of weights (possibly!).
- Software with GPU integration: PyTorch, JAX, TensorFlow, and also libraries like NetKet [2]. Constants matter! (parallelization)
- "Artificial Intelligence" sounds great when asking for funding.

[1] G. Cybenko, Approximation by superpositions of a sigmoidal function, 1989

[2] K. Hornik, M. Stinchcombe, H. White, Multilayer feedforward networks are universal approximators, 1989

Disadvantages

- Black box issue: it is difficult to extract physical information from the analytical form of the neural net.
- Optimization: as the Hamiltonian becomes more complex, searching the parameter space for a good set of parameters becomes more difficult.
- Neural net architecture: what is the best architecture for a given problem?
- Providing physical information: symmetries of $\hat{H}$, boundary conditions, etc. (my current work)

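Symmetries in Quantum Mechanics

$[\hat{H}, \hat{O}] = 0 \;\Rightarrow\;$ the solution kets $\{|\psi\rangle\}$ are eigenstates of $\hat{O}$ (for degenerate levels, they can be chosen to be).

Example: 1D HO

$$\hat{H} = \frac{\hat{p}^2}{2m} + \frac{1}{2} m \omega^2 \hat{x}^2, \qquad [\hat{H}, \hat{P}] = 0,$$

where $\hat{P}$ is the parity operator. Then $\psi(-x) = \pm\psi(x)$: solutions are either parity-even or parity-odd.

Example: Hydrogen atom

$$\hat{H} = -\frac{\hbar^2}{2m}\left[\frac{1}{r^2}\frac{\partial}{\partial r}\left(r^2 \frac{\partial}{\partial r}\right) + \frac{1}{r^2 \sin\theta}\frac{\partial}{\partial\theta}\left(\sin\theta \frac{\partial}{\partial\theta}\right) + \frac{1}{r^2 \sin^2\theta}\frac{\partial^2}{\partial\varphi^2}\right] + V(r), \qquad [\hat{H}, \hat{L}^2] = [\hat{H}, \hat{L}_z] = 0,$$

$$\psi(r, \theta, \varphi) = \sum_{n=1}^{\infty} \sum_{l=0}^{n-1} \sum_{m=-l}^{+l} c_{nlm}\, R_{nl}(r)\, Y_{lm}(\theta, \varphi).$$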
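The parity statement for the 1D harmonic oscillator can be checked numerically. The sketch below assumes units $\hbar = m = \omega = 1$ and uses the standard (unnormalised) eigenfunctions $H_n(x)\, e^{-x^2/2}$; the function names and the sample point are illustrative choices.

```python
import math

def hermite(n, x):
    """Physicists' Hermite polynomial H_n(x) via H_{k+1} = 2x H_k - 2k H_{k-1}."""
    h_prev, h = 1.0, 2.0 * x
    if n == 0:
        return h_prev
    for k in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * k * h_prev
    return h

def psi(n, x):
    """Unnormalised n-th oscillator eigenfunction."""
    return hermite(n, x) * math.exp(-0.5 * x * x)

def parity(n, x=0.3):
    """Return +1 if psi_n is even and -1 if it is odd, judged at one sample point.

    The default x = 0.3 is chosen away from the zeros of H_0 ... H_5.
    """
    return round(psi(n, -x) / psi(n, x))
```

For `n = 0, 1, 2, ...` the function `parity` alternates between +1 and -1, which is the statement $\psi_n(-x) = (-1)^n \psi_n(x)$.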

Symmetries in neural networks

1D HO: naive approach (but good!)

$$\hat{H} = \frac{\hat{p}^2}{2m} + \frac{1}{2} m \omega^2 \hat{x}^2, \qquad \psi(-x) = \pm\psi(x), \qquad \Psi_{\mathrm{NQS}}(x) \propto \Psi_{\mathrm{NN}}(x) + \Psi_{\mathrm{NN}}(-x).$$

Fermionic particle exchange: common approach

Spin-statistics theorem (or just assume it):

$$\Psi_{\mathrm{NQS}}(x_1, x_2, \dots, x_N) = f_{\mathrm{EQUIV}}\, \det\left[ f_{\mathrm{GSM}}(x_1, x_2, \dots, x_N) \right] \quad [3]$$

[Diagram: network with layers $(W_i, b_i)$ and output $\Psi_{\mathrm{NN}}(x)$.]

Is this the best way? What about other symmetries (continuous groups)?

[3] J Keeble, A Rios et al, Machine learning one-dimensional spinless trapped fermionic systems with neural-network quantum states, arXiv:2304.0475
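To see why a determinant enforces fermionic exchange symmetry, the following minimal sketch builds a plain Slater determinant for three particles in 1D. The orbitals are hypothetical oscillator-like functions chosen only for illustration; this is not the equivariant architecture of [3], only the antisymmetry mechanism it relies on.

```python
import math

def det(m):
    """Determinant by Laplace expansion along the first row (fine for small N)."""
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for j, value in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * value * det(minor)
    return total

def orbitals(x):
    """Three hypothetical single-particle orbitals evaluated at position x."""
    g = math.exp(-0.5 * x * x)
    return [g, x * g, (2.0 * x * x - 1.0) * g]

def slater(xs):
    """Wave function psi(x_1, x_2, x_3) = det[phi_j(x_i)] for exactly three particles."""
    return det([orbitals(x) for x in xs])
```

Exchanging two particle positions swaps two rows of the matrix, so `slater([x2, x1, x3])` equals `-slater([x1, x2, x3])`, and the wave function vanishes whenever two positions coincide.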

Group theory

Def. (group): a group $G$ is a set of elements with a binary operation that is closed and associative, has an identity element, and gives every element an inverse.

Ex. 1: symmetries of a triangle, $D_{2n}$ with $n = 3$: $\{e, r_{120}, r_{240}, s_1, s_2, s_3\}$ ($r$: rotation, $s$: mirror).

[Figure: triangle with vertices labelled 1, 2, 3, alongside the Cayley table of the group.]
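A Cayley table for the triangle group can be generated by representing each symmetry as a permutation of the vertices. The vertex labelling, the assignment of the names `s1`, `s2`, `s3` to particular mirrors, and the "apply the right factor first" convention below are all assumptions made for this sketch, so individual entries may differ from the table on the slide by a relabelling.

```python
# Each tuple p means "vertex i is sent to vertex p[i]" for vertices 0, 1, 2.
ELEMENTS = {
    "e":    (0, 1, 2),
    "r120": (1, 2, 0),
    "r240": (2, 0, 1),
    "s1":   (0, 2, 1),  # mirror fixing vertex 0 (assumed labelling)
    "s2":   (2, 1, 0),  # mirror fixing vertex 1
    "s3":   (1, 0, 2),  # mirror fixing vertex 2
}
NAMES = {perm: name for name, perm in ELEMENTS.items()}

def compose(a, b):
    """Return the name of 'a after b' (apply b first, then a)."""
    pa, pb = ELEMENTS[a], ELEMENTS[b]
    return NAMES[tuple(pa[pb[i]] for i in range(3))]

def cayley_table():
    """Nested dict: table[a][b] is the product a * b."""
    names = list(ELEMENTS)
    return {a: {b: compose(a, b) for b in names} for a in names}
```

Every row of `cayley_table()` contains each element exactly once (closure plus invertibility), and `compose("r120", "s1")` differs from `compose("s1", "r120")`, which shows that the group is non-abelian.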

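Group theory

Def. (linear representation, informal): a linear representation of a group $G$ is a map $D: G \to GL(V)$ such that matrix multiplication is associated with the group product:

$$D \text{ rep}: \quad g_1 g_2 = g_3 \;\Rightarrow\; D(g_1)\, D(g_2) = D(g_3).$$

Example (rotation in 2D). $G = SO(2) = \{R(\theta),\ \theta \in [0, 2\pi)\}$. This is an abelian one-parameter group (only one generator).

Trivial representation:

$$D(R(\theta)) = 1,\ \theta \in [0, 2\pi), \qquad D(R(\theta))\, D(R(\phi)) = 1 \cdot 1 = 1 = D(R(\theta + \phi)).$$

Defining representation over $\mathbb{R}$ (2-dimensional representation):

$$D(R(\theta)) = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix},\ \theta \in [0, 2\pi), \qquad D(R(\theta))\, D(R(\phi)) = D(R(\theta + \phi)).$$

Defining representation over $\mathbb{C}$:

$$D(R(\theta)) = \begin{pmatrix} e^{-i\theta} & 0 \\ 0 & e^{+i\theta} \end{pmatrix},\ \theta \in [0, 2\pi), \quad \text{in the basis spanned by } e_\pm = \frac{1}{\sqrt{2}}\left(e_x \pm i e_y\right), \qquad D(R(\theta))\, D(R(\phi)) = D(R(\theta + \phi)).$$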
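The defining property of a representation, $D(g_1) D(g_2) = D(g_1 g_2)$, is easy to verify numerically for the 2D rotation matrices. The helper names below are illustrative.

```python
import math

def rot(theta):
    """Defining representation of SO(2) over the reals."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def homomorphism_error(theta, phi):
    """Largest entry of D(theta) D(phi) - D(theta + phi); zero up to rounding."""
    lhs, rhs = matmul(rot(theta), rot(phi)), rot(theta + phi)
    return max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2))
```

`homomorphism_error` stays at rounding-error level for any pair of angles, including pairs whose sum exceeds $2\pi$, because the trigonometric functions are periodic.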

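Group theory

Def. (irreducible representation, informal): a representation is irreducible if its matrices cannot be brought to a block-diagonal form with smaller blocks by a change of basis.

Example (rotation in 2D). The change of basis $e_\pm = \frac{1}{\sqrt{2}}\left(e_x \pm i e_y\right)$ block-diagonalizes the defining representation over $\mathbb{C}$:

$$\begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \;\to\; \begin{pmatrix} e^{-i\theta} & 0 \\ 0 & e^{+i\theta} \end{pmatrix}, \qquad e^{\pm i\theta} \text{ are irreps.}$$

Example (rotation in 3D). Euler parametrization over $S^2$ (sphere). Defining representation:

$$D(R(\alpha, \beta, \gamma)) = R(\alpha, \beta, \gamma) = R_3(\alpha)\, R_2(\beta)\, R_3(\gamma),$$

where

$$R_3(\alpha) = \begin{pmatrix} \cos\alpha & -\sin\alpha & 0 \\ \sin\alpha & \cos\alpha & 0 \\ 0 & 0 & 1 \end{pmatrix}, \qquad R_2(\beta) = \begin{pmatrix} \cos\beta & 0 & \sin\beta \\ 0 & 1 & 0 \\ -\sin\beta & 0 & \cos\beta \end{pmatrix}.$$

Euler parametrization over $S^2$ (sphere). Irreducible representations ($j$-th irrep of $R(\alpha, \beta, \gamma)$, the Wigner D-matrices):

$$D^{j}_{m'm}(\alpha, \beta, \gamma) = e^{-i m' \alpha}\, d^{j}_{m'm}(\beta)\, e^{-i m \gamma}, \qquad \text{where } d^{j}_{m'm}(\beta) = \langle j m' | e^{-i \beta \hat{J}_2} | j m \rangle.$$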
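The block-diagonalization of the 2D rotation matrix can be confirmed by checking that $e_\pm$ are eigenvectors with eigenvalues $e^{\mp i\theta}$. The sketch assumes the sign convention $e_\pm = (e_x \pm i e_y)/\sqrt{2}$ and a counter-clockwise rotation matrix; other conventions swap the two phases.

```python
import math

def rot(theta):
    """Counter-clockwise rotation by theta in the (e_x, e_y) basis."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

def apply(mat, vec):
    """Apply a 2x2 matrix to a 2-component (possibly complex) vector."""
    return [mat[0][0] * vec[0] + mat[0][1] * vec[1],
            mat[1][0] * vec[0] + mat[1][1] * vec[1]]

# Components of e_+ and e_- in the (e_x, e_y) basis.
E_PLUS = [1.0 / math.sqrt(2.0), 1j / math.sqrt(2.0)]
E_MINUS = [1.0 / math.sqrt(2.0), -1j / math.sqrt(2.0)]

def eigen_residual(theta, vec, phase):
    """Largest component of R(theta) vec - phase * vec; zero for an eigenvector."""
    out = apply(rot(theta), vec)
    return max(abs(out[k] - phase * vec[k]) for k in range(2))
```

With these conventions `E_PLUS` picks up the phase $e^{-i\theta}$ and `E_MINUS` the phase $e^{+i\theta}$, so each spans a one-dimensional invariant subspace, which is exactly what makes the two $1 \times 1$ blocks irreducible.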

G-CNNs are the only way

In general, we want our NN output to be equivariant under a certain group $G$ of transformations:

$$\Phi(T_g x) = T'_g\, \Phi(x),$$

where $T_g$ is the action/representation of $g \in G$ on $x \in X$, and $\Phi$ is an equivariant map (also called an intertwiner).

Group equivariance via group convolution (2016) [4] (regular representation):

$$[f \star \psi](g) = \sum_{h \in G} f(h)\, \psi(g^{-1} h).$$

Steerable CNNs (2016) [5] (any representation):

$$\rho(h)\, \Psi = \Psi\, \pi(h), \qquad \text{filter } \Psi \in \mathrm{Hom}_H(\pi, \rho), \qquad \mu = \frac{\dim\pi \, \dim\rho}{\dim \mathrm{Hom}_H(\pi, \rho)}, \qquad \dim \mathrm{Hom}_H(\pi, \rho) = \sum_i m_i n_i.$$

[4] T S Cohen and M Welling, Group Equivariant Convolutional Networks, arXiv:1602.07576

[5] T S Cohen and M Welling, Steerable CNNs, arXiv:1612.08498
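The equivariance of a group convolution can be demonstrated on the smallest non-trivial setting, a cyclic group $\mathbb{Z}_n$, where group elements are integers modulo $n$ and $g^{-1}h = (h - g) \bmod n$. This is a toy sketch under that assumption, with signals stored as plain lists; it is not the implementation of [4].

```python
def group_conv(f, psi):
    """[f * psi](g) = sum_h f(h) psi(g^{-1} h) on Z_n, with g^{-1} h = (h - g) mod n."""
    n = len(f)
    return [sum(f[h] * psi[(h - g) % n] for h in range(n)) for g in range(n)]

def translate(f, u):
    """Left translation [L_u f](h) = f(u^{-1} h) = f((h - u) mod n)."""
    n = len(f)
    return [f[(h - u) % n] for h in range(n)]

def is_equivariant(f, psi):
    """Check that convolving a translated signal equals translating the convolution."""
    n = len(f)
    return all(group_conv(translate(f, u), psi) == translate(group_conv(f, psi), u)
               for u in range(n))
```

For example, `is_equivariant([1, 4, -2, 0, 3], [2, -1, 0, 5, 1])` compares both sides for every shift `u`. Integer inputs keep the comparison exact; with floats a tolerance would be needed.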

G-CNNs are the only way

Group equivariance $\Leftrightarrow$ group convolution (2018) [6]

Question (I): can we re-think all our previous architectures to write our NQSs? (MPNNs are proved to be a special case of G-CNNs.)

Question (II): is there an advantage to designing our NQS within this framework?

[6] R Kondor and S Trivedi, On the Generalization of Equivariance and Convolution in Neural Networks to the Action of Compact Groups, arXiv:1802.03690

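Toy example (I)

$\mathbb{Z}/2\mathbb{Z}$, $Z_2 = \{e, P\}$ (parity): $\Psi_{\mathrm{NQS}}(x) \propto \Psi_{\mathrm{NN}}(x) + \Psi_{\mathrm{NN}}(-x)$.

What are the irreps of $\mathbb{Z}/2\mathbb{Z}$? In position space, the trivial and defining/sign representations are:

- Trivial representation: $D(e) = 1$, $D(P) = 1$.
- Sign representation: $D(e) = 1$, $D(P) = -1$.

We can project onto the invariant subspaces and we will find they are spanned by:

$$f_+(x) \propto f(x) + f(-x), \qquad f_-(x) \propto f(x) - f(-x).$$

- $\Psi_{\mathrm{NQS}}(x) \propto \Psi_{\mathrm{NN}}(x) + \Psi_{\mathrm{NN}}(-x)$ is for wave functions transforming with the trivial rep.
- $\Psi_{\mathrm{NQS}}(x) \propto \Psi_{\mathrm{NN}}(x) - \Psi_{\mathrm{NN}}(-x)$ is for wave functions transforming with the sign rep.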
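The two projections can be tried on any function without a definite parity. The sketch below uses a hypothetical one-hidden-layer network `psi_nn` with fixed, arbitrary weights as a stand-in for $\Psi_{\mathrm{NN}}$, and includes the conventional factor $1/2$ so that the two projections add back up to the original function.

```python
import math

def psi_nn(x):
    """Hypothetical tiny network with fixed, arbitrary weights (no definite parity)."""
    hidden = [math.tanh(0.8 * x + 0.3),
              math.tanh(-1.5 * x + 0.1),
              math.tanh(0.4 * x - 0.7)]
    return 0.6 * hidden[0] - 0.2 * hidden[1] + 1.1 * hidden[2]

def project(f, sign):
    """Project f onto the trivial (sign=+1) or sign (sign=-1) irrep of Z_2."""
    return lambda x: 0.5 * (f(x) + sign * f(-x))

psi_even = project(psi_nn, +1)   # transforms with the trivial representation
psi_odd = project(psi_nn, -1)    # transforms with the sign representation
```

By construction `psi_even(-x) == psi_even(x)` and `psi_odd(-x) == -psi_odd(x)` for every `x`, regardless of the weights, so the symmetry is built into the ansatz rather than learned.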

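Toy example (II)

$S_N$ (symmetric/permutation group), for fermionic particle exchange symmetry.

We can take the permutation/regular representation of $S_N$. For $N = 3$, the matrices $D(\sigma_1), \dots, D(\sigma_6)$ are the six $3 \times 3$ permutation matrices:

$$\begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix},\ \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix},\ \begin{pmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{pmatrix},\ \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{pmatrix},\ \begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{pmatrix},\ \begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}.$$

Using this representation/action, our G-CNN input layer looks like:

$$[f \star \psi](\sigma) = \sum_{h \in S_N} f(h)\, \psi(\sigma^{-1} h) = \sum_{i=1}^{N!} f(\sigma_i)\, \psi(\sigma^{-1} \sigma_i).$$

Pro: the number of parameters is fixed by $N$, so for few particles we need fewer parameters than other architectures.

Con: the scaling is factorial!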
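Both the permutation matrices and the factorial growth of the group can be generated directly. The sketch below assumes the convention $D(\sigma)_{ij} = 1$ if $i = \sigma(j)$, under which matrix multiplication matches "apply the right permutation first"; the function names are illustrative.

```python
from itertools import permutations

def perm_matrix(sigma):
    """D(sigma)[i][j] = 1 if i == sigma(j), else 0."""
    n = len(sigma)
    return [[1 if i == sigma[j] else 0 for j in range(n)] for i in range(n)]

def compose(sigma, tau):
    """Permutation 'sigma after tau': j -> sigma(tau(j))."""
    return tuple(sigma[tau[j]] for j in range(len(tau)))

def matmul(a, b):
    """Product of two square integer matrices stored as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def is_representation(n):
    """Check D(sigma) D(tau) == D(sigma after tau) for every pair in S_n."""
    group = list(permutations(range(n)))
    return all(matmul(perm_matrix(s), perm_matrix(t)) == perm_matrix(compose(s, t))
               for s in group for t in group)

def group_order(n):
    """Number of elements of S_n, i.e. the number of terms in the group sum."""
    return len(list(permutations(range(n))))
```

`group_order(n)` returns $n!$, so a sum over all group elements has 6 terms for three particles but 3,628,800 terms for ten, which is the factorial scaling named as the drawback above.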

Next steps and open questions

- Some people have already shown a G-CNN advantage for quantum systems [7].
- Why is the Slater determinant so good? Can we explain it from the irreps of $S_N$? Can we find something even better?
- Since the different excited states have different symmetries (they transform according to different irreps of the same group), can we predict the spectrum all at once by having different output neurons transform differently?
- In nuclear physics, the usual DOF are hadrons $\to$ spin-$\frac{1}{2}$ particles $\to$ spinors. We could leverage the language of G-CNNs to encode the spinor transformation under different symmetries! Schematically, $\psi(x) \to D(g)\, \psi(g^{-1} x)$.

THANK YOU

[7] C Roth and A MacDonald, Group Convolutional Neural Networks Improve Quantum State Accuracy, arXiv:2104.05085