Computational Physics (Lecture 18)

Learn the basic structure and features of MPICH, as covered in Computational Physics Lecture 18. Understand how MPI functions are called and how programs link against the static library provided by the software package. Explore the functionality P4 provides and how it supports parallel computer systems. Discover the concept of clusters in

0 views • 38 slides

Stata's Capabilities for Efficiency and Productivity Assessment

Stata offers a range of tools for frontier efficiency and productivity assessment, including nonparametric and parametric approaches, technical efficiency modeling, different orientations in DEA, productivity estimation techniques, and popular indices such as the Malmquist productivity index (MPI) and the Malmquist-Luenberger productivity index (MLPI). The software empow

4 views • 50 slides
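In the productivity literature, the MPI and MLPI named in the Stata entry above most likely refer to the Malmquist and Malmquist-Luenberger productivity indices. As a rough sketch of what a Malmquist index reports (not Stata's own implementation), the index between periods t and t+1 is the geometric mean of two distance-function ratios, and it factors into efficiency change (catching up to the frontier) and technical change (the frontier shifting). The function below assumes the four output-oriented distance values have already been estimated, for example by DEA; its name and argument layout are hypothetical.

```python
import math


def malmquist(d_t_t, d_t_t1, d_t1_t, d_t1_t1):
    """Return (M, EC, TC) from four distance-function values.

    d_a_b stands for D^a(x^b, y^b): period-b data measured against the
    period-a frontier. Output orientation is assumed, so M > 1 means growth.
    """
    # Geometric mean of the period-t and period-(t+1) Malmquist indices
    m = math.sqrt((d_t_t1 / d_t_t) * (d_t1_t1 / d_t1_t))
    # Efficiency change: movement relative to each period's own frontier
    ec = d_t1_t1 / d_t_t
    # Technical change: shift of the frontier between the two periods
    tc = math.sqrt((d_t_t1 / d_t1_t1) * (d_t_t / d_t1_t))
    return m, ec, tc
```

By construction M = EC × TC, so a productivity gain can be attributed either to a unit catching up or to the frontier itself moving.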

Evaluating PyORBIT as Unified Simulation Tool for Beam-Dynamics Modeling

PyORBIT is an open-source code developed for SNS at ORNL with features such as multiparticle tracking, space charge algorithms, and MPI integration. It addresses the shortcomings of current simulation approaches by providing a Python API for advanced data analysis and visualization. Alternative solu

2 views • 13 slides

Enhancing Healthcare Services in Malawi through the Master Patient Index (MPI)

The Master Patient Index (MPI) plays a crucial role in Malawi's healthcare system by providing a national patient identification system that improves healthcare quality and treatment accuracy. The MPI aims to issue unique patient IDs, connect with existing registries, enhance data managem

4 views • 8 slides

Crash Course in Supercomputing: Understanding Parallelism and MPI Concepts

Delve into the world of supercomputing with a crash course covering parallelism, MPI, OpenMP, and hybrid programming. Learn about dividing tasks for efficient execution, exploring parallelization strategies, and the benefits of working smarter, not harder. Discover how everyday activities, such as p

0 views • 157 slides

Innovations in Multidimensional Poverty Measures in the Dominican Republic

Explore the advancements in poverty measurement through a presentation focusing on the adoption of multidimensional poverty indices in the Dominican Republic. The session delves into various poverty measures used, including the Multidimensional Poverty Index (MPI) by UNDP, highlighting the country's

0 views • 22 slides

Proposal for National MPI using SHDS Data in Somalia

The proposal discusses the creation of a National Multidimensional Poverty Index (MPI) for Somalia using data from the Somali Health and Demographic Survey (SHDS). The SHDS, with a sample size of 16,360 households, aims to provide insights into the health and demographic characteristics of the Somal

0 views • 26 slides

Overview of Nepal MPI 2021 and Multidimensional Poverty Peer Network Meeting

The 8th Annual High-Level Meeting of the Multidimensional Poverty Peer Network (MPPN) was hosted by the Government of Chile on 4-5 October, 2021. Dr. Ram Kumar Phuyal from the Government of Nepal National Planning Commission presented at the event. The meeting discussed poverty, its measurement tech

1 views • 20 slides

Understanding libfabric: A Comprehensive Tutorial on High-Level and Low-Level Interface Design

This tutorial delves into the intricate details of libfabric, covering its high-level architecture, low-level interface design, simple ping-pong examples, and advanced MPI and SHMEM usage. Explore design guidelines, control services, communication models, and discover how libfabric supports various systems,

2 views • 143 slides

A Handbook for Building National MPIs: Practical Guidance for Ending Poverty

This handbook provides detailed practical guidance on creating a technically rigorous permanent national Multidimensional Poverty Index (MPI). Jointly developed with UNDP, it aims to accelerate progress towards the Sustainable Development Goals by offering insights from countries' experiences in des

3 views • 18 slides

Exploring Distributed Solvers for Scalable Computing in UG

This project discusses the use of distributed solvers in UG to enable multi-rank MPI-based solvers with varying sizes, addressing the need for scalable solver codes and dynamic resource allocation. It introduces the UG solver interface, revisits the Concorde solver for the traveling salesman problem (TSP), and explores run

0 views • 14 slides

Nuclear Physics Computing System Overview

Explore the Nuclear Physics Computing System at RCNP, Osaka University, featuring software, hardware, servers, interactive tools, and batch systems for research and data processing. Discover the capabilities of Intel Parallel Studio, compilers, libraries, MPI applications, and access protocols for e

0 views • 16 slides

A Deep Dive into the Pony Programming Language's Concurrency Model

The Pony programming language is designed for high-performance concurrent programming, boasting speed, ease of learning and use, data race prevention, and atomicity. It outperforms heavily optimized MPI versions in benchmarks related to random memory updates and actor creation. With an API adopted f

0 views • 33 slides

Choices in Measurement Design and National Poverty Assessment

This content discusses normative choices in measurement design, normative reasoning, relevance, usability, and the essential choices for creating an Alkire-Foster (AF) measure, along with other measurement design considerations and a purpose statement for a National MPI. It emphasizes the importance

0 views • 33 slides

Open MPI Project: Updated Version Numbering Scheme & Release Planning

Explore the Open MPI project's transition from an odd/even version numbering scheme to an A.B.C version triple, which addresses issues with feature adoption and stability. The new scheme aims to deliver new features efficiently while maintaining backward compatibility.

0 views • 36 slides
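A minimal sketch of how an A.B.C triple might be compared, assuming the usual meaning of each field in Open MPI's scheme: a change in A (major) may break backward compatibility, B (minor) adds features while staying compatible within the same major version, and C (release) carries bug fixes. The helper names are hypothetical, and pre-release suffixes such as release-candidate tags are ignored for simplicity.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int    # A: may break backward compatibility
    minor: int    # B: new features, compatible within the same major
    release: int  # C: bug fixes only


def parse(s: str) -> Version:
    """Parse a plain 'A.B.C' string (no suffixes) into a Version."""
    a, b, c = (int(part) for part in s.split("."))
    return Version(a, b, c)


def is_backward_compatible(old: str, new: str) -> bool:
    """True if `new` is expected to accept code built against `old`.

    Assumed rule: same major number, and `new` is not older than `old`.
    """
    o, n = parse(old), parse(new)
    return n.major == o.major and (n.minor, n.release) >= (o.minor, o.release)
```

Keeping compatibility tied to the major number alone lets users upgrade across minor and release versions without rebuilding, which is the stability goal the entry describes.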

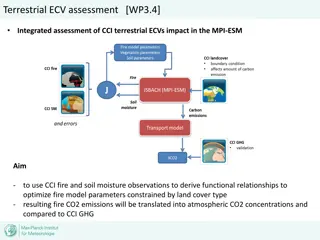

Integrated Assessment of Terrestrial ECV Impact in MPI-ESM

Utilizing CCI fire and soil moisture observations to optimize fire model parameters in MPI-ESM. The study focuses on deriving functional relationships to enhance accuracy in predicting fire CO2 emissions and their impact on atmospheric CO2 concentrations compared to CCI GHG data. JSBACH-SPITFIRE fir

0 views • 7 slides

Understanding Open MPI: A Comprehensive Overview

Open MPI is a high-performance implementation of MPI, widely used in academic, research, and industry settings. This article delves into the architecture, implementation, and usage of Open MPI, providing insights into its features, goals, and practical applications. From a high-level view to detaile

0 views • 33 slides

Threaded Construction and Fill of Tpetra Sparse Linear System Using Kokkos

Tpetra, a parallel sparse linear algebra library, offers advantages such as performance portability and the ability to solve problems with over 2 billion unknowns. Its fill process was not thread-scalable, a limitation now being addressed with the Kokkos programming model. By utilizing Kokkos data structures

0 views • 19 slides

Introduction to Message Passing Interface (MPI) in IT Center

This Information Technology Center training introduces the Message Passing Interface (MPI), which handles communication and data movement among processes. It covers MPI features, types of communication, basic MPI calls, and more. With an emphasis on MPI's role in synchronization, data mo

0 views • 29 slides

Developing MPI Programs with Domain Decomposition

Domain decomposition is a parallelization method used for developing MPI programs by partitioning the domain into portions and assigning them to different processes. Three common ways of partitioning are block, cyclic, and block-cyclic, each with its own communication requirements. Considerations fo

0 views • 19 slides
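The three partitioning schemes in the domain decomposition entry can each be written as an owner function that maps a global index to the process holding it. This is a minimal sketch assuming a 1-D domain of n cells spread over p processes; the function names are hypothetical, and giving the first n % p ranks one extra cell is one common convention, not the only one.

```python
def block_owner(i: int, n: int, p: int) -> int:
    """Owner of global index i when n cells are split into p contiguous blocks.

    The first n % p ranks receive one extra cell (assumed convention).
    """
    q, r = divmod(n, p)
    # Indices below r * (q + 1) fall in the r larger blocks of size q + 1
    if i < r * (q + 1):
        return i // (q + 1)
    # Remaining indices fall in blocks of size q
    return r + (i - r * (q + 1)) // q


def cyclic_owner(i: int, p: int) -> int:
    """Owner under a cyclic (round-robin) distribution of single cells."""
    return i % p


def block_cyclic_owner(i: int, b: int, p: int) -> int:
    """Owner when blocks of b consecutive cells are dealt out round-robin."""
    return (i // b) % p
```

With a block layout each rank's cells are contiguous, so neighbor exchanges only cross rank boundaries at block edges; cyclic and block-cyclic layouts spread uneven per-cell costs more evenly at the price of more boundaries, and hence more communication.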

Optimization Strategies for MPI-Interoperable Active Messages

The study delves into optimization strategies for MPI-interoperable active messages, focusing on data-intensive applications like graph algorithms and sequence assembly. It explores message passing models in MPI, past work on MPI-interoperable and generalized active messages, and how MPI-interoperab

0 views • 20 slides

Communication Costs in Distributed Sparse Tensor Factorization on Multi-GPU Systems

This research paper presented an evaluation of communication costs for distributed sparse tensor factorization on multi-GPU systems. It discussed the background of tensors, tensor factorization methods like CP-ALS, and communication requirements in RefacTo. The motivation highlighted the dominance o

0 views • 34 slides

Understanding Collective Communication in MPI Distributed Systems

Explore the importance of collective routines in MPI, learn about different patterns of collective communication like Scatter, Gather, Reduce, Allreduce, and more. Discover how these communication methods facilitate efficient data exchange among processes in a distributed system.

0 views • 6 slides
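To make the semantics of these collective patterns concrete without an MPI installation, the sketch below models each rank's data as one entry of a Python list and reproduces what Scatter, Gather, Reduce, and Allreduce deliver. It is a single-process illustration with hypothetical helper names, not mpi4py or the MPI C API, and it assumes the scattered data divides evenly among the ranks.

```python
import operator
from functools import reduce


def scatter(root_data, p):
    """Split the root's list into p equal chunks; chunk k goes to rank k."""
    n = len(root_data) // p
    return [root_data[k * n:(k + 1) * n] for k in range(p)]


def gather(per_rank):
    """Concatenate every rank's chunk, in rank order, at the root."""
    return [x for chunk in per_rank for x in chunk]


def reduce_to_root(per_rank_values, op=operator.add):
    """Combine one value per rank with op; only the root gets the result."""
    return reduce(op, per_rank_values)


def allreduce(per_rank_values, op=operator.add):
    """Like reduce_to_root, but every rank ends up holding the result."""
    result = reduce(op, per_rank_values)
    return [result] * len(per_rank_values)
```

Allreduce is logically a Reduce followed by a broadcast of the result, which is why it is the usual choice when every process needs a global quantity such as a residual norm.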

Enhancing Seasonal-to-Decadal Predictions in the Arctic

Daniela Matei from MPI and Noel Keenlyside from UiB aim to improve Arctic climate predictions and their connection to the Northern Hemisphere. They plan to enhance models and methodologies, assess baseline predictions, conduct coordinated experiments, explore innovative techniques for predictive ski

0 views • 6 slides

Technical Tasks and Contributions in Plasma Physics Research

This collection of reports details the technical tasks undertaken in 2022 by AMU in managing interfaces with ACH for Eiron and IMAS, as well as contributions to EIRENE_unified. It also discusses the parallelization of rate coefficient calculations and the implementation of MPI parallelisation for ef

0 views • 10 slides

SVD Cables Presentation Summary

Presentation by Markus Friedl from HEPHY Vienna on September 8, 2015, covers various aspects of SVD cables, including front-end, DOCK, FADC power supply, CDC end wall routing, electronic instrumentation overview, cable slot assignments, recent actions, and cable specifications for the sensor side an

0 views • 34 slides

Dynamic Load Balancing Library Overview

Dynamic Load Balancing Library (DLB) is a tool designed to address imbalances in computational workloads by providing fine-grain load balancing, resource management, and performance measurement modules. With an integrated yet independent structure, DLB offers APIs for user-level interactions, job sc

0 views • 27 slides

Leveraging MPI's One-Sided Communication Interface for Shared Memory Programming

This content discusses the utilization of MPI's one-sided communication interface for shared memory programming, addressing the benefits of using multi- and manycore systems, challenges in programming shared memory efficiently, the differences between MPI and OS tools, MPI-3.0 one-sided memory model

0 views • 20 slides

Challenges and Feedback from 2009 Sonoma MPI Community Sessions

Collected feedback from major commercial MPI implementations in 2009 addressing challenges such as memory registration, inadequate support for fork(), and problematic connection setup scalability. Suggestions were made to improve APIs, enhance memory registration methods, and simplify connection man

0 views • 20 slides

Government Funding Options for Kiwifruit Growers in June 2021

The Ministry for Primary Industries (MPI) provides various funding schemes and ongoing projects to support kiwifruit growers, including environmental schemes, support programs for Māori landowners, and Sustainable Food and Fibre Futures. These initiatives offer financial assistance, mediation for financia

0 views • 7 slides

Impact of High-Bandwidth Memory on MPI Communications

Exploring the impact of high-bandwidth memory on MPI communications, this study delves into the worsening of the memory wall problem at exascale and the need to leverage new memory technologies. Topics covered include intranode communication in MPICH, Intel Knights Landing memory architecture, and

0 views • 20 slides

Understanding the Multidimensional Poverty Index (MPI)

The MPI, introduced in 2010 by OPHI and UNDP, offers a comprehensive view of poverty by considering various dimensions beyond just income. Unlike traditional measures, the MPI captures deprivations in fundamental services and human functioning. It addresses the limitations of monetary poverty measur

0 views • 56 slides
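The headline Multidimensional Poverty Index is commonly computed with the Alkire-Foster method as MPI = H × A, where H is the share of people identified as poor and A is the average weighted deprivation share among them. The sketch assumes each person's weighted deprivation score has already been computed and uses the one-third poverty cutoff of the global MPI; the function name is hypothetical.

```python
from fractions import Fraction


def mpi(scores, k=Fraction(1, 3)):
    """Return (H, A, MPI) from per-person weighted deprivation scores in [0, 1].

    A person is identified as poor when their score is at least the cutoff k.
    """
    poor = [c for c in scores if c >= k]
    if not poor:
        return 0, 0, 0
    h = Fraction(len(poor), len(scores))  # incidence: headcount ratio
    a = sum(poor) / len(poor)             # intensity: mean score among the poor
    return h, a, h * a
```

For example, scores of 0, 1/6, 1/3, 1/2, and 2/3 give H = 3/5, A = 1/2, and MPI = 3/10, which equals the mean of the scores after those below the cutoff are set to zero.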

Fault-Tolerant MapReduce-MPI for HPC Clusters: Enhancing Fault Tolerance in High-Performance Computing

This research discusses the design and implementation of FT-MRMPI for HPC clusters, focusing on fault tolerance and reliability in MapReduce applications. It addresses challenges, presents the fault tolerance model, and highlights the differences in fault tolerance between MapReduce and MPI. The stu

1 views • 25 slides

Enhancing HPC Performance with Broadcom RoCE MPI Library

This project focuses on optimizing MPI communication operations using Broadcom RoCE technology for high-performance computing applications. It discusses the benefits of RoCE for HPC, the goal of highly optimized MPI for Broadcom RoCEv2, and the overview of the MVAPICH2 Project, a high-performance op

0 views • 27 slides

Understanding Message Passing Interface (MPI) Standardization

Message Passing Interface (MPI) standard is a specification guiding the development and use of message passing libraries for parallel programming. It focuses on practicality, portability, efficiency, and flexibility. MPI supports distributed memory, shared memory, and hybrid architectures, offering

0 views • 29 slides

Understanding Master Patient Index (MPI) in Healthcare Systems

Explore the significance of Master Patient Index (MPI) in healthcare settings, its role in patient management, patient identification, and linking electronic health records (EHRs). Learn about the purpose, functions, and benefits of MPI in ensuring accurate patient data and seamless healthcare opera

0 views • 16 slides

PMI: A Scalable Process Management Interface for Extreme-Scale Systems

PMI (Process-Management Interface) is a critical component for high-performance computing, enhancing scalability and performance. It allows independent development of parallel libraries like MPI, ensuring portability across different environments. The PMI system model includes various components suc

1 views • 29 slides
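PMI is typically used to let the processes of an MPI job exchange connection details through a shared key-value space: each process puts its own entries, a collective fence or barrier makes them visible, and then any process can get a peer's entry. The toy class below mimics that put/fence/get cycle inside a single process; it is hypothetical and only illustrative (in PMI-1 the corresponding calls are PMI_KVS_Put, PMI_KVS_Commit, PMI_Barrier, and PMI_KVS_Get).

```python
class ToyKVS:
    """Hypothetical in-process stand-in for a PMI-style key-value space."""

    def __init__(self):
        self.pending = {}  # rank -> {key: value}, not yet visible to others
        self.visible = {}  # entries readable by every rank after a fence

    def put(self, rank, key, value):
        """Stage an entry locally; other ranks cannot see it yet."""
        self.pending.setdefault(rank, {})[key] = value

    def fence(self):
        """Collective step: publish every rank's staged entries at once."""
        for entries in self.pending.values():
            self.visible.update(entries)
        self.pending.clear()

    def get(self, key):
        """Read a published entry; raises KeyError if it was never fenced."""
        return self.visible[key]
```

Batching puts until a single fence is what makes this pattern scale: the process manager can exchange all entries in one collective operation instead of answering many individual lookups.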

Insights into Pilot National MPI for Botswana

This document outlines the structure, dimensions, and indicators of the Pilot National Multidimensional Poverty Index (MPI) for Botswana. It provides detailed criteria for measuring deprivation in areas such as education, health, social inclusion, living standards, and more. The presentation also in

0 views • 10 slides

Fast Noncontiguous GPU Data Movement in Hybrid MPI+GPU Environments

This research focuses on enabling efficient and fast noncontiguous data movement between GPUs in hybrid MPI+GPU environments. The study explores techniques such as MPI-derived data types to facilitate noncontiguous message passing and improve communication performance in GPU-accelerated systems. By

0 views • 18 slides
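MPI derived datatypes describe a noncontiguous layout once so the library can pack and unpack it during communication. For instance, MPI_Type_vector(count, blocklength, stride, ...) describes count blocks of blocklength elements whose starts lie stride elements apart, which matches one column of a row-major matrix. The pure-Python sketch below reproduces only that CPU-side packing step, with hypothetical function names and an assumed starting offset parameter; it says nothing about how GPU-side packing is implemented in the research above.

```python
def pack_vector(buf, count, blocklength, stride, offset=0):
    """Copy `count` blocks of `blocklength` elements, spaced `stride` apart,
    into one contiguous list (the layout MPI_Type_vector describes)."""
    out = []
    for k in range(count):
        start = offset + k * stride
        out.extend(buf[start:start + blocklength])
    return out


def unpack_vector(packed, buf, count, blocklength, stride, offset=0):
    """Write a contiguous list back into the strided positions of `buf`."""
    for k in range(count):
        start = offset + k * stride
        buf[start:start + blocklength] = packed[k * blocklength:(k + 1) * blocklength]
    return buf
```

For a 3 × 4 row-major matrix stored as a flat list, column 1 corresponds to count=3, blocklength=1, stride=4, and offset=1.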

Introduction to Charm++ Programming Framework

Charm++ is a generalized approach to parallel programming that offers an alternative to traditional parallel programming models such as MPI, UPC, and GA. It emphasizes overdecomposition, migratability, and asynchrony to enhance parallel program performance and efficiency. The framework uses indexed

0 views • 43 slides