How to Resolve QuickBooks Error 1014?

Stuck with QuickBooks Error 1014? Don't panic! This error disrupts QuickBooks by overloading the company file cache. Fear not! Our guide equips you with solutions. Rebuild the cache, close unnecessary programs, or update QuickBooks. For multi-user issues, try E

0 views • 3 slides

Understanding Memory Organization in Computers

The memory unit is crucial in any digital computer for storing programs and data. It comprises main memory, auxiliary memory, and cache memory, each serving different roles in data storage and retrieval. Main memory directly communicates with the CPU, while cache memory enhances processing speed by

1 view • 37 slides

Understanding Cache and Virtual Memory in Computer Systems

A computer's memory system is crucial for ensuring fast and uninterrupted access to data by the processor. This system comprises internal processor memories, primary memory, and secondary memory such as hard drives. The utilization of cache memory helps bridge the speed gap between the CPU and main

2 views • 47 slides

Understanding Shared Memory Architectures and Cache Coherence

Shared memory architectures involve multiple CPUs sharing one memory with a global address space, with challenges like the cache coherence problem. This summary delves into UMA and NUMA architectures, addressing issues like memory latency and bandwidth, as well as the bus-based UMA and NUMA shared m

0 views • 27 slides

Understanding Cache Memory in Computer Architecture

Cache memory is a crucial component in computer architecture that aims to accelerate memory accesses by storing frequently used data closer to the CPU. This faster access is achieved through SRAM-based cache, which offers much shorter cycle times compared to DRAM. Various cache mapping schemes are e

2 views • 20 slides

GPU Scheduling Strategies: Maximizing Performance with Cache-Conscious Wavefront Scheduling

Explore GPU scheduling strategies including Loose Round Robin (LRR) for maximizing performance by efficiently managing warps, Cache-Conscious Wavefront Scheduling for improved cache utilization, and Greedy-then-oldest (GTO) scheduling to enhance cache locality. Learn how these techniques optimize GP

0 views • 21 slides

Understanding Shared Memory Architectures and Cache Coherence

Shared memory architectures involve multiple CPUs accessing a common memory, leading to challenges like the cache coherence problem. This article delves into different types of shared memory architectures, such as UMA and NUMA, and explores the cache coherence issue and protocols. It also highlights

2 views • 27 slides

Understanding Multi-Threading Concepts in Computer Systems

Exploring topics such as cache coherence, shared memory versus message passing, synchronization primitives, cache block states, performance improvements in multiprocessors, and the Ocean Kernel procedure for solving matrices.

0 views • 22 slides

Mitigating Conflict-Based Attacks in Modern Systems

CEASER presents a solution to protect Last-Level Cache (LLC) from conflict-based cache attacks using encrypted address space and remapping techniques. By avoiding traditional table-based randomization and instead employing encryption for cache mapping, CEASER aims to provide enhanced security with n

1 view • 21 slides

Amoeba Cache: Adaptive Blocks for Memory Hierarchy Optimization

The Amoeba Cache introduces adaptive blocks to optimize memory hierarchy utilization, eliminating waste by dynamically adjusting storage allocations. Factors influencing cache efficiency and application-specific behaviors are explored. Images and data distributions illustrate the effectiveness of th

0 views • 57 slides

Understanding Cache Memory Designs: Set vs Fully Associative Cache

Exploring the concepts of cache memory designs through Aaron Tan's NUS Lecture #23. Covering topics such as types of cache misses, block size trade-off, set associative cache, fully associative cache, block replacement policy, and more. Dive into the nuances of cache memory optimization and understa

0 views • 42 slides

Architecting DRAM Caches for Low Latency and High Bandwidth

Addressing fundamental latency trade-offs in designing DRAM caches involves considerations such as memory stacking for improved latency and bandwidth, organizing large caches at cache-line granularity to minimize wasted space, and optimizing cache designs to reduce access latency. Challenges include

0 views • 32 slides

Compute and Storage Overview at JLab Facility

Compute nodes at JLab facility run CentOS Linux for data processing and simulations with access to various software libraries. File systems provide spaces like /group for group software, /home for user directories, and Cache for write-through caching. Additionally, there are 450TB of cache space on

0 views • 11 slides

Understanding Cache Memory Organization in Computer Systems

Exploring concepts such as set-associative cache, direct-mapped cache, fully-associative cache, and replacement policies in cache memory design. Delve into topics like generality of set-associative caches, block mapping in different cache architectures, hit rates, conflicts, and eviction strategies.

0 views • 35 slides

Adaptive Insertion Policies for High-Performance Caching

Explore the concept of adaptive insertion policies in high-performance caching systems, focusing on mitigating the issue of Dead on Arrival (DoA) lines by making simple changes to cache insertion policies. Understanding cache replacement components, victim selection, and insertion policy can signifi

0 views • 15 slides

Guide to Clearing Browser Cache for Better Online Experience

Learn how to clear the cache in Internet Explorer, Firefox, and Chrome to avoid distorted data and inaccurate information during a weekend release. Follow the simple steps provided for each browser to ensure a smooth browsing experience on BT Wholesale Online. Access the user guide via My BT Wholesale.

0 views • 8 slides

Tradeoffs in Coherent Cache Hierarchies for Accelerators

Explore the design tradeoffs and implementation details of coherent cache hierarchies for accelerators in the context of specialized hardware. The presentation covers motivation, proposed design, evaluation methods, results, and conclusions, highlighting the need for accelerators and considerations

0 views • 22 slides

Efficient Handling of Cache Miss Rate in FPGAs

This study focuses on improving cache miss rate efficiency in FPGAs through the implementation of non-blocking caches and efficient Miss Status Holding Registers (MSHRs). By tracking more outstanding misses and utilizing memory-level parallelism, this approach proves to be more cost-effective than s

0 views • 44 slides

Cache-Based Attack and Defense on ARM Platform - Doctoral Dissertation Thesis Defense

Recent research efforts have focused on securing ARM platforms due to their prevalence in the market. The study delves into cache-based security threats and defenses on ARM architecture, emphasizing the risks posed by side-channel attacks on the Last-Level Cache. It discusses the effectiveness of si

0 views • 44 slides

Defending Against Cache-Based Side-Channel Attacks

The content discusses strategies to mitigate cache-based side-channel attacks, focusing on the importance of constant-time programming to avoid timing vulnerabilities. It covers topics such as microarchitectural attacks, cache structure, Prime+Probe attack, and the Bernstein attack on AES. Through d

0 views • 25 slides

Cache Attack on BLISS Lattice-Based Signature Scheme

Public-key cryptography, including the BLISS lattice-based signature scheme, is pervasive in digital security, from code signing to online communication. The looming threat of scalable quantum computers has led to the development of post-quantum cryptography, such as lattice-based cryptography, whic

0 views • 13 slides

Efficient Cache Management using The Dirty-Block Index

The Dirty-Block Index (DBI) is a solution to address inefficiencies in caches by removing dirty bits from cache tag stores, improving query response efficiency, and enabling various optimizations like DRAM-aware writeback. Its implementation leads to significant performance gains and cache area redu

0 views • 44 slides

Improving Cache Performance Through Read-Write Disparity

This study explores how exploiting the difference between read and write requests can enhance cache performance by prioritizing read over write operations. By dynamically partitioning the cache and protecting lines with more read hits, the proposed method demonstrates significant performance improve

0 views • 27 slides

Understanding Cache Memory in Computer Systems

Explore the intricate world of cache memory in computer systems through detailed explanations of how it functions, its types, and its role in enhancing system performance. Delve into the nuances of associative memory, valid and dirty bits, as well as fully associative examples to grasp the complexit

0 views • 15 slides

Understanding Caching and Virtual Memory Concepts

Exploring the fundamental concepts of caching and demand-paged virtual memory in computer systems. Topics covered include cache definitions, memory hierarchy, cache concepts for reading and writing, main points on memory management techniques, hardware address translation, demand paging process, and

1 view • 46 slides

Understanding Multiprocessors and Memory Hierarchy

Explore topics such as snooping-based coherence, synchronization, consistency, virtual memory overview, address translation, memory hierarchy properties, TLB functionality, TLB and cache access considerations, and cache indexing strategies in multiprocessor systems.

0 views • 22 slides

Understanding Cache Coherency and Multi-Core Programming

Explore the intricate world of cache coherency and multi-core programming through images and descriptions covering topics such as how cache shares data between cores, maintaining data consistency, CPU architecture, memory caching, MESI protocol, and interconnect bus communication.

0 views • 97 slides

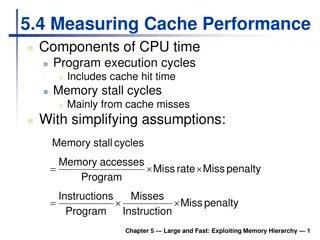

Understanding Cache Performance Components and Memory Hierarchy

Exploring cache performance components, such as hit time and memory stall cycles, is crucial for evaluating system performance. By analyzing factors like miss rates and penalties, one can optimize CPU efficiency and reduce memory stalls. Associative caches offer flexible options for organizing data

0 views • 22 slides

Understanding Web Caching: An Overview

Web caching, implemented through various types of caches like browser cache, proxy cache, and gateway cache, plays a crucial role in improving content availability, reducing network congestion, and enhancing user experience by saving bandwidth and decreasing latency. It addresses the challenges pose

0 views • 27 slides

Trace-Driven Cache Simulation in Advanced Computer Architecture

Trace-driven simulation is a key method for assessing memory hierarchy performance, particularly focusing on hits and misses. Dinero IV is a cache simulator used for memory reference traces without timing simulation capabilities. The tool aids in evaluating cache hit and miss results but does not ha

0 views • 13 slides

Understanding Cache Coherence in Computer Architecture

Exploring the concept of cache coherence in computer architecture, this content delves into the challenges and solutions associated with maintaining consistency among multiple caches in modern systems. It discusses the importance of coherence in shared memory systems and the use of cache-coherent me

0 views • 24 slides

Targeted Deanonymization via the Cache Side Channel: Attacks and Defenses

This presentation by Abdusamatov Somon explores targeted deanonymization through cache side-channel attacks, focusing on leaky resource attacks and cache-based side-channel attacks. It discusses the motivation behind these attacks, methods employed, potential defenses, and the evaluation of such att

0 views • 16 slides

Revisiting Complexity of Hardware Cache Coherence in Computer Science

Today's shared memory systems face increasing complexity in cache coherence protocol implementations, posing significant challenges in verification and optimization. This study re-evaluates the complexities of existing protocols like MESI and introduces an alternative approach called DeNovo, focusin

0 views • 18 slides

Clearing Browser Cache and Cookies: Google Chrome Edition

In this guide, you will learn how to clear the browser cache and cookies in Google Chrome. Follow the easy steps to ensure a smooth browsing experience. From accessing your browser settings to selecting the right options, this tutorial covers it all. Keep your browser running efficiently by regularly

0 views • 6 slides

Intelligent DRAM Cache Strategies for Bandwidth Optimization

Efficiently managing DRAM caches is crucial due to increasing memory demands and bandwidth limitations. Strategies like using DRAM as a cache, architectural considerations for large DRAM caches, and understanding replacement policies are explored in this study to enhance memory bandwidth and capacit

0 views • 23 slides

Cache Replacement Policies and Enhancements in Fall 2023 Lecture 8 by Brandon Lucia

The Fall 2023 Lecture 8 by Brandon Lucia delves into cache replacement policies and enhancements for efficient memory management. The session covers the intricacies of replacement policies such as Round Robin, discussing evictions and block prioritization within cache sets. Visual aids and examples

0 views • 60 slides

Efficient Instruction Cache Prefetching Techniques

Discussion on issues and solutions related to instruction cache prefetching, including trigger timing, next-line prefetching, I-Shadow cache, and footprint prediction. Evaluation results show improved performance with FNL methodology compared to traditional prefetching methods.

0 views • 24 slides

Maximizing Cache Hit Rate with LHD: An Overview

This presentation discusses the concept of Least Hit Density (LHD) for improving cache hit rates, focusing on the challenges and benefits of key-value caches in maximizing performance through efficient eviction policies like LRU. It emphasizes the importance of cache hit rates in enhancing web appli

0 views • 40 slides

Cooperative Cache Scrubbing for Efficient Memory Management in Multicore Systems

Cooperative Cache Scrubbing optimizes memory management in multicore systems by efficiently handling short-lived application objects and reducing unnecessary data writes to memory. By communicating semantic information to hardware caches, dead lines are scrubbed, dirty bits unset, and unnecessary fe

0 views • 40 slides

Cache Replacement Policies in Distributed Systems: Key Considerations and Challenges

Explore the critical aspects of cache replacement policies in distributed systems, including cache consistency, update propagation, eviction strategies, and working sets. Dive into the implications of different policies like LRU and discover why certain access patterns may not be efficiently handled

0 views • 22 slides