Performance Comparison of 40G NFV Environments

This study compares the performance of 40G NFV environments focusing on packet processing architectures and virtual switches. It explores host architectures, NFV related work, evaluation of combinations of PM and VM architectures with different vswitches, and the impact of packet processing architecture on performance. Various aspects such as throughput, latency, jitter, and the use of Intel and Mellanox 40GbE NICs with SR-IOV are discussed.

Presentation Transcript

A Host-based Performance Comparison of 40G NFV Environments Focusing on Packet Processing Architectures and Virtual Switches
R. Kawashima, S. Muramatsu, H. Nakayama, T. Hayashi, and H. Matsuo

Contents: Background (NFV, Related work); Packet Processing Architectures; Virtual Switches; Evaluation; Conclusion

Network Functions Virtualization (NFV): Traditional (Router, FW, EPC): HW-based; high cost, low flexibility, closed. NFV (vRouter, vFW, vEPC running in VMs): SW-based; low cost, high flexibility, open.

Host Architectures in NFV: VM: VNF on a packet processing architecture (NAPI, DPDK, Netmap). Virtual I/O: vhost-net, vhost-user. PM: vSW (Bridge, OVS, VALE, Lagopus) or pass-through, on a packet processing architecture (NAPI, DPDK, Netmap). Which architecture or vswitch should be used for NFV hosts? How much does performance differ depending on the architecture and vswitch?

Related Work: Performance of baremetal servers [1][2] (they focused on performance bottlenecks); performance of KVM and container-based virtualization [3].
[1] P. Emmerich et al., "Assessing Soft- and Hardware Bottlenecks in PC-based Packet Forwarding Systems," Proc. ICN, pp. 78-83, 2015.
[2] S. Gallenmüller et al., "Comparison of Frameworks for High-Performance Packet IO," Proc. ANCS, pp. 29-38, 2015.
[3] R. Bonafiglia et al., "Assessing the Performance of Virtualization Technologies for NFV: a Preliminary Benchmarking," Proc. EWSDN, pp. 67-72, 2015.

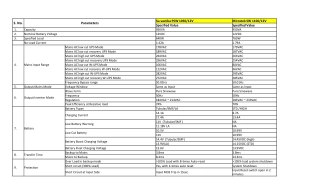

Our Evaluation: Combinations of PM and VM architectures and vswitches; throughput and latency/jitter; Intel and Mellanox 40GbE NICs; SR-IOV

Packet Processing Architecture: A way to forward packets between the NIC and applications. Design choices: interrupts or polling; single core or multiple cores; kernel space or user space; packet buffer structure; packet buffer management. The architecture has a major effect on performance!

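Three Architectures: NAPI: the vSW sits on the kernel network stack; the driver exchanges packets with the NIC via DMA, using interrupts or polling. Netmap: VALE runs in the kernel and applications use the Netmap API from user space; Rx/Tx with the NIC via DMA, using interrupts or polling. DPDK: the vSW runs in user space on the DPDK API and a PMD; Rx/Tx with the NIC via DMA, using polling.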
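The polling model can be illustrated with a toy forwarding loop. This is only a sketch under stated assumptions: the rings are plain Python deques standing in for NIC Rx/Tx rings, the names `poll_burst` and `forward_loop` are hypothetical, and the burst size of 32 is an illustrative value, not one taken from the slides. A real poll-mode driver would spin forever instead of stopping on an empty ring.

```python
from collections import deque


def poll_burst(rx_ring, burst_size=32):
    """Take up to burst_size packets from the ring without blocking."""
    burst = []
    while rx_ring and len(burst) < burst_size:
        burst.append(rx_ring.popleft())
    return burst


def forward_loop(rx_ring, tx_ring, burst_size=32, max_polls=1000):
    """Poll the Rx ring in bursts and copy each burst to the Tx ring.

    Returns (packets_forwarded, polls_performed).
    """
    forwarded = 0
    polls = 0
    while polls < max_polls:
        burst = poll_burst(rx_ring, burst_size)
        polls += 1
        if not burst:
            # Toy model only: stop on an empty ring so the example terminates.
            break
        tx_ring.extend(burst)
        forwarded += len(burst)
    return forwarded, polls


rx = deque(range(100))  # 100 dummy "packets" (hypothetical input)
tx = deque()
print(forward_loop(rx, tx))
```

Handling packets in bursts spreads the per-poll cost over many packets, which is one reason polling designs are attractive at high packet rates.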

Virtual Switch: A virtual switch bridges the host and VMs. Functions: L2 switching, (VLAN), (OpenFlow), (Tunneling), (QoS). The vSW sits between the VMs and the NICs on top of the packet processing architecture. The virtual switch has an impact on performance too!

Six Virtual Switches

| Name | Running Space | Architecture | Virtual I/O Support |
|---|---|---|---|
| Linux Bridge | Kernel | NAPI | TAP/vhost-net |
| OVS | Kernel | NAPI | TAP/vhost-net |
| VALE | Kernel | Netmap | (QEMU) |
| L2FWD-DPDK | User | DPDK | - |
| OVS-DPDK | User | DPDK | vhost-user |
| Lagopus | User | DPDK | (to be supported) |

Goals: Clarify performance characteristics of existing systems; propose appropriate NFV host environments; find a proper direction for performance improvement

Experiment 1 (Baremetal): Server 1 runs pktgen-dpdk (throughput) and OSTA (latency) and exchanges UDP traffic over 40GbE (eth1, eth2) with Server 2 (DUT), a PM running the vSwitch.

Throughput (Mellanox ConnectX-3 EN and Intel XL710): Throughputs differ depending on the NIC type. Throughputs with short packet sizes are far from wire rate.
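To put "far from wire rate" in perspective, the theoretical maximum frame rate of an Ethernet link follows from the frame size plus the fixed per-frame overhead on the wire (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap, 20 bytes in total). A minimal sketch; the function name and the chosen frame sizes are illustrative and do not come from the slides:

```python
ETHERNET_OVERHEAD_BYTES = 20  # preamble (7) + SFD (1) + inter-frame gap (12)


def wire_rate_pps(link_bps, frame_bytes, overhead_bytes=ETHERNET_OVERHEAD_BYTES):
    """Theoretical maximum frames per second for a given frame size.

    frame_bytes is the Ethernet frame size including the 4-byte FCS.
    """
    bits_on_wire = (frame_bytes + overhead_bytes) * 8
    return link_bps / bits_on_wire


LINK_40G = 40e9  # 40GbE line rate in bits per second

for size in (64, 1518):
    mpps = wire_rate_pps(LINK_40G, size) / 1e6
    print(f"{size:>5} B frames: {mpps:.2f} Mpps")
# 64 B frames come to about 59.52 Mpps, 1518 B frames to about 3.25 Mpps
```

A forwarder therefore has to handle roughly 18 times more packets per second at 64 bytes than at 1518 bytes to fill the same link, which is why short packets are the hard case.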

Latency (Mellanox ConnectX-3 EN and Intel XL710): L2FWD-DPDK and Lagopus show worse latency. Jitter values are less than 10 µs.
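The slides do not say how jitter was computed. One common definition, assumed here purely for illustration, is the mean absolute difference between consecutive latency samples. The function name and the sample values below are made up and are not measurements from this study:

```python
from statistics import mean


def jitter_us(latencies_us):
    """Mean absolute difference between consecutive latency samples (in µs).

    This is one possible jitter definition, assumed for illustration only.
    """
    if len(latencies_us) < 2:
        raise ValueError("need at least two latency samples")
    diffs = [abs(b - a) for a, b in zip(latencies_us, latencies_us[1:])]
    return mean(diffs)


samples = [20.0, 22.0, 21.0, 25.0]  # hypothetical per-packet latencies in µs
print(f"mean latency: {mean(samples):.2f} us, jitter: {jitter_us(samples):.2f} us")
```

Other definitions (for example the standard deviation of latency, or the maximum minus the minimum) would give different numbers for the same samples, so reported jitter values are only comparable when the definition matches.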

Experiment 2 (VM): Server 1 runs pktgen-dpdk (throughput) and OSTA (latency) and exchanges UDP traffic over 40GbE (eth1, eth2) with Server 2 (DUT). On Server 2, the PM runs a vSwitch connected to a VM, and the VM runs its own vSwitch on veth1 and veth2.

Throughput (Mellanox ConnectX-3 EN and Intel XL710; NAPI/vhost-net and DPDK/vhost-user): The virtualization overhead is fairly large.

Latency (Mellanox ConnectX-3 EN and Intel XL710; NAPI/vhost-net and DPDK/vhost-user): Virtualization amplifies jitter.

SR-IOV (Throughput and Latency): SR-IOV shows the best performance! However, SR-IOV lacks flexibility of flow handling.

Adequate NFV Host Environment: Criteria: throughput, latency/jitter, usability. Architecture/Virtualization: NAPI/vhost-net, DPDK/vhost-user. Virtual Switch: Linux Bridge, OVS, L2FWD-DPDK, OVS-DPDK. NIC: Intel XL710, Mellanox ConnectX-3.

Conclusion
Summary: We have evaluated NFV host environments with 40GbE. The NIC device affects performance characteristics. DPDK should be used for both the host and the guest. We cannot reach the wire rate with short packet sizes. Virtualization worsens both throughput and latency. SR-IOV showed better throughput and latency.
Future Work: Further evaluations: VALE/Netmap based virtualization; VALE and Lagopus on the VM; bidirectional traffic and large numbers of flows.