Streamlining Keep-Up Processing in Production Proposals

The production proposal by Ivan Furic of the University of Florida examines problems with the current approach to production, in which each physics group's expert produces samples in their own way (the Grab Bag Model), and sketches a keep-up-centric alternative. It covers non-uniform expertise, the loss of coordination, and a SAM-driven framework for campaign launches intended to streamline production workflows across physics groups.

- Production Processes

- Expertise Coordination

- SAM-Driven Framework

- Streamlining Workflows

- Physics Group Collaboration


Presentation Transcript

Toward a keepup-centric production proposal

Ivan Furic, University of Florida

The Grab Bag Model

- For each production, each physics group (consumer) provides an expert [in project.py]
- The expert is given dunepro k5login access
- The expert does whatever it takes to produce the sample
- Actual implementations of, and workarounds around, project.py protocols depend on the individual expertise of the physics group's expert
- Samples are not necessarily produced the same way or with the same code; each expert does their own thing

Issues that come to mind

- Widespread (and non-uniform) expertise with project.py
- Non-homogeneous outputs
- Coordination is surrendered for expediency
- Need to work on getting dunepro operators to:
  - provide advance notice
  - adhere to a plan
  - provide feedback / keep the coordinators in the loop
- In terms of training, coordinators have neither a carrot nor a stick

SAM-driven framework (1)

The campaign launch job follows the prescription for keep-up processing:

- Create a SAM project
- Launch one job per project file

Campaign worker job:

- Requests the next file from the SAM project
- ifdh copies the file to the node
- Runs the production executable on the file
- Validates the executable output
- ifdh copies the file to the output location
- Upon a successful ifdh return value, declares the output file to SAM
- Adds the output file to the SAM output definitions
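The worker steps above can be sketched as a small control loop. This is only an illustration of the ordering on the slide, not the proposal's actual code: `WorkerHooks` and `run_worker` are hypothetical names, and every hook is a placeholder callable standing in for the real SAM, ifdh, and executable calls, none of which are invoked here. Two further assumptions are labeled in the comments: the worker keeps requesting files until the project has none left, and a file that fails validation or copy-out is simply recorded and skipped.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class WorkerHooks:
    """Hypothetical stand-ins for the real SAM / ifdh / executable calls."""
    next_file: Callable[[], Optional[str]]    # ask the SAM project for the next file
    copy_in: Callable[[str], str]             # copy file to the node, return local path
    run: Callable[[str], str]                 # run production executable, return output path
    validate: Callable[[str], bool]           # check the executable output
    copy_out: Callable[[str], int]            # copy to output location, return exit status
    declare: Callable[[str], None]            # declare the output file to SAM
    add_to_definition: Callable[[str], None]  # add the output file to the output definition(s)


def run_worker(hooks: WorkerHooks) -> Tuple[List[str], List[str]]:
    """Return (declared output files, input files that failed a step)."""
    declared: List[str] = []
    failed: List[str] = []
    while True:
        # Assumption: loop until the project hands out no more files.
        name = hooks.next_file()
        if name is None:
            break
        local_path = hooks.copy_in(name)
        output = hooks.run(local_path)
        # Assumption: a failed validation or copy-out is recorded and skipped.
        if not hooks.validate(output):
            failed.append(name)
            continue
        # Declare to SAM only after the copy-out reports success (status 0).
        if hooks.copy_out(output) != 0:
            failed.append(name)
            continue
        hooks.declare(output)
        hooks.add_to_definition(output)
        declared.append(output)
    return declared, failed
```

Passing the steps in as callables keeps the loop itself short enough to read in one sitting, which fits the stated goal of code an operator can debug quickly.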

SAM-driven framework (2)

Define a POMS campaign with a unique name, e.g. dune_mcc_10_pd_sp_reco

Corresponding SAM dataset definitions:

- input definition: Dune_mcc_10_pd_sp_reco_input
- output definition(s): Dune_mcc_10_pd_sp_reco_output, next_campaign_input
- problem definition(s) ??: Dune_mcc_10_pd_sp_reco_held, Dune_mcc_10_pd_sp_reco_crash, Dune_mcc_10_pd_sp_reco_debug
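The naming convention on this slide can be sketched as a helper that derives the definition names from the campaign name. The function name `campaign_definitions` and the example next-campaign name `dune_mcc_10_pd_sp_merge` are hypothetical; the suffixes are taken from the slide, where the problem definitions are still marked as an open question. The helper appends suffixes to whatever name it is given, so it produces lowercase names for the lowercase campaign name, whereas the slide capitalizes the first letter of the definitions.

```python
from typing import Dict, List, Optional

# Suffixes as listed on the slide; the problem set is marked "??" there.
PROBLEM_SUFFIXES = ("held", "crash", "debug")


def campaign_definitions(
    campaign: str, next_campaign: Optional[str] = None
) -> Dict[str, List[str]]:
    """Derive SAM dataset definition names from a campaign name."""
    outputs = [f"{campaign}_output"]
    if next_campaign is not None:
        # The output of this campaign doubles as the next campaign's input.
        outputs.append(f"{next_campaign}_input")
    return {
        "input": [f"{campaign}_input"],
        "output": outputs,
        "problem": [f"{campaign}_{suffix}" for suffix in PROBLEM_SUFFIXES],
    }


if __name__ == "__main__":
    # "dune_mcc_10_pd_sp_merge" is a made-up downstream campaign name.
    names = campaign_definitions("dune_mcc_10_pd_sp_reco", "dune_mcc_10_pd_sp_merge")
    for kind, definitions in names.items():
        print(kind, definitions)
```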

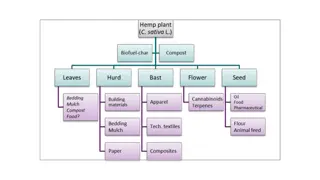

Example of a production campaign flow

- Gen+G4+Det stage: Sample 1A, Sample 1B, Sample 2, Sample 3, Sample 4
- Reco inputs: Config_A_Reco_input, Config_B_Reco_input
- Reco stage: Det Config A Reco, Det Config B Reco
- Merge inputs: Sample_1_Merge_input, Sample_2_Merge_input, Sample_3_Merge_input, Sample_4_Merge_input
- Merge+Ana stage: Sample 1 Merge+Ana, Sample 2 Merge+Ana, Sample 3 Merge+Ana, Sample 4 Merge+Ana

Types of campaigns

- Generator type: no input file, 1 or more output files
- Reco type: 1 input file -> 1 output file [currently running DC1.5]
- Merge type: multiple input files -> 1 output file
- Analysis type: 1 input file -> histogram output file [special case of reco type; histogram files require special handling]

N.B. While less efficient than mergeana as a joined type, I personally prefer the greater robustness of merge as a separate stage with a null reco path
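The input cardinalities of the four campaign types can be written down as a small check. This is a sketch under stated assumptions: `CampaignType` and `accepts_input_count` are hypothetical names, and "multiple input files" for the merge type is read strictly as two or more.

```python
from enum import Enum


class CampaignType(Enum):
    GENERATOR = "generator"  # no input file, 1 or more output files
    RECO = "reco"            # 1 input file -> 1 output file
    MERGE = "merge"          # multiple input files -> 1 output file
    ANALYSIS = "analysis"    # 1 input file -> histogram output file


def accepts_input_count(kind: CampaignType, n_inputs: int) -> bool:
    """Return True if a job of this type may consume n_inputs input files."""
    if kind is CampaignType.GENERATOR:
        return n_inputs == 0
    if kind in (CampaignType.RECO, CampaignType.ANALYSIS):
        # Analysis is treated as a special case of the reco type.
        return n_inputs == 1
    # Merge: assumption that "multiple" means at least two files.
    return n_inputs >= 2
```

For example, `accepts_input_count(CampaignType.MERGE, 1)` returns `False` under this strict reading; relaxing the last line to `n_inputs >= 1` would allow single-file merges.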

More to come

- This is a rough sketch, with lots of technical points to pin down
- DC1.5 keep-up is starting as a proof of principle for a reco type job
- Go back to bare-bones scripts that do the absolute minimum
- Build up or re-use parts of larbatch that are atomic and useful
- Important: keep the code as lean as possible [so that an operator can quickly debug what is going on at 2 am with little prior knowledge and experience]