Beyond Numerical MIXATON for Outlier Explanation on Mixed-Type Data SEKE 2022 Special Session ADPBD

This presentation delves into outlier detection in mixed-type data, exploring approaches, evaluation methods, and conclusions. Motivated by the need for detailed outlier explanations, it discusses deep learning ensemble techniques and the challenges of understanding why certain data points are flagged as outliers. The session emphasizes the importance of moving beyond traditional statistical measures and towards more exploratory and user-investigative outlier detection strategies.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Beyond numerical MIXATON for Outlier Explanation on mixed-type Data SEKE 2022 Special Session ADPBD: Agile Development Practices for Big Data Analytics Jakob Nonnenmacher and Jorge Marx G mez 05thJuly 2022 Carl von Ossietzky Universit t Oldenburg | Very Large Business Applications | Prof. Dr.-Ing. habil. Jorge Marx G mez

Agenda Introduction Approaches Evaluation Conclusion 2

Introduction Outlier Detection 2D Dataset Discount Y Offer price X 3

Introduction Outlier Detection 2D Dataset Discount Y Offer price X 4

Introduction Outlier Detection n-dimensional Dataset 5

Introduction Outlier Detection n-dimensional Dataset 6

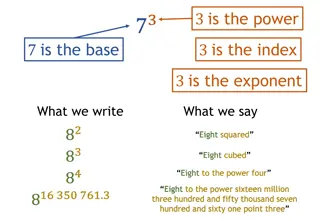

Motivation Outlier explanation Information on whether something is an outlier (label or score) - no information for why something is an outlier(Kopp, Pevn , & Hole a, 2020, p. 1; Liu, Shin, & Hu, 2018, p. 2461; Xu et al., 2021, p. 1328) Employed in a more exploratory setting, requiring the user to investigate the results (Sejr, Zimek, & Schneider-Kamp, 2020, p. 71) Statistical measures can select activities which are not seen as outliers according to the domain the detector is used in (Siddiqui et al., 2019, p. 2872; Siddiqui, Fern, Dietterich, & Wong, 2019, 4) Ensemble or deep learning approaches (IForest, Autoencoder, etc.) (Carletti, Terzi, & Susto, 2020) ? ??????( ?? = ?? ??( ??) ?=1 Deep Learning Ensemble 7

Motivation Outlier explanation Information on whether something is an outlier (label or score) - no information for why something is an outlier(Kopp, Pevn , & Hole a, 2020, p. 1; Liu, Shin, & Hu, 2018, p. 2461; Xu et al., 2021, p. 1328) Employed in a more exploratory setting, requiring the user to investigate the results (Sejr, Zimek, & Schneider-Kamp, 2020, p. 71) Statistical measures can select activities which are not seen as outliers according to the domain the detector is used in (Siddiqui et al., 2019, p. 2872; Siddiqui, Fern, Dietterich, & Wong, 2019, 4) Ensemble or deep learning approaches (IForest, Autoencoder, etc.) (Carletti, Terzi, & Susto, 2020) ? ??????( ?? = ?? ??( ??) ?=1 Deep Learning Ensemble 8

Motivation Outlier explanation Information on whether something is an outlier (label or score) - no information for why something is an outlier(Kopp, Pevn , & Hole a, 2020, p. 1; Liu, Shin, & Hu, 2018, p. 2461; Xu et al., 2021, p. 1328) Employed in a more exploratory setting, requiring the user to investigate the results (Sejr, Zimek, & Schneider-Kamp, 2020, p. 71) Statistical measures can select activities which are not seen as outliers according to the domain the detector is used in (Siddiqui et al., 2019, p. 2872; Siddiqui, Fern, Dietterich, & Wong, 2019, 4) Ensemble or deep learning approaches (IForest, Autoencoder, etc.) (Carletti, Terzi, & Susto, 2020) ? ??????( ?? = ?? ??( ??) ?=1 Deep Learning Ensemble 9

Motivation Outlier explanation Information on whether something is an outlier (label or score) - no information for why something is an outlier(Kopp, Pevn , & Hole a, 2020, p. 1; Liu, Shin, & Hu, 2018, p. 2461; Xu et al., 2021, p. 1328) Employed in a more exploratory setting, requiring the user to investigate the results (Sejr, Zimek, & Schneider-Kamp, 2020, p. 71) Statistical measures can select activities which are not seen as outliers according to the domain the detector is used in (Siddiqui et al., 2019, p. 2872; Siddiqui, Fern, Dietterich, & Wong, 2019, 4) Ensemble or deep learning approaches (IForest, Autoencoder, etc.) (Carletti, Terzi, & Susto, 2020) ? ??????( ?? = ?? ??( ??) ?=1 Deep Learning Ensemble 1 0

Motivation Outlier explanation Information on whether something is an outlier (label or score) - no information for why something is an outlier(Kopp, Pevn , & Hole a, 2020, p. 1; Liu, Shin, & Hu, 2018, p. 2461; Xu et al., 2021, p. 1328) Employed in a more exploratory setting, requiring the user to investigate the results (Sejr, Zimek, & Schneider-Kamp, 2020, p. 71) Statistical measures can select activities which are not seen as outliers according to the domain the detector is used in (Siddiqui et al., 2019, p. 2872; Siddiqui, Fern, Dietterich, & Wong, 2019, 4) Ensemble or deep learning approaches (IForest, Autoencoder, etc.) (Carletti, Terzi, & Susto, 2020) ? ??????( ?? = ?? ??( ??) ?=1 Deep Learning Ensemble 1 1

Motivation Outlier explanation Outlier explanation can tell the analyst which features to focus on in their further investigation Outlier explanation can make the application of outlier detection more useful for various areas from accounting fraud detecting to network intrusion detection Problem: Problem: Methods have only been evaluated on either numerical or categorical data so far no evaluation on mixed-type data even though mixed-type data is the kind of data commonly found in real-world datasets (Nonnenmacher, Holte & Marx G mez, 2022) For this reason, we propose and evaluate multiple outlier explanation methods for mixed-type data 1 2

Approaches 1 3

Approaches Existing approaches Existing approaches Explainer approach by Kopp et al. (2020) XGBoost in combination with SHAP Not evaluated yet 1 4

Approaches Existing approaches Existing approaches Proposed approaches Proposed approaches Explainer approach by Kopp et al. (2020) XGBoost in combination with SHAP Not evaluated yet Adapt approach ATON Adapt approach ATON (Xu et al. 2021) Apply one-hot encoding to input Add up importance of one-hot encoded features after processing MIXATON_OE_SUM Average importance of one-hot encoded features after processing MIXATON_OE_AVG Further adaptions for mixed Further adaptions for mixed- -type data Adapt neighborhood search by using Gower Distance as a mixed-type distance metric (Foss, Markatou & Ray 2019) MIXATON_GD Separate embedding layers for categorical features (Guo & Berkhahn 2016) MIXATON_EL type data 1 5

Evaluation Foundation for evaluation Adapting an approach used for the evaluation of mixed-type outlier detectors Do et al. (2018, p. 429) Using mixed-type datasets without outliers and injecting known outliers into the dataset Randomly sample 10% of the entries Randomly sample 30% of the features of each entry For numeric features shift its value by two times the feature s standard deviation For categorical or binary features replace the value with a different value of that feature This way ground-truth outlier explanations are obtained ???????????= ???????????+ 2 ??????????? ???????????= ? ????????????\ {???????????} 1 6

Evaluation Foundation for evaluation Since the synthetic outliers might not be similar to real outliers a second approach is used Using mixed-type datasets (<15 features) with outliers Determine a pseudo-ground-truth for the real outliers Create all possible feature combinations Run mixed-type outlier detectors (IForest, SPAD, Mixmad) on all feature subspaces For each outlier select the subspace in which it is ranked the highest as the ground truth This way, ground-truth explanations for real-world outliers can be obtained 1 7

Results Evaluation of approaches Results on synthetic outliers Statistically significant difference regarding R-precision 1 8

Results Evaluation of approaches Results on synthetic outliers Statistically significant difference regarding R-precision 1 9

Results Evaluation of approaches Results on real outliers Statistically significant difference regarding R-precision 2 0

Results Evaluation of approaches Results on real outliers Statistically significant difference regarding R-precision 2 1

Results Evaluation of approaches Results on synthetic outliers Average Precision Statistically significant difference regarding Average Precision 1 ) ?? = |?| ?@????(? ? ? 2 2

Results Evaluation of approaches Results on synthetic outliers Average Precision Statistically significant difference regarding Average Precision 1 ) ?? = |?| ?@????(? ? ? 2 3

Technical Experiment Evaluation of adapted approach - MIXATON Results on real outliers XGBoost SHAP 0.4468 0.5308 0.3486 0.4091 0.4067 0.3465 0.4112 0.4522 0.3438 0.3642 0.4445 0.3680 0.6389 0.6995 0.6483 0.4573 MIXATON_ OE_SUM 0.4218 0.6089 0.4694 0.3486 0.4320 0.2734 0.4440 0.4492 0.3863 0.3982 0.4498 0.3923 0.6870 0.7406 0.6705 0.4781 MIXATON_ GD 0.4162 0.5973 0.4747 0.3766 0.4285 0.3225 0.4297 0.4333 0.3858 0.4096 0.4693 0.4069 0.7124 0.7254 0.6786 0.4844 MIXATON_ OE_AVG 0.4309 0.6308 0.4577 0.4109 0.3569 0.3400 0.3894 0.4094 0.3526 0.3881 0.4526 0.3529 0.6327 0.7426 0.6554 0.4669 MIXATON_ EL 0.5271 0.6382 0.4996 0.3721 0.3961 0.2931 0.3871 0.4115 0.3460 0.3937 0.4616 0.3628 0.5444 0.6492 0.5889 0.4581 Dataset abalone_iforest abalone_mixmad abalone_spad adap_iforest adap_mixmad adap_spad credit_iforest credit_mixmad credit_spad heart_iforest heart_mixmad heart_spad mammography_iforest mammography_mixmad mammography_spad avg 0.4844 2 4

Technical Experiment Evaluation of adapted approach - MIXATON Results on real outliers XGBoost SHAP 0.4468 0.5308 0.3486 0.4091 0.4067 0.3465 0.4112 0.4522 0.3438 0.3642 0.4445 0.3680 0.6389 0.6995 0.6483 0.4573 MIXATON_ OE_SUM 0.4218 0.6089 0.4694 0.3486 0.4320 0.2734 0.4440 0.4492 0.3863 0.3982 0.4498 0.3923 0.6870 0.7406 0.6705 0.4781 MIXATON_ GD 0.4162 0.5973 0.4747 0.3766 0.4285 0.3225 0.4297 0.4333 0.3858 0.4096 0.4693 0.4069 0.7124 0.7254 0.6786 0.4844 MIXATON_ OE_AVG 0.4309 0.6308 0.4577 0.4109 0.3569 0.3400 0.3894 0.4094 0.3526 0.3881 0.4526 0.3529 0.6327 0.7426 0.6554 0.4669 MIXATON_ EL 0.5271 0.6382 0.4996 0.3721 0.3961 0.2931 0.3871 0.4115 0.3460 0.3937 0.4616 0.3628 0.5444 0.6492 0.5889 0.4581 Dataset abalone_iforest abalone_mixmad abalone_spad adap_iforest adap_mixmad adap_spad credit_iforest credit_mixmad credit_spad heart_iforest heart_mixmad heart_spad mammography_iforest mammography_mixmad mammography_spad avg 0.4844 2 5

Conclusion and Outlook Conclusion Conclusion No single approach that is always superior Presented approaches provide consistently better performance on a large variety of datasets good option for explaining outliers on mixed-type data Introduced methods can make outlier detection more useful for multiple real- world domains 2 7

Conclusion and Outlook Conclusion Conclusion Outlook Outlook No single approach that is always superior Presented approaches provide consistently better performance on a large variety of datasets good option for explaining outliers on mixed-type data Introduced methods can make outlier detection more useful for multiple real- world domains Different weighing of numerical and categorical features in methods Some are strong on certain datasets while some are strong on others possibility of ensemble explanations in the future 2 8

Conclusion and Outlook Conclusion Conclusion Outlook Outlook No single approach that is always superior Presented approaches provide consistently better performance on a large variety of datasets good option for explaining outliers on mixed-type data Introduced methods can make outlier detection more useful for multiple real- world domains Different weighing of numerical and categorical features in methods Some are strong on certain datasets while some are strong on others possibility of ensemble explanations in the future 2 9

Thank you for your kind attention. Are there any questions? Get Get in in touch touch Jakob Nonnenmacher jakob.nonnenmacher@uol.de 3 0

References Carletti, Mattia; Terzi, Matteo; Susto, Gian Antonio (2020): Interpretable Anomaly Detection with DIFFI: Depth-based Feature Importance for the Isolation Forest. Kopp, Martin; Pevn , Tom ; Hole a, Martin (2020): Anomaly explanation with random forests. In Expert Systems with Applications 149, pp. 1 18. DOI: 10.1016/j.eswa.2020.113187. Liu, Ninghao; Shin, Donghwa; Hu, Xia (2018): Contextual outlier interpretation. In : Proceedings of the 27th International Joint Conference on Artificial Intelligence. Stockholm, Sweden: AAAI Press, pp. 2461 2467. Xu, Hongzuo; Wang, Yijie; Jian, Songlei; Huang, Zhenyu; Wang, Yongjun; Liu, Ning; Li, Fei (2021): Beyond Outlier Detection: Outlier Interpretation by Attention-Guided Triplet Deviation Network. In : Proceedings of the Web Conference 2021. New York, NY, USA: Association for Computing Machinery (WWW 21), pp. 1328 1339. Available online at https://doi.org/10.1145/3442381.3449868. Sejr, J. H.; Zimek, A.; Schneider-Kamp, P. (Eds.) (2020): Explainable Detection of Zero Day Web Attacks. 2020 3rd International Conference on Data Intelligence and Security (ICDIS). 2020 3rd International Conference on Data Intelligence and Security (ICDIS). Siddiqui, M. A.; Stokes, J. W.; Seifert, C.; Argyle, E.; McCann, R.; Neil, J.; Carroll, J. (2019a): Detecting Cyber Attacks Using Anomaly Detection with Explanations and Expert Feedback. In : ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2872 2876. Siddiqui, Md Amran; Fern, Alan; Dietterich, Thomas G.; Wong, Weng-Keen (2019b): Sequential Feature Explanations for Anomaly Detection. In ACM Trans. Knowl. Discov. Data 13 (1), Article 1. DOI: 10.1145/3230666. Nonnenmacher, Holte, Marx G mez (2022), IEEE 3rd International Conference on Pattern Recognition and Machine Learning (PRML) (Forthcoming) 3 1