Implicit Citations for Sentiment Detection: Methods and Results

This study addresses the detection of implicit citations for sentiment detection through two tasks: finding a citation's zones of influence and classifying citation sentiment, supported by the construction of a context-aware citation corpus. Features for classification include n-grams, dependency triplets, and other distinctive markers. Both tasks are evaluated with an SVM under 10-fold cross-validation, with results reported as F-scores.

Detection of Implicit Citations for Sentiment Detection
Awais Athar & Simone Teufel

Context-Enhanced Citation Sentiment
- Task 1: Find zones of influence of the citation
  - O'Connor 1982 (manual, partially implemented)
  - Kaplan et al. (2009), for MDS (multi-document summarization)
  - Related to implicit citation detection (Qazvinian & Radev, 2010)
- Task 2: Citation Classification
  - Many manual annotation schemes in Content Citation Analysis
  - Nanba and Okumura (1999)
  - Athar (2011)

Corpus Construction
- Starting point: Athar's 2011 citation sentence corpus
- Select top 20 papers; treat all incoming citations to these
- 1,741 citations (from >850 papers)
- 4-class scheme: objective/neutral, positive, negative, excluded

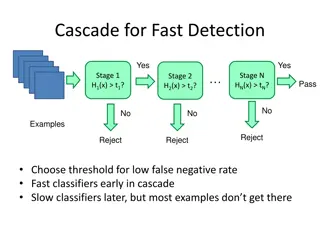

Task 1: Features for Classification
- S(i) or S(i-1) contains full formal citation (2 features)
- S(i) contains author name
- S(i) contains acronym associated with citation (METEOR, BLEU etc.)
- S(i) contains a determiner followed by a work noun ("This approach", "These techniques")

Task 1: Features (cont.)
- S(i) contains a lexical hook ("The Xerox tagger (Cutting et al. 1992)")
- S(i) starts with a third person pronoun
- S(i) starts with a connector
- S(i), S(i+1) or S(i-1) starts with a subsection heading (3 features)
- S(i) contains other citations than the one under review
- n-grams of length 1-3 (also acts as baseline)
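A few of the Task 1 features can be sketched as simple checks on sentence S(i) and its predecessor. This is a minimal illustration, not the authors' implementation: the word lists, the regular expression for a formal citation, and the function name `context_features` are all assumptions, and the lexical-hook, subsection-heading, other-citation, and n-gram features are left out for brevity.

```python
import re

# Hypothetical word lists; the slides give only a couple of examples of each.
DETERMINERS = {"this", "these", "that", "those"}
WORK_NOUNS = {"approach", "approaches", "technique", "techniques", "method", "methods"}
PRONOUNS = {"he", "she", "it", "they"}
CONNECTORS = {"however", "moreover", "although", "furthermore", "but"}


def tokens(sentence):
    """Lowercased alphabetic tokens of a sentence."""
    return re.findall(r"[A-Za-z]+", sentence.lower())


def context_features(sentences, i, author, year, acronyms=()):
    """Binary features for sentence S(i) relative to one cited paper."""
    s = sentences[i]
    prev = sentences[i - 1] if i > 0 else ""
    toks = tokens(s)
    # Assumed pattern for a formal citation: author name, then the year
    # within a few characters, e.g. "Cutting et al. (1992)".
    formal = re.compile(re.escape(author) + r".{0,20}?" + re.escape(year))
    return {
        "formal_in_s": bool(formal.search(s)),
        "formal_in_prev": bool(formal.search(prev)),
        "has_author": author.lower() in toks,
        "has_acronym": any(a in s for a in acronyms),
        "det_work_noun": any(
            a in DETERMINERS and b in WORK_NOUNS for a, b in zip(toks, toks[1:])
        ),
        "starts_pronoun": bool(toks) and toks[0] in PRONOUNS,
        "starts_connector": bool(toks) and toks[0] in CONNECTORS,
    }


example = [
    "Cutting et al. (1992) describe a tagger.",
    "This approach is fast.",
    "However, BLEU scores were low.",
]
features = context_features(example, 1, "Cutting", "1992", acronyms=("BLEU",))
```

Each feature is computed per candidate sentence, so a classifier can decide for every S(i) whether it belongs to the context of the citation under review.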

Task 1: Methods and Results
- SVM
- 10-fold cross-validation
- F-score
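The 10-fold protocol itself can be sketched without any particular classifier. The helper below is an assumption made for illustration (the slides do not say how folds were drawn or whether they were stratified): it shuffles item indices once and yields ten train/test splits in which every item is tested exactly once.

```python
import random


def k_fold_indices(n_items, k=10, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    # Fold f takes every k-th shuffled index starting at position f.
    folds = [idx[f::k] for f in range(k)]
    for f in range(k):
        test = folds[f]
        train = [j for g in range(k) if g != f for j in folds[g]]
        yield train, test


splits = list(k_fold_indices(25))
```

In each round a model (an SVM in the slides) would be trained on `train` and scored on `test`, and the F-scores are then aggregated over the ten rounds.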

Task 2: Features for Classification
- n-grams of length 1 to 3
- Dependency triplets (Athar, 2011): det_results_The, nsubj_good_results, cop_good_were
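The two feature types can be sketched as string features. The triplet format `relation_governor_dependent` is read off the slide's examples; the sentence "The results were good" and its parse are assumed here as the source of those examples, and the parse is written by hand rather than produced by a parser.

```python
def ngrams(tokens, n_min=1, n_max=3):
    """All word n-grams with n_min <= n <= n_max, joined by spaces."""
    feats = []
    for n in range(n_min, n_max + 1):
        for k in range(len(tokens) - n + 1):
            feats.append(" ".join(tokens[k:k + n]))
    return feats


def triplet_features(dependencies):
    """Turn (relation, governor, dependent) tuples into triplet strings."""
    return [f"{rel}_{gov}_{dep}" for rel, gov, dep in dependencies]


words = ["The", "results", "were", "good"]
# Hand-written dependency parse, assumed for illustration.
parse = [("det", "results", "The"), ("nsubj", "good", "results"), ("cop", "good", "were")]

ngram_feats = ngrams(words)
triplet_feats = triplet_features(parse)
```

Unlike the trigram "results were good", the triplet `nsubj_good_results` links the opinion word to its target even when other words intervene.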

Annotation Unit is the Citation
- Problem: there may be more than one sentiment per citation
- Annotation unit = citation
- Projection needed:
  - For Gold Standard: assume last sentiment is what is really meant
  - For Automatic Treatment: merge citation context into one single sentence
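Both projections can be sketched in a few lines. The label strings, the function names, and the choice to fall back to "objective" when no sentence in the context carries sentiment are assumptions made for this illustration, not details given in the slides.

```python
def gold_label(context_labels):
    """Project per-sentence labels (in text order) to one label per citation.

    Assumption: only "positive" and "negative" count as sentiment, and a
    context without either is treated as "objective".
    """
    sentiments = [lab for lab in context_labels if lab in ("positive", "negative")]
    return sentiments[-1] if sentiments else "objective"


def merge_context(sentences):
    """Join the sentences of a citation context into one string for the classifier."""
    return " ".join(s.strip() for s in sentences if s.strip())


label = gold_label(["positive", "objective", "negative"])
merged = merge_context(["A is good. ", " However, it is slow."])
```

With this projection, a citation that is praised first and criticised later counts as negative, which matches the slide's assumption that the last sentiment is the one really meant.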

Task 2: Methods and Results
- SVM
- 10-fold cross-validation
- F-score

Conclusion
- Detection of citation sentiment in context, not just citation sentence
- New, large, context-aware citation corpus
- This gives us a new truth:
  - More sentiment recovered
  - Harder to determine
- Subtask of finding citation context: MicroF=0.992; MacroF=0.75
- Overall result: MicroF=0.8; MacroF=0.68
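The gap between the micro and macro figures is typical of imbalanced classes. The sketch below computes both averages for single-label predictions; the toy labels are invented for illustration and are not the study's data.

```python
from collections import Counter


def f_scores(gold, pred):
    """Return (micro_F1, macro_F1) for single-label classification."""
    labels = sorted(set(gold) | set(pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[g] += 1  # true class gains a false negative

    def f1(t, p, n):
        denom = 2 * t + p + n
        return 2 * t / denom if denom else 0.0

    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[lab], fp[lab], fn[lab]) for lab in labels) / len(labels)
    return micro, macro


# Invented toy data: 98 "out of context" sentences and 2 "in context" ones.
gold = ["out"] * 98 + ["in"] * 2
pred = ["out"] * 99 + ["in"]
micro, macro = f_scores(gold, pred)
```

Because micro-averaging pools counts, the frequent class dominates it, while macro-averaging gives the rare class equal weight, so a single error on the rare class pulls the macro score down much further.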

Thank you! Questions?