Real-World Networking: Switch Internals and Considerations

Exploring the intricacies of switch design, this lecture delves into key considerations for efficient frame flow and minimal latency. It compares the drawbacks of a bus architecture to the advantages of a crossbar switch, highlighting the importance of output-queued switch models for optimal data movement. The challenges of backplane bandwidth scaling with port numbers are also discussed in depth, offering insights into ideal switch configurations for various networking scenarios.

Uploaded on Oct 01, 2024


Presentation Transcript

14-760: ADV. REAL-WORLD NETWORKING LECTURE 6 * SWITCH INTERNALS * SPRING 2020 * KESDEN

SWITCH GOALS
Allow a frame to flow from its input port to its output port with minimum latency, and with minimal involvement of, or interference with, stations connected to other ports.

KEY SWITCH CONSIDERATIONS
Line rate, backplane rate, memory requirements, processing requirements, power requirements, etc.

BASE CASE: BUS ARCHITECTURE (HUB)
All ports share the same backplane without any arbitration, buffering, switching, etc. It can be viewed as a switch with all ports connected at all times. This is efficient for broadcast. For unicast, significant network time is wasted, because large portions of the network carry messages that don't lead to any intended recipient. Latency comes from collision prevention and recovery. No memory is required in the switch; buffering happens on the sending and receiving devices.
[Figure: input ports and output ports attached to a shared bus.]
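To put a number on the unicast waste, here is a small Python sketch. It assumes one station per port and that the hub repeats each frame to every port except the one it arrived on; the function name `bus_unicast_waste` is illustrative, not from the lecture.

```python
def bus_unicast_waste(num_ports: int) -> float:
    """Fraction of port deliveries on a shared bus (hub) that reach a
    station other than the intended recipient, for one unicast frame.

    Assumption (illustrative): one station per port, and the frame is
    repeated to every port except the one it arrived on.
    """
    if num_ports < 2:
        raise ValueError("need at least two ports")
    deliveries = num_ports - 1   # every other port sees the frame
    useful = 1                   # only the destination port needed it
    return (deliveries - useful) / deliveries


for n in (4, 8, 24, 48):
    print(f"{n:2d} ports: {bus_unicast_waste(n):.1%} of unicast deliveries wasted")
```

As N grows, the wasted share approaches 100%. For a broadcast frame every delivery is wanted, which is why the bus is fine for broadcast but scales poorly for unicast.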

EXAMPLE BACKPLANE: CROSSBAR SWITCH
We can make things better by building a fabric of switched connections. We've already talked about this example in passing. It is great when there is no concentration of multiple inputs going to a single output. But if that happens, then what? Collision? Queueing? And this switch's complexity grows as O(N^2) with N ports. Ouch!
[Figure: crossbar connecting input ports to output ports.]
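A minimal Python sketch of the two crossbar facts above: the crosspoint count grows as N^2, and one cycle's requests can be carried without queueing only when no two inputs pick the same output. The helper names are hypothetical.

```python
from collections import Counter


def crosspoints(num_ports: int) -> int:
    """An N x N crossbar needs one switch element per (input, output) pair."""
    return num_ports * num_ports


def contended_outputs(requests: dict[int, int]) -> set[int]:
    """Given {input_port: output_port} requests for one cycle, return the
    outputs that more than one input wants at the same time.

    These are the cases a bare crossbar cannot carry: the frames must
    collide, be dropped, or be queued.
    """
    counts = Counter(requests.values())
    return {out for out, n in counts.items() if n > 1}


print(crosspoints(8), crosspoints(64))          # 64 4096
print(contended_outputs({0: 2, 1: 3, 2: 0}))    # set(): a permutation, no conflict
print(contended_outputs({0: 2, 1: 2, 2: 0}))    # {2}: inputs 0 and 1 collide
```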

IDEAL WORLD: OUTPUT QUEUED (OQ) SWITCH: BASIC MODEL
Frames arrive at an input port. They are immediately moved to the correct output port, so input ports don't need queues. They are then queued at the output port until they are drained onto the network. This queuing is necessary because multiple input ports could simultaneously receive frames destined for the same output port, so those frames must be drained over time.
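The basic OQ model can be sketched as a slot-by-slot simulation in Python. This is an illustration under simplifying assumptions (fixed-size frames, each output sends one frame per slot, unbounded output queues), not a model of any particular switch; `simulate_oq` is a hypothetical name.

```python
from collections import deque


def simulate_oq(num_ports: int, arrivals: list[list[tuple[int, str]]]) -> list[list[str]]:
    """Minimal output-queued switch, one time slot per list entry.

    arrivals[t] holds (output_port, frame_id) pairs arriving in slot t.
    Each frame moves to its output queue immediately (no input queue);
    each output then drains one frame per slot.
    Returns, per slot, the frames transmitted in that slot.
    """
    out_queues = [deque() for _ in range(num_ports)]
    sent_per_slot = []
    t = 0
    while t < len(arrivals) or any(out_queues):
        for out_port, frame in (arrivals[t] if t < len(arrivals) else []):
            out_queues[out_port].append(frame)        # straight to the output side
        sent = [q.popleft() for q in out_queues if q]  # one frame per busy output
        sent_per_slot.append(sent)
        t += 1
    return sent_per_slot


# Three inputs send to output 1 in the same slot; output 1 needs three slots
# to drain them, while a frame to output 0 leaves immediately.
print(simulate_oq(2, [[(1, "a"), (1, "b"), (1, "c"), (0, "d")]]))
# [['d', 'a'], ['b'], ['c']]
```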

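IDEAL WORLD: OUTPUT QUEUED (OQ) SWITCH: KEY CHALLENGE
If there are N ports, the backplane bandwidth must be N times the bandwidth of each port. Why? There is no input queuing, so we can't delay data movement: in the worst case all N inputs send to the same output in the same time slot, and that output must absorb all N frames at once. Is this reasonable? Every one of the N outputs needs this N-times speedup, so as the number of ports increases linearly, i.e., O(N), the total backplane speed needs to increase quadratically, i.e., O(N^2). This is starting to feel like a challenge.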
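A rough way to see the scaling is to count the buffer bandwidth each output needs. This sketch assumes the usual worst case for an OQ switch: an output buffer accepts up to N writes per slot (one per input) plus one read onto its own link, so it must run at (N+1) times the line rate. The 10 Gb/s port speed is only an example.

```python
def oq_output_memory_rate(num_ports: int, line_rate_gbps: float) -> float:
    """Worst-case write+read rate one output buffer must sustain, in Gb/s.

    All N inputs may write to the same output in one slot (N x line rate),
    and the output also reads one frame out per slot (1 x line rate).
    """
    return (num_ports + 1) * line_rate_gbps


def oq_total_memory_rate(num_ports: int, line_rate_gbps: float) -> float:
    """Summed over all N outputs: grows as O(N^2) for a fixed line rate."""
    return num_ports * oq_output_memory_rate(num_ports, line_rate_gbps)


for n in (8, 16, 32, 64):
    per_out = oq_output_memory_rate(n, 10.0)
    total = oq_total_memory_rate(n, 10.0)
    print(f"N={n:2d}: per output {per_out:6.0f} Gb/s, all outputs {total:8.0f} Gb/s")
```

Doubling the port count roughly quadruples the total, which is the quadratic growth the slide describes.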

IDEAL WORLD: OUTPUT QUEUED (OQ) SWITCH: KEY CHALLENGE
To understand whether these O(N^2) connections are possible, we've got to understand how the connections are made. Let's assume they are wires and semiconductor switches. Can we clock the backplane N times faster than the ports for a large N? Not reasonably. But let's assume that we could. We'd still have to read the data from memory and write it to memory, right? That still isn't likely to work in our favor. See the next slide.
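To make the memory concern concrete, here is a back-of-the-envelope calculation in Python with assumed numbers: minimum-size 64-byte frames (ignoring preamble and inter-frame gap), 100 Gb/s ports, and a 32-port OQ switch whose output buffer must fit N writes and 1 read into each frame time. Compare the result with the DRAM and SRAM access-time trends in the memory-latency figure.

```python
def frame_time_ns(frame_bytes: int, line_rate_gbps: float) -> float:
    """Time to serialize one frame onto a link: bits / (Gb/s) gives ns."""
    return frame_bytes * 8 / line_rate_gbps


def oq_access_budget_ns(num_ports: int, frame_bytes: int, line_rate_gbps: float) -> float:
    """Time available per memory access at one OQ output buffer, assuming
    N writes plus 1 read must fit inside one frame time."""
    return frame_time_ns(frame_bytes, line_rate_gbps) / (num_ports + 1)


# Illustrative numbers only: 64-byte frames on 100 Gb/s ports, N = 32.
print(f"{frame_time_ns(64, 100.0):.2f} ns per frame")             # 5.12 ns per frame
print(f"{oq_access_budget_ns(32, 64, 100.0):.3f} ns per access")  # 0.155 ns per access
```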

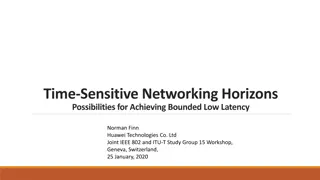

MEMORY LATENCY VS NETWORK SPEED TREND LINES: SEE ANOTHER POTENTIAL PROBLEM?
[Figure: time in ns (log scale) vs. year, 1985 to 2015, for disk seek time, SSD access time, DRAM access time, SRAM access time, CPU cycle time, and effective CPU cycle time.]
Credit: 14/15/18-213/513/600
Credit: https://www.napatech.com/history-of-ethernet-new-rules-and-the-ongoing-evolution/

INPUT QUEUED SWITCH (IQ): BASIC MODEL
Okay. We need to queue, because we could have N simultaneous inputs destined for the same port, and they can't all be sent at the same time. And we can't do the queueing on the output port, because that would require an unreasonable ratio of switch throughput to port throughput. Maybe we can just queue on the input ports, and then drain to the output ports as they become available? Seems like a good idea, r-i-g-h-t?

INPUT QUEUED SWITCH (IQ): KEY CHALLENGE
Packets arriving at an input port are not necessarily destined for the same output port. What happens if the frame at the head of the input queue can't be dequeued because its output port is congested? Head-of-line (HOL) blocking: frames destined for other, uncongested ports get stuck behind it.
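Head-of-line blocking can be reproduced with a tiny FIFO input-queue model. In this Python sketch, frames are represented only by their destination output number, a congested output is modeled as simply unavailable this slot, and inputs are served in index order; all of these are simplifications for illustration.

```python
from collections import deque


def fifo_input_slot(input_queues: list[deque], busy_outputs: set[int]) -> list[tuple[int, int]]:
    """One slot of a FIFO input-queued switch.

    Each input may only offer the frame at the head of its queue. If that
    frame's output is busy (congested), the whole input waits, even when
    frames further back are destined for idle outputs.
    Returns the (input, output) transfers made this slot.
    """
    transfers = []
    claimed = set(busy_outputs)          # outputs unavailable this slot
    for inp, q in enumerate(input_queues):
        if q and q[0] not in claimed:    # only the head is eligible
            out = q.popleft()
            claimed.add(out)
            transfers.append((inp, out))
    return transfers


# Input 0 holds frames for outputs [2, 3]; output 2 is congested.
# Output 3 is idle, but its frame is stuck behind the head of the line.
queues = [deque([2, 3])]
print(fifo_input_slot(queues, busy_outputs={2}))   # []
print(list(queues[0]))                             # [2, 3]
```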

INPUT QUEUED SWITCH (IQ): THEORETICAL RESULTS
Assuming uniform traffic, less than 60% throughput is achieved (Hluchyj & Karol, "Queuing in high-performance packet switching," IEEE Journal on Selected Areas in Communications, vol. 6, no. 9, Dec. 1988). Graph matching algorithms can, in theory, improve this to up to 100%, but at an unbearable computational complexity.
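The uniform-traffic result can be checked with a short Monte Carlo simulation in Python. The setup is an assumption modeled on the classic saturation analysis: every input always has a frame waiting, destinations are uniform and independent, and contention for an output is resolved by a random choice. For large N the estimate should settle near 2 - sqrt(2), about 0.586, the well-known large-N limit for FIFO input queueing in this model; small N gives somewhat higher values (N = 2 gives 0.75).

```python
import random


def hol_saturation_throughput(n: int, slots: int, seed: int = 1) -> float:
    """Monte Carlo estimate of FIFO input-queued switch throughput under
    saturation with uniform random destinations (modeling assumptions)."""
    rng = random.Random(seed)
    # Destination output of the head-of-line frame at each input.
    heads = [rng.randrange(n) for _ in range(n)]
    delivered = 0
    for _ in range(slots):
        # Group inputs by the output their HOL frame wants.
        contenders: dict[int, list[int]] = {}
        for inp, out in enumerate(heads):
            contenders.setdefault(out, []).append(inp)
        # Each requested output accepts exactly one frame per slot.
        for inputs in contenders.values():
            winner = rng.choice(inputs)
            delivered += 1
            # Saturation: the winner's next frame moves up with a fresh,
            # uniformly random destination; losers keep their HOL frame.
            heads[winner] = rng.randrange(n)
    return delivered / (n * slots)


for n in (2, 8, 32):
    print(n, round(hol_saturation_throughput(n, 10_000), 3))
```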

VIRTUAL OUTPUT QUEUES (VOQ)
What if we move the output queues to the input side of the fabric? In other words, what if each input queue is divided into virtual output queues, one for each output, and we eliminate the output-side queues? Now each arriving frame is queued at the input port, but in a queue specific to its output port. No more head-of-line blocking!
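A VOQ input port is just one FIFO per output instead of one FIFO overall. The Python class below is a hypothetical sketch of that data structure; the point is that the fabric can ask for a frame to any output, so a congested output no longer blocks the others.

```python
from collections import deque
from typing import Optional


class VOQInput:
    """Hypothetical input port holding one FIFO (virtual output queue)
    per output port instead of a single FIFO."""

    def __init__(self, num_ports: int) -> None:
        self.voqs = [deque() for _ in range(num_ports)]

    def enqueue(self, out_port: int, frame: str) -> None:
        # Classify on arrival: the frame joins the queue for its output.
        self.voqs[out_port].append(frame)

    def backlog(self) -> list[int]:
        """Occupancy of each VOQ, i.e., one row of the N x N request matrix."""
        return [len(q) for q in self.voqs]

    def pop_for(self, out_port: int) -> Optional[str]:
        """Give the fabric the oldest frame for out_port, if there is one."""
        q = self.voqs[out_port]
        return q.popleft() if q else None


port = VOQInput(4)
port.enqueue(2, "to-congested")
port.enqueue(3, "to-idle")
print(port.backlog())     # [0, 0, 1, 1]
# Output 2 is congested, so the scheduler serves output 3 first:
print(port.pop_for(3))    # to-idle
print(port.pop_for(2))    # to-congested
```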

VIRTUAL OUTPUT QUEUES (VOQ): AIN'T NOTHIN' FOR FREE
But we now have a lot of queues: N^2 of them. That's a lot. We now need to figure out which queue to drain to where, and when, which is O(N^2) complexity. Again, it is great if we can do it, but going from N ports to O(N^2) work may not be doable.
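The scheduling cost shows up even in the simplest scheduler. The Python sketch below is a greedy maximal matching over an N x N matrix of VOQ occupancies, chosen only for illustration; it is not one of the graph matching algorithms the theoretical results refer to, and it can pick a smaller matching than the best available.

```python
def greedy_voq_match(voq_len: list[list[int]]) -> list[tuple[int, int]]:
    """Pick (input, output) pairs to serve in one slot from an N x N matrix
    of VOQ occupancies. Each input and each output is used at most once.

    Greedy maximal matching (not maximum): it scans up to all N^2 VOQs,
    which is the O(N^2) per-slot work the slide worries about.
    """
    n = len(voq_len)
    used_outputs = set()
    matches = []
    for i in range(n):
        for j in range(n):
            if voq_len[i][j] > 0 and j not in used_outputs:
                matches.append((i, j))
                used_outputs.add(j)
                break          # input i is matched; move to the next input
    return matches


voq_len = [
    [0, 2, 1],   # input 0 has frames for outputs 1 and 2
    [0, 3, 0],   # input 1 only has frames for output 1
    [1, 0, 0],   # input 2 only has frames for output 0
]
print(greedy_voq_match(voq_len))   # [(0, 1), (2, 0)]
```

In this example the greedy pass serves 2 of 3 inputs, while the matching (0, 2), (1, 1), (2, 0) would have served all three. Finding good matchings quickly is exactly the hard part.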

COMBINED INPUT-OUTPUT QUEUE (CIOQ) SWITCHING
Have queues on the input side. Have queues on the output side. Use some scheduling algorithm to move frames from inputs to outputs. Goals: avoid head-of-line blocking, avoid running out of queue space, and avoid running out of fabric time.

COMBINED INPUT-OUTPUT QUEUE (CIOQ) TRADE-OFFS
Good: avoids head-of-line blocking; simpler than VOQs. Not so good: data is copied twice, i.e., once per buffer.

COMBINED INPUT-OUTPUT QUEUE (CIOQ) KEY THEORETICAL FINDINGS
Various algorithms are able to produce CIOQ switches that emulate OQ switches as long as they have a speedup of 2 or more. In other words, their internal fabric can move two frames per port per cycle.
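What speedup means mechanically can be sketched in Python: the fabric runs `speedup` transfer phases per external slot, while each output still sends only one frame per slot onto its link. This toy uses VOQs at the inputs and a greedy per-phase choice, both assumptions for illustration; it does not implement any of the OQ-emulating algorithms the finding refers to.

```python
from collections import deque


def make_switch(n: int):
    """VOQs at the inputs (voqs[i][j]: input i -> output j) plus one FIFO per output."""
    voqs = [[deque() for _ in range(n)] for _ in range(n)]
    out_queues = [deque() for _ in range(n)]
    return voqs, out_queues


def cioq_slot(voqs, out_queues, speedup: int) -> list:
    """One external time slot of a toy CIOQ switch.

    The fabric runs `speedup` phases; in each phase every input sends at most
    one frame and every output accepts at most one (greedy choice). Then each
    output transmits at most one frame onto its link (None means idle).
    """
    n = len(voqs)
    for _ in range(speedup):
        taken = set()                      # outputs already fed in this phase
        for i in range(n):
            for j in range(n):
                if voqs[i][j] and j not in taken:
                    out_queues[j].append(voqs[i][j].popleft())
                    taken.add(j)
                    break                  # input i has used its phase
    return [q.popleft() if q else None for q in out_queues]


def demo(speedup: int) -> list:
    voqs, out_queues = make_switch(2)
    voqs[0][0].append("a")   # input 0 -> output 0
    voqs[0][1].append("b")   # input 0 -> output 1
    voqs[1][0].append("c")   # input 1 -> output 0
    return cioq_slot(voqs, out_queues, speedup)


print(demo(1))   # ['a', None]
print(demo(2))   # ['a', 'b']
```

With speedup 1, the single greedy phase sends input 0's frame to output 0, input 1 loses the contention for output 0, and output 1 idles. A second phase per slot lets frame b reach output 1 in time, while frame c waits in output 0's queue for the next slot.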

COMBINED INPUT-OUTPUT QUEUE (CIOQ) KEY QUESTION
How should we schedule movement from inputs to outputs to avoid head-of-line blocking, avoid running out of queue space, and avoid running out of fabric time? There are all sorts of other parameters, such as fabric speed. Should priorities be implemented with separate queues?

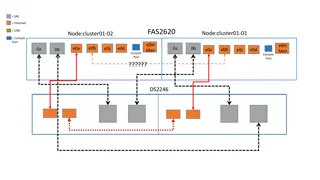

INTERNALLY BUFFERED CROSSBAR SWITCHES (IBCS)
Consider where we might put buffers in a crossbar switch: input queues, output queues, or each point of connection.
[Figure: crossbar between input ports and output ports.]

INTERNALLY BUFFERED CROSSBAR SWITCHES (IBCS): O(N^2) AGAIN?
We can buffer at each interconnect. This pairs each buffer with its own connection, so there's no bottleneck there. It serves as a VOQ of sorts with respect to head-of-line blocking. O(N^2) memory is better than O(N^2) speed, at least for modest N.
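The O(N^2) memory trade-off is easy to tabulate. This Python sketch assumes one buffer per crosspoint holding a single 2 KiB frame, an arbitrary figure chosen for illustration. Each crosspoint buffer is written by only one input and read by only one output, so it only has to run at line rate; the cost shows up as total memory instead.

```python
def ibcs_buffer_bytes(num_ports: int, frames_per_crosspoint: int, frame_bytes: int) -> int:
    """Total memory for an internally buffered crossbar with one buffer at
    every (input, output) crosspoint, i.e., N^2 buffers."""
    return num_ports * num_ports * frames_per_crosspoint * frame_bytes


# Example sizing only: one 2 KiB frame slot per crosspoint.
for n in (16, 32, 64):
    total_kib = ibcs_buffer_bytes(n, frames_per_crosspoint=1, frame_bytes=2048) // 1024
    print(f"N={n:2d}: {n * n:5d} crosspoint buffers, {total_kib:5d} KiB")
```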

INTERNALLY BUFFERED CROSSBAR SWITCHES (IBCS)
These can be combined with input buffering: HOL blocking is less likely with N places to go. Output buffering doesn't make as much sense as input buffering, because the interconnect buffers already provide an output queue.

KNOCKOUT SWITCHES
Concentrators knock out competing inputs randomly. This prevents the N-to-1 concentration possible at a crossbar switch, and allows some L < N buffers associated with each output port, vs. N for a buffered crossbar.
[Figure: input buses feeding per-output concentrators and buffers.]
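A knockout concentrator for one output can be sketched in Python as "keep at most L of the frames addressed here, drop the rest". Random selection stands in for the hardware knockout tournament, and the loss estimate assumes each of N inputs independently sends a frame to this output with probability load/N in each slot; both are modeling assumptions, not details from the slide.

```python
import math
import random


def knockout(frames: list[str], num_buffers: int, rng: random.Random) -> tuple[list[str], list[str]]:
    """One output's N-to-L concentrator for one slot: keep up to L frames
    (num_buffers) and knock out the rest. Random choice stands in for the
    hardware tournament."""
    if len(frames) <= num_buffers:
        return list(frames), []
    shuffled = list(frames)
    rng.shuffle(shuffled)
    return shuffled[:num_buffers], shuffled[num_buffers:]


def knockout_loss(n: int, num_buffers: int, load: float) -> float:
    """Expected fraction of frames dropped at one output, assuming each of
    the n inputs sends a frame here with probability load / n per slot
    (binomial arrivals)."""
    p = load / n
    expected_dropped = sum(
        (k - num_buffers) * math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(num_buffers + 1, n + 1)
    )
    return expected_dropped / load   # expected arrivals per slot = n * p = load


kept, dropped = knockout(["f0", "f1", "f2", "f3", "f4"], num_buffers=3, rng=random.Random(7))
print(len(kept), len(dropped))   # 3 2
for num_buffers in (2, 4, 8):
    print(num_buffers, f"{knockout_loss(32, num_buffers, 0.9):.2e}")
```

The loss falls off steeply as L grows, which is why a small L per output can stand in for the N buffers of a buffered crossbar.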

KEY PARAMETERS (FOR ANY OF THESE)
How large should each buffer/queue be? How should we schedule which queue gets drained, and when?

REFLECTIONS ON BUFFERING
It takes time to copy into and out of buffers. Longer queues mean the potential for greater latency and greater jitter. Of course, lower occupancy means lower latency and lower jitter, even if there is more room. Shorter buffers mean greater potential for dropped frames, and dropped frames often mean resends, with associated longer delays felt by the application. The impact of drops depends on the application. The behavior of multiple layers of buffering is very dynamic.
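The latency side of this trade-off is simple arithmetic: a frame joining a FIFO queue waits for every bit already ahead of it. This Python sketch assumes 1500-byte frames on a 10 Gb/s port purely as example numbers; the gap between the empty-queue and full-queue cases is the jitter the slide mentions.

```python
def queueing_delay_us(frames_ahead: int, frame_bytes: int, line_rate_gbps: float) -> float:
    """Wait, in microseconds, before a newly queued frame starts transmitting,
    given the number of frames already ahead of it in a FIFO queue."""
    bits_ahead = frames_ahead * frame_bytes * 8
    return bits_ahead / (line_rate_gbps * 1_000)   # 1 Gb/s = 1,000 bits per microsecond


for occupancy in (0, 10, 100):
    print(occupancy, queueing_delay_us(occupancy, 1500, 10.0), "us")
```

With these numbers, an empty queue adds no wait, 10 frames ahead add 12 microseconds, and 100 frames ahead add 120 microseconds.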

THE REAL WORLD
Buffered crossbar fabrics are really common at the top level, but they are often attached to very sophisticated line cards.

CISCO 8-PORT M1-XL I/O MODULE ARCHITECTURE
https://www.cisco.com/c/en/us/td/docs/solutions/Enterprise/Data_Center/VMDC/2-6/vmdcm1f1wp.html