Understanding BlinkDB: A Framework for Fast and Approximate Query Processing

BlinkDB is a framework built on Hive and Spark that creates and maintains offline samples for fast, approximate query processing. It provides error bars for queries executed on the same data and ensures correctness. The paper introduces innovations like sample creation techniques, error latency profile storage, and predictable Quality of Confidence Scores (QCSs). It emphasizes the importance of statistics education and discusses the advantages of using stratified sampling over uniform distribution for accurate estimations. BlinkDB's performance is impacted by joins and query constraints like error and time limits.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Authored by Sameer Agarwal, et. al. Scribed by: Shivam Upadhyay

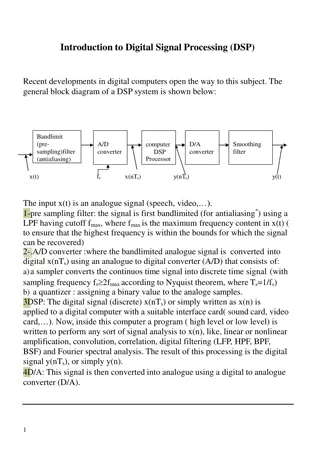

BlinkDB is a framework built on Hive and spark that- Creates and maintains variety of offline samples from underlying data Returns fast, approximate answers with error bars by executing queries on same data Verifies the correctness of the error bars that it returns at runtime Works on closed form Aggregate queries (Count, Sum, Variance etc.) Main takeaways/innovations in paper- Sample Creation, The reason why BlinkDB gives result in a Blink Sample selection, Intuitive Error Latency Profile Storage Technique, rows of sample are stored sequentially according to their order in QCS Predictable QCSs: Frequency of columns used for grouping and filtering does not change over time (Empirical evidence) For me: Take a Statistics Class, It s very important

Why Stratified ? Whats wrong with the Uniform Distribution ? Uniform sampling may miss subgroups Rare subgroups require large samples for high confidence estimates Subgroups are sufficiently represented When can we use Uniform Distribution ? When there is no filtering or grouping Will it boost the performance ? What happens to the systems performance if we implement joins ? Paper suggests that it s straight forward, given joined tables are small enough to fit in the main memory What will happen to the stratified distribution? Will it be affected ? Effect of cap Size K? If |Tx| <= K ; Standard Error can be zero If |Tx| > k ; Standard Error is inversely proportional to K

Depends on columns that appear in query's Where and/or Group By clause If it s subset of subset of stratified sample QCS , select it Else , run query in parallel on in memory subsets of all the samples currently maintained by the system. Select the one with highest selectivity Error Constraints: Makes error profile, to estimates the number of rows to satisfy the error constrain mentioned in the query Based on query selectivity , sample variance , and input data distribution by plugging them into statistical formulas Latency Profile: Makes latency profile same as error profile, reads only n which can be processed within a given time constrain What if we mention both constrains ? Error as well as Time ? How to resolve which to satisfy ?

BlinkDB is fast and with reliable evaluation No support for Joins, min , max , count distinct etc, type of queries Parameter K and M are arbitrary assigned values for optimal performance without clear explanation Optimization of optimization requires user intervention and knowledge of past queries Stability of QCS is doubtful, query sets given as empirical can be deliberate Re-computing frequency of stratified samples require user to intervene, again is vague in the paper, how to set optimal parameters ? Are BlinkDB results deterministic ? Yes with an error bond What kinds of query workloads would break BlinkDBs assumption that query column sets are predictable?

Augmenting HIVE to support queries with error and time constrain makes BlinkDB more usable Evaluation is based on real data obtained from Conviva and bench marked against TPC-H, makes result more believable Optimization of optimization, BlinkDB requires maximizing the linear program (as mentioned) for computing converge probability, since it s hard to compute exponentially with the number of columns in T, can be optimized using column sets that occurred in past queries and leaving out unrealistically large column sets. Handling the biases and providing empirical evidence for any assumption they made - Hidden Columns Novel way to escape memory barrier, for large data set Great performance on real world work loads

Induced correlation amongst queries that uses same QCS, as higher error in one will also induce higher error in any query that has overlapping subset of columns Slightly vague description for resolving the problems associated with changing workload over the period of time What if there is a high churn in data ? Offline computation will be as effective in that case ? What about the performance ?