Enhancing Distributional Similarity: Lessons from Word Embeddings

Explore how word vectors enable easy computation of similarity and relatedness, along with approaches for representing words using distributional semantics. Discover the contributions of word embeddings through novel algorithms and hyperparameters for improved performance.

- Word embeddings

- Distributional similarity

- Word vectors

- Natural language processing

- Word representation

Presentation Transcript

Improving Distributional Similarity with Lessons Learned from Word Embeddings

Omer Levy, Yoav Goldberg, Ido Dagan

Bar-Ilan University, Israel

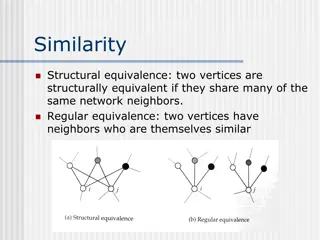

Word Similarity & Relatedness

- How similar is pizza to pasta?
- How related is pizza to Italy?
- Representing words as vectors allows easy computation of similarity
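One common way to compute similarity between word vectors is cosine similarity. The sketch below assumes toy 3-dimensional vectors invented purely for illustration (real embeddings are learned from a corpus), and `cosine_similarity` is a hypothetical helper name, not something from the talk.

```python
import numpy as np

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

# Toy vectors, made up for illustration only (assumption, not real embeddings).
vectors = {
    "pizza": np.array([0.9, 0.8, 0.1]),
    "pasta": np.array([0.8, 0.9, 0.2]),
    "Italy": np.array([0.3, 0.4, 0.9]),
}

sim_pasta = cosine_similarity(vectors["pizza"], vectors["pasta"])
sim_italy = cosine_similarity(vectors["pizza"], vectors["Italy"])
print(f"pizza~pasta: {sim_pasta:.3f}, pizza~Italy: {sim_italy:.3f}")
```

With these invented values, "pizza" scores higher against "pasta" than against "Italy"; with real embeddings the ranking depends entirely on the training data and hyperparameters.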

Approaches for Representing Words

- Distributional Semantics (Count)
  - Used since the '90s
  - Sparse word-context PMI/PPMI matrix
  - Decomposed with SVD
- Word Embeddings (Predict)
  - Inspired by deep learning
  - word2vec (Mikolov et al., 2013)
  - GloVe (Pennington et al., 2014)

Underlying Theory: The Distributional Hypothesis (Harris, '54; Firth, '57): "Similar words occur in similar contexts"
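The count-based pipeline (a PPMI matrix decomposed with SVD) can be sketched roughly as follows. This is a minimal dense illustration under stated assumptions: the co-occurrence counts are invented, the helper names `ppmi_matrix` and `svd_embeddings` are hypothetical, and scaling the left singular vectors by the singular values is just one of several possible choices. Real matrices are sparse and far larger.

```python
import numpy as np

def ppmi_matrix(counts):
    """Positive PMI from a (words x contexts) co-occurrence count matrix."""
    total = counts.sum()
    p_wc = counts / total
    p_w = p_wc.sum(axis=1, keepdims=True)   # marginal over contexts
    p_c = p_wc.sum(axis=0, keepdims=True)   # marginal over words
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log(p_wc / (p_w * p_c))
    pmi[~np.isfinite(pmi)] = 0.0             # unseen pairs are treated as 0
    return np.maximum(pmi, 0.0)              # clip negative values (the "P" in PPMI)

def svd_embeddings(matrix, dim):
    """Truncated SVD; rows of the result are dense word vectors."""
    u, s, _ = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :dim] * s[:dim]

# Invented counts: 3 words x 3 contexts (assumption for illustration).
counts = np.array([[4.0, 0.0, 1.0],
                   [3.0, 1.0, 0.0],
                   [0.0, 5.0, 2.0]])
ppmi = ppmi_matrix(counts)
word_vectors = svd_embeddings(ppmi, dim=2)
```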

Approaches for Representing Words

- Both approaches:
  - Rely on the same linguistic theory
  - Use the same data
  - Are mathematically related: "Neural Word Embedding as Implicit Matrix Factorization" (NIPS 2014)
- How come word embeddings are so much better? "Don't Count, Predict!" (Baroni et al., ACL 2014)
- More than meets the eye

The Contributions of Word Embeddings

- Novel Algorithms (objective + training method)
  - Skip Grams + Negative Sampling
  - CBOW + Hierarchical Softmax
  - Noise Contrastive Estimation
  - GloVe
- New Hyperparameters (preprocessing, smoothing, etc.)
  - Subsampling
  - Dynamic Context Windows
  - Context Distribution Smoothing
  - Adding Context Vectors

What's really improving performance?

Our Contributions

1) Identifying the existence of new hyperparameters
   - Not always mentioned in papers
2) Adapting the hyperparameters across algorithms
   - Must understand the mathematical relation between algorithms
3) Comparing algorithms across all hyperparameter settings
   - Over 5,000 experiments

Background

What is word2vec? How is it related to PMI?

What is word2vec?

- word2vec is not a single algorithm
- It is a software package for representing words as vectors, containing:
  - Two distinct models
    - CBoW
    - Skip-Gram (SG)
  - Various training methods
    - Negative Sampling (NS)
    - Hierarchical Softmax
  - A rich preprocessing pipeline
    - Dynamic Context Windows
    - Subsampling
    - Deleting Rare Words

Skip-Grams with Negative Sampling (SGNS)

Marco saw a furry little wampimuk hiding in the tree.

| words | contexts |
| --- | --- |
| wampimuk | furry |
| wampimuk | little |
| wampimuk | hiding |
| wampimuk | in |

These word-context pairs make up $D$ (the data).

"word2vec Explained" (Goldberg & Levy, arXiv 2014)
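The word-context pairs for the example sentence can be produced with a simple sliding window. This is a minimal sketch, assuming whitespace tokenization and a fixed symmetric window of 2 tokens (which yields the four contexts listed for "wampimuk"); `extract_pairs` is a hypothetical helper name.

```python
def extract_pairs(tokens, window=2):
    """Return (word, context) pairs for every token within `window` positions."""
    pairs = []
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((word, tokens[j]))
    return pairs

tokens = "Marco saw a furry little wampimuk hiding in the tree".split()
pairs = extract_pairs(tokens, window=2)
wampimuk_contexts = [c for w, c in pairs if w == "wampimuk"]
print(wampimuk_contexts)
```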

Skip-Grams with Negative Sampling (SGNS)

- SGNS finds a vector $\vec{w}$ for each word $w$ in our vocabulary $V_W$
- Each such vector has $d$ latent dimensions (e.g. $d = 100$)
- Effectively, it learns a matrix $W$ (of size $|V_W| \times d$) whose rows represent $V_W$
- Key point: it also learns a similar auxiliary matrix $C$ (of size $|V_C| \times d$) of context vectors
- In fact, each word has two embeddings:
  - $\vec{w}$:wampimuk $= (-3.1, 4.15, 9.2, -6.5, \ldots)$
  - $\vec{c}$:wampimuk $= (-5.6, 2.95, 1.4, -1.3, \ldots)$

Skip-Grams with Negative Sampling (SGNS)

- Maximize: $\sigma(\vec{w} \cdot \vec{c})$, where $c$ was observed with $w$
- Minimize: $\sigma(\vec{w} \cdot \vec{c}\,')$, where $c'$ was hallucinated with $w$

| words | contexts (observed) | contexts (hallucinated) |
| --- | --- | --- |
| wampimuk | furry | Australia |
| wampimuk | little | cyber |
| wampimuk | hiding | the |
| wampimuk | in | 1985 |
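The two goals above are usually combined into one log-likelihood term per observed pair: raise $\log \sigma(\vec{w} \cdot \vec{c})$ for the observed context and raise $\log \sigma(-\vec{w} \cdot \vec{c}\,')$ for each hallucinated one (equivalent to pushing $\sigma(\vec{w} \cdot \vec{c}\,')$ down). The sketch below assumes 2-dimensional toy vectors invented for illustration; `sgns_pair_objective` is a hypothetical name, and no training loop is shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sgns_pair_objective(w, c_observed, c_negatives):
    """Objective term for one observed (w, c) pair plus its sampled negatives."""
    positive = np.log(sigmoid(np.dot(w, c_observed)))
    # Minimizing sigma(w . c') is the same as maximizing sigma(-w . c').
    negative = sum(np.log(sigmoid(-np.dot(w, c_neg))) for c_neg in c_negatives)
    return float(positive + negative)

# Toy vectors (assumption): the observed context points the same way as w,
# the hallucinated context points the opposite way.
w = np.array([1.0, 0.0])
aligned = np.array([2.0, 0.0])
opposed = np.array([-2.0, 0.0])

good = sgns_pair_objective(w, aligned, [opposed])   # embeddings fit the data
bad = sgns_pair_objective(w, opposed, [aligned])    # embeddings contradict it
```

The objective is always negative (it is a sum of log-probabilities); training moves the vectors so that it gets closer to zero.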

Skip-Grams with Negative Sampling (SGNS)

- "Negative Sampling"
  - SGNS samples $k$ contexts $c'$ at random as negative examples
  - "Random" = unigram distribution: $P(c) = \frac{\#c}{|D|}$
  - Spoiler: Changing this distribution has a significant effect
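Drawing negative contexts from the unigram distribution might look like the following sketch. It assumes a small invented list of observed pairs standing in for $D$, and uses NumPy's `Generator.choice` for sampling; `unigram_distribution` and `sample_negatives` are hypothetical helper names.

```python
from collections import Counter

import numpy as np

def unigram_distribution(pairs):
    """P(c) = #(c) / |D|, where D is the list of observed (word, context) pairs."""
    counts = Counter(c for _, c in pairs)
    contexts = sorted(counts)
    probs = np.array([counts[c] for c in contexts], dtype=float) / len(pairs)
    return contexts, probs

def sample_negatives(contexts, probs, k, rng):
    """Draw k negative contexts c' at random according to P."""
    return [str(c) for c in rng.choice(contexts, size=k, p=probs)]

# Invented pairs for illustration (assumption, not data from the talk).
pairs = [("wampimuk", "furry"), ("wampimuk", "little"),
         ("wampimuk", "hiding"), ("wampimuk", "in"),
         ("dog", "in"), ("cat", "in")]
contexts, probs = unigram_distribution(pairs)
rng = np.random.default_rng(0)
negatives = sample_negatives(contexts, probs, k=5, rng=rng)
```

Frequent contexts such as "in" are drawn more often, which is exactly the behaviour that changing the distribution later alters.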

What is SGNS learning?

- Take SGNS's embedding matrices ($W$ and $C$)
- Multiply them
- What do you get? $W \cdot C^\top$, where $W$ is $|V_W| \times d$ and $C^\top$ is $d \times |V_C|$

"Neural Word Embeddings as Implicit Matrix Factorization" (Levy & Goldberg, NIPS 2014)

What is SGNS learning?

- A $|V_W| \times |V_C|$ matrix
- Each cell describes the relation between a specific word-context pair: $\vec{w} \cdot \vec{c} = \;?$
- $W \cdot C^\top = \;?$

What is SGNS learning?

- We proved that for large enough $d$ and enough iterations
- We get the word-context PMI matrix, shifted by a global constant:
  - $Opt(\vec{w} \cdot \vec{c}) = PMI(w, c) - \log k$
  - $W \cdot C^\top = M^{PMI} - \log k$
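A compressed sketch of where the $-\log k$ shift comes from, following the argument in the cited Levy & Goldberg paper. The notation $\#(w,c)$, $\#(w)$, $\#(c)$ for corpus counts and $x = \vec{w} \cdot \vec{c}$ is assumed here, and the derivation treats each $x$ as a free variable (which is what "large enough $d$" allows). Collecting every term of the SGNS objective that involves one pair $(w, c)$:

$$
\ell(x) = \#(w,c)\,\log \sigma(x) \;+\; k \cdot \#(w) \cdot \frac{\#(c)}{|D|}\,\log \sigma(-x)
$$

Setting the derivative to zero, using $\frac{d}{dx}\log\sigma(x) = \sigma(-x)$ and $\frac{d}{dx}\log\sigma(-x) = -\sigma(x)$:

$$
\#(w,c)\,\sigma(-x) = k \cdot \frac{\#(w)\,\#(c)}{|D|}\,\sigma(x)
\quad\Longrightarrow\quad
e^{x} = \frac{\sigma(x)}{\sigma(-x)} = \frac{\#(w,c)\,|D|}{\#(w)\,\#(c)} \cdot \frac{1}{k}
$$

$$
x = \log \frac{\#(w,c)\,|D|}{\#(w)\,\#(c)} - \log k = PMI(w,c) - \log k
$$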

What is SGNS learning?

- SGNS is doing something very similar to the older approaches
- SGNS is factorizing the traditional word-context PMI matrix
- So does SVD!
- GloVe factorizes a similar word-context matrix

But embeddings are still better, right?

- Plenty of evidence that embeddings outperform traditional methods
  - "Don't Count, Predict!" (Baroni et al., ACL 2014)
  - GloVe (Pennington et al., EMNLP 2014)
- How does this fit with our story?

The Big Impact of "Small" Hyperparameters

- word2vec & GloVe are more than just algorithms
- Introduce new hyperparameters
- May seem minor, but make a big difference in practice

Identifying New Hyperparameters

New Hyperparameters

- Preprocessing (word2vec)
  - Dynamic Context Windows
  - Subsampling
  - Deleting Rare Words
- Postprocessing (GloVe)
  - Adding Context Vectors
- Association Metric (SGNS)
  - Shifted PMI
  - Context Distribution Smoothing

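Dynamic Context Windows

Marco saw a furry little wampimuk hiding in the tree.

Weight given to each context word of "wampimuk" (window size 4):

| | saw | a | furry | little | hiding | in | the | tree |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| word2vec | 1/4 | 2/4 | 3/4 | 4/4 | 4/4 | 3/4 | 2/4 | 1/4 |
| GloVe | 1/4 | 1/3 | 1/2 | 1/1 | 1/1 | 1/2 | 1/3 | 1/4 |
| Aggressive | 1/8 | 1/4 | 1/2 | 1/1 | 1/1 | 1/2 | 1/4 | 1/8 |

Aggressive weighting: The Word-Space Model (Sahlgren, 2006)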
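The three weighting schemes can be written as functions of the distance between the target word and the context word. This sketch reads the formulas off the weights shown on the slide for a window of 4: linear decay for word2vec, harmonic decay for GloVe, and halving per step for the aggressive scheme. The function names are hypothetical, and `Fraction` is used only to keep the values exact.

```python
from fractions import Fraction

def word2vec_weight(distance, window):
    """Linear decay: (window - distance + 1) / window."""
    return Fraction(window - distance + 1, window)

def glove_weight(distance, window):
    """Harmonic decay: 1 / distance (the window only caps the distance)."""
    return Fraction(1, distance)

def aggressive_weight(distance, window):
    """Weight halves with each extra step away from the target word."""
    return Fraction(1, 2 ** (distance - 1))

window = 4
# Distances of the 8 context words around the target, left to right.
distances = [4, 3, 2, 1, 1, 2, 3, 4]
for name, fn in [("word2vec", word2vec_weight),
                 ("GloVe", glove_weight),
                 ("Aggressive", aggressive_weight)]:
    print(name, [str(fn(d, window)) for d in distances])
```

In word2vec itself the linear weighting is not applied as an explicit multiplier: the effective window size is sampled uniformly between 1 and the maximum for each token, which gives the same weights in expectation.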

Adding Context Vectors

- SGNS creates word vectors $\vec{w}$
- SGNS creates auxiliary context vectors $\vec{c}$
  - So do GloVe and SVD
- Instead of just $\vec{w}$, represent a word as $\vec{w} + \vec{c}$
- Introduced by Pennington et al. (2014)
- Only applied to GloVe
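As a post-processing step, adding context vectors is a single matrix sum. The sketch below assumes the word and context vocabularies are identical and row-aligned (row i of both matrices refers to the same word), which the slide does not state explicitly; the values are invented and `add_context_vectors` is a hypothetical name.

```python
import numpy as np

def add_context_vectors(W, C):
    """Represent each word by the sum of its word vector and its context vector."""
    if W.shape != C.shape:
        raise ValueError("W and C must cover the same vocabulary and dimension")
    return W + C

# Toy matrices: 2 words, 2 dimensions (assumption for illustration).
W = np.array([[1.0, 2.0],
              [0.5, -1.0]])
C = np.array([[0.5, 0.0],
              [1.5, 1.0]])
combined = add_context_vectors(W, C)
```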

Adapting Hyperparameters across Algorithms

Context Distribution Smoothing

- SGNS samples $c' \sim P$ to form negative $(w, c')$ examples
- Our analysis assumes $P$ is the unigram distribution: $P(c) = \frac{\#c}{\sum_{c' \in V_C} \#c'}$
- In practice, it's a smoothed unigram distribution: $P^{0.75}(c) = \frac{(\#c)^{0.75}}{\sum_{c' \in V_C} (\#c')^{0.75}}$
- This little change makes a big difference
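The effect of the 0.75 exponent is easiest to see on a skewed toy distribution. This sketch assumes two invented context counts, one frequent and one rare; `smoothed_unigram` is a hypothetical helper, and `alpha=1.0` recovers the plain unigram distribution.

```python
import numpy as np

def smoothed_unigram(context_counts, alpha=0.75):
    """P^alpha(c) = #(c)^alpha / sum over c' of #(c')^alpha."""
    powered = np.asarray(context_counts, dtype=float) ** alpha
    return powered / powered.sum()

# Invented counts: one frequent context, one rare context (assumption).
counts = np.array([1000.0, 10.0])
plain = smoothed_unigram(counts, alpha=1.0)
smoothed = smoothed_unigram(counts, alpha=0.75)
print("plain:   ", plain)
print("smoothed:", smoothed)
```

Smoothing shifts probability mass from frequent contexts toward rare ones, so rare contexts are sampled as negatives more often than their raw frequency would suggest.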

Context Distribution Smoothing

- We can adapt context distribution smoothing to PMI!
- Replace $P(c)$ with $P^{0.75}(c)$: $PMI^{0.75}(w, c) = \log \frac{P(w, c)}{P(w) \cdot P^{0.75}(c)}$
- Consistently improves PMI on every task
- Always use Context Distribution Smoothing!
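Applied to a count matrix, the smoothed PMI only changes how the context marginal is computed. A minimal dense sketch, assuming an invented 2x2 co-occurrence matrix with no zero cells (zero counts would yield `-inf`, which PPMI-style clipping would normally handle); `pmi_cds` is a hypothetical name.

```python
import numpy as np

def pmi_cds(counts, alpha=0.75):
    """PMI with context distribution smoothing: log P(w,c) / (P(w) * P^alpha(c))."""
    counts = np.asarray(counts, dtype=float)
    total = counts.sum()
    p_wc = counts / total
    p_w = counts.sum(axis=1, keepdims=True) / total
    c_powered = counts.sum(axis=0, keepdims=True) ** alpha
    p_c_alpha = c_powered / c_powered.sum()
    with np.errstate(divide="ignore"):
        return np.log(p_wc / (p_w * p_c_alpha))

# Invented counts: column 0 is a frequent context, column 1 a rare one.
counts = np.array([[10.0, 1.0],
                   [90.0, 1.0]])
plain = pmi_cds(counts, alpha=1.0)      # ordinary PMI
smoothed = pmi_cds(counts, alpha=0.75)  # smoothed variant
```

Because smoothing raises the probability assigned to the rare context, the PMI of pairs involving it drops, which counteracts PMI's known bias toward rare events.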

Comparing Algorithms

Controlled Experiments

- Prior art was unaware of these hyperparameters
- Essentially, comparing "apples to oranges"
- We allow every algorithm to use every hyperparameter