Comprehensive Overview of Autoencoders and Their Applications

Autoencoders (AEs) are neural networks trained with unsupervised learning to reproduce their input at their output, learning an embedding of the data in the process. This article discusses the main types of autoencoders, applications such as dimensionality reduction and image compression, and related concepts such as embeddings…

4 views • 86 slides

Understanding Medical Word Elements: Roots, Combining Forms, Suffixes, and Prefixes

Medical terminology is built from word elements: roots, combining forms, suffixes, and prefixes. Word roots carry the core meaning, combining forms link elements together, suffixes appear at the end of a word and modify its meaning, and prefixes appear at the beginning. Examples illustrate how these elements are used in medical terms…

6 views • 13 slides

Stages of First Language Acquisition in Children

First language acquisition in children progresses through distinct stages: cooing and babbling, the one-word stage, the two-word stage, and telegraphic speech. These stages mark the development of speech sounds, single-word utterances, two-word combinations, and finally more complex speech structures…

2 views • 16 slides

Understanding Word Order in Different Languages

Explore how languages differ in word order. Languages arrange the subject, verb, and object of a clause in different ways, such as Subject-Verb-Object (SVO), as in English, and Subject-Object-Verb (SOV), as in Japanese, among other orders. The presentation surveys this diversity and how language structure…

2 views • 31 slides

Exploring Graph-Based Data Science: Opportunities, Challenges, and Techniques

Graph-based data science analyzes data by exploiting its graph structure. It draws on graph representations, graph analysis algorithms, ML/AI techniques, graph kernels, embeddings, and neural networks. Real-world examples show the utility of data graphs in domains such as…

3 views • 37 slides

Transforming NLP for Defense Personnel Analytics: ADVANA Cloud-Based Platform

The Defense Personnel Analytics Center (DPAC) is enhancing its NLP capabilities by deploying a transformer-based platform on ADVANA, the Department of Defense's cloud system. The platform focuses on topic modeling and sentiment analysis of open-ended survey responses from various DoD populations…

0 views • 13 slides

Faithful Living and Preaching God's Word in 2 Timothy

2 Timothy emphasizes the call to preach the Word faithfully and to live with courage and commitment while spreading God's message. The text stresses the need to remain steadfast in the face of challenges and to uphold the truth of God's Word amid changing times.

0 views • 12 slides

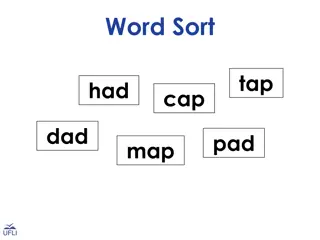

Engaging Word Sorting Activity for Students

Encourage student engagement with a hands-on word sorting activity built around phonograms. Students read each word card, decide which category it belongs to, and copy it into that category on a sorting chart, making the activity fun and interactive.

2 views • 5 slides

Binary Basic Block Similarity Metric Method in Cross-Instruction Set Architecture

Measuring the similarity of binary basic blocks is important in applications such as malware classification, vulnerability detection, and authorship analysis. The method involves two steps: sub-ldr operations and similarity score calculation. Both manual and automatic methods…

0 views • 20 slides

Understanding Positional Encoding in Transformers for Deep Learning in NLP

This presentation examines why Transformers need positional encoding for natural language processing tasks and how it can be implemented. It discusses the challenges faced by recurrent networks and introduces approaches such as linear position assignment and sinusoidal (sine/cosine) positional encodings…

0 views • 15 slides
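As a concrete reference for the sinusoidal approach mentioned in this summary, the sketch below builds the sine/cosine table in plain Python. It assumes the formulation from the original Transformer paper (base 10000, sine on even dimensions, cosine on odd dimensions); the function name is hypothetical, and the presentation's own formulation may differ.

```python
import math


def sinusoidal_positional_encoding(num_positions: int, d_model: int) -> list[list[float]]:
    """Return a num_positions x d_model table of sine/cosine position encodings.

    Assumed formulation: dimension 2i holds sin(pos / 10000^(2i/d_model)),
    dimension 2i+1 holds cos of the same angle.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even so sine/cosine dimensions pair up")
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model // 2):
            # Each sine/cosine pair shares one frequency; frequencies shrink geometrically with i.
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle))
            row.append(math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_positional_encoding(num_positions=4, d_model=6)
print([round(x, 3) for x in pe[1]])
```

The encoding has no learned parameters and can be computed for any sequence length, which is one reason it is a common default.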

Understanding Word Embeddings in NLP: An Exploration

Explore word embeddings in natural language processing (NLP): learned vectors that encode words. Discover the properties of these embeddings and the relationships between words they capture, along with open questions such as how large the embedding space should be and how to find the right encoding function.

0 views • 28 slides

Understanding Autoencoders: Applications and Properties

Autoencoders play an important role in both supervised and unsupervised learning, with applications ranging from image classification to denoising and watermark removal. They compress input data into a latent space and then reconstruct the input from it; the latent representation serves as a useful embedding. Autoencoders are data-specific, lossy, and learned automatically from examples.

0 views • 18 slides

Advancements in Cross-lingual Spoken Language Understanding

Cross-lingual spoken language understanding (SLU) has advanced significantly in overcoming the scarcity of labeled data in many languages. A key goal has been to build an SLU model for a new language with minimal supervision while still achieving reasonable performance…

0 views • 20 slides

Understanding Word Sense Disambiguation: Challenges and Approaches

Word Sense Disambiguation (WSD) is a challenging task in artificial intelligence that aims to determine the correct sense of a word in context. It amounts to classifying a word into one of a set of predefined senses according to its meaning in that context. WSD requires not only linguistic knowledge but also knowledge…

2 views • 12 slides

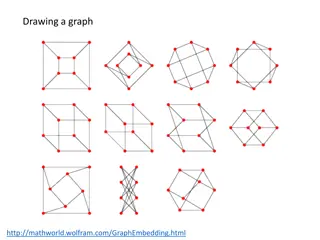

Understanding Graph Theory Fundamentals

Delve into the basics of graph theory, including graph embeddings, graph plotting, Kuratowski's theorem, planar graphs, the Euler characteristic, trees, and more. Explore the principles behind graphs, their properties, and the key theorems that define their structure and connectivity.

0 views • 17 slides

Understanding Sparse vs. Dense Vector Representations in Natural Language Processing

TF-IDF and PPMI vectors are sparse: long, with most entries zero. Dense vectors are much shorter, and most of their entries are non-zero. Dense vectors may generalize better and capture synonymy more effectively than sparse ones. Learn about dense embeddings such as word2vec, fastText, and GloVe, which provide efficient…

0 views • 44 slides

Advancements in Word Embeddings through Dependency-Based Techniques

Explore the evolution of word embeddings with a focus on dependency-based methods, building on innovations such as Skip-Gram with Negative Sampling (SGNS). Learn about generalizing Skip-Gram and the shift toward analyzing linguistically rich embeddings learned from various contexts, such as bag-of-words and syntactic…

0 views • 39 slides

Phonogram Word Cards for Teaching Phoneme-Grapheme Correspondences

Explore a collection of phonogram word cards covering a range of phoneme-grapheme correspondences, for use in activities such as word sorts and review games. The word lists group words that share the same phonogram and span a wide range of graphemes and phonemes, helping students build phonics skills.

0 views • 32 slides

WEB-SOBA: Ontology Building for Aspect-Based Sentiment Classification

This study introduces WEB-SOBA, a method that uses word embeddings to build ontologies semi-automatically for aspect-based sentiment analysis. Because online reviews are increasingly important, the work focuses on sentiment mining to extract insights from consumer feedback. The motivation behind the research…

2 views • 35 slides

Exploring Text Similarity in Natural Language Processing

Explore why text similarity matters in NLP: it helps systems relate concepts and process language. Topics include human judgments of similarity, automatic similarity computation with word embeddings such as word2vec, and different types of text similarity, such as semantic and morphological similarity…

3 views • 8 slides

Sketching as a Tool for Algorithmic Design by Alex Andoni - Overview

Alex Andoni of Columbia University presents sketching as a tool for algorithm design, covering succinct and efficient algorithms, dimension reduction, sampling, metric embeddings, and more. Topics also include numerical linear algebra, similarity search, and geometric min-cost matching.

0 views • 18 slides

Understanding Word Meaning in Lexical Semantics

Chapter 5, Lecture 4.1 introduces the nature of word meaning, the major problems of lexical semantics, and the different approaches taken. It explains the concept of a word, prototypical words, lexical roots, lexemes, and word forms, highlighting the importance of treating the word as a lexeme in lexical semantics…

1 view • 20 slides

Semi-Automatic Ontology Building for Aspect-Based Sentiment Classification

The growing importance of online reviews highlights the need to automate sentiment mining. Aspect-Based Sentiment Analysis (ABSA) detects the sentiments expressed in product reviews, here with a specific emphasis on sentence-level analysis. The proposed approach, Deep Contextual Word Embedding…

0 views • 34 slides

Effective Data Augmentation with Projection for Distillation

Data augmentation plays a key role in knowledge distillation, improving model performance by generating diverse training data. Techniques such as token replacement, representation interpolation, and rich semantics are explored in the context of improving image classifier performance.

0 views • 13 slides

Exploring Word Embeddings in Vision and Language: A Comprehensive Overview

Word embeddings represent words as compact vectors. This overview explains the concept, moving from approaches such as one-hot encoding and histograms of co-occurring words to more advanced techniques such as word2vec. The exploration covers topics…

1 view • 20 slides

Jeff Edmonds - Research Interests and Academic Courses

Jeff Edmonds is a researcher whose interests span theoretical and mathematical topics, including scheduling algorithms, cake cutting, and topological embeddings. He also provides support for mathematical and theoretical topics. Additionally, he has ventured into machine learning…

0 views • 17 slides

Evolution of Sentiment Analysis in Tweets and Aspect-Based Sentiment Analysis

This presentation traces the evolution of sentiment analysis on tweets across the SemEval competitions from 2013 to 2017, showing the shift from SVMs with sentiment lexicons to CNNs with word embeddings. Aspect-Based Sentiment Analysis, as explored in SemEval-2014, involves determining aspect…

0 views • 23 slides

Understanding Word Formation and Coinage in English

Word formation in English involves processes such as compounding, conversion, and derivational affixation. Compounding combines two or more words into a new word, while conversion changes a word's class without adding an affix. Word coinage includes compounds, acronyms, back-formations, abbreviations…

0 views • 10 slides

Understanding the Role of Dictionaries in Translation

Dictionaries play a crucial role in translation by giving users information about linguistic signs, word division, spelling, and word formation. The lemma (headword) represents a lexical item in the dictionary and helps users locate specific entries. Word division information can assist…

0 views • 12 slides

Using Word Embeddings for Ontology-Driven Aspect-Based Sentiment Analysis

Motivated by the growing number of online product reviews, this research explores automating sentiment mining through Aspect-Based Sentiment Analysis (ABSA). It focuses on detecting sentiment toward aspects at the review level, using a hybrid approach that combines ontology-based reasoning and…

0 views • 26 slides

Understanding Word Embeddings: A Comprehensive Overview

Word embeddings are learned encodings that map words to vectors so as to capture the relationships between them. A function W(word) returns the vector for a given word, which can then support tasks such as prediction and classification. Techniques such as word2vec offer two training setups: CBOW, which predicts a word from its surrounding context, and Skip-gram, which predicts the surrounding context from a word.

0 views • 27 slides
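To make the CBOW/Skip-gram distinction concrete, here is a minimal Python sketch of how each setup turns a sentence into training examples. It is a simplification under stated assumptions: a fixed symmetric window, and no subsampling, negative sampling, or actual model training, all of which real word2vec implementations add. The function names are hypothetical.

```python
def context_indices(n_tokens: int, i: int, window: int) -> list[int]:
    """Indices of the words within `window` positions of position i (excluding i itself)."""
    lo = max(0, i - window)
    hi = min(n_tokens, i + window + 1)
    return [j for j in range(lo, hi) if j != i]


def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Skip-gram: one (target, context) pair per neighbour; the model predicts context from target."""
    return [
        (target, tokens[j])
        for i, target in enumerate(tokens)
        for j in context_indices(len(tokens), i, window)
    ]


def cbow_examples(tokens: list[str], window: int = 2) -> list[tuple[list[str], str]]:
    """CBOW: one (context words, target) example per position; the model predicts target from context."""
    return [
        ([tokens[j] for j in context_indices(len(tokens), i, window)], target)
        for i, target in enumerate(tokens)
    ]


sentence = "the cat sat on the mat".split()
print(skipgram_pairs(sentence, window=1)[:3])
print(cbow_examples(sentence, window=1)[1])
```

Both functions use the same sliding window; they differ only in which side of each example the model is asked to predict.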

Understanding Text Similarity Techniques in NLP

Explore text similarity techniques in natural language processing (NLP) and the factors they must account for, such as word order, length, synonyms, spelling, word importance, and word frequency. Topics include bag-of-words representations, vector-based word similarity, the TF-IDF weighting scheme, normalization…

2 views • 62 slides
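The bag-of-words, TF-IDF, and vector-similarity topics in this summary combine into a simple document similarity measure. A minimal Python sketch, assuming the plain `tf × log(N / df)` weighting and cosine similarity; toolkits vary here (scikit-learn's `TfidfVectorizer`, for example, smooths the IDF by default), and the function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[list[str]]) -> list[dict[str, float]]:
    """Weight each term by raw term frequency times log(N / document frequency)."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # documents containing each term
    vectors = []
    for doc in docs:
        tf = Counter(doc)  # bag-of-words counts: word order is discarded
        vectors.append({t: count * math.log(n_docs / df[t]) for t, count in tf.items()})
    return vectors


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity of two sparse vectors stored as term -> weight dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # e.g. a document whose terms all appear in every document
    return dot / (norm_u * norm_v)


docs = [
    "the cat sat on the mat".split(),
    "the cat lay on the rug".split(),
    "stocks fell sharply today".split(),
]
vecs = tfidf_vectors(docs)
print(round(cosine(vecs[0], vecs[1]), 3), round(cosine(vecs[0], vecs[2]), 3))
```

A word that appears in every document gets an IDF of zero and contributes nothing to the similarity, which is how the weighting captures word importance.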

The Sunday of the Word of God Celebration - 24th January 2021

The Sunday of the Word of God, observed on 24 January 2021, is dedicated to celebrating, studying, and disseminating the Word of God. Pope Francis encourages Catholics worldwide to deepen their relationship with God through His Word. The event highlights the importance of valuing…

0 views • 27 slides

Exploring Word Embeddings and Syntax Encoding

Word embeddings play a crucial role in natural language processing, and this work investigates how much they encode about syntax. Jacob Andreas and Dan Klein of UC Berkeley examine the impact of embeddings through mechanisms such as vocabulary expansion and statistic pooling, testing several hypotheses…

0 views • 26 slides

Enhancing Distributional Similarity: Lessons from Word Embeddings

Explore how word vectors make it easy to compute similarity and relatedness, and review approaches for representing words with distributional semantics. Discover what word embeddings contribute, both through novel algorithms and through hyperparameter choices that improve performance.

0 views • 69 slides

Understanding Word Vector Models for Natural Language Processing

Word vector models represent words as vectors for NLP tasks. Subrata Chattopadhyay's Word Vector Model introduces concepts such as word representation, one-hot encoding and its limitations, and Word2Vec models. It explains the shift from one-hot encoding to distributed representations…

0 views • 25 slides

Understanding Word Sense Disambiguation in Computational Lexical Semantics

Word Sense Disambiguation (WSD) is a central task in computational lexical semantics: determining the correct sense of a word in context from a fixed inventory of potential word senses. Approaches include supervised machine learning, unsupervised methods, thesaurus…

0 views • 67 slides

Transformer Neural Networks for Sequence-to-Sequence Translation

In neural networks, the Transformer architecture has revolutionized sequence-to-sequence translation. Its key components include attention mechanisms, multi-head attention, Transformer encoder layers, and positional embeddings. Additionally, encoder-decoder…

0 views • 24 slides

MEANOTEK: Building a Gapping Resolution System Overnight

Follow Denis Tarasov, Tatyana Matveeva, and Nailia Galliulina as they develop a gapping resolution system in computational linguistics. The goal is to test a rapid NLP model prototyping system on a novel task, motivated by the need to build NLP models efficiently for various…

0 views • 16 slides

Key Insights into Neural Embeddings and Word Representations

Explore how neural embeddings compare with explicit word representations, and demystify how vector arithmetic reveals analogies. Examine how sparse and dense vectors differ in representing words, with a focus on linguistic regularities and geometric patterns in neural embeddings…

0 views • 58 slides