Decoding Uncertainty in Communication: Exploring Prior Distributions and Coding Schemes

Delve into the intricate world of communication under uncertainty, where decoding messages accurately is paramount. Discover how prior distributions, encoding schemes, and closeness metrics influence the efficiency and effectiveness of communication between parties sharing different priors.

Uploaded on Sep 17, 2024 | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

The Price of Uncertainty in Communication Brendan Juba (Washington U., St. Louis) with Mark Braverman (Princeton)

SINCE WE ALL AGREE ON A PROB. DISTRIBUTION OVER WHAT I MIGHT SAY, I CAN COMPRESSIT TO: THE 9,232,142,124,214,214,123,845TH MOST LIKELY MESSAGE. THANK YOU!

1.Encodings and communication across different priors 2.Near-optimal lower bounds for different priors coding 3

Coding schemes MESSAGES Chicken Bird Duck Dinner Pet Lamb Cow Dog Cat ENCODINGS 4

Ambiguity Chicken Bird Duck Dinner Pet Lamb Cow Dog Cat 6

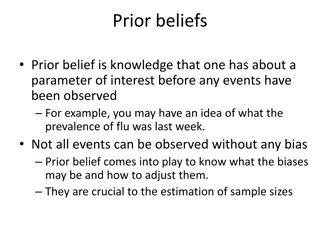

Prior distributions Chicken Bird Duck Dinner Pet Lamb Cow Dog Cat Decode to a maximum likelihood message 7

Source coding (compression) Assume encodings are binary strings Given a prior distribution P, message m, choose minimum length encoding that decodes to m. FOR EXAMPLE, HUFFMAN CODES AND SHANNON- FANO (ARITHMETIC) CODES NOTE: THE ABOVE SCHEMES DEPEND ON THE PRIOR. 8

SUPPOSE ALICE AND BOB SHARE THE SAME ENCODING SCHEME, BUT DON T SHARE THE SAME PRIOR P Q CAN THEY COMMUNICATE?? HOW EFFICIENTLY?? 9

THE ORANGE CAT. THE ORANGE CAT WITHOUT A HAT. THE CAT. 10

Closeness and communication Priors P and Q are -close ( 1) if for every message m, P(m) Q(m) and Q(m) P(m) Disambiguation and closeness together suffice for communication: 2-disambiguated If for every m m, P[m|e] > 2P[m |e], then: Q[m|e] 1/ P[m|e] > P[m |e] Q[m |e] SO, IF ALICE SENDS e THEN MAXIMUM LIKELIHOOD DECODING GIVES BOB m AND NOTm 11

Construction of a coding scheme (J-Kalai-Khanna-Sudan 11, Inspired by B-Rao 11) Pick an infinite random string Rm for each m, Put (m,e) E e is a prefix of Rm. Alice encodes m by sending prefix of Rm s.t. m is 2-disambiguated under P. Gives an expected encoding length of at most H(P) + 2log + 2 12

Remark Mimicking the disambiguation property of natural language provided an efficient strategy for communication. 13

1.Encodings and communication across different priors 2.Near-optimal lower bounds for different priors coding 14

Our results 1. The JKKS 11/BR 11 encoding is near optimal H(P) + 2log 3log log O(1) bits necessary (cf. achievedH(P) + 2log + 2 bits) 2. Analysis of positive-error setting [Haramaty-Sudan 14]: If incorrect decoding w.p. is allowed Can achieve H(P) + log + log 1/ bits H(P) + log + log 1/ (9/2)log log O(1) bits necessary for > 1/ 15

An -errorcoding scheme. (Inspired by J-Kalai-Khanna-Sudan 11, B-Rao 11) Pick an infinite random string Rm for each m, Put (m,e) E e is a prefix of Rm. Alice encodes m by sending the prefix of Rm of length log 1/P(m) + log + log 1/ 16

Analysis Claim. m is decoded correctly w.p. 1- Proof. There are at most 1/Q(m) messages with Q-probability greater than Q(m) P(m)/ . The probability that Rm for any one of these m agrees with the first log 1/P(m) + log + log 1/ log 1/Q(m)+log 1/ bits of Rm is at most Q(m). By a union bound, the probability that any of these agree with Rm (and hence could be wrongly chosen) is at most . 17

Length lower bound 1reduction to deterministic encodings Min-max Theorem: itsuffices to exhibit a distribution over priors for which deterministic encodings must be long 18

Length lower bound 2hard priors Lemma 1: H(P) = O(1) Lemma 2 log. prob. 0 - close -log - close -2log S m* 19 2

Length lower bound 3 short encodings have collisions Encodings of expected length < 2log 3log log encode m1 m2 identically with nonzero prob. With nonzero probability over choice of P & Q, m1,m2 S andm* {m1,m2} Decoding error with nonzero probability Errorless encodings have expected length 2log -3log log = H(P)+2log -3log log -O(1) 20

Length lower bound 4 very short encodings often collide If the encoding has expected length < log + log 1/ (9/2)log log m* collides with ( log ) other messages Probability that our draws for S miss all of these messages is < 1-2 Decoding error with probability > Error- encodings have expected length H(P) + log + log 1/ (9/2)log log O(1) 21

Recap. We saw a variant of source coding for which (near-)optimal solutions resemble natural languages in interesting ways. 22

The problem. Design a coding scheme E so that for any sender and receiver with -close prior distributions, the communication length is minimized. (In expectation w.r.t. sender s distribution) Questions? 23