Understanding Stereopsis: Depth Perception in Computational Vision

Dive into the fascinating world of stereopsis in computational vision, exploring topics such as binocular stereo, crossed and uncrossed disparity, angular disparity, discrimination of distances, stereo processing for depth determination, and the correspondence problem with random dot stereograms. Discover how our brains integrate visual information from both eyes to perceive depth and create 3D representations.

Computational Vision, CSCI 363, Spring 2023. Lecture 12: Stereopsis

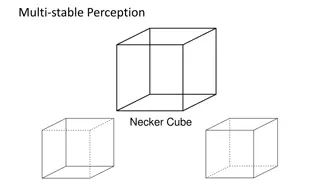

Binocular Stereo

The image in each of our two eyes is slightly different. Images of objects in the plane of fixation fall on corresponding locations on the two retinas. Images of objects in front of the plane of fixation are shifted outward on each retina; they have crossed disparity. Images of objects behind the plane of fixation are shifted inward on each retina; they have uncrossed disparity.

Crossed and uncrossed disparity

[Figure: diagram of the plane of fixation, with labeled regions of uncrossed (negative) disparity and crossed (positive) disparity.]

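Angular disparity

[Figure: point A on the plane of fixation at distance D; point B in the region of crossed (positive) disparity.]

If fixating on A, the disparity of B is given by the difference between the angles that B and A subtend at the two eyes:

$$\delta = \alpha_B - \alpha_A, \qquad \delta > 0 \text{ if B is closer than A}, \qquad \delta < 0 \text{ if B is further than A},$$

where, for eyes separated by a distance $i$ and a point at distance $D$, the angle $\alpha$ satisfies

$$\frac{\alpha}{2} = \tan^{-1}\!\left(\frac{i}{2D}\right).$$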
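The geometry on this slide can be tried numerically. The following is a minimal Python sketch, assuming both points lie straight ahead of the observer, that `i` is the separation between the eyes, and that the half-angle to a point at distance `D` is `atan(i / (2 * D))`. The function names and the 6.5 cm eye separation are illustrative assumptions, not values from the lecture.

```python
import math

def vergence_angle(i, D):
    """Angle (radians) between the two lines of sight to a point at
    distance D straight ahead, for eyes separated by i (same units)."""
    return 2.0 * math.atan(i / (2.0 * D))

def angular_disparity(i, D_fix, D_point):
    """Disparity of a point at D_point while fixating at D_fix.
    Positive (crossed) when the point is nearer than fixation,
    negative (uncrossed) when it is further away."""
    return vergence_angle(i, D_point) - vergence_angle(i, D_fix)

i = 0.065  # assumed eye separation in metres (hypothetical value)
near = angular_disparity(i, D_fix=1.0, D_point=0.9)
far = angular_disparity(i, D_fix=1.0, D_point=1.1)

# Report both disparities in minutes of arc.
print(math.degrees(near) * 60, math.degrees(far) * 60)
```

The sign convention matches the slide: a nearer point gives a positive (crossed) disparity and a further point gives a negative (uncrossed) one.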

Discrimination for near and far distances

[Figure: curve plotted against distance D, annotated "changes rapidly for small D" and "changes slowly for large D".]

Humans can discriminate surfaces that differ in disparity by about 5 seconds of arc.

At D = 0.5 m, 5" of arc corresponds to 0.015 cm in distance.
At D = 5 m, 5" of arc corresponds to 2 cm in distance.
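To see why the same 5" threshold corresponds to a much larger depth interval at 5 m than at 0.5 m, here is a rough Python sketch. It assumes the small-angle approximation (disparity ≈ i · ΔD / D²) and a 6.5 cm eye separation, neither of which is stated in the slides, so the printed figures need not reproduce the quoted values exactly. The point is the scaling with D².

```python
import math

ARCSEC = math.pi / (180 * 3600)  # one second of arc, in radians

def depth_threshold(D, disparity_arcsec=5.0, i=0.065):
    """Approximate depth difference (metres) that produces the given
    disparity at viewing distance D (metres).

    Uses the small-angle approximation disparity ~ i * dD / D**2,
    with i an assumed eye separation in metres."""
    return disparity_arcsec * ARCSEC * D ** 2 / i

for D in (0.5, 5.0):
    print(f"D = {D} m: about {depth_threshold(D) * 100:.4f} cm")
```

Under these assumptions, a tenfold increase in viewing distance increases the smallest discriminable depth difference a hundredfold.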

Stereo processing

To determine depth from stereo disparity:
1) Extract the "features" from the left and right images.
2) For each feature in the left image, find the corresponding feature in the right image.
3) Measure the disparity between the two images of the feature.
4) Use the disparity to compute the 3D location of the feature.

The Correspondence Problem

How do you determine which features from one image match features in the other image? This is known as the correspondence problem. Matching could be accomplished if each image has well-defined shapes or colors that can be matched. Random dot stereograms pose a problem because they offer no such features.

[Figure: Making a stereogram. Panels: Left Image, Right Image.]

Problem with Random Dot Stereograms

Around 1960, Béla Julesz developed the random dot stereogram. The stereogram consists of two fields of random dots, identical except for a region in one of the images in which the dots are shifted by a small amount. When one image is viewed by the left eye and the other by the right eye, the shifted region is seen at a different depth. There are no cues such as color, shape, texture, or shading to use for matching. How do you know which dot from the left image matches which dot from the right image?
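The construction described above can be sketched in a few lines of Python. This is an illustrative sketch, not the procedure used in the lecture: the image size, the square shifted region, the dot density, and the choice to refill the uncovered strip with fresh random dots are all assumptions. Images are stored as lists of rows, so a pixel is `image[y][x]`.

```python
import random

def make_stereogram(size=32, shift=2, region=(8, 24), density=0.5, seed=0):
    """Return (left, right) images as lists of rows of 0/1 dots.

    The right image is a copy of the left, except that the square
    region [lo, hi) x [lo, hi) is shifted `shift` pixels to the right
    (shift is assumed positive and small enough to stay in bounds)."""
    rng = random.Random(seed)
    left = [[1 if rng.random() < density else 0 for _ in range(size)]
            for _ in range(size)]
    right = [row[:] for row in left]
    lo, hi = region
    for y in range(lo, hi):
        # Copy the region from the left image into its shifted position.
        for x in range(lo, hi):
            right[y][x + shift] = left[y][x]
        # Refill the strip uncovered by the shift with new random dots.
        for x in range(lo, lo + shift):
            right[y][x] = 1 if rng.random() < density else 0
    return left, right

left, right = make_stereogram()
print("".join(str(v) for v in left[10]))
print("".join(str(v) for v in right[10]))
```

Neither image on its own reveals the square. It is defined only by the horizontal offset between the two images.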

Using Constraints to Solve the Problem

To solve the correspondence problem, we need to make some assumptions (constraints) about how the matching is accomplished. Constraints used by many computer vision stereo algorithms:
1) Uniqueness: Each point has at most one match in the other image.
2) Similarity: Each feature matches a similar feature in the other image (i.e., you cannot match a white dot with a black dot).
3) Continuity: Disparity tends to vary slowly across a surface. (Note: this is violated at depth edges.)
4) Epipolar constraint: Given a point in the image of one eye, the matching point in the image for the other eye must lie along a single line.

The epipolar constraint

[Figure: a feature in the left image and its possible matches in the right image.]

It matters where you look

If the observer is fixating a point along a horizontal plane through the middle of the eyes, the possible positions of a matching point in the other image lie along a horizontal line. If the observer is looking upward or downward, the line will be tilted. Most stereo algorithms for machine vision assume the epipolar lines are horizontal. For biological systems, the stereo computation must take into account where the eyes are looking (e.g., upward or downward).

The Marr-Poggio algorithm

The Marr-Poggio algorithm uses the four constraints to find good matches for a stereo pair. The input is a pair of images (left and right) consisting of 1's and 0's, representing white and black dots as in a random dot stereogram.

[Figure: input left and right images.]

Output of Marr-Poggio

The output of the algorithm is a 3D array, C(x, y, d), where d is the disparity (limited to +/- 3 pixels) and each cell holds 0 or 1. A 1 at a given position means there is evidence for that disparity at that position. A 0 means there is little or no evidence for that disparity at that position. Initially, a 1 is placed in all possible matched disparity positions.

Using Constraints to solve the problem

Our goal is to use an iterative process to change the state of the cells in the array until it reaches a final state with the correct disparities. We will use constraints to change the support for different matches. Two constraints are already incorporated in the initial state:
Similarity: We only match 1's with 1's.
Epipolar: We only match 1's with other 1's in the same horizontal row.

Initially:

$$C(x, y, d) = \begin{cases} 1 & \text{if } L(x, y) = R(x + d, y) = 1 \\ 0 & \text{otherwise} \end{cases}$$
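The initial state can be built directly from the two images. The sketch below is one possible Python rendering, assuming images stored as lists of rows (`image[y][x]`) and the array C stored as a dictionary keyed by `(x, y, d)`. Both choices, and the name `initial_state`, are illustrative. Following the similarity statement above, only 1's are matched with 1's, and matches that would fall outside the right image are set to 0.

```python
def initial_state(left, right, max_d=3):
    """Build C as a dict mapping (x, y, d) -> 0 or 1.

    C[(x, y, d)] is 1 when the left pixel at (x, y) and the right
    pixel at (x + d, y) are both 1 (similarity), with both pixels in
    the same row y (horizontal epipolar lines)."""
    height, width = len(left), len(left[0])
    C = {}
    for y in range(height):
        for x in range(width):
            for d in range(-max_d, max_d + 1):
                xr = x + d
                in_bounds = 0 <= xr < width
                match = in_bounds and left[y][x] == 1 and right[y][xr] == 1
                C[(x, y, d)] = 1 if match else 0
    return C

# One-row example: the right row is the left row shifted by one pixel.
left = [[0, 1, 0, 0, 1, 0]]
right = [[0, 0, 1, 0, 0, 1]]
C = initial_state(left, right, max_d=2)
print(sorted(key for key, value in C.items() if value == 1))
```

In this small example the left dot at x = 4 has two candidate matches, one at d = +1 (the true shift) and one at d = -2 (a false match with the other dot). This is the ambiguity the remaining constraints have to resolve.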

Examining the 3D array

We can examine the 3D array by looking at 2D slices. This will give us evidence for and against a given match. Consider the possible match at (4, 4, 2).

[Figure: the 3D array, with disparity as one axis.]

Using the uniqueness constraint

[Figure: x-d slice for y = 4, with axes x and d.]

The number of other matches in a given column decreases support for a given match. Here the uniqueness constraint is violated: we cannot have more than one disparity match for each position. Matches at (4, 4, -1) and (4, 4, -3) provide evidence against the match at (4, 4, 2).

Continuity

[Figure: x-y plane at d = +2, with axes x and y.]

The continuity constraint implies that we expect the neighbors of a true match to have similar disparities. We have many 1's in neighboring positions at disparity +2. This provides support for the match at (4, 4, 2).

Other possible matches for the right point

If the point at (4, 4) in the left image has a disparity of +2, it matches the point at (6, 4) in the right image. If there are other points in the left image that can match (6, 4) in the right image, this will count against a disparity of +2 for (4, 4) in the left image.

[Figure: input left and right images.]

Another uniqueness measure

[Figure: x-d slice through y = 4, with axes x and d.]

If C(4, 4, 2) is correct, then the dot in the left image at (4, 4) matches the dot in the right image at (6, 4). If there is strong evidence for a different match for the right image dot, this is evidence against the match at (4, 4, 2). The 1's along the diagonal reflect other possible matches for the right image dot. These decrease support for the match at (4, 4, 2).
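Both uniqueness measures amount to counting active cells along two lines in the x-d slice: the column of cells that share the same left-image point, and the diagonal of cells that share the same right-image point. The following Python sketch assumes C is stored as a dictionary keyed by `(x, y, d)` and uses the convention that left point x at disparity d matches right point x + d. The function name and the sample cells other than those named on the slides are hypothetical.

```python
def competing_matches(C, x, y, d, max_d=3):
    """Count active cells that compete with the match (x, y, d).

    Returns (same_left, same_right):
      same_left  = other disparities active for the same left point
                   (the column in the x-d slice),
      same_right = other left points that could match the same right
                   point x + d (the diagonal in the x-d slice)."""
    ds = [d2 for d2 in range(-max_d, max_d + 1) if d2 != d]
    same_left = sum(C.get((x, y, d2), 0) for d2 in ds)
    xr = x + d  # position of the matched dot in the right image
    same_right = sum(C.get((xr - d2, y, d2), 0) for d2 in ds)
    return same_left, same_right

# Cells from the slides, plus one assumed competitor at (5, 4, 1),
# which also points at right-image position (6, 4).
C = {(4, 4, 2): 1, (4, 4, -1): 1, (4, 4, -3): 1, (5, 4, 1): 1}
print(competing_matches(C, 4, 4, 2))
```

Cells missing from the dictionary are treated as 0, which keeps the example short.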

Changing the states

We incorporate the uniqueness and continuity constraints by computing a new state for each disparity at each location over numerous iterations. We compute each new state by taking into account the number of nearby neighbors with the same disparity (this provides support). We also take into account the number of other possible disparity matches that are supported at that location (this decreases support).

Putting it all together

S = support, $C_t(x, y, d)$ = state at time t, E = positive evidence, I = negative evidence, $\epsilon$ = weighting factor, T = threshold.

$$S = C_t(x, y, d) + E - \epsilon I$$

$$C_{t+1}(x, y, d) = \begin{cases} 1 & \text{if } S \ge T \\ 0 & \text{if } S < T \end{cases}$$

Iterate until the values don't change much with each iteration.

Example: with $E = 10$, $I = 3$, $\epsilon = 2.0$ and $T = 3.0$,

$$S(4, 4, 2) = 1 + 10 - 2.0 \cdot 3 = 5 \ge T, \quad \text{so } C_{t+1}(4, 4, 2) = 1.$$
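The update rule can be sketched in Python as follows. This is a simplified illustration under several assumptions rather than the exact network from the lecture: C is a dictionary keyed by `(x, y, d)`, positive evidence E counts active neighbors at the same disparity within a square window of assumed size `radius`, and negative evidence I counts active competitors for the same left point and the same right point. The names `support`, `next_state`, `update`, and `run` are hypothetical.

```python
def support(state, E, I, eps=2.0):
    """S = C_t + E - eps * I."""
    return state + E - eps * I

def next_state(state, E, I, eps=2.0, T=3.0):
    """Threshold the support to get the state at time t + 1."""
    return 1 if support(state, E, I, eps) >= T else 0

def update(C, max_d=3, eps=2.0, T=3.0, radius=2):
    """Apply one synchronous update to every cell of C."""
    ds = range(-max_d, max_d + 1)
    offsets = [(dx, dy)
               for dx in range(-radius, radius + 1)
               for dy in range(-radius, radius + 1)
               if (dx, dy) != (0, 0)]
    new_C = {}
    for (x, y, d), state in C.items():
        # Continuity: active neighbours at the same disparity.
        E = sum(C.get((x + dx, y + dy, d), 0) for dx, dy in offsets)
        # Uniqueness: competitors for the same left point (x, y) and
        # for the same right point (x + d, y).
        I = sum(C.get((x, y, d2), 0) + C.get((x + d - d2, y, d2), 0)
                for d2 in ds if d2 != d)
        new_C[(x, y, d)] = next_state(state, E, I, eps, T)
    return new_C

def run(C, max_iters=20, **params):
    """Iterate until the state stops changing or max_iters is reached."""
    for _ in range(max_iters):
        new_C = update(C, **params)
        if new_C == C:
            break
        C = new_C
    return C

# Worked example from the slide: state 1, E = 10, I = 3.
print(support(1, 10, 3, eps=2.0), next_state(1, 10, 3, eps=2.0, T=3.0))
```

With the slide's values, `support` evaluates to 5, which is at or above the threshold of 3, so the cell stays on. The stopping test here requires the state to be exactly unchanged, which is a stricter version of "values don't change much".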

Demonstration of how it works

[Figure: input stereogram and the state of the network over time. Brightness indicates disparity.]

Summary of Marr-Poggio

[Figure: network diagram. Horizontal lines: constant right eye position. Vertical lines: constant left eye position. Diagonal lines: constant disparity.]

Limitations of the Marr-Poggio Algorithm

The Marr-Poggio algorithm has several problems: it does not specify what features are being matched; it does not make use of zero crossings or multiple spatial scales; and it does not make use of vergence eye movements.

Importance of Vergence Eye Movements

Humans can only fuse images with disparities of about +/- 10 min of arc. This range is called Panum's fusional area. If we need to fuse the images of an object that is nearer or farther than this, we make vergence eye movements to change the plane of fixation. Humans make many vergence eye movements as we scan the environment.