Understanding Semiotics and Multimodality in Communication

Exploring the realm of semiotics and multimodality, this study delves into the use of multiple semiotic modes in communication. It discusses the significance of combining different modes, such as verbal, visual and aural signs, to convey messages effectively. The concept of multimodal texts and their role in communication is highlighted, along with the importance of semiotics in understanding translation and interpretation. Various approaches, such as social semiotics and pragmatics, are discussed to analyze the organization of signs in different modes of communication.


Presentation Transcript

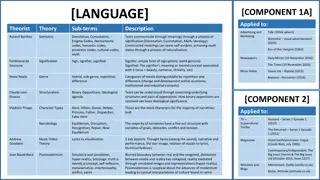

On the Road to Multimodality: Semiotics

Sara Dicerto, King's College London, London, UK

Abbas Khudhayyir Hussein

Outline
- Definitions
  - Introduction
  - Multimodal Text
  - Multimodality
  - Semiotics
- The Organization of Signs: The Realm of Semiotics
  - Verbal, Visual, Aural
  - Symbols, Icons, Indices
- One Text, Many Semiotic Modes: Social Semiotics
  - Content: Discourse, Design
  - Expression: Production, Distribution
- Baldry and Thibault (2005): Multimodal Transcription and Text Analysis
- Taking Stock
  - Ideational, Interpersonal, Textual
- Beyond the Multimodal Code
- References

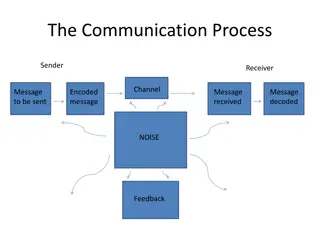

Multimodal Texts, Multimodality and Semiotics

Multimodal texts: texts which communicate their message by using more than one semiotic mode, or channel of communication. Examples include magazine articles, which use words and pictures; websites, which contain audio clips alongside the words; and films, which use words, music, sound effects and moving images. Almost all human communication is intrinsically multimodal!

Multimodality: To understand the concept of multimodality for translation purposes, we have to adopt various perspectives and combine different approaches, such as semiotics (particularly social semiotics); the analysis of different modes, how they are organized, and their similarities and differences; and pragmatics, which helps us understand multimodality in context. Consequently, the literature relevant to the development of a model for the study of multimodality in translation is rich and varied.

Semiotics: The Cambridge English Dictionary defines semiotics as "the study of signs and symbols, what they mean, and how they are used", i.e. semiotics is the study of signs and symbols and their use or interpretation (https://dictionary.cambridge.org/dictionary/english/semiotics). The Dictionary of Language and Linguistics defines semiotics as the systematic study of linguistic and non-linguistic signs. The logico-philosophical approach to language signs has been summarized by C. W. Morris and R. Carnap, who defined the field of semiotics as consisting of three main branches: PRAGMATICS, the study of how signs and symbols are used by man for communication in a particular language; SEMANTICS (with a theoretical or abstract and a descriptive or empirical component), the study of the relationship between a symbol and its referent; and SYNTACTICS, the study of symbols in relation to each other (Hartmann & Stork, 1972: 205).

The Organization of Signs: The Realm of Semiotics

We start with semiotics in the study of multimodal texts because such texts are based on semiotic systems or semiotic modes, namely systems of meaning (Halliday and Webster 2003: 2). Anstey and Bull define a multimodal text as "a text which combines two or more semiotic systems". This definition is arguably too broad and can be modified as follows: a text may be defined as multimodal when it combines at least two semiotic systems that are not necessarily ancillary to one another. Dicerto's study argues that semiotics, in the context of multimodality in translation, needs to be taken into consideration when choosing a taxonomy suitable for the stated research purpose.

Barthes (1977) looks at images and sounds (i.e. visual and non-verbal aural signs) as two broad categories worth exploring for regularities in multimodal meaning production, along with language (i.e. verbal signs). The modes involved in the formation of a multimodal message will very much depend on the nature of the multimodal text itself, which can include verbal and visual signs (e.g. a poster or an advertisement), verbal and aural signs (e.g. a radio programme), visual and aural signs (e.g. a dance performance) or all three (e.g. a film). A team led by Kay O'Halloran was created to help understand how linguistic, visual and audio resources function to attract attention and create particular views of the world, implicitly confirming that verbal, visual and aural signs are the resources leading recipients towards a certain interpretation of a multimodal text.

The above-mentioned definition of a multimodal text can be further specified as follows: a text may be defined as multimodal when it combines at least two of the verbal, visual and/or aural semiotic systems.

Static and Dynamic Multimodal Texts

Static multimodal texts are texts with visual and verbal components only. Dynamic multimodal texts are texts that combine all three types of semiotic system (verbal, visual and aural). The types of sign grouped in each category are as follows:
- Verbal: signs belonging to oral and written language.
- Visual: visual signs other than language.
- Aural: aural signs other than language.

From a semiotic perspective, the verbal, visual and aural systems differ in the way they convey meaning, and their differences are connected with the signs that comprise them. One of the most well-known taxonomies of signs (Peirce 1960: 2.247) divides them into three categories: symbols, icons and indices. To use Saussurean terminology, these signifiers show different types of relationship with their respective signifieds, namely the concepts they represent. This can be illustrated as follows:

[Diagram: a sign consists of a signifier and a signified; the signifier can be an icon, an index or a symbol.]

The meaning of a sign can be classified into denotation and connotation. Denotation is the literal meaning of a word or sign. Connotation represents the various social overtones, cultural implications or emotional meanings associated with a sign.

A sign has two parts: signifier and signified. Signifiers are the physical forms of a sign, such as a sound, word or image, through which communication takes place; for example, an image of the Facebook logo, a TV or a piece of music. The signified is the concept that a signifier refers to, i.e. the mental image that the signifier creates in the mind. For example, what concepts come to mind when you see the Facebook logo? Someone might say that it is social media, or that it refers to friends, family, etc.

Signifiers are classified into three types, namely icon, symbol and index.
- Icon: the signifier physically resembles the signified, as in a photograph.
- Symbol: the relation between signifier and signified is arbitrary. A symbol is the opposite of an icon, in that it does not resemble the signified it represents. For instance, snow signifies death in European culture, but it signifies love or hope in Asian culture.
- Index: the signifier has a direct correlation in space and time with the signified, i.e. the relation between signifier and signified is concrete. For example, an index can be proof of the existence of the signified: fingerprints and cigarette butts are evidence that somebody has just been here.

Question: what kind of signifier is a camera shot? It is both an index and an icon: an index because it is proof of the existence of a person or object in front of the camera, and an icon because it bears a great resemblance to that object or person.

To map this taxonomy onto the three semiotic modes investigated in this study, language is regarded as mostly symbolic (Peirce 1960: 2.2292; Chandler 2007: 38), as words have an arbitrary connection with their signifieds; visual communication is mainly iconic or indexical (Chandler 2007: 43), as images tend to establish relationships of resemblance or causality with the objects they represent; sounds are mostly indexical, as they are always in a causal relationship with the objects that produce them. Verbal, visual and aural signifiers thus differ in the type of relationship they create with their signifieds, the verbal having a conventionalized connection with them (due to their symbolic nature) and the visual and aural showing some sort of similarity or contiguity to them because of their iconicity/indexicality. In general, verbal, visual and aural signs play different roles in communication and are more or less suited to conveying information of a diverse nature, on the basis of the distinct relations they tend to establish with their signifieds.

Kress notes how "it is now impossible to make sense of texts, even of their linguistic parts alone, without having a clear idea of what [...] other features might be contributing to the meaning of a text" (Kress 2000: 337). Baldry and Thibault support this view and describe multimodal meaning as multiplicative rather than additive. Accordingly, a comprehensive model for multimodal source text (ST) analysis needs to be multilayered and to take into account all these different dimensions of the text by:
- investigating in depth the verbal, visual and aural semiotic modes in order to understand their individual organization, potential and limitations;
- understanding how semiotic systems differing in their resources and specialization cooperate in conveying multimodal meaning, in order to map their relationships;
- studying multimodal texts in terms of usage in context, including in the analysis of a multimodal ST the meaning contributed by the recipient in relation to their knowledge of the world and the contextual information they are aware of.

In this particular instance of a model intended for translation purposes, these three dimensions of analysis will have to be investigated in terms of how they are likely to influence possible translations of the multimodal text.

One Text, Many Semiotic Modes: Social Semiotics

Research in multimodality has not yet established a model suited to the study of how semiotic modes interact with each other to form multimodal texts. This lack of a general model has been noted by Stöckl, who claims that "we seem to know more about the function of individual modes than about how they interact and are organized in text and discourse" (2004: 10). Research in the field has been developing towards a higher level of generality, with academic attention increasingly drawn towards the specific topic of the relationships between the verbal and the visual. Contributions looking at potential overriding principles of multimodality, discussed here in chronological order, come from Kress and Van Leeuwen (2001) and Baldry and Thibault (2005).

Kress and Van Leeuwen (2001): Multimodal Discourse

The purpose of Kress and Van Leeuwen's work is to explore "the common principles behind multimodal communication [...] [moving] away from the idea that the different modes in multimodal texts have strictly bounded and framed specialist tasks" (2001: 1–2). Kress and Van Leeuwen acknowledge the possibility of exploring these common principles by working out what they call different "grammars" for each semiotic mode (such as the grammar of visual design), in order to research their differences and their areas of overlap. Nevertheless, they argue that a full picture of multimodality cannot be painted in grammatical terms only. Thus, they take a novel approach to the problem, setting aside a discussion of theoretical principles and focusing on the product of the interaction of several semiotic resources in practice, namely in authentic multimodal texts. In so doing, they attempt to analyze meaning production by dividing the practice of multimodal communication into different strata, namely "domains of [multimodal] practice" (2001: 4). Their argument is that meaning is made in the strata of a message, and the interaction of modes can happen in all or some of these. Kress and Van Leeuwen's basic distinction is between the strata of content and expression, each showing two sub-strata: discourse and design as sub-strata of content, and production and distribution as sub-strata of expression. The purpose of the analysis is to show how meaning is made in multimodal terms at these levels of articulation, and in what terms the different semiotic resources can contribute to the meaning-making process.

Kress and Van Leeuwen's framework of analysis can be summarized as follows (2001: 4–8):
- Content
  - Discourses are socially situated forms of knowledge about reality, providing information about a certain process or event, often along with a set of interpretations and/or evaluative judgments.
  - Design is the abstract conception of which semiotic resources to use for the production of a message about a certain discourse.
- Expression
  - Production is the material articulation of the message, the actual realization of design. For example, in a fashion magazine, the choice of semiotic resources made during the design process is enacted to convey the selected message through various steps (e.g. writing, editing, printing).
  - Distribution is the technical side of production and relates to the actual means exploited for the articulation of the message, as the act of distribution can itself convey or influence meaning.

Baldry and Thibault (2005): Multimodal Transcription and Text Analysis

Baldry and Thibault produce a framework meant to describe general multimodal principles applicable to multimodal texts, "seek[ing] to reveal the multimodal basis of a text's meaning in a systematic rather than an ad hoc way" (2005:). Their framework pivots around two main theoretical constructs. The first is the concept of "cluster", which refers to "a local grouping of items" and is used in particular in the analysis of static texts (2005: 31) to indicate that two or more signs from different modes form part of the same unit of meaning due to their proximity and are therefore to be analysed together. The second is the concept of "phase", namely "a time-based grouping of items codeployed in a consistent way over a given stretch of text" (Baldry and Thibault 2005: 47). The concept of phase is used to signal that two or more signs from different modes are to be analysed in conjunction due to their simultaneous or near-simultaneous use. This idea is mostly applicable to dynamic texts, as these show a development over time. The idea of phase is taken from Gregory's work on phasal analysis, in which phases are described as "characterizing stretches within discourse [...] exhibiting their own significant and distinctive consistency and congruity" (2002: 321).

Taking Stock

Kress and Van Leeuwen's Grammar of Images

Kress and Van Leeuwen (1996/2006) studied and proposed what they call a "grammar of images", following Halliday's definition of grammar as a means of "representing patterns of experience" (1985: 101). In order not to create confusion between their usage of the word "grammar" and its traditional language-based meaning, their approach to the investigation of the regularities of images starts out by setting the boundaries of what their project shares or does not share with linguistics. In their view, the visual domain should not be analysed in terms of syntax or semantics, and their purpose is not to look for more or less direct equivalents to verbs and nouns; each semiotic system, in their view, has a different organisation, with only partial overlaps, and care should be taken not to draw unwarranted parallels between the mechanics of each mode. Nevertheless, these forms and mechanisms should still allow a semiotic system to perform the three metafunctions that Halliday assigned to language, and which Kress and Van Leeuwen believe can be generalised to apply to the visual world as well. The three well-known metafunctions described in Halliday's work (1985/1994) are:
- Ideational, which deals with aspects of human perception and consists in the ability to represent human experience;
- Interpersonal, which deals with creating a relation between the producer and the receiver of the sign;
- Textual, which deals with the ability of a text to be internally and externally coherent through the establishment of logical ties.

In their work, Kress and Van Leeuwen discuss the means by which images perform these metafunctions, also making comparisons with the means used by language. For example, they discuss how images can show concrete scenes, being able to represent human experience with a high degree of detail (ideational metafunction); features such as perspective can be used to create a relationship with the recipient by making them part of the scene (interpersonal metafunction); finally, visual characteristics such as composition (viz. the position occupied by the different elements in an image) can be used to render the active and passive voice of verbs and to influence coherence within the text (textual metafunction). To summarize Kress and Van Leeuwen's claim, the visual mode has more limited representational capabilities than the verbal mode, but its ability to perform the Hallidayan metafunctions still sets it apart as a mode with a narrative potential independent of other modes; it is capable of narrating physical action with the highest degree of detail, while being less suited than language to describing cognitive processes. Machin's discussion of Kress and Van Leeuwen's work offers a detailed explanation of the differences in meaning-making between language and images; nevertheless, Machin reaches the conclusion that, contrary to what Kress and Van Leeuwen claim, the visual mode cannot satisfy the requisites set by Halliday for a "complex semiotic system", and hence its regularities cannot properly be called a grammar (2007: 159–188). While this may sound like a purely terminological problem, we will come back to this point and its importance in the multimodal debate in Chap. 4.

References

Al-Hilali and Khan (1996). Interpretation of the Meaning of the Noble Quran in the English Language. Riyadh, Saudi Arabia: Darussalam.

Baldry, A., & Thibault, P. J. (2005). Multimodal Transcription and Text Analysis. London: Equinox.

Barthes, R. (1977). Rhetoric of the Image. In R. Barthes (Ed.), Image Music Text (pp. 32–51). London: Fontana.

Chandler, D. (2007). Semiotics: The Basics. New York: Routledge.

Halliday, M. A. K. (1994). An Introduction to Functional Grammar (2nd ed.). London: Edward Arnold.

Halliday, M. A. K., & Webster, J. J. (2003). On Language and Linguistics. London: Continuum.

Hartmann, R. R. K., & Stork, F. C. (1972). Dictionary of Language and Linguistics. London: Applied Science Publishers.

Kress, G. (2000). Multimodality: Challenges to Thinking About Language. TESOL Quarterly, 34(2), 337–340.

Kress, G., & Van Leeuwen, T. (2001). Multimodal Discourse: The Modes and Media of Contemporary Communication. London: Hodder Arnold.

Peirce, C. S. (1960). Collected Writings. Cambridge: Harvard University Press.

Van Leeuwen, T. (2006). Typographic Meaning. Visual Communication, 4, 137–143.

Websites

https://dictionary.cambridge.org/dictionary/english/semiotics