Understanding Iterative Methods in Linear Algebra

Explore the concepts of iterative methods such as Jacobi and Gauss-Seidel for solving systems of linear equations iteratively. Understand conditions for convergence, rate of convergence, and ways to improve convergence speed. Delve into iterative schemes in matrix forms, convergence criteria, eigenvalues, and spectral radius for iterative solvers.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

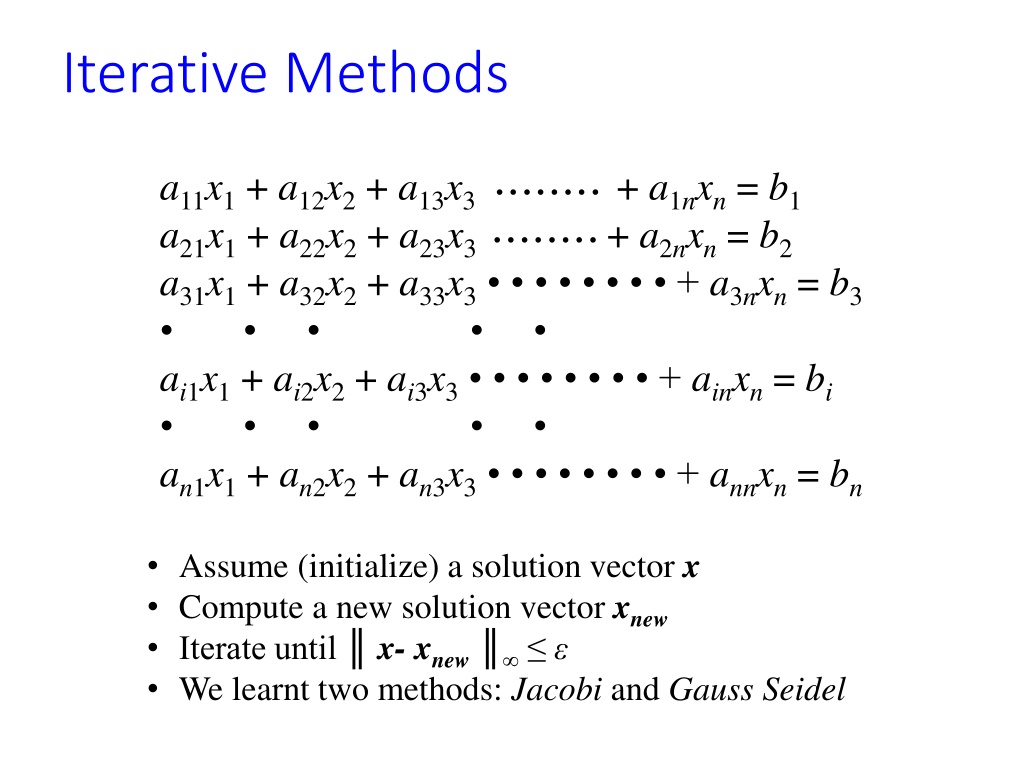

Iterative Methods a11x1 + a12x2 + a13x3 + a1nxn = b1 a21x1 + a22x2 + a23x3 + a2nxn = b2 a31x1 + a32x2 + a33x3 + a3nxn = b3 ai1x1 + ai2x2 + ai3x3 + ainxn = bi an1x1 + an2x2 + an3x3 + annxn = bn Assume (initialize) a solution vector x Compute a new solution vector xnew Iterate until x- xnew We learnt two methods: Jacobi and Gauss Seidel

Questions? What are the conditions of convergence for the iterative methods? Rate of convergence? Can we make them converge faster?

Iterative Schemes in Matrix Forms A = L + D + U Ax = b translates to (L + D + U)x = b Jacobi: for an iteration counter k ) 1 + ) 1 + ( ( ( ( ) ) k k k k = = + + + + U ( ) ) U ( Dx Dx L L x x b b ) 1 + ) 1 + ( ( 1 1 ( ( ) ) 1 1 k k k k = = + + + + U ( U ( ) ) x x D D L L x x D D b b Gauss Seidel: for an iteration counter k ) 1 + ) 1 + ( ( ( ( ) ) k k k k + + = = + + ( ( ) ) L L D D x x Ux 1 ) b b Ux ) 1 + ) 1 + ( ( 1 ( ( ) ) 1 1 k k k k = = + + + + + + ( ( ) ( ( ) ) x x L L D D Ux Ux L L D D b b

Iterative Methods: Convergence + ( ) 1 ( ) k k = + For all iterative methods: x Sx c 1 1 = + = U ( ) S D L c D b Jacobi: 1 1 = + = + ( ) ( ) S L D U c L D b Gauss Seidel: True error: e(k) = x- x(k) We showed that e(k+1) = Se(k) This constant matrix S governs whether error at each iteration step will increase or decrease: Stationery Iterative Methods Thus, e(k) = Ske(0) lim lim k ( ) k = 0 Methods will converge if: S = 0 e k k

Iterative Methods: Convergence For the solution to exist, the matrix S should have full rank (= n) The iteration matrix S will have n eigenvalues and n independent eigenvectors that will form the basis for an n-dimensional vector space Initial error vector: e n j = 1 j n j v = 1 j n ) 0 ( = C jv j = 1 j From the definition of eigenvalues: Svj = jvj ?(?) =???(0)=?? ?=1 n = ? ( ) k k j ? ? ? ? = e C v j j 1 j Necessary condition: Spectral Radius, (S) < 1 Sufficient condition: because S ( ) A A 1

Defining Vector and Matrix Norms 6

Vector Norms: A vector norm is a measure (in some sense) of the size or length of a vector Properties of Vector Norm: ? > 0 for ? ?; ? = 0 iff ? = ? ?? = ? ? for a scalar ? ? + ? ? + ? Lp-Norm of a vector x: 1? ?+ ?2 ? + ?? ? ??= ?1

Lp-Norm of a vector x: ??= ?? ?? Example Norms: p = 1: sum of the absolute values p = 2: Euclidean norm p : maximum absolute value, ? = max ?+ ?? ? + ?? ? 1 ? ??? 8

Lp-Norm of a vector x: ??= ?? ?? Example Norms: p = 1: sum of the absolute values p = 2: Euclidean norm p : maximum absolute value, ? = max ?+ ?? ? + ?? ? 1 ? ??? 9

Matrix Norms: A matrix norm is a measure of the size of a matrix Properties: ? > 0 for ? ?; ? = 0 iff ? = ? ?? = ? ? for a scalar ? ? + ? ? + ? ?? ? ? ?? ? ?for consistent matrix and vector norms Lp Norm of a matrix A: ??? ?? ??= max ? ? Spectral Radius: largest absolute eigenvalue of matrix A denoted by (A) If there are m distinct eigenvalues of A: ? ? = max Lower bound of all matrix norms:? ? ? For any norm of matrix A:? ? = lim 1 ? ??? 1? ? ??

Matrix Norms: ? Column-Sum norm: ?1= max 1 ? ? ?=1 ??? 12where, j are the Spectral norm: ?2= eigenvalues of the square symmetric matrix ATA. max 1 ? ??? ? Row-Sum norm: ? = max 1 ? ? ?=1 ??? ? ?=1 ? 2= Frobenius norm: ??= trace ??? ?=1 ??? Trace of a matrix is the sum of elements on the main diagonal

Jacobi Convergence -aij aii for i j S = - D-1(L+U) sij= 0 for i = j If we use row-sum norm: max 1 i n Recall, Sufficient Condition for Convergence: S n n aij aii max 1 i n S = sij= j=1 j=1 1 n aii> , i =1,2, n aij j=1,j i

Iterative Methods: Convergence Using the definition of S and using row-sum norm for matrix S, we obtain the following as the sufficient condition for convergence for both Jacobi and Gauss Seidel: n = = , , 2 , 1 a a i i n ii ij , 1 j j If the original matrix is diagonally dominant, it will always converge!

Rate of Convergence Number of iterations (k) required to decrease the initial error by a factor of 10-m is then given by: ?? ?0 ? ??= 10 ? or ? ? =? ? = log10? ? log10? ? ? R is the asymptotic rate of convergence of the iterative methods.

Improving Convergence Recall Gauss Seidel: 1 i = n = ) 1 + ( ( ) k k b a x a 1 x i ij ij j j + ) 1 + 1 j j i ( k = , 1 = , , 2 x i n i a ii Re-Write As: i-1 n (k+1)- bi- (k) aijxj aijxj j=1 j=i (k+1)= xi (k)+ , i =1, 2, n xi aii (k+1)= xi (k)+di (k), i=1, 2, n xi

Successive Over/Under Relaxation (k+1)= xi (k)+wdi (k), i=1, 2, n, w >0 xi 0 < < 1 : Under relaxation = 1 : Gauss Seidel 1 < < 2 : Over Relaxation i-1 n (k+1)- bi- (k) aijxj aijxj j=1 j=i+1 (k+1)=(1-w)xi (k)+w , i =1, 2, n xi aii

Pivoting, Scaling and Equilibration, Perturbation Analysis

Recall Gauss Elimination: Step 1: k = 1 ??1 ?11; ???= ???-??1?1?; ??= ?? ??1 ?1 i= 2, 3, .n and j= 2, 3, n ??1= Step k: k = k ??? ???; ???= ???-??????; ??= ?? ??? ?? for i = k+1, k+2, .n and j = k+1, k+2, .n ???= At each k, the all the elements in rows > k are multiplied by lik. Roundoff errors will be magnified and eventually may grow out of bound if lik > 1 (i.e., algorithm become unstable) Condition for stability: lik 1 (for all k) This will happen only if akk is the largest element in the kth column for rows k If not, make it! (This operation is called pivoting)

Partial Pivoting: Matrix at the start of the kth Step: Row Exchange apk Before operations of the kth step, Search for max interchange kth row with the pth row Interchange the right hand side vector bk with bp, if not working with the augmented matrix.) ? ? ????. Let s say, it occurs at i = p

Full Pivoting: Matrix at the start of the kth Step: Column Exchange Row Exchange apq Before operations of the kth step, Search for max ? ? ? ? ? ? interchange kth row with pth row, kth column with the qth column Interchange the right hand side vector bk with bp, if not working with the augmented matrix.) Column interchange renaming of variables k ???. Let s say, it occurs at i = p; j = q q

Perturbation Analysis Consider the system of equation Ax = b Question: If small perturbation is given in the matrix A and/or the vector b, what is the effect on the solution vector x ? Alternatively, how sensitive is the solution to small perturbations in the coefficient matrix and the forcing function. R1 = 10.0 1.2 ; R2 = 15.0 1.8 ; R3 = 25.0 2.7 VA - VC = 100.0 11.0 V; VA - VB= 60.0 9.0 V Example: C Let s denote the resulting perturbation in the solution vector x as x R3 B i3 R1 A A i1 b b i2 ?1 ?2 ?3 0 0 0 0 0 1 0 10 1 15 15 1 25 0 0 + 1.8 1.8 2.7 0 = + 11 9 100 60 R2 1.2 A

Perturbation in matrix A: System of equation: (A + A)(x + x)= b A x + A(x + x)=0 since, Ax = b x =- A-1 A(x + x) Take the norms of vectors and matrices: ?? = ? ??? ? + ?? ? ? ? ? ?? ?? ? + ?? ?? ? + ? ? ?? Product of perturbation quantities ?? ? ?? ? ? ? ?

Perturbation in forcing vector b: System of equation: A(x + x)=(b + b) A x = b since, Ax = b x =- A-1 b Take the norms of vectors and matrices: ?? ? ?? = ? ??? ? ? ?? = ? ? ? ?? ? ? ? ? ? ?? ? ?? ? ? ? ?

Condition Number: Condition number of a matrix A is defined as: ? ? = ? ? ? ? ?is the proportionality constant relating relative error or perturbation in A and b with the relative error or perturbation in x Value of ? ? depends on the norm used for calculation. Use the same norm for both A and A-1. If ? ? 1 or of the order of 1, the matrix is well- conditioned. If ? ? 1, the matrix is ill-conditioned.