Understanding Gradient Descent Optimization

Explore the concept of Gradient Descent optimization method, its application in solving optimization problems, tuning learning rates, adaptive learning rates, and Adagrad algorithm. Learn how to start, compute gradients, and make movements for efficient optimization.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

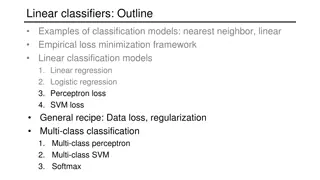

Review: Gradient Descent In step 3, we have to solve the following optimization problem: ? = argmin ? L: loss function ?: parameters ? ? Suppose that has two variables { 1, 2} 0 ?1 ?2 ?? ?1 ?? ?2 ??1 ??2 Randomly start at ?0= ?? ? = 0 0 0 ? 0 1 1= ?1 ?2 ?1 ?2 ?1 ?2 ?1 ?2 ?? ?1 ?? ?2 ?? ?1 ?? ?2 ??1 ??2 ??1 ??2 ?1= ?0 ??? ?0 0 2 2= 1 1 ? 1 ?2= ?1 ??? ?1 1

Review: Gradient Descent ?2 Gradient: Loss ?? ?0 Start at position ?0 ?? ?1 ?0 Compute gradient at ?0 ?1 ?? ?2 Move to ?1 = ?0 - ?? ?0 ?2 Gradient Compute gradient at ?1 ?? ?3 Movement ?3 Move to ?2 = ?1 ?? ?1 ?1

Gradient Descent Tip 1: Tuning your learning rates

( ) = L 1 1 i i i Learning Rate Set the learning rate carefully If there are more than three parameters, you cannot visualize this. Loss Very Large small Large Just make Loss No. of parameters updates But you can always visualize this.

Adaptive Learning Rates Popular & Simple Idea: Reduce the learning rate by some factor every few epochs. At the beginning, we are far from the destination, so we use larger learning rate After several epochs, we are close to the destination, so we reduce the learning rate E.g. 1/t decay: ??= ? ? + 1 Learning rate cannot be one-size-fits-all Giving different parameters different learning rates

??=?? ?? ? ??= Adagrad ?? ? + 1 Divide the learning rate of each parameter by the root mean square of its previous derivatives Vanilla Gradient descent ??+1 ?? ???? w is one parameters Adagrad ??: root mean square of the previous derivatives of parameter w ??+1 ?? ?? ???? Parameter dependent

??: root mean square of the previous derivatives of parameter w Adagrad ?1 ?0 ?0 ?0= ?0 2 ?0?0 1 2 ?2 ?1 ?1 ?1= ?0 2+ ?1 2 ?1?1 ?3 ?2 ?2 1 3 ?2?2 ?2= ?0 2+ ?1 2+ ?2 2 ? 1 ??+1 ?? ?? ??= ?? 2 ? + 1 ???? ?=0

Adagrad Divide the learning rate of each parameter by the root mean square of its previous derivatives ? ??= 1/t decay ? + 1 ?? ??+1 ?? ? ???? ?? ? 1 ??= ?? 2 ? + 1 ?=0 ? ??+1 ?? ?? ? ?? 2 ?=0

??=?? ?? ? ??= Contradiction? ?? ? + 1 Vanilla Gradient descent ??+1 ?? ???? Larger gradient, larger step Adagrad Larger gradient, larger step ? ??+1 ?? ?? ? ?? 2 ?=0 Larger gradient, smaller step

??=?? ?? ? ??= Intuitive Reason ? + 1 ?? How surprise it is g0 g1 g2 g3 g4 0.001 0.001 0.003 0.002 0.1 g0 g1 g2 g3 g4 10.8 20.9 31.7 12.1 0.1 ? ??+1 ?? ?? ? ?? 2 ?=0

Larger gradient, larger steps? Best step: |2??0+ ?| 2? ? |?0+ 2?| Larger 1st order derivative means far from the minima ?0 ? ? = ??2+ ?? + ? 2? |2??0+ ?| ?? ?? ?0 = |2?? + ?|

Larger 1st order derivative means far from the minima Do not cross parameters Comparison between different parameters a > b a b ?1 ?2 c c > d d ?2 ?1

Second Derivative Best step: ? |2??0+ ?| 2? |?0+ 2?| ?0 ? ? = ??2+ ?? + ? 2? |2??0+ ?| ?? ?? ?0 = |2?? + ?| ?2? ??2= 2? |First derivative| The best step is Second derivative

Larger 1st order derivative means far from the minima Do not cross parameters Comparison between different parameters |First derivative| The best step is a > b Second derivative a Larger Second b ?1 smaller second derivative ?2 c c > d Smaller Second d ?2 ?1 Larger second derivative

The best step is ? ??+1 ?? ?? |First derivative| ? ?? 2 ?=0 Second derivative ? Use first derivative to estimate second derivative larger second derivative smaller second derivative ?1 ?2 first derivative2

Gradient Descent Tip 2: Stochastic Gradient Descent Make the training faster

Stochastic Gradient Descent 2 Loss is the summation over all training examples ? ?? ? + ???? ? = ? ( ) Gradient Descent = L 1 1 i i i Stochastic Gradient Descent Faster! Pick an example xn 2 ( ) = L 1 1 i i n i ? ??= ?? ? + ???? Loss for only one example

Stochastic Gradient Descent Stochastic Gradient Descent Update for each example Gradient Descent Update after seeing all examples If there are 20 examples, 20 times faster. See only one example See all examples See all examples

Gradient Descent Tip 3: Feature Scaling

Source of figure: http://cs231n.github.io/neural- networks-2/ Feature Scaling ? = ? + ?1?1+ ?2?2 ?2 ?2 ?1 ?1 Make different features have the same scaling

Feature Scaling ? = ? + ?1?1+ ?2?2 w w w w y + 1x y 1 + 1x 1 1, 2 1, 2 2 2 2x 2x b b 1, 2 100, 200 w w Loss L Loss L 2 2 w w 1 1

Feature Scaling ?? ?2 ?1 ?2 ?3 ?? ?1 ?1 ?2 1 For each dimension i: 2 1 2 mean: ?? standard deviation: ?? ? ?? ?? ? ?? The means of all dimensions are 0, and the variances are all 1 ??

Gradient Descent Theory

Question When solving: ? = arg??? by gradient descent ? ? ? Each time we update the parameters, we obtain ? that makes ? ? smaller. ? ?0> ? ?1> ? ?2> Is this statement correct?

Formal Derivation L( ) Suppose that has two variables { 1, 2} 2 2 Given a point, we can easily find the point with the smallest value nearby. 1 0 How? 1

Taylor Series Taylor series: Let h(x) be any function infinitely differentiable around x = x0. ( )( )( k ! k h x ( ) x )k = 0 h x x 0 = ( ) x 0 k ( )( ! 2 h x ( )( x h ) ) 2 = + + + 0 h x x x x 0 0 0 0 ( ) x ( ) x ( )( 0 x ) + h h h x x When x is close to x0 0 0

E.g. Taylor series for h(x)=sin(x) around x0=/4 sin(x)= The approximation is good around /4.

Multivariable Taylor Series ( )( ( )( , , h x y h x y ( ) ( ) ) ) = + + 0 x 0 0 y 0 , , h x y h x y x x y y 0 0 0 0 + something related to (x-x0)2 and (y-y0)2+ When x and y is close to x0 andy0 ( )( ( )( , , h x y h x y ( ) ( ) ) ) + + 0 x 0 0 y 0 , , h x y h x y x x y y 0 0 0 0

Back to Formal Derivation Based on Taylor Series: If the red circle is small enough, in the red circle ( )( ( )( L , L , a b a b ( ) ( ) ) ) b + + L L , a b a 1 2 1 2 ( ) b , = L s a ( ) ( ) L( ) L , L , a b a b = = , u v ( ) b 1 2 2 a, ( ) s L ( ) ( ) b + + u a v 1 2 1

Back to Formal Derivation constant Based on Taylor Series: If the red circle is small enough, in the red circle ( ) ( ) a u s + + 1 L ( ) b , , = L s a ( ( ) b ( ) ) L , L v a b a b = = , u v 2 1 2 Find 1 and 2 in the red circle minimizing L( ) ( ) ( 1 a + ) 2 2 2 b d L( ) 2 ( ) b 2 a, Simple, right? d 1

Gradient descent two variables Red Circle: (If the radius is small) ( ) ( u s + L ) ( ) b 2 1 + a v 1 2 ( ) 1, ( ) 1, 2 2 Find 1 and 2 in the red circle minimizing L( ) ( ) ( 1 a + ) 2 2 2 b d ( ) v 2 u, 1 2 To minimize L( ) a u u 1 = 1 = b v v 2 2

Back to Formal Derivation constant Based on Taylor Series: If the red circle is small enough, in the red circle ( ) ( ) a u s + + 1 L ( ) b , , = L s a ( ( ) b ( ) ) L , L v a b a b = = , u v 2 1 2 Find ?1 and ?2 yielding the smallest value of ? ? in the circle ( ) L , a b a u a a 1 This is gradient descent. = = 1 , ( ) L b b v b 2 2 Not satisfied if the red circle (learning rate) is not small enough You can consider the second order term, e.g. Newton s method.

More Limitation of Gradient Descent Loss Very slow at the plateau ? Stuck at saddle point ?1 ?2 Stuck at local minima ?? ?? = 0 ?? ?? 0 ?? ?? = 0 The value of the parameter w

Acknowledgement Victor Chen