Understanding Directed Acyclic Graphs (DAGs) in Epidemiology

Exploring the significance of Directed Acyclic Graphs (DAGs) in pharmacoepidemiology, this content delves into the challenges faced in analyzing observational data and the benefits of DAGs in identifying confounders, mediators, and colliders. The conclusion emphasizes the importance of transparent research and highlights the limitations of DAGs in showing the direction and strength of effects. Additional topics covered include causal pathways, terminology, and practical exercises.

Presentation Transcript

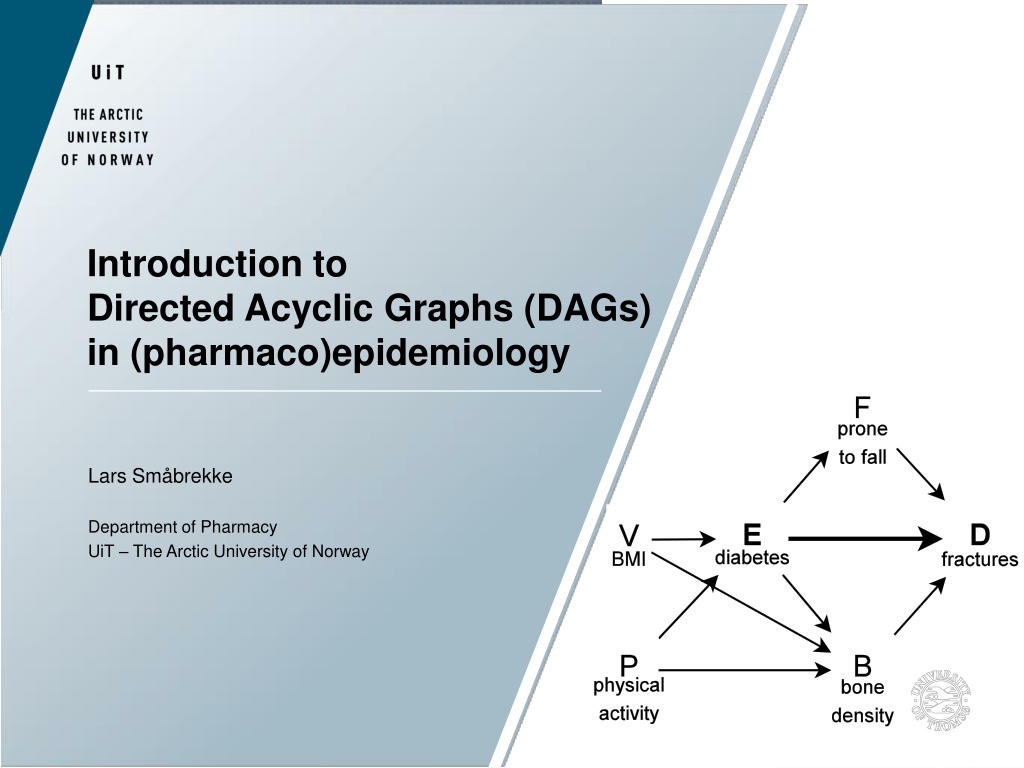

Introduction to Directed Acyclic Graphs (DAGs) in (pharmaco)epidemiology. Lars Småbrekke, Department of Pharmacy, UiT The Arctic University of Norway.

Why bother with Directed Acyclic Graphs (DAGs)? Our problem: observational data and experimental data are different. We face many pitfalls in the analysis of observational data: confounding, colliding, conditioning on the outcome, regression to the mean, mathematical coupling bias, composite variable bias, and more. What about RCTs? Biased?

Conclusion: Why bother with DAGs? DAGs help in identifying the status of the covariates in a statistical model (confounders, mediators and colliders): which variables need to be adjusted for, the unintended consequences of adjustment, and the consequence of adjusting for variables affected by prior exposure. Time-dependent bias is not a topic today. DAGs also encourage more transparent research. State your estimand ("what you seek"), e.g. the true difference in outcome due to exposure. State your estimator ("how you will get there"), e.g. GLMs. State your estimate ("what you get"), e.g. the estimated difference in outcome from the model coefficient.

Conclusion: Why bother with DAGs? DAGs don't show the direction or strength of an effect, nor interaction, synergism or antagonism.

Agenda: Conclusion and background. Definitions and terminology. DAG concepts: paths (causal and non-causal); confounder, mediator and collider (proxy confounder, competing exposure). Drawing and interpreting DAGs. Exercises (entry level). Introduction to DAGitty.

Examples & Exercises. Courtesy of: Hein Stigum (Norwegian Institute of Public Health) and Jon Michael Gran (UiO). Articles by: Shrier & Platt 2006; Fleischer et al. 2008; Platt et al. 2009; Schisterman et al. 2009; Williamson et al. 2014; Rohrer 2018. Lectures available on the web by: Schipf, Hardt & Knuppel; de Stavola et al.; Judea Pearl; Arnold KF; Tennant PWG. Book: Judea Pearl, Causality: Models, Reasoning and Inference, 2009. www.dagitty.net (learning module). Projects in cooperation with: Pål Haugen, June Utnes Høgli, Dina Stensen, Kristian Svendsen. Discussions with Per-Jostein Samuelsen (RELIS).

Models in examples & exercises may be unrealistic, oversimplified, badly chosen or wrong. However, I hope they can serve for the sake of exemplification. In the real world, models should be simple enough to be useful and complex enough to be realistic. Missing arrows in a non-causal path will often be included in a closed path. Moving out along the causal chain tends to weaken associations with the outcome.

Background: data-driven analysis, examples. Step-wise backward selection of covariates based on p-values. Change in estimate: a variable is kept when omitting it from the model changes the estimated exposure effect by more than a prespecified threshold. More advanced tools for model selection: a trade-off between the complexity of a model and its goodness of fit. Using these analytical strategies alone can increase rather than decrease bias, and the direction and level of the bias can be unknown.

Background: DAGs are non-parametric models that consist of nodes (= variables) and directed arrows. Assume this model of E(xposure) and D(isease): E → D. The arrow shows that E precedes D. By introducing an arrow between E and D we have made an assumption about causality: a change in E causes a change in D. E is part of the function that we use to assign a value to D.

Background: Building blocks of a DAG. Chain: E → B → D. Fork: E ← B → D. Collider: E → B ← D. The presence of any of these may affect the association of interest, and including B in a regression may change the effect estimates.

Definitions & terminology: A node has at least two values. Path = any trail from E to D without repeating itself (= "acyclic"). What would be the interpretation of a circular path? A logical consequence: a path cannot pass twice through the same node, but different paths can pass through the same node. Variables connected by an arrow (or several variables with arrows in the same direction) = a causal path. Almost any causal definition will work. Variables connected by arrows in different directions = a non-causal path. No path = independence. The dose-response can be linear, threshold, U-shaped or any other (DAGs are non-parametric).
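The path definitions above can be sketched in a few lines of Python. This is only an illustration, not part of the original lecture: it borrows the tea and depression exercise that appears later in this transcript, and the arrow directions in `EDGES` (tea → depression, tea → caffeine → depression, coffee → tea, coffee → caffeine) are an assumed reading of that exercise. The function names are hypothetical.

```python
# Assumed DAG: E = tea, C = caffeine, O = coffee, D = depression.
# Each tuple (a, b) is one arrow a -> b.
EDGES = [("E", "D"), ("E", "C"), ("C", "D"), ("O", "E"), ("O", "C")]


def neighbours(node, edges):
    """Nodes joined to `node` by an arrow in either direction."""
    return {b for a, b in edges if a == node} | {a for a, b in edges if b == node}


def all_paths(start, end, edges, visited=None):
    """Yield every trail from start to end that never repeats a node."""
    visited = (visited or []) + [start]
    if start == end:
        yield visited
        return
    for nxt in sorted(neighbours(start, edges)):
        if nxt not in visited:
            yield from all_paths(nxt, end, edges, visited)


def is_causal(path, edges):
    """A path is causal when every arrow points from exposure towards outcome."""
    return all((a, b) in edges for a, b in zip(path, path[1:]))


paths = list(all_paths("E", "D", EDGES))
for p in paths:
    print(" - ".join(p), "| causal" if is_causal(p, EDGES) else "| non-causal")
```

Under these assumptions the sketch finds three paths from E to D: two causal ones (direct, and through caffeine) and one non-causal one through coffee.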

Definitions & terminology: A path can be open or closed. Conditioning on a variable (= adjusting, stratifying or restricting) is denoted by brackets (or a box) around it in a diagram, e.g. E [B] D. Conditioning may close or open a path. A path is a sequence of connected variables. In E → D, E is the parent and D the child; more remote relatives are ancestors and descendants. Exogenous variables = variables with no parents. See the references for a more comprehensive overview of terminology, e.g. "back doors", "front doors" and "d-separation".

Identifying causal effects from observational data: three general causal assumptions. (1) Exchangeability. (2) A positive probability of receiving the intervention for everyone in the population. (3) A well-defined intervention (e.g. not multiple versions of treatment); if this is not met, the magnitude of the effect will depend on the proportion receiving each version of the intervention.

Causal diagrams, Directed Acyclic Graphs (DAGs): For a diagram to represent a causal system, all common causes of any pair of variables must be included in the diagram. This is a huge undertaking! It can be difficult to identify the correct model, and there may be divergent information on causal relationships between variables (i.e. what is the direction of an arrow?). Consequence: draw and run several models!

Definitions & terminology: In statistical modeling, a causal path will not induce bias: keep it open. A non-causal path, if open, will induce bias: try to close it. Adjusting, stratifying or restricting (= conditioning in DAG terminology) on a variable can close or open a path, depending on the status of the variable. So: what is the status of the variable in the statistical model?

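Example: Association and cause, basics. A common cause: smoking affects both YF and LC (positive effects, +), which induces a positive association between YF and LC. A second panel shows the same diagram conditioned on smoking. A confounder induces an association between its effects. Conditioning (= restricting, stratifying, adjusting) on a confounder removes the association.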
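A small simulation can make the common-cause example concrete. The sketch below is an illustration only: it reads YF and LC as two binary effects of smoking (the abbreviations are not expanded on the slide), and all probabilities are made up. It uses only the Python standard library.

```python
import random


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


rng = random.Random(1)  # fixed seed so the run is repeatable
n = 20000

# Assumed, made-up probabilities: smoking raises the risk of both YF and LC,
# and there is no arrow between YF and LC.
smoking = [rng.random() < 0.3 for _ in range(n)]
yf = [int(rng.random() < (0.60 if s else 0.05)) for s in smoking]
lc = [int(rng.random() < (0.20 if s else 0.02)) for s in smoking]

# Crude association, ignoring smoking.
crude = pearson(yf, lc)

# Association within each smoking stratum (= conditioning on the confounder).
within = {}
for level in (False, True):
    idx = [i for i, s in enumerate(smoking) if s == level]
    within[level] = pearson([yf[i] for i in idx], [lc[i] for i in idx])

print(f"crude correlation YF-LC: {crude:.3f}")
print(f"non-smokers: {within[False]:.3f}, smokers: {within[True]:.3f}")
```

With these assumed numbers the crude correlation is clearly positive, while the correlation within each smoking stratum is close to zero, which is the pattern the slide describes.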

Example: Association and cause, basics. Mediating variable: a part of the effect of DM on MI is caused by the effect of DM on CHL. Assessing the total effect of DM on MI: no adjustment. Assessing the direct effect of DM on MI: adjust for CHL.
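The difference between the total and the direct effect can be illustrated with a simulated linear model. This is a sketch under strong assumptions that are not in the original slides: the variables are continuous stand-ins for DM, CHL and MI, all effects are linear with made-up coefficients (0.5, 0.4 and 0.3), and nothing confounds the mediator and the outcome.

```python
import random


def slope(x, y):
    """Least-squares slope from regressing y on x (with intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(y, x):
    """What is left of y after removing its linear dependence on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = slope(x, y)
    return [yi - my - b * (xi - mx) for xi, yi in zip(x, y)]


rng = random.Random(2)  # fixed seed so the run is repeatable
n = 20000

# Assumed structure: DM -> CHL -> MI plus a direct arrow DM -> MI.
dm = [rng.gauss(0, 1) for _ in range(n)]
chl = [0.5 * d + rng.gauss(0, 1) for d in dm]
mi = [0.3 * d + 0.4 * c + rng.gauss(0, 1) for d, c in zip(dm, chl)]

# Total effect: no adjustment for the mediator.
total = slope(dm, mi)

# Direct effect: adjust for CHL by regressing it out of both DM and MI first.
direct = slope(residuals(dm, chl), residuals(mi, chl))

print(f"total effect  (expected about 0.3 + 0.5 * 0.4 = 0.5): {total:.3f}")
print(f"direct effect (expected about 0.3): {direct:.3f}")
```

The adjusted estimate only recovers the direct effect here because the simulation has no unmeasured common cause of CHL and MI.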

Example: Association and cause, basics. Collider variable: a variable with two parents on the same path. Adjusting for e.g. age among those with erectile dysfunction opens a path between CCI and alcoholism, and induces spurious correlations and bias. This is also true if you condition on any child of the collider. Conditioning on a collider is considered a rudimentary analytical mistake.
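Collider bias is easy to reproduce in a simulation. The sketch below does not model the clinical example on the slide; it uses two hypothetical, independent causes `a` and `b` of a collider `k`, with made-up effect sizes, and compares the association between `a` and `b` before and after restricting on `k`.

```python
import random


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((p - mx) * (q - my) for p, q in zip(x, y))
    vx = sum((p - mx) ** 2 for p in x)
    vy = sum((q - my) ** 2 for q in y)
    return cov / (vx * vy) ** 0.5


rng = random.Random(3)  # fixed seed so the run is repeatable
n = 20000

# Assumed structure: a -> k <- b, with a and b generated independently.
a = [rng.gauss(0, 1) for _ in range(n)]
b = [rng.gauss(0, 1) for _ in range(n)]
k = [x + y + rng.gauss(0, 1) for x, y in zip(a, b)]

# Without conditioning the path a -> k <- b is closed.
overall = pearson(a, b)

# Restricting to high values of the collider opens the path.
selected = [(x, y) for x, y, z in zip(a, b, k) if z > 1.0]
restricted = pearson([x for x, _ in selected], [y for _, y in selected])

print(f"correlation a-b, everyone: {overall:.3f}")
print(f"correlation a-b, restricted to k > 1: {restricted:.3f}")
```

In the full sample the two causes are unrelated, but among those selected on the collider a negative correlation appears even though neither variable affects the other.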

Four simple rules (from Stigum): 1. A causal path = all arrows in the same direction, e.g. E → D (open path). 2. A non-causal path = arrows in different directions: a) through a confounder C (open path); b) through a collider K (closed path). 3. Conditioning on a non-collider closes the path: [M] or [C]. 4. Conditioning on a collider (or a descendant of it) opens the path: [K] (= bias).
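The four rules can be turned into a short path checker. The sketch below is an illustration with hypothetical function names, not a replacement for DAGitty: it decides whether one given path is open for a given conditioning set. The example DAG is an assumed reading of the statin exercise later in this transcript (E = statin, C = cholesterol, U = lifestyle, D = CHD).

```python
# Assumed arrows: statin -> CHD, statin -> cholesterol -> CHD,
# lifestyle -> cholesterol, lifestyle -> CHD.
EDGES = [("E", "D"), ("E", "C"), ("C", "D"), ("U", "C"), ("U", "D")]


def descendants(node, edges):
    """All nodes reachable from `node` by following arrows forwards."""
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        for a, b in edges:
            if a == current and b not in found:
                found.add(b)
                stack.append(b)
    return found


def is_open(path, edges, conditioned=()):
    """Apply the rules to every interior node of one path."""
    conditioned = set(conditioned)
    for prev, node, nxt in zip(path, path[1:], path[2:]):
        is_collider = (prev, node) in edges and (nxt, node) in edges
        if is_collider:
            # A collider blocks the path unless it, or a descendant, is conditioned on.
            family = {node} | descendants(node, edges)
            if not (family & conditioned):
                return False
        elif node in conditioned:
            # Conditioning on a non-collider closes the path.
            return False
    return True


for path in (["E", "D"], ["E", "C", "D"], ["E", "C", "U", "D"]):
    print(path, "unadjusted:", is_open(path, EDGES),
          "| adjusted for C:", is_open(path, EDGES, {"C"}))
```

Under the assumed arrows, adjusting for C closes the causal path through cholesterol and opens the non-causal path through lifestyle, which matches the collider stratification bias described in the statin exercise.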

DAG example & comments (example from Stigum; 2 min): 1. Is the total effect of E on D biased? 2. Should we adjust for C? 3. What happens if C also has a direct effect on D? 4. Is it a problem if U is unmeasured?

DAG example & comments: 1. Is the total effect of E on D biased? 2. Should we adjust for C? 3. What happens if C also has a direct effect on D?

Unadjusted:

| Path | Type | Status | Consequence |
|---|---|---|---|
| E D | Causal | Open | |
| E C U D | Non-causal | Open | Bias |
| E C D | Non-causal | Open | Bias |

Adjusting for C:

| Path | Type | Status | Consequence |
|---|---|---|---|
| E D | Causal | Open | |
| E [C] U D | Non-causal | Closed | No bias |
| E [C] D | Non-causal | Closed | No bias |

DAG example & comments: 4. Is it a problem if U is unmeasured? The paths are the same as for questions 1 to 3: adjusting for C closes the path E [C] U D, so that path causes no bias even when U is unmeasured.

Example: Association and cause, more advanced concepts (X = exposure and Y = outcome in all examples; from the DAGitty learning module). Proxy confounders are covariates that are not themselves confounders, but lie "between" confounders and the exposure or outcome in a causal chain. A proxy confounder is a descendant of a confounder and an ancestor of either the exposure or the outcome, but not both; otherwise it would be a confounder. Whether to adjust for proxy confounders depends on whether you want to analyze the direct effect (adjust) or the total effect (do not adjust). Example: A and M are proxy confounders.

Example: Association and cause, more advanced concepts. A competing exposure is an ancestor of the outcome that is not related to the exposure: it is neither a confounder, nor a proxy confounder, nor a mediator. Including competing exposures in a regression model will not affect bias, but may improve precision.

Drawing DAGs: direction of the arrow? (From Stigum) Nodes: E = physical activity, D = diabetes 2, C = smoking. Does physical activity reduce smoking, or does smoking reduce physical activity? Maybe another variable, H = health consciousness, is causing both?

HC use and nasal carriage of S. aureus. Minimal sufficient adjustment sets for estimating the direct effect of HC on carriage of S. aureus: age, alcohol use, BMI, HbA1c, physical activity, smoking, snuff use, social network, vit. D levels.

Exercise: Tea and depression (example from Stigum; 5 minutes). Nodes: E = tea, D = depression, C = caffeine, O = coffee. 1. Write the paths. 2. You want the total effect of tea on depression. What would you adjust for? 3. You want the direct effect of tea on depression. What would you adjust for? 4. Is caffeine a mediator or a confounder?

Exercise (direct & indirect effects, intermediate variables): Tea and depression. 1. See table. 2. Total effect: adjust for O. 3. Direct effect: adjust for C & O. 4. The status of a variable is defined by its path. Caffeine is both a mediator and a proxy confounder (the proportion of caffeine coming from coffee).

| Path | Type | Status | Consequence |
|---|---|---|---|
| E → D | Causal | Open | No bias |
| E → C → D | Causal | Open | No bias |
| E ← O → C → D | Non-causal | Open | Bias |

Exercise: Statin use and CHD (example from Stigum; 5 minutes available). Nodes: E = statin, D = CHD, C = cholesterol, U = lifestyle. 1. Write the paths. 2. You want the total effect of statin on CHD. What would you adjust for? 3. If lifestyle is unmeasured, can we estimate the direct effect of statin on CHD (not mediated through cholesterol)? 4. Is cholesterol a mediator or a collider?

Exercise (direct & total effect): Statin and CHD (example of collider stratification bias). 1. See tables. 2. Total effect: no adjustments. 3. Direct effect: impossible. 4. C is an intermediate variable in path 2 and a collider in path 3.

Unadjusted:

| # | Path | Type | Status | Consequence |
|---|---|---|---|---|
| 1 | E → D | Causal | Open | No bias |
| 2 | E → C → D | Causal | Open | No bias |
| 3 | E → C ← U → D | Non-causal | Closed | No bias |

Adjusting on C:

| # | Path | Type | Status | Consequence |
|---|---|---|---|---|
| 1 | E → D | Causal | Open | No bias |
| 2 | E → [C] → D | Causal | Closed | Bias (total effect) |
| 3 | E → [C] ← U → D | Non-causal | Open | Bias (direct effect) |

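Summary on total and direct effects: In a diagram with exposure E, mediator M, outcome D and unmeasured variables U1, U2 and U3, estimating the total effect requires no unmeasured U1 and no unmeasured U2. Estimating both direct and total effects additionally requires no unmeasured U3. Estimating a direct effect increases complexity and requires more prior assumptions than a total-effect model.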

Exercise: Diabetes and fractures (from Stigum; 5 minutes). 1. Draw the paths. 2. Is B a collider? 3. Estimate the total effect of E on fractures. What should be adjusted for? 4. What happens if P has an effect on V?

Exercise (confounders, colliders & mediators): Diabetes and fractures. 1. See table. 2. No, there is no single path where B is a collider. 3. Adjust for V and P. 4. Already adjusted for V.

Unconditional:

| # | Path | Type | Status |
|---|---|---|---|
| 1 | E → D | Causal | Open |
| 2 | E → F → D | Causal | Open |
| 3 | E → B → D | Causal | Open |
| 4 | E ← V → B → D | Non-causal | Open |
| 5 | E ← P → B → D | Non-causal | Open |

Paths 2 and 3 run through mediators; paths 4 and 5 run through confounders.

Exercise: Survivor bias (example from Stigum; 5 minutes). We want to study the effect of exposure early in life (E) on later disease (D) among survivors (S). Early exposure decreases survival. A risk factor (R) increases later disease (D) and reduces survival (S). Only survivors are available for analysis. 1. Draw the DAG. 2. What is the effect of adjusting on survivors? 3. Is it possible to give a non-biased estimate of the effect of E on D?

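Exercise: Survivor bias. 1. See figure: E → S (-), R → S (-), R → D (+), E → D. 2. See table: bias. 3. Yes: adjust for R.

Conditioning on S:

| Path | Type | Status | Consequence |
|---|---|---|---|
| E → D | Causal | Open | No bias |
| E → [S] ← R → D | Non-causal | Open | Bias |

Conditioning on S and R:

| Path | Type | Status | Consequence |
|---|---|---|---|
| E → D | Causal | Open | No bias |
| E → [S] ← [R] → D | Non-causal | Closed | No bias |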

Overadjustment: There is no consistent definition of overadjustment. The Dictionary of Epidemiology: "Statistical adjustment of an excessive number of variables .. It can obscure a true effect or create an apparent effect when none exists." Rothman & Greenland: "Intermediate variables, if controlled in an analysis, would usually bias results towards the null. Such control of an intermediate may be viewed as a form of overadjustment."

Overadjustment & unnecessary adjustment (Schisterman et al. 2009): Causal diagrams can distinguish overadjustment bias from confounding, selection bias and unnecessary adjustment. Definitions (from Schisterman et al.): Overadjustment bias = control for a mediator (or a descending proxy for a mediator) on a causal path from exposure to outcome. Unnecessary adjustment = control for a variable whose control does not affect the expectation of the estimate of the total causal effect between exposure and outcome (but may affect precision).

Overadjustment bias (Schisterman et al. 2009): The simplest form of overadjustment bias, E → [M] → D. E = prepregnancy BMI, M = triglycerides, D = preeclampsia. In this scenario you can estimate the total effect of E on D using common regression techniques by ignoring M (M = mediator). However, adjusting for M can provide an estimate of the direct effect of E on D under certain assumptions.

Overadjustment bias (Schisterman et al. 2009): Another example of overadjustment bias, with nodes E, U, D and [M]. E = smoking; U = abnormality of the endometrium (typically unmeasured); M = prior history of spontaneous abortion (descending proxy of U, or an event caused by U); D = current spontaneous abortion. In this scenario you can still estimate the total effect of E on D using common regression techniques by ignoring M. However, adjusting for M cannot provide an estimate of the direct effect of E on D without bias: it leaves a partially open path from E through U to D.

Overadjustment bias (Schisterman et al. 2009): Another example of overadjustment bias, with nodes E, U, D and [M]. E = smoking; U = abnormality of the endometrium (typically unmeasured); M = prior history of spontaneous abortion (descending proxy of U, or an event caused by U); D = current spontaneous abortion. In this scenario, conditioning on M will not block the path from E to U to D (no bias from M when it is an ascending proxy of U).

Overadjustment bias (Schisterman et al. 2009): Generalization of the previous DAG #2. It illustrates a general problem with control of variables affected by exposure, such as U and M. Nodes: E, U, D, M, V. E = smoking; U = abnormality of the endometrium (typically unmeasured); M = prior history of spontaneous abortion (descending proxy of U); D = current spontaneous abortion; V = unmeasured common cause of M and D. V causes additional bias in the association between E and D within levels of M: adjusting for the descending proxy M will cause collider-stratification bias.

Overadjustment bias (Schisterman et al. 2009): Maternal smoking and neonatal mortality. Nodes: E, U, D, M, V. E = maternal smoking in pregnancy; U = unmeasured fetal development during pregnancy; M = birth weight (descending proxy of U); D = neonatal mortality; V = unmeasured common cause of U and D.

Overadjustment bias (Schisterman et al. 2009): Maternal smoking and neonatal mortality, same diagram and definitions as on the previous slide. Including M in the model would not be overadjustment.

The effect of maternal smoking on neonatal mortality (Schisterman et al. 2009): Including 10,035,444 live births in the USA from 1999-2001. Unadjusted risk ratio for the association between maternal smoking and neonatal mortality: 2.49 (95% CI 2.41-2.56). Adjusted for birth weight: 2.03 (95% CI 1.97-2.09). This difference is probably because smoking causes changes in U that affect birth weight and neonatal mortality separately.

Unnecessary adjustment (Schisterman et al. 2009): Unnecessary adjustment occurs in 4 situations. C1: a variable outside the system of interest. C2: a variable that causes the exposure only. C3: a descendant of E not on the causal pathway. C4 & C5: a cause or a descendant of the outcome alone. The result of adjusting for such variables: the total effect of exposure on outcome remains unchanged.

Summing up so far: Data-driven analyses of observational data are not enough: we need (causal) information from outside the data. DAGs visualize this information and guide the planning of a study and its analysis. DAGs visualize the concepts of confounding, mediation and colliding, and highlight possible adjustment strategies. They increase transparency!

Selection bias: Common consequence: the association between exposure and outcome among those selected for analysis differs from the association among those eligible. Visualizing selection bias: do the heterogeneous types of selection bias share a common underlying causal structure?

Selection bias, concept 1: Assume the selected are different from the unselected. Prevalence (D): the old have a higher prevalence than the young, and the old respond less to the survey (= selection). Selection bias: prevalence is underestimated. Effect (E → D): the old have a lower effect of E than the young, and the old respond less to the survey. Selection bias: the effect is overestimated.

Selection bias (from Stigum), concept 1: Assume the selected are different from the unselected. Diagram: smoke → CHD, with age and selection (S). Path: smoke → CHD; type: causal; status: open. Normally, the selection variables are unknown.

| Age | Population share | Population RR (smoke) | Selected share | Selected RR (smoke) |
|---|---|---|---|---|
| Young | 50 % | 4.0 | 75 % | 4.0 |
| Old | 50 % | 2.0 | 25 % | 2.0 |
| All | | 3.0 | | 3.5 |

Properties: needs a smoke-age interaction; cannot be adjusted for (but stratum effects are OK); true RR = weighted average of stratum effects; RR stays in the natural range (2.0-4.0); scale dependent (linear vs. multiplicative model).
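The overall risk ratios in this example follow from the slide's statement that the RR is a weighted average of the stratum effects. The short sketch below reproduces that arithmetic, taking the age shares as the weights, which is the simplification the slide appears to use; the function name is hypothetical.

```python
def weighted_rr(shares, stratum_rr):
    """Weighted average of stratum-specific risk ratios, weights = stratum shares."""
    return sum(shares[age] * stratum_rr[age] for age in stratum_rr)


# Stratum-specific risk ratios for smoking, as given on the slide.
stratum_rr = {"young": 4.0, "old": 2.0}

# Age distribution in the population and among those selected.
population = {"young": 0.50, "old": 0.50}
selected = {"young": 0.75, "old": 0.25}

rr_population = weighted_rr(population, stratum_rr)
rr_selected = weighted_rr(selected, stratum_rr)

print(f"population RR: {rr_population}")  # 3.0
print(f"selected RR:   {rr_selected}")    # 3.5
```

Because the selected sample over-represents the young, who have the larger stratum effect, the overall RR moves from 3.0 to 3.5 while both stratum effects stay unchanged.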

Selection bias, concept 2: Distorted E-D distributions. In DAG terminology: collider bias. In words: selection by sex and/or age gives a distorted sex-age distribution. The old have more disease and men are more exposed, so the E-D distribution is distorted.