Understanding Concurrency in Computer Science

Concurrency in computer science involves running multiple threads or processes simultaneously, providing responsiveness, managing I/O devices, and improving performance by utilizing multiprocessors. This concept allows programs to handle tasks more efficiently and effectively through parallel execution. The comparison between threads and processes highlights their similarities in logical control flow and differences in data sharing and cost efficiency.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Lecture 21: Concurrency CS 105

Why Concurrent Programs? Program Structure: expressing logically concurrent programs Responsiveness: shifting work to run in the background 1.2 1.06 Elapsed time (s) 1 0.8 0.6 0.540.28 0.3 0.29 0.4 0.2 0 1 2 Threads 4 8 16 Responsiveness: managing I/O devices Performance: exploiting multiprocessors

Traditional View of a Process Process = process context + (virtual) memory state Virtual Memory Process Control Block Program context: Data registers Stack pointer (rsp) Condition codes Program counter (rip) Kernel context: VM structures File table brk pointer Stack rsp brk Heap Data rip Code 0

Alternate View of a Process Process = thread + other state Thread (main thread) Other data brk Heap Stack Data rsp Code Thread context: Data registers Stack pointer (rsp) Condition codes Program counter (rip) 0 Kernel context: VM structures File table brk pointer

A Process With Multiple Threads Multiple threads can be associated with a process Each thread has its own logical control flow Each thread has its own stack for local variables Each thread has its own thread id (TID) Each thread shares the same code, data, and kernel context Thread 1 (main thread) Thread 2 (peer thread) Shared data brk Heap Stack 1 Stack 2 Data rsp rsp Code Thread 1 context: Data registers Stack pointer Condition codes Program counter Thread 2 context: Data registers Stack pointer Condition codes Program counter 0 Kernel context: VM structures File table brk pointer

Threads vs. Processes How threads and processes are similar Each has its own logical control flow Each can run concurrently with others (possibly on different cores) Each is scheduled and context switched How threads and processes are different Threads share all code and data (except local stacks) Processes (typically) do not Threads are somewhat less expensive than processes Thread control (creating and reaping) is half as expensive as process control ~20K cycles to create and reap a process ~10K cycles (or less) to create and reap a thread Thread context switches are less expensive (e.g., don't flush TLB)

Logical View of Threads Threads associated with process form a pool of peers Unlike processes which form a tree hierarchy Process hierarchy Threads associated with process foo P0 T2 T4 T1 P1 shared code, data and kernel context sh sh sh T3 T5 foo bar

Posix Threads Interface C (Pthreads) Python (threading) Creating and reaping threads pthread_create() pthread_join() Determining your thread ID pthread_self() Terminating threads pthread_cancel() pthread_exit() exit() [terminates all threads] RET [terminates current thread] Creating and reaping threads Thread() thread.join() Determining your thread ID thread.get_ident() Terminating threads thread.exit() RET [terminates current thread]

The Pthreads "hello, world" Program /* * hello.c - Pthreads "hello, world" program */ #include "csapp.h" void *thread(void *vargp); Thread attributes (usually NULL) Thread ID int main() { pthread_t tid; Pthread_create(&tid, NULL, thread, NULL); Pthread_join(tid, NULL); exit(0); } Thread routine Thread arguments (void *p) hello.c Return value (void **p) void *thread(void *vargp) /* thread routine */ { printf("Hello, world!\n"); return NULL; } hello.c

11 Example Program to Illustrate Sharing void *thread(void *vargp) { long myid = (long)vargp; static int cnt = 0; char **ptr; /* global var */ int main() { long i; pthread_t tid; char *msgs[2] = { "Hello from foo", "Hello from bar" }; printf("[%ld]: %s (cnt=%d)\n", myid, ptr[myid], ++cnt); return NULL; } Peer threads reference main thread s stack indirectly through global ptr variable ptr = msgs; for (i = 0; i < 2; i++) Pthread_create(&tid, NULL, thread, (void *)i); Pthread_exit(NULL); } sharing.c

12 Mapping Variable Instances to Memory Global variables Def: Variable declared outside of a function Virtual memory contains exactly one instance of any global variable Local variables Def: Variable declared inside function without static attribute Each thread stack contains one instance of each local variable Local static variables Def: Variable declared inside function with the static attribute Virtual memory contains exactly one instance of any local static variable.

13 Mapping Variable Instances to Memory Global var: 1 instance (ptr [data]) Local vars: 1 instance (i.m, msgs.m) char **ptr; /* global var */ int main(){ long i; pthread_t tid; char *msgs[2] = {"Hello from foo", "Hello from bar"}; ptr = msgs; for (int i = 0; i < 2; i++) Pthread_create(&tid, NULL, thread, (void *)i); Pthread_exit(NULL); } Local var: 2 instances ( myid.p0 [peer thread 0 s stack], myid.p1 [peer thread 1 s stack] ) Local static var: 1 instance (cnt [data]) void *thread(void *vargp){ long myid = (long)vargp; static int cnt = 0; printf("[%ld]: %s (cnt=%d)\n", myid, ptr[myid], ++cnt); return NULL; }

15 Exercise 1: Shared Variables char **ptr; /* global var */ Which variables are shared? int main(){ long i; pthread_t tid; char *msgs[2] = {"Hello from foo", "Hello from bar"}; ptr = msgs; for (int i = 0; i < 2; i++) Pthread_create(&tid, NULL, thread, (void *)i); Pthread_exit(NULL); } ptr cnt i.main msgs.main myid.thread0 myid.thread1 void *thread(void *vargp){ long myid = (long)vargp; static int cnt = 0; printf("[%ld]: %s (cnt=%d)\n", myid, ptr[myid], ++cnt); return NULL; }

16 Exercise 1: Shared Variables Which variables are shared? A variable x is shared iff multiple threads reference at least one instance of x. Variable instance Referenced by main thread? Referenced by peer thread 0? Referenced by peer thread 1? yes no yes yes no no yes yes no yes yes no yes yes no yes no yes ptr cnt i.main msgs.main myid.thread0 myid.thread1 ptr, cnt, and msgs are shared i and myid are not shared

17 Why not Concurrent Programs? /* Thread routine */ void *thread(void *vargp){ long i, niters; niters = *((long *)vargp); for (i = 0; i < niters; i++){ cnt++; } /* Global shared variable */ volatile long cnt = 0; /* Counter */ int main(int argc, char **argv){ long niters; pthread_t tid1, tid2; niters = atoi(argv[1]); Pthread_create(&tid1, NULL, thread, &niters); Pthread_create(&tid2, NULL, thread, &niters); Pthread_join(tid1, NULL); Pthread_join(tid2, NULL); return NULL; } linux> ./badcnt 10000 OK cnt=20000 linux> ./badcnt 10000 BOOM! cnt=13051 linux> /* Check result */ if (cnt != (2 * niters)) printf("BOOM! cnt=%ld\n", cnt); else printf("OK cnt=%ld\n", cnt); exit(0); }

18 Assembly Code for Counter Loop C code for counter loop in thread i for (i = 0; i < niters; i++) cnt++; Asm code for thread i movq (%rdi), %rcx testq %rcx,%rcx jle .L2 movl $0, %eax .L3: movq cnt(%rip),%rdx addq $1, %rdx movq %rdx, cnt(%rip) addq $1, %rax cmpq %rcx, %rax jne .L3 .L2: Hi: Head Li : Load cnt Ui : Update cnt Si : Store cnt Ti : Tail

Race conditions A race condition is a timing-dependent error involving shared state whether the error occurs depends on thread schedule program execution/schedule can be non-deterministic compilers and processors can re-order instructions

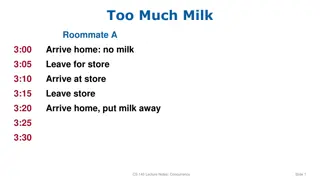

A concrete example You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Liveness: if you are out of milk, someone buys milk Safety: you never have more than one quart of milk Algorithm 1: Algorithm 1: Look in fridge. If out of milk: go to store, buy milk, go home put milk in fridge if (milk == 0) { milk++; } // no milk // buy milk

A problematic schedule You Your Roommate 3:00 3:05 3:10 3:15 3:20 fridge Look in fridge; out of milk Leave for store Arrive at store Buy milk Arrive home; put milk in 3:10 3:15 3:20 3:25 3:30 fridge Look in fridge; out of milk Leave for store Arrive at store Buy milk Arrive home; put milk in Safety violation: You have too much milk and it spoils

Solution 1: Leave a note You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Algorithm 2: if (milk == 0) { if (note == 0) { // no note note = 1; milk++; note = 0; } } // no milk // leave note // buy milk // remove note Safety violation: you've introduced a Heisenbug!

Solution 2: Leave note before check note You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Algorithm 3: note1 = 1 if (note2 == 0) { // no note from if (milk == 0) {// no milk milk++; // buy milk } } note1 = 0 roommate Liveness violation: No one buys milk

Solution 3: Keep checking for note You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Algorithm 4: note1 = 1 while (note2 == 1) { // wait until ; } if (milk == 0) { milk++; } note1 = 0 // no note // no milk // buy milk Liveness violation: You've introduced deadlock

Solution 4: Take turns You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Algorithm 5: note1 = 1 turn = 2 while (note2 == 1 and turn == 2){ ; } if (milk == 0) { milk++; } note1 = 0 // no milk // buy milk (probably) correct, but complicated and inefficient

Locks A lock (aka a mutex) is a synchronization primitive that provides mutual exclusion. When one thread holds a lock, no other thread can hold it. a lock can be in one of two states: locked or unlocked a lock is initially unlocked function acquire(&lock) waits until the lock is unlocked, then atomically sets it to locked function release(&lock) sets the lock to unlocked

Solution 5: use a lock You and your roommate share a refrigerator. Being good roommates, you both try to make sure that the refrigerator is always stocked with milk. Algorithm 6: acquire(&lock) if (milk == 0) { milk++; } release(&lock) // no milk // buy milk Correct!

Atomic Operations Solution: hardware primitives to support synchronization A machine instruction that (atomically!) reads and updates a memory location Example: xchgsrc, dest one instruction semantics: TEMP DEST; DEST SRC; SRC TEMP;

Spinlocks acquire: mov $1, eax xchg eax, (rdi) test eax, eax jnz acquire ret ; Set EAX to 1 ; Atomically swap EAX w/lock val ; check if EAX is 0 (lock unlocked) ; if was locked, loop ; lock has been acquired, return release: xor eax, eax xchg eax, (rdi) ret ; Set EAX to 0 ; Atomically swap EAX w/ lock val ; lock has been released, return

Programming with Locks C (pthreads) Python (threading) Defines class Lock Defines lock type pthread_mutex_t functions to create/destroy locks: int pthread_mutex_init(&lock, attr); int pthread_mutex_destroy(&lock); constructor to create locks: Lock() destroyed by garbage collector functions to aquire/release lock: lock.acquire() lock.release() functions to acquire/release lock: int pthread_mutex_lock(&lock); int pthread_mutex_unlock(&lock);

Exercise 2: Locks /* Thread routine */ void *thread(void *vargp) { long i, niters = *((long *)vargp); for (i = 0; i < niters; i++){ cnt++; } /* Global shared variable */ volatile long cnt = 0; /* Counter */ int main(int argc, char **argv) { long niters; pthread_t tid1, tid2; niters = atoi(argv[1]); Pthread_create(&tid1, NULL, thread, &niters); Pthread_create(&tid2, NULL, thread, &niters); Pthread_join(tid1, NULL); Pthread_join(tid2, NULL); return NULL; } TODO: Modify this example to guarantee correctness /* Check result */ if (cnt != (2 * niters)) printf("BOOM! cnt=%ld\n", cnt); else printf("OK cnt=%ld\n", cnt); exit(0); }

Problems with Locks 1. Locks are slow threads that fail to acquire a lock on the first attempt must "spin", which wastes CPU cycles threads get scheduled and de-scheduled while the lock is still locked 2. Using locks correctly is hard hard to ensure all race conditions are eliminated easy to introduce synchronization bugs (deadlock, livelock)

Better Synchronization Primitives Semaphores stateful synchronization primitive Condition variables event-based synchronization primitive

Exercise 3: Feedback 1. Rate how well you think this recorded lecture worked 1. Better than an in-person class 2. About as well as an in-person class 3. Less well than an in-person class, but you still learned something 4. Total waste of time, you didn't learn anything 2. How much time did you spend on this video (including exercises)? 3. Do you have any particular questions you d like me to address in this week s problem session? 4. Do you have any other comments or feedback?