Understanding Bayesian Learning in Machine Learning

Bayesian learning is a powerful approach in machine learning that involves combining data likelihood with prior knowledge to make decisions. It includes Bayesian classification, where the posterior probability of an output class given input data is calculated using Bayes Rule. Understanding Bayesian inference is crucial for shifting confidence from prior beliefs to data likelihood as more information is gathered.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

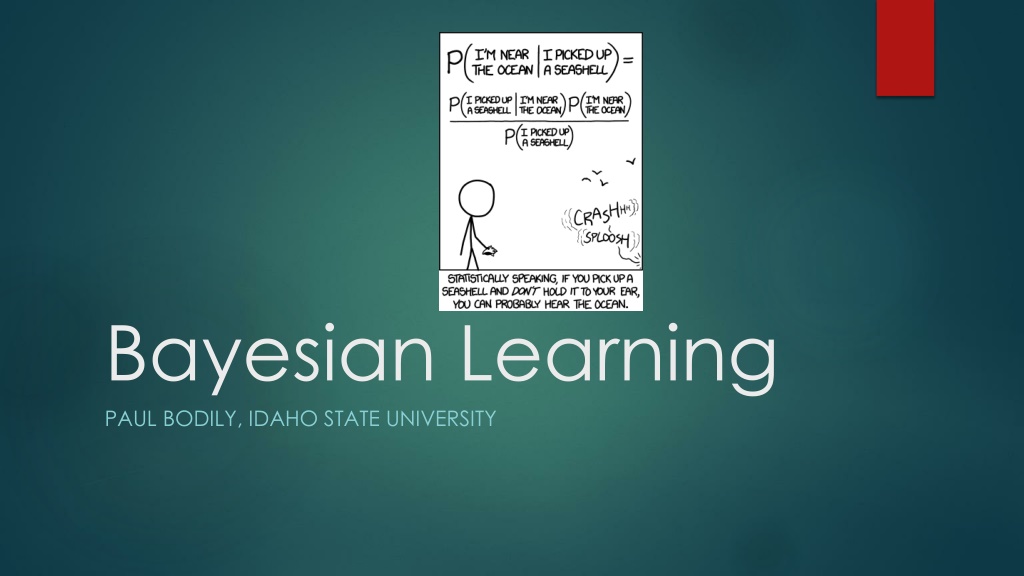

Bayesian Learning PAUL BODILY, IDAHO STATE UNIVERSITY

Bayesian Learning A powerful and growing approach in machine learning We use it in our own decision making all the time You hear a word which could equally be Thanks or Tanks , which would you go with? Combine Data likelihood and your prior knowledge Texting Suggestions on phone Spell checkers, etc. Many applications CS 4499/5599 - Bayesian Learning 2

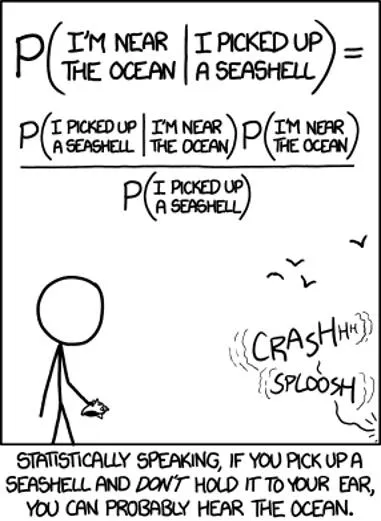

Bayesian Classification Bayes Rule: P(c|x) = P(x|c)P(c)/P(x) P(c|x) - Posterior probability of output class c given the input x The discriminative learning algorithms we have learned so far try to approximate this Seems like more work, but often calculating the right hand side probabilities can be relatively easy P(c) - Prior probability of class c How do we know? Just count up and get the probability for the Training Set Easy! P(x|c) - Probability likelihood of data vector x given that the output class is c If x is nominal we can just look at the training set and again count to see the probability of x given the output class c There are many ways to calculate this likelihood and we will discuss some P(x) - Prior probability of the data vector x This is just a normalizing term to get an actual probability. In practice we drop it because it is the same for each class c (i.e. independent), and we are just interested in which class c maximizes P(c|x).

Bayesian Classification Example Assume we have 100 examples in our Training Set with two output classes Democrat and Republican, and 80 of the examples are of class Democrat. Thus our priors are: P(Democrat) = .8 P(Republican) = .2 P(c|x) = P(x|c)P(c)/P(x) Bayes Rule Now we are given an input vector x From our training data we know the following likelihoods P(x|Democrat) = .3 P(x|Republican) = .4 What should our output be? Try all possible output classes and see which one maximizes the posterior using Bayes Rule: P(c|x) = P(x|c)P(c)/P(x) Drop P(x) since it is the same for both P(Democrat|x) = P(x| Democrat)P(Democrat) = .3 .8 = .24 P(Republican|x) = P(x| Republican)P(Republican) = .4 .2 = .08

Bayesian Inference starts with belief Bayesian vs. Frequentist Bayesian allows us to talk about probabilities/beliefs even when there is little data, because we can use the prior What is the probability of a nuclear plant meltdown? What is the probability that ISU will win the national championship? As the amount of data increases, Bayes shifts confidence from the prior to the likelihood Requires reasonable priors in order to be helpful We use priors all the time in our decision making Unknown coin: probability of heads? (over time?) CS 4499/5599 - Bayesian Learning 5

Another Look at Bayesian Classification P(c|x) = P(x|c)P(c)/P(x) P(c) - Prior probability of class c How do we know? Just count up and get the probability for the Training Set Easy! P(x|c) - Probability likelihood of data vector x given that the output class is c How do we really do this? If x is real valued? If x is nominal we can just look at the training set and again count to see the probability of x given the output class c but how often will they be the same? Which will also be the problem even if we bin real valued inputs For Na ve Bayes in the following we use v in place of c and use a1, ,anin place of x

Nave Bayes Classifier P(a1,...,an|vj)P(vj) P(a1,...,an) vMAP=argmax P(vj|a1,...,an) =argmax =argmax vj V P(a1,...,an|vj)P(vj) vj V vj V Given a training set, P(vj) is easy to calculate How about P(a1, ,an|vj)? Most cases would be either 0 or 1. Would require a huge training set to get reasonable values. Key "Na ve" leap: Assume conditional independence of the attributes (compare w/ chain rule) i i P(a1,...,an|vj)= P(ai|vj) vNB=argmax P(vj) P(ai|vj) vj V While conditional independence is not typically a reasonable assumption Low complexity simple approach, assumes nominal features for the moment - need only store all P(vj) and P(ai|vj) terms, easy to calculate and with only |attributes| |attribute values| |classes| terms there is often enough data to make the terms accurate at a 1st order level Effective for many large applications (Document classification, etc.) 17

Nave Bayes Homework Size (B, S) Color (R,G,B ) G Output (P,N) For the given training set: 1. Create a table of the statistics needed to do Na ve Bayes 2. What would be the output for a new instance which is Big and Red? 3. What is the Na ve Bayes value and the normalized probability for each output class (P or N) for this case of Big and Red? B N S R N S B P B R P B B N B G P S R N i vNB=argmax P(vj) P(ai|vj) vj V CS 478 - Homework Solutions 18

What do we need? Size (B, S) B Color (R,G,B) G Output (P,N) N S R N S B P B R P B B N B G P S R N i vNB=argmax P(vj) P(ai|vj) vj V CS 4499/5599 - Bayesian Learning 19

What do we need? P(P) P(N) Size (B, S) B Color (R,G,B) G Output (P,N) N P(Size=B|P) P(Size=S|P) S R N P(Size=B|N) S B P P(Size=S|N) B R P P(Color=R|P) B B N P(Color=G|P) B G P P(Color=B|P) S R N P(Color=R|N) P(Color=G|N) i vNB=argmax P(vj) P(ai|vj) P(Color=B|N) vj V CS 4499/5599 - Bayesian Learning 20

What do we need? What is our output for a new instance which is Big and Red? P(P) 3/7 P(N) 4/7 Size (B, S) Color (R,G,B ) G Output (P,N) P(Size=B|P) 2/3 vP = P(P)* P(B|P)*P(R|P) = 3/7*2/3*1/3= .0952 P(Size=S|P) 1/3 B N P(Size=B|N) 2/4 S R N P(Size=S|N) 2/4 S B P vN = P(N)* P(B|N)*P(R|N) = 4/7*2/4*2/4= .143 P(Color=R|P) 1/3 B R P P(Color=G|P) 1/3 B B N P(Color=B|P) 1/3 Normalized Probabilites: P= .0952 /(.0952 + .143) = .400 B G P P(Color=R|N) 2/4 S R N P(Color=G|N) 1/4 i vNB=argmax P(vj) P(ai|vj) P(Color=B|N) 1/4 N = .143 /(.0952+ .143) = .600 vj V CS 478 - Homework Solutions 21

Nave Bayes Homework Size (B, S) Color (R,G,B ) R Output (P,N) For the given training set: 1. Create a table of the statistics needed to do Na ve Bayes 2. What would be the output for a new instance which is Small and Blue? 3. What is the Na ve Bayes value and the normalized probability for each output class (P or N) for this case of Small and Blue? B P S B P S B N B R N B B P B G N S B P i vNB=argmax P(vj) P(ai|vj) vj V CS 478 - Homework Solutions 22

Getting actual probabilities Can normalize to get the actual na ve Bayes probability i i max vj VP(vj) P(ai|vj) P(vj) P(ai|vj) vj V CS 4499/5599 - Bayesian Learning 23

Dealing with Continuous Attributes Can discretize a continuous feature into bins thus changing it into a nominal feature and then gather statistics normally How many bins? - More bins is good, but need sufficient data to make statistically significant bins. Thus, base it on data available Could also assume data is Gaussian and compute the mean and variance for each feature given the output class, then each P(ai|vj) becomes ?(ai| vj, 2vj) CS 4499/5599 - Bayesian Learning 24

Use Smoothing for Infrequent Data Combinations Would if there are 0 or very few cases of a particular ai|vj (nc/n)? (nc is the number of instances with output vj where ai = attribute value c. n is the total number of instances with output vj ) Should usually allow every case at least some finite probability since it could occur in the test set, else the 0 terms will dominate the product (speech example) Replace nc/n with the Laplacian: nc+1 n +1/p p is a prior probability of the attribute value which is usually set to 1/(# of attribute values) for that attribute (thus 1/p is just the number of possible attribute values). Thus if nc/n is 0/10 and nc has three attribute values, the Laplacian would be 1/13. Another approach: m-estimate of probability: nc+ mp n + m As if augmented the observed set with m virtual examples distributed according to p. If m is set to 1/p then it is the Laplacian. If m is 0 then it defaults to nc/n. CS 4499/5599 - Bayesian Learning 25

Take-homes with NB No training per se, just gather the statistics from your data set and then apply the Na ve Bayes classification equation to any new instance Easier to have many attributes since not building a net, etc. and the amount of statistics gathered grows linearly with the number of attributes (# attributes # attribute values # classes) - Thus natural for applications like text classification which can easily be represented with huge numbers of input attributes. Used in many large real world applications

Text Classification Example A text classification approach Want P(class|document) - Use a "Bag of Words" approach order independence assumption (valid?) Variable length input of query document is fine Calculate P(word|class) for every word/token in the language and each output class based on the training data. Words that occur in testing but do not occur in the training data are ignored. Good empirical results. Can drop filler words (the, and, etc.) and words found less than z times in the training set. P(class|document) P(class|BagOfWords) = P(BagOfWords)*P(class)/P(document) //Bayes Rule P(class)* P(word|class) //assume word order independence // But BagofWords usually unique //and P(document) same for all classes // Thus Na ve Bayes CS 4499/5599 - Bayesian Learning 27