Scalable Causal Consistency for Wide-Area Storage with COPS

This paper delves into the importance of scalable causal consistency for wide-area storage with the COPS system. It explores desired properties such as availability, low latency, partition tolerance, and scalability within data centers. The document discusses the challenges of achieving consistency with ALPS, strong consistency, sequential consistency, and causality examples. Various systems and approaches for maintaining consistency are highlighted, emphasizing the significance of causality for programmers and users alike.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

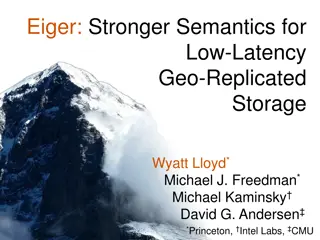

Dont Settle for Eventual: Scalable Causal Consistency for Wide-Area Storage with COPS Wyatt Lloyd* Michael J. Freedman* Michael Kaminsky David G. Andersen *Princeton, Intel Labs, CMU

Wide-Area Storage Stores: Status Updates Likes Comments Photos Friends List Stores: Posts +1s Comments Photos Circles Stores: Tweets Favorites Following List

Wide-Area Storage Serves Requests Quickly

Inside the Datacenter Web Tier Storage Tier A-F Remote DC G-L Web Tier Storage Tier A-F G-L M-R M-R S-Z S-Z

Desired Properties: ALPS Availability Always On Low Latency Partition Tolerance Scalability

Scalability Increase capacity and throughput in each datacenter A-Z A-L A-F A-C A-Z A-L A-F A-C M-Z G-L D-F M-Z G-L D-F M-R G-J M-R G-J S-Z K-L S-Z K-L M-O M-O P-S P-S T-V T-V W-Z W-Z

Desired Property: Consistency Restricts order/timing of operations Stronger consistency: Makes programming easier Makes user experience better

Consistency with ALPS Strong Impossible [Brewer00, GilbertLynch02] Sequential Impossible [LiptonSandberg88, AttiyaWelch94] Causal COPS Amazon Dynamo Voldemort LinkedIn Facebook/Apache Cassandra Eventual

System A L P S Consistency Scatter Strong PSI + Txn Walter ? Causal+ COPS Bayou Causal+ PNUTS Per-Key Seq. ? Dynamo Eventual

Causality By Example Causality ( ) Remove boss from friends group Friends Boss Thread-of-Execution Gets-From Transitivity Post to friends: Time for a new job! New Job! Friend reads post

Causality Is Useful For Programmers: For Users: Friends Boss Photo Upload Add to album New Job! Employment Integrity Referential Integrity

Conflicts in Causal K=1 K=1 K=1 K=2 K=2 K=2

Conflicts in Causal Causal + Conflict Handling = Causal+ K=2 K=3 K=2 K=3 K=2 K=3

Previous Causal+ Systems Bayou 94, TACT 00, PRACTI 06 Log-exchange based Log is single serialization point Implicitly captures and enforces causal order Limits scalability OR No cross-server causality

Scalability Key Idea Dependency metadata explicitly captures causality Distributed verifications replace single serialization Delay exposing replicated puts until all dependencies are satisfied in the datacenter

COPS All Data Causal+ Replication Local Datacenter All Data Client Library All Data

Get Local Datacenter Client Library get

Put put + put after = ordering metadata ? Local Datacenter ? Client Library put K:V Replication Q put after

Dependencies Dependencies are explicit metadata on values Library tracks and attaches them to put_afters

Dependencies Dependencies are explicit metadata on values Library tracks and attaches them to put_afters Client 1 put_after(Key,Val,deps) put(Key, Val) deps . . . Kversion version (Thread-Of-Execution Rule)

Dependencies Dependencies are explicit metadata on values Library tracks and attaches them to put_afters Client 2 get(K) get(K) value value,version,deps' deps . . . Kversion L337 M195 deps' L337 M195 (Gets-From Rule) (Transitivity Rule)

Causal+ Replication put_after(K,V,deps) K:V,deps Replication Q put after

Causal+ Replication dep_check(L337) put_after(K,V,deps) K:V,deps deps L337 M195 Exposing values after dep_checks return ensures causal+

Basic COPS Summary Serve operations locally, replicate in background Always On Partition keyspace onto many nodes Scalability Control replication with dependencies Causal+ Consistency

Gets Arent Enough My Remote Datacenter You re Fired!! Operations Remote Progress Boss Remote Progress Boss Boss Boss New Job! Remote Progress Portugal! Portugal! New Job! Boss New Job!

Gets Arent Enough My Remote Datacenter You re Fired!! Operations Boss Boss Boss Boss Boss Boss New Job! Remote Progress Portugal! Portugal! New Job! New Job! Portugal! Boss New Job! Portugal! Remote Progress Boss Remote Progress

Get Transactions Provide consistent view of multiple keys Snapshot of visible values Keys can be spread across many servers Takes at most 2 parallel rounds of gets Low Latency No locks, no blocking

Get Transactions My Remote Datacenter Operations Remote Progress Boss Could Get Remote Progress Boss Boss Boss Boss Boss Boss Portugal! New Job! Remote Progress Boss Portugal! Portugal! Portugal! New Job! New Job! Portugal! Boss Portugal! New Job! Remote Progress Boss Portugal! Never Boss Boss Boss Remote Progress New Job! Portugal!

System So Far ALPS and Causal+, but Proliferation of dependencies reduces efficiency Results in lots of metadata Requires lots of verification We need to reduce metadata and dep_checks Nearest dependencies Dependency garbage collection

Many Dependencies Dependencies grow with client lifetime Put Put Get Get Put Put

Nearest Dependencies Transitively capture all ordering constraints

The Nearest Are Few Transitively capture all ordering constraints

The Nearest Are Few Only check nearest when replicating COPS only tracks nearest COPS-GT tracks non-nearest for transactions Dependency garbage collection tames metadata in COPS-GT

Extended COPS Summary Get transactions Provide consistent view of multiple keys Nearest Dependencies Reduce number of dep_checks Reduce metadata in COPS

Evaluation Questions Overhead of get transactions? Compare to previous causal+ systems? Scale?

Experimental Setup Local Datacenter Clients COPS Servers Remote DC COPS N N N

COPS & COPS-GT Competitive for Expected Workloads All Put Workload 4 Servers / Datacenter Max Throughput (Kops/sec) 100 80 Low per-client write rates expected High per-client write rates result in 1000s of dependencies 60 40 COPS 20 COPS-GT 0 1 10 100 1000 People tweeting 1000 times/sec People tweeting 1 time/sec Average Inter-Op Delay (ms)

COPS & COPS-GT Competitive for Expected Workloads Varied Workloads 4 Servers / Datacenter Max Throughput (Kops/sec) 100 80 60 40 COPS 20 COPS-GT 0 1 10 100 1000 Expected Pathological Average Inter-Op Delay (ms) Workload

COPS Low Overhead vs. LOG COPS dependencies LOG 1 server per datacenter only LOG COPS COPS-GT COPS and LOG achieve very similar throughput Nearest dependencies mean very little metadata In this case dep_checks are function calls Normalized Throughput 1 0.8 0.6 0.4 0.2 0 Pathological High 1:16 Put:Get 1/128 Variance 16KB Values Expected Workload Inter-Op Delay

COPS Scales Out 320 Throughput (Kops) 160 80 40 20 1 2 4 8 16 COPS 1 2 4 8 16 COPS-GT LOG

Conclusion Novel Properties First ALPS and causal+ consistent system in COPS Lock free, low latency get transactions in COPS-GT Novel techniques Explicit dependency tracking and verification with decentralized replication Optimizations to reduce metadata and checks COPS achieves high throughput and scales out