Consistency Protocols in Distributed Systems

Today's lecture covers consistency protocols in distributed systems, focusing on primary-based protocols and replicated-write protocols. These protocols play a crucial role in ensuring consistency across multiple replicas. One example discussed is the Remote-Write Protocol, which enforces strict consistency by forwarding all write operations to a primary replica. The protocol ensures that read operations can be executed locally at each replica, while write operations are coordinated by the primary replica and propagated to other replicas for consistency. The session also includes announcements regarding upcoming project deadlines and the final exam schedule.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Distributed Systems CS 15-440 Replication Part III Lecture 24, November 06, 2022 Mohammad Hammoud

Today Last Session: Replication Part II Data- and client-centric consistency models Today s Session: Replication Part III Consistency protocols Announcements: PS5 is due today by midnight Project 4 is due on Wed Nov. 09 by midnight The final exam is on Wednesday Nov. 16 from 2:30PM to 5:30PM in Room 1190

Overview Last two lectures Motivation Consistency Models Data-Centric Consistency Models Client-Centric Consistency Models Consistency Protocols Today s lecture

Overview Motivation Consistency Models Data-Centric Consistency Models Client-Centric Consistency Models Consistency Protocols

Consistency Protocols A consistency protocol describes the implementation of a specific consistency model (e.g., strict consistency) We will study 2 types of consistency protocols: Primary-based Protocols One primary coordinator is elected to control replication across multiple replicas Replicated-write Protocols Multiple replicas coordinate to provide consistency guarantees

Consistency Protocols Replica Control Protocols Primary-Based Protocols Replicated-Write Protocols

Primary-Based Protocols In primary-based protocols, a simple centralized design is used to implement consistency models Each data-item xhas an associated primary replica The primary replica is responsible for coordinating write operations We will study one example of primary-based protocols that implements the Strict Consistency Model The Remote-Write Protocol

Remote-Write Protocol Two Rules: All write operations are forwarded to the primary replica Read operations are carried out locally at each replica Approach for write operations: Client connects to some replica RC If the client issues write operation to RC RC forwards the request to the primary replica RP, which Updates its local value Then forwards the update to other replicas Ri Other replicas Ri perform updates, and send ACKs back to RP After RP receives all ACKs, it informs RCthat the write operation was successful RC acknowledges the client, stating that the write operation was successful x+=5 Client 1 Primary Replica R2 R3 R1 x1=0 x1=5 x2=0 x2=5 x3=0 x3=5 Data-store

Remote-Write Protocol Discussion The Remote-Write Protocol Provides a simple way to implement strict consistency Guarantees that clients see always the most recent values However, latency is high in the Remote-Write Protocol The client blocks until all the replicas are updated In what scenarios would you use the Remote-Write protocol? Typically, for distributed databases and file systems in data-centers (i.e., in LAN settings) Replicas are placed on the same LAN to reduce latency

Consistency Protocols Consistency Protocols Primary-Based Protocols Replicated- Write Protocols Remote-Write Protocol

Replicated-Write Protocols In replicated-write protocols, updates can be carried out at multiple replicas We will study two examples of the replicated-write protocols Active Replication Protocol Clients write at any replica (no primary replicas) The altered replica will propagate updates to other replicas Quorum-Based Protocol A voting scheme is used

Active Replication Protocol Protocol: when a client writes at a replica, the replica will send the update to all other replicas Challenges with Active Replication Ordering of operations can differ leading to conflicts/inconsistencies So how to maintain consistent ordering? x+=2 x*=3 Client 1 Client 2 W(x) R(x)2 R(x)6 x+=2 R1 R(x)0 R(x)2 R2 W(x) R(x)2 R(x)6 R1 x*=3 R2 R3 R3 x1=0 x1=2 x1=6 x2=0 x2=2 x2=6 x3=0 x3=2 x3=6 Data-store

Centralized Active Replication Protocol A Possible Approach: Elect a centralized coordinator (let us call it sequencer (Seq)) When a client connects to a replica RC and issues a write operation RC forwards the update to Seq Seq assigns a sequence number to the update operation RC propagates the sequence number and the operation to other replicas Operations are carried out at all replicas in the order of the sequence numbers x-=2 x+=5 Client 1 Client 2 10 11 R1 R2 R3 Seq 11 10 x-=2 x+=5 Data-store

Replicated-Write Protocols In replicated-write protocols, updates can be carried out at multiple replicas We will study two examples of the replicated-write protocols Active Replication Protocol Clients write at any replica (no primary replicas) The replica will propagate updates to other replicas Quorum-Based Protocol A voting scheme is used

Quorum-Based Protocols Replicated writes can also be accomplished via using a votingscheme, originally proposed by Thomas (1979) then generalized by Gifford (1979) Basic Idea (Recap): Clients are required to request and acquire the permission of multiple servers before either reading or writing from or to a replicated data item Rules on reads and writes should be established Each replica is assigned a version number, which is incremented on each write Another protocol was proposed by Lamport in 1998 and referred to as Paxos

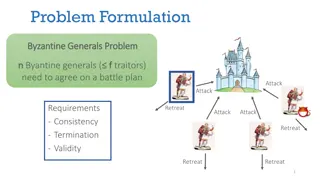

Assumptions in Paxos Paxos assumes asynchronous, non-Byzantine (more on this under fault-tolerance) model, in which: Processes: Operate at arbitrary speeds May fail by stopping, but may restart Since any process may fail after a value is chosen and then restart, a solution is impossible unless some information can be remembered (e.g., through logging) by a process that has failed and restarted Messages: May be lost, duplicated, delayed (and thus reordered), but not corrupted

Roles in Paxos Processes can take different roles: Client: Issues a request (e.g., write on a replicated file) to the distributed system and waits for a response Proposer (or a process bidding to become a coordinator/leader): Advocates for a Client and suggests values for consideration by Acceptors Acceptor (or a voter): Considers the values proposed by Proposers and renders an accept/reject decision Learner: Once a Client s request has been agreed upon by the Acceptors, the Learner can take action (e.g., execute the request and send a response to the Client)

Quorums in Paxos Any message sent to an Acceptor must be sent to a quorum of Acceptors consisting of more than half of all Acceptors (i.e., majority-- not unanimity) Any two quorums should have a nonempty intersection Common node acts as tie-breaker This helps avoid the split-brain problem (or a situation when Acceptors decisions are not in agreement) In a system with 2m+1 Acceptors, m Acceptors can fail and consensus can still be reached

Paxos Algorithm: Phase I Phase I The Proposer selects a unique sequence (or round) number n and sends a prepare(n) request to a quorum of Acceptors Step 1: Prepare Each acceptor does the following: Note that multiple processes can bid to become coordinators If n > (the sequence number of any previous promises or acceptances) It writes n to a stable storage, promising that it will never accept any future proposed number less than n It sends a promise(n, (N, U)) response, where N and U are the last sequence number and value it accepted so far (if any) Hence, how can each coordinator select a unique sequence number? Every process, P, can be assigned a unique IDP, between 0 and k 1, assuming Step 2: Promise a total of k processes P can select the smallest sequence number, s, that is larger than all sequence numbers seen thus far, so that s % k = IDP E.g., P will pick a sequence number of 23 for its next bid if IDP = 3, k = 5, and largest number seen = 20

Paxos Algorithm: Phase I Phase I The Proposer selects a unique sequence (or round) number n and sends a prepare(n) request to a quorum of Acceptors Step 1: Prepare Each Acceptor does the following: If n > (the sequence number of any of its previous promises or acceptances) It writes n to a stable storage, promising that it will never accept any future proposed number less than n It sends a promise(n, (N, U)) response, where N and U are the last sequence number and value it accepted so far (if any) Step 2: Promise

Example Proposer Acceptor Acceptor Acceptor Client request prepare(n) Quorum Size = 3, which is decided by the proposer promise(n, NULL) promise(n, NULL) promise(n, NULL)

Example Proposer Acceptor Acceptor Acceptor Client request Quorum Size = 2, which is the min acceptable quorum size in this example prepare(n) promise(n, NULL) promise(n, NULL)

Paxos Algorithm: Phase II Phase II If the Proposer receives promise responses from a quorum of Acceptors, it sends an accept(n, v) request to those Acceptors (v is the value of the highest-numbered proposal among the promise responses, or any value if no promise contained a proposal) Step 1: Accept Each acceptor does the following: If n >= the number of any previous promise It writes (n, v) to a stable storage, indicating that it accepts the proposal It sends an accepted(n, v) response Else It does not accept (it sends a NACK) Step 2: Accepted

Paxos Algorithm: Phase II Phase II If the Proposer receives promise responses from a quorum of Acceptors, it sends an accept(n, v) request to those Acceptors (v is the value of the highest-numbered proposal among the promise responses, or any value if no promise contained a proposal) Step 1: Accept Each Acceptor does the following: If n >= the number of any previous promise It writes (n, v) to a stable storage, indicating that it accepts the proposal It sends an accepted(n, v) response Else It does not accept (it sends a NACK) Step 2: Accepted

Example Proposer Acceptor Acceptor Acceptor Client request prepare(n) promise(n, NULL) promise(n, NULL) accept(n, v) accepted(n, v) accepted(n, v) But, an Acceptor can accept multiple concurrent proposals!

Example Proposer Proposer Acceptor Acceptor Acceptor prepare(1) promise(1, NULL) promise(1, NULL) prepare(2) promise(2, NULL) promise(2, NULL) accept(1, A) accepted(1, A) NAK(1) accept(2, B) accepted(2, B) accepted(2, B) But, what if before the blue Proposer sends its accept message, another Proposer (could be the green one again) submits a new proposal with a higher sequence number?

Example Proposer Proposer Acceptor Acceptor Acceptor prepare(1) promise(1, NULL) promise(1, NULL) prepare(2) promise(2, NULL) promise(2, NULL) accept(1, A) accepted(1, A) NAK(1) accept(2, B) accepted(2, B) accepted(2, B) The blue round will fail also!

Example Proposer Proposer Acceptor Acceptor Acceptor prepare(1) promise(1, NULL) promise(1, NULL) prepare(2) promise(2, NULL) promise(2, NULL) accept(1, A) accepted(1, A) NAK(1) accept(2, B) accepted(2, B) accepted(2, B) What if this keeps happening?

Example Proposer Proposer Acceptor Acceptor Acceptor prepare(1) promise(1, NULL) promise(1, NULL) prepare(2) promise(2, NULL) promise(2, NULL) accept(1, A) accepted(1, A) NAK(1) accept(2, B) accepted(2, B) accepted(2, B) Paxos will not commit until this scenario stops!

A Note on Liveness If two Proposers keep concurrently issuing proposals with increasing sequence numbers, none of them will succeed Hence, Paxos cannot guarantee liveness (i.e., cannot guarantee that a proposed value will be chosen within a finite time) Is there a way liveness can be guaranteed in Basic Paxos? Short Answer: No But: We can apply an optimization to potentially expedite (not guarantee) liveness in the presence of multiple concurrent Proposers

A Note on Liveness To expedite liveness: A distinguished Proposer can be selected as the only entity to try submitting proposals If this distinguished Proposer: Can communicate successfully with a majority of Acceptors And uses a sequence number that is greater than any number used already Then it will succeed in issuing a proposal that can be accepted, assuming enough of the system (Proposer, Acceptors, and network) is working properly Clearly, liveness remains impossible to guarantee in finite time since any component in the system could fail (e.g., a network partition can arise)

Possible Failures in Paxos Would a network partition impact Paxos scorrectness (NOT liveness)? No, due to the quorum mechanism What if an Acceptor fails? Case 1: The Acceptor is not a member of the Proposer s quorum No recovery is needed Case 2: The Acceptor is a member of the Proposer s quorum, but quorum size > majority of Acceptors No recovery is needed

Possible Failures in Paxos Would a network partition impact Paxos scorrectness (NOT liveness)? No, due to the quorum mechanism What if an Acceptor fails? Case 3: The Acceptor is a member of the Proposer s quorum and quorum size equals to the majority of Acceptors Sub-case 3.1: The Acceptor fails after accepting the proposal No recovery is needed, assuming the Proposer will receive (or has received already) its acceptance message Sub-case 3.2: The Acceptor fails before accepting the proposal Worst case: New quorum and round can be established

Possible Failures in Paxos What if a Proposer fails? Case 1: The Proposer fails after proposing a value, but before a consensus is reached New Proposer can take over Case 2: The Proposer fails after a consensus is reached, but before it gets to know about it Either its failure gets detected and a new round is launched Or, it recovers and starts a new round itself Case 3: The Proposer fails after a consensus is reached and after it gets to know about it (but before letting the Learner knowing) Either its failure gets detected and a new round is launched Or, it recovers and learns again from its stable storage that it has succeeded in its bidding

Next Lecture Fault-tolerance