Revolutionizing Game Development with Multi-Armed Bandit Integration

The rapid evolution of video games calls for innovative approaches to engage players effectively. By integrating Multi-Armed Bandit (MAB) algorithms, developers can optimize user happiness, satisfaction, and revenue by dynamically adjusting game elements. This method addresses challenges such as short attention spans, frustration, and player drop-off rates. Leveraging MAB in game development allows for tailored experiences that enhance player enjoyment and retention.

Presentation Transcript

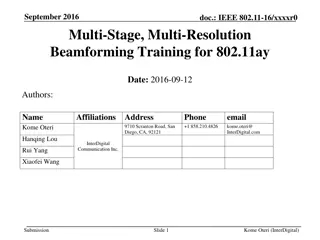

Agenda
- Problem scope and goals
- Game development trends
- Introduction to the multi-armed bandit (MAB)
- Integrating MAB into game development
- Project findings and results

Background
- Video games are developing rapidly.
- The current generation has a shorter attention span (thanks, technology!).
- Players easily give up or get frustrated when a game is too hard, repetitive, or boring.
- Challenge: develop engaging games.
- Approach: use MAB to optimize player happiness, since player satisfaction drives revenue.

Why Games? People play video games to:
- De-stress
- Pass the time
- Learn through edutainment (educational entertainment)
- Boost their self-esteem

Gaming Trends
- Technology is advancing rapidly, and gaming has become the norm.
- Smartphones are available to people of all ages.
- 32% of phone time is spent on games.
- Nintendo: from console to mobile.

Gaming Trends (continued)
- Offline games: hard to collect data and fix bugs. Glitches and bugs stay in the game, and developers pay for them.
- Online games: easy data collection and bug patches, plus bug fixes and downloadable content (DLC).
- Consumers have short attention spans (shorter than a goldfish's) and are picky; they complain if a game is bad.
- Solution?

Multi-Armed Bandit
- Origin: a gambler in a casino wants to maximize winnings by playing slot machines.
- The gambler must balance exploration and exploitation.
- Objective: given a set of K distinct arms, each with an unknown reward distribution, maximize the total reward collected.
- Example: 3 slot machines.
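
To make the slot-machine example concrete, here is a minimal Python sketch of a three-armed Bernoulli bandit. The class name `BernoulliBandit` and the win probabilities are made up for illustration; each pull pays 1 (a win) or 0 (a loss), and the player never sees the probabilities directly.

```python
import random


class BernoulliBandit:
    """A K-armed bandit where arm k pays 1 with probability probs[k], else 0.

    The payout probabilities are hypothetical; a player can only learn
    about them by pulling arms and observing rewards.
    """

    def __init__(self, probs, seed=None):
        self.probs = list(probs)
        self.rng = random.Random(seed)

    @property
    def k(self):
        # Number of arms (slot machines).
        return len(self.probs)

    def pull(self, arm):
        # Return 1 (win) with the arm's hidden probability, otherwise 0 (loss).
        return 1 if self.rng.random() < self.probs[arm] else 0


# Three slot machines with hidden, made-up win rates.
bandit = BernoulliBandit([0.2, 0.5, 0.35], seed=42)
total = sum(bandit.pull(1) for _ in range(100))  # wins from 100 pulls of machine 1
print(bandit.k, total)
```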

Multi-Armed Bandit
- Example: choosing a restaurant
- Arms: restaurants
- Reward: happiness
- Should I try a new place (explore) or stick with the best option so far (exploit)?

Multi-Armed Bandit
- Arms: the parameter choices, i.e., what you want to optimize.
- Rewards: the observed outcomes, i.e., what you want to maximize. A reward might be a quantifiable value or a measure of happiness.
- Regret (opportunity cost): the additional reward you would have earned by starting with the optimal choice, i.e., what you want to minimize.
- MAB algorithms aim to minimize regret.
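
One standard way to write regret, assuming arm $k$ has mean reward $\mu_k$, the best arm has mean $\mu^{*} = \max_k \mu_k$, $r_t$ is the reward observed at round $t$, and $N_k(T)$ counts how often arm $k$ was played in the first $T$ rounds (this notation is ours, not the slides'):

```latex
R_T = T\,\mu^{*} - \mathbb{E}\!\left[\sum_{t=1}^{T} r_t\right]
    = \sum_{k=1}^{K} \Delta_k \,\mathbb{E}\!\left[N_k(T)\right],
\qquad \Delta_k = \mu^{*} - \mu_k
```

For example, with hypothetical means $\mu = (0.2, 0.5, 0.35)$, always playing the first machine for $T = 100$ rounds gives $R_{100} = 100 \times (0.5 - 0.2) = 30$.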

Multi-Armed Bandit
- Bandit algorithm: basic idea
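
The basic idea can be sketched as a loop: choose an arm, observe its reward, and update that arm's running average. The sketch below is a hypothetical, policy-agnostic version; `choose_arm` and `pull` are placeholder callables you supply, and the running average uses the standard incremental-mean update so past rewards need not be stored.

```python
def update_estimate(count, mean, reward):
    """Incremental sample mean: return (new_count, new_mean) after one reward."""
    count += 1
    mean += (reward - mean) / count
    return count, mean


def run_bandit(choose_arm, pull, k, rounds):
    """Generic bandit loop: choose an arm, observe its reward, update its estimate."""
    counts = [0] * k
    means = [0.0] * k
    history = []
    for t in range(rounds):
        arm = choose_arm(counts, means, t)  # the policy decides explore vs. exploit
        reward = pull(arm)                  # the environment returns an observed reward
        counts[arm], means[arm] = update_estimate(counts[arm], means[arm], reward)
        history.append((arm, reward))
    return counts, means, history


# Usage: round-robin policy over three arms with fixed (made-up) rewards.
fixed_rewards = [1.0, 0.0, 0.5]
counts, means, history = run_bandit(
    choose_arm=lambda counts, means, t: t % 3,
    pull=lambda arm: fixed_rewards[arm],
    k=3,
    rounds=6,
)
print(counts, means)  # [2, 2, 2] [1.0, 0.0, 0.5]
```

Round-robin is only a stand-in policy here; swapping in an exploring policy such as ε-greedy changes nothing else in the loop.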

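Multi-Armed Bandit
- Bandit algorithm: ε-greedy
- Explore a random choice with probability $P(\text{explore}) = \varepsilon$.
- Exploit the best choice so far with probability $P(\text{exploit}) = 1 - \varepsilon$.
- This guarantees some random (exploratory) behavior.
- Common practice: start from initial (seed) data.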
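
A minimal ε-greedy sketch, assuming rewards arrive from a `pull(arm)` callable (a hypothetical interface). Each arm is pulled `seed_pulls` times first, mirroring the "initial data (seed)" practice; after that, with probability ε the code explores a uniformly random arm (which may occasionally be the current best), and otherwise exploits the arm with the highest average reward so far.

```python
import random


def epsilon_greedy(k, pull, rounds, epsilon=0.1, seed_pulls=1, rng=None):
    """Run epsilon-greedy for `rounds` pulls after a seed phase.

    Returns (counts, means): how often each arm was pulled and its average reward.
    """
    rng = rng if rng is not None else random.Random()
    counts = [0] * k
    means = [0.0] * k

    def record(arm):
        # Pull the arm and fold the reward into its running average.
        reward = pull(arm)
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]

    # Seed phase: try every arm so no estimate starts from nothing.
    for arm in range(k):
        for _ in range(seed_pulls):
            record(arm)

    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(k)                       # explore: uniform random arm
        else:
            arm = max(range(k), key=lambda a: means[a])  # exploit: best average so far
        record(arm)
    return counts, means


# Usage: deterministic (made-up) rewards, so pure exploitation locks onto arm 1.
rewards = [0.1, 0.9, 0.5]
counts, means = epsilon_greedy(3, lambda arm: rewards[arm], rounds=50, epsilon=0.0)
print(counts)  # [1, 51, 1]
```

With a fixed ε the algorithm never stops exploring, which is what lets it re-evaluate arms that had unlucky early draws.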

Multi-Armed Bandit
- Pros: re-evaluates choices that had bad draws; no supervision needed.
- Cons: result-focused; does not explain trends.

Applying MAB to Game Development
- Dynamically changing content, e.g., Infinite Mario and Mario Kart.
- MAB can continuously improve a game (difficulty or design) over time.
- Goal: maximize satisfaction; happy customers bring revenue.

Study Outline
- A simple, short retro RPG (5-7 minutes of play).
- Arms: different skins (aesthetics).
- Reward: game time.
- Goal: compute the optimal skin.
- One skin per game.
- Data is saved in a database.
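
The study setup can be sketched with Python's built-in `sqlite3` module. Everything here is an assumption for illustration: the table name `plays`, its columns, the use of ε-greedy over average play time, and the skin names, which are taken from the results slide (Forest, Winter, Lava). The original project's code and schema are not shown in the slides.

```python
import random
import sqlite3

SKINS = ["Forest", "Winter", "Lava"]  # skin variants named on the results slide


def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    # Hypothetical schema: one row per finished game session.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS plays (skin TEXT NOT NULL, play_seconds REAL NOT NULL)"
    )
    return conn


def record_play(conn, skin, play_seconds):
    # The reward for this arm is how long the player kept playing with this skin.
    conn.execute("INSERT INTO plays (skin, play_seconds) VALUES (?, ?)", (skin, play_seconds))
    conn.commit()


def average_play_time(conn):
    # Map each skin that has been played to its mean play time in seconds.
    return dict(conn.execute("SELECT skin, AVG(play_seconds) FROM plays GROUP BY skin"))


def choose_skin(conn, epsilon=0.1, rng=None):
    """Pick the skin for the next game (one skin per game) with epsilon-greedy."""
    rng = rng if rng is not None else random.Random()
    stats = average_play_time(conn)
    unseen = [s for s in SKINS if s not in stats]
    if unseen:
        return unseen[0]                          # seed: play every skin at least once
    if rng.random() < epsilon:
        return rng.choice(SKINS)                  # explore
    return max(SKINS, key=lambda s: stats[s])     # exploit: longest average play time


# Usage: three finished games with made-up play times, then pick the next skin.
conn = open_db()
for skin, seconds in [("Forest", 300.0), ("Winter", 120.0), ("Lava", 200.0)]:
    record_play(conn, skin, seconds)
next_skin = choose_skin(conn, epsilon=0.0)
print(next_skin)  # Forest
```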

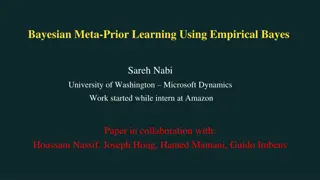

Results and Summary: ε-Greedy Algorithm
[Plot: frequency vs. iteration number for the Forest, Winter, and Lava skins]
- 42 data points
- Winner: Variant 0 (Forest)
- All options were re-evaluated.
- Could be improved with more samples and a reward that is a function of both time and score.
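
One way to act on the "reward as a function of time and score" suggestion is to scale both quantities to the range 0 to 1 and blend them. The function name, the weight `w_time`, and the caps `max_seconds` (set to 7 minutes, the upper end of the game's stated length) and `max_score` are hypothetical placeholders, not values from the study.

```python
def combined_reward(play_seconds, score, max_seconds=420.0, max_score=1000.0, w_time=0.5):
    """Hypothetical reward mixing play time and score, each clamped to [0, 1].

    w_time weights play time; (1 - w_time) weights score. All defaults are
    illustrative and would need tuning against real play data.
    """
    if not 0.0 <= w_time <= 1.0:
        raise ValueError("w_time must be in [0, 1]")
    time_part = min(max(play_seconds / max_seconds, 0.0), 1.0)
    score_part = min(max(score / max_score, 0.0), 1.0)
    return w_time * time_part + (1.0 - w_time) * score_part


print(combined_reward(210, 500))  # 0.5
```

Clamping keeps one unusually long session or high score from dominating an arm's average reward.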

Thank You! Q&A
Contact: KennyRaharjo@gmail.com