Innovative Approach to GPU Performance Enhancement

This paper introduces a groundbreaking concept called Rollback-Free Value Prediction (RFVP). It addresses the performance limitations imposed by off-chip bandwidth on modern GPUs. By approximating data values, RFVP can predict cache misses efficiently, reducing the need for main memory access. The key idea is to use value prediction mechanisms to enhance speedup by 36% on average with minimal quality loss. Moreover, it results in a 27% reduction in energy consumption on average, making it a promising solution for optimizing GPU performance.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

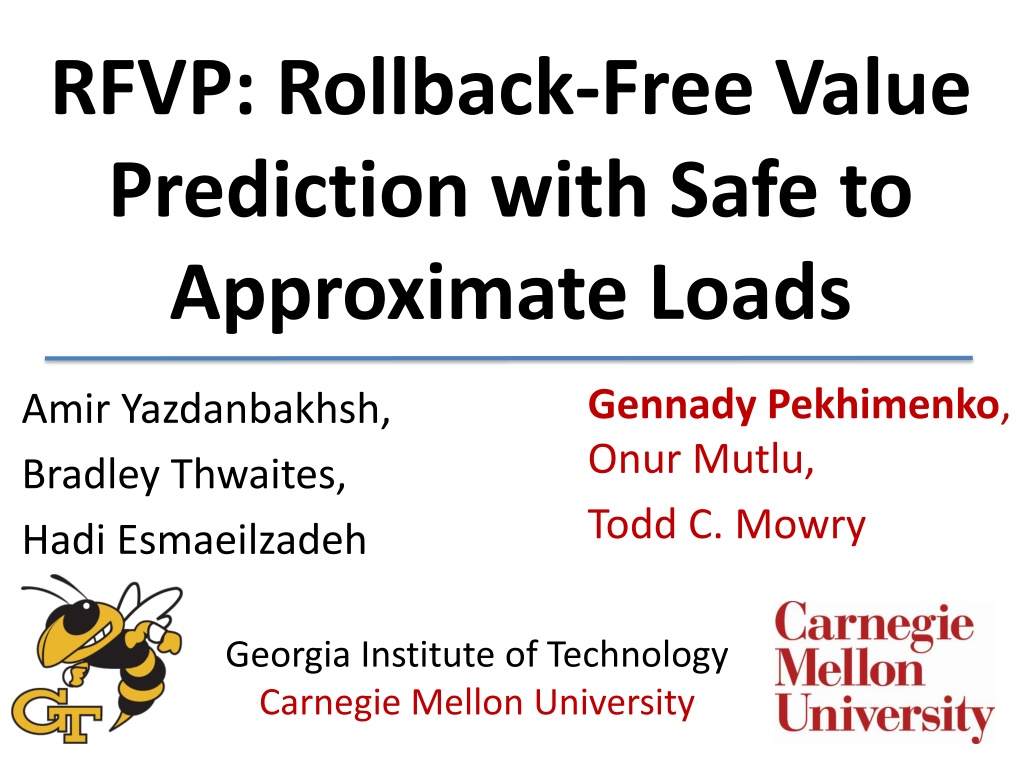

RFVP: Rollback-Free Value Prediction with Safe to Approximate Loads Gennady Pekhimenko, Onur Mutlu, Todd C. Mowry Amir Yazdanbakhsh, Bradley Thwaites, Hadi Esmaeilzadeh Georgia Institute of Technology Carnegie Mellon University

Executive Summary Problem: Performance of modern GPUs significantly limited by the available off-chip bandwidth Observations: Many GPU applications are amenable to approximation Data value similarity allows to efficiently predict values of cache misses Key Idea: Use simple value prediction mechanisms to avoid accesses to main memory when it is safe Results: Higher speedup (36% on average) with less than 10% quality loss Lower energy consumption (27% on average) 2

Data Analytics Virtual Reality GPU Multimedia Robotics 3

Data Analytics Virtual Reality Many GPU applications are limited by the off-chip bandwidth Multimedia Robotics 4

Motivation: Bandwidth Bottleneck 0.5x 1x 2x 4x 13.7 2.5 8x Perfect Memory 13.5 4.0 2.6 2.6 2.2 2 Speedup 1.8 1.6 1.4 1.2 1 0.8 0.6 Off-chip bandwidth is a major performance bottleneck 5

Only Few Loads Matters backprop fastwalsh matrixmul particlefilter srad2 stringmatch gaussian reduce heartwall similarityscore Percentage of Load Misses 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% 1 2 3 4 5 6 7 8 9 10 Number of Loads Few GPU instructions generate most of the cache misses 6

Data Analytics Virtual Reality Many GPU applications are also amenable to approximation Multimedia Robotics 7

Rollback-Free Value Prediction Key idea: Predict values for safe-to-approximate loads when they miss in the cache Design principles: 1. No rollback/recovery, only value prediction 2. Drop rate is a tuning knob 3. Other requests are serviced normally 4. Providing safety guarantees 8

RFVP: Diagram GPU Cores RFVP Value Predictor Memory Hierarchy L1 Data Cache 9

Code Example to Support Intuition Matrixmul: float newVal = 0; for (int i=0; i<N; i++) { float4 v1 = matrix1[i]; float4 v2 = matrix2[i]; newVal += v1.x * v2.x; newVal += v1.y * v2.y; newVal += v1.z * v2.z; newVal += v1.w * v2.w; } 10

Code Example to Support Intuition (2) s.srad2: int d_cN, d_cS, d_cW, d_cE; d_cN = d_c[ei]; d_cS = d_c[d_iS[row] + d_Nr * col]; d_cW = d_c[ei]; d_cE = d_c[row + d_Nr * d_jE[col]]; 11

Outline Motivation Key Idea RFVP Design and Operation Evaluation Conclusion 12

RFVP Architecture Design Instruction annotations by the programmer ISA changes Approximate load instruction Instruction for setting the drop rate Defining approximate load semantics Microarchitecture Integration 13

Programmer Annotations Safety is a semantic property of the program We rely on the programmer to annotate the code 14

ISA Support Approximate Loads load.approx Reg<id>, MEMORY<address> is a probabilistic load - can assign precise or imprecise value to Reg<id> Drop rate set.rate DropRateReg sets the fraction (e.g., 50%) of the approximate cache misses that do not initiate memory requests 15

Microarchitecture Integration SM Cores Cores Cores RFVP Value Predictor Cores RFVP Value Predictor RFVP Value Predictor RFVP Value Predictor Memory Hierarchy L1 L1 Data Cache L1 Data Cache L1 Data Cache Data Cache 16

Microarchitecture Integration SM Cores Cores Cores RFVP Value Predictor Cores RFVP Value Predictor RFVP Value Predictor DATA RFVP Value Predictor Memory Hierarchy L1 Memory Request L1 Data Cache L1 Data Cache L1 Data Cache Data Cache All the L1 misses are sent to the memory subsystem 17

Microarchitecture Integration SM Cores Cores Cores RFVP Value Predictor Cores RFVP Value Predictor DATA RFVP Value Predictor RFVP Value Predictor Memory Hierarchy L1 Memory Request L1 Data Cache L1 Data Cache L1 Data Cache Data Cache A fraction of the requests will be handled by RFVP 18

Language and Software Support Targeting performance critical loads Only a few critical instructions matter for value prediction Providing safety guarantees Programmer annotations and compiler passes Drop-rate selection A new knob that allows to control quality vs. performance tradeoffs 19

Base Value Predictor: Two-Delta Stride Last Value Stride1 Stride2 Hash (PC) + Predicted Value 20

Designing RFVP predictor for GPUs Last Value Stride1 Stride2 Hash (PC) How to design a predictor for GPUs with, for example, 32 threads per warp? + Predicted Value 21

GPU Predictor Design and Operation Last Value Stride1 Stride2 Last Value Stride1 Stride2 Hash (PC) + + + + Predicted Value Predicted Value 1 2 16 17 18 32 warp (32 threads) 22

Outline Motivation Key Idea RFVP Design and Operation Evaluation Conclusion 23

Methodology Simulator GPGPU-Sim simulator (cycle-accurate) ver. 3.1 Workloads GPU benchmarks from Rodinia, Nvidia SDK, and Mars benchmark suites System Parameters GPU with 15 SMs, 32 threads/warp, 6 memory channels, 48 warps/SM, 32KB shared memory, 768KB LLC, GDDR5 177.4 GB/sec off-chip bandwidth 24

RFVP Performance Error < 1% Error < 3% Error < 5% 2.4 Error < 10% 2.2 1.6 1.5 Speedup 1.4 1.3 1.2 1.1 1.0 Significant speedup for various acceptable quality rates 25

RFVP Bandwidth Consumption BW Consumption Reduction Error < 1% Error < 3% Error < 5% Error < 10% 2.3 1.9 2.0 1.8 1.7 1.6 1.5 1.4 1.3 1.2 1.1 1.0 Reduction in consumed bandwidth (up to 1.5X average) 26

RFVP Energy Reduction Error < 1% Error < 3% Error < 5% 1.6 Error < 10% 1.9 2.0 1.4 Energy Reduction 1.3 1.2 1.1 1.0 Reduction in consumed energy (27% on average) 27

Sensitivity to the Value Prediction Null Predictor Last-Value Predictor 2.2 Two-Delta Predictor 2.4 1.6 1.5 Speedup 1.4 1.3 1.2 1.1 1 Two-Delta predictor was the best option 28

Other Results and Analyses in the Paper Sensitivity to the drop rate (energy and quality) Precise vs. imprecise value distributions RFVP for memory latency wall CPU performance CPU energy reduction CPU quality vs. performance tradeoff 29

Conclusion Problem: Performance of modern GPUs significantly limited by the available off-chip bandwidth Observations: Many GPU applications are amenable to approximation Data value similarity allows to efficiently predict values of cache misses Key Idea: Use simple rollback-free value prediction mechanism to avoid accesses to main memory Results: Higher speedup (36% on average) with less than 10% quality loss Lower energy consumption (27% on average) 30

RFVP: Rollback-Free Value Prediction with Safe to Approximate Loads Gennady Pekhimenko, Onur Mutlu, Todd C. Mowry Amir Yazdanbakhsh, Bradley Thwaites, Hadi Esmaeilzadeh Georgia Institute of Technology Carnegie Mellon University

Sensitivity to the Drop Rate Drop Rate = 12.5% Drop Rate = 75% Drop Rate = 25% Drop Rate = 80% Drop Rate = 50% Drop Rate = 90% 2.2 2.0 Speedup 1.8 1.6 1.4 1.2 1.0 Speedup varies significantly with different drop rates 32

Pareto Analysis Pareto-optimal is the configuration with 192 entries and 2 independent predictors for 32 threads 33