Hypothesis Testing and Confidence Intervals in Econometrics

This chapter delves into hypothesis testing and confidence intervals in econometrics, covering topics such as testing regression coefficients, forming confidence intervals, using the central limit theorem, and presenting regression model results. It explains how to establish null and alternative hypotheses, calculate z-statistics, and understand the properties of OLS estimators. The content also explores estimating standard errors and their impact on the distribution, along with comparing t-statistics and z-statistics.


Chapter 6 Hypothesis Testing and Confidence Intervals

Learning Objectives

- Test a hypothesis about a regression coefficient
- Form a confidence interval around a regression coefficient
- Show how the central limit theorem allows econometricians to ignore assumption CR4 in large samples
- Present results from a regression model

Hypotheses About $\beta_1$

We propose a value of $\beta_1$ and test whether that value is plausible based on the data we have. Call the hypothesized value $\beta_1^*$.

Formal statement:

- Null hypothesis: $H_0: \beta_1 = \beta_1^*$
- Alternative hypothesis: $H_1: \beta_1 \neq \beta_1^*$

Sometimes the alternative is one sided, e.g., $H_1: \beta_1 < \beta_1^*$. Use a one-sided alternative if only one side is plausible.

The z-statistic

$$z = \frac{b_1 - \beta_1^*}{\text{s.e.}[b_1]}$$

For any hypothesis test: (i) take the difference between our estimate and the value it would have under the null hypothesis, then (ii) standardize it by dividing by the standard error of the estimate.

- If z is a large positive or negative number, then we reject the null hypothesis. We conclude that the estimate is too far from the hypothesized value to be plausible if the null hypothesis were true.
- If z is close to zero, then we cannot reject the null hypothesis. We conclude that the hypothesized value is a plausible value of the parameter.

But what is a large z?

Recap: Properties of OLS Estimator

$$b_1 \sim N\!\left(\beta_1,\ \frac{\sigma^2}{\sum_{i=1}^{N} x_i^2}\right) \quad \text{or} \quad \frac{b_1 - \beta_1}{\text{s.e.}[b_1]} \sim N(0,1)$$

OLS has these properties if CR1, CR2, and CR3 hold and N is large, OR if CR1, CR2, CR3, and CR4 hold.

Properties of $b_1$: $\beta_1 = -1.80$, $\text{s.e.}[b_1] = 0.41$

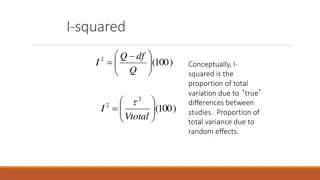

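But, we don't know the standard error

Given assumptions CR1, CR2, CR3, and CR4:

$$b_1 \sim N\!\left(\beta_1,\ \frac{\sigma^2}{\sum_{i=1}^{N} x_i^2}\right) \quad \text{or} \quad \frac{b_1 - \beta_1}{\text{s.e.}[b_1]} \sim N(0,1)$$

CR4 is not necessary if N is large, thanks to the CLT.

We don't know $\sigma^2$, so we have to estimate it with:

$$s^2 = \frac{1}{N-K-1} \sum_{i=1}^{N} (Y_i - \hat{Y}_i)^2$$

where the sum is the sum of squared errors. The estimated standard error is then

$$\text{est.s.e.}[b_1] = \frac{s}{\sqrt{\sum_{i=1}^{N} x_i^2}}$$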
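The estimation steps above can be sketched in a few lines of Python. This is a minimal illustration, not the chapter's own code: the function name `simple_ols` and the five data points are hypothetical, and the sketch assumes that the lowercase $x_i$ in the formulas denotes $X_i$ measured as a deviation from its sample mean.

```python
import math

def simple_ols(x, y):
    """Return (b0, b1, s2, est_se_b1) for a regression of y on x with an intercept."""
    n = len(x)
    k = 1  # number of slope coefficients (K in the formulas)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Assumption: lowercase x_i is X_i in deviation-from-mean form.
    dx = [xi - x_bar for xi in x]
    sxx = sum(d * d for d in dx)
    b1 = sum(d * (yi - y_bar) for d, yi in zip(dx, y)) / sxx
    b0 = y_bar - b1 * x_bar
    # Residuals and the sum of squared errors (SSE).
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(e * e for e in resid)
    s2 = sse / (n - k - 1)            # estimate of sigma^2
    est_se_b1 = math.sqrt(s2 / sxx)   # s / sqrt(sum of x_i^2)
    return b0, b1, s2, est_se_b1

# Hypothetical data, NOT the California schools sample.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.1, 5.9, 8.2, 9.8]
b0, b1, s2, est_se_b1 = simple_ols(x, y)
print(f"b1 = {b1:.3f}, s^2 = {s2:.4f}, est.s.e.[b1] = {est_se_b1:.4f}")
```

Dividing by N-K-1 rather than N accounts for the K+1 coefficients that were estimated before the residuals could be computed.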

How does estimating std error affect distribution?

If CR1-CR3 hold (plus CR4, for small N) and the null hypothesis is true:

$$z = \frac{b_1 - \beta_1^*}{\text{s.e.}[b_1]} \sim N(0,1)$$

Compare this to the t-statistic formula:

$$t = \frac{b_1 - \beta_1^*}{\text{est.s.e.}[b_1]}$$

- The numerator is the same.
- The denominator is different, but:
  - If N is large, then the estimated std error is close to s.e.[b1], so t has approximately a standard normal distribution.
  - If CR4 holds, then t has a Student t distribution.

How large should the t statistic be before we reject the null hypothesis?

$$t = \frac{b_1 - \beta_1^*}{\text{est.s.e.}[b_1]}$$

Student t Table: critical values by significance level (2-tailed test)

| Degrees of Freedom | 0.10 | 0.05 | 0.01 |
|---|---|---|---|
| 1 | 6.31 | 12.71 | 63.66 |
| 2 | 2.92 | 4.30 | 9.93 |
| 3 | 2.35 | 3.18 | 5.84 |
| 4 | 2.13 | 2.78 | 4.60 |
| 5 | 2.02 | 2.57 | 4.03 |
| 6 | 1.94 | 2.45 | 3.71 |
| 7 | 1.90 | 2.37 | 3.50 |
| 8 | 1.86 | 2.31 | 3.36 |
| 9 | 1.83 | 2.26 | 3.25 |
| 10 | 1.81 | 2.23 | 3.17 |
| 15 | 1.75 | 2.13 | 2.95 |
| 20 | 1.73 | 2.09 | 2.85 |
| 25 | 1.71 | 2.06 | 2.79 |
| 30 | 1.70 | 2.04 | 2.75 |
| 40 | 1.68 | 2.02 | 2.70 |
| 50 | 1.68 | 2.01 | 2.68 |
| 60 | 1.67 | 2.00 | 2.66 |
| 80 | 1.66 | 1.99 | 2.64 |
| 100 | 1.66 | 1.98 | 2.63 |
| 150 | 1.66 | 1.98 | 2.61 |
| (Z) | 1.65 | 1.96 | 2.58 |

- If the null hypothesis is true and CR1-CR4 hold, then $t \sim t(N-K-1)$.
- If the null hypothesis is true, CR1-CR3 hold, and N is large, then $t \sim N(0,1)$ approximately.

Knowing the distribution means that we know which values are likely and which are unlikely. From the normal table, a t-statistic larger than 1.96 in absolute value occurs with probability of only 5% when the null hypothesis is true.

Example: California schools data

$$API_i = 951.87 - 2.11\,FLE_i + e_i$$

Hypothesis: free-lunch eligibility (FLE) is uncorrelated with academic performance (API)

$$H_0: \beta_1 = 0 \quad \text{vs.} \quad H_1: \beta_1 \neq 0$$

Put this into practice with our California schools data

$$H_0: \beta_1 = 0 \quad \text{vs.} \quad H_1: \beta_1 \neq 0$$

(The null hypothesis says that free-lunch eligibility, FLE, is uncorrelated with academic performance, API.)

The ideal test statistic would use the standard error from the population, i.e.,

$$z = \frac{-2.11 - 0}{0.41} = -5.15$$

Here -2.11 is the estimate from a sample of 20 schools, and 0.41 is the std error from the whole population (we usually don't know this). The absolute value of this test statistic is 5.15 > 1.96, so we reject the null hypothesis at 5% significance.
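As a rough check on this arithmetic, the sketch below recomputes the z-statistic and adds a two-sided p-value from the standard normal distribution. The helper names `z_statistic` and `two_sided_p_value` are hypothetical, and the estimate is taken as -2.11 (the sign is assumed negative, in line with the |-5.86| reported for the t-statistic on the next slide).

```python
from statistics import NormalDist

def z_statistic(estimate, null_value, std_error):
    """Standardized distance between the estimate and the hypothesized value."""
    return (estimate - null_value) / std_error

def two_sided_p_value(z):
    """Probability of a standard normal draw at least as extreme as z, in either tail."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))

# Figures quoted on the slide: estimate -2.11, population std error 0.41, null value 0.
z = z_statistic(-2.11, 0.0, 0.41)
p = two_sided_p_value(z)
reject_at_5pct = abs(z) > 1.96

print(f"z = {z:.2f}, reject at 5%: {reject_at_5pct}")
```

The p-value is not part of the slide; it is included only to show that comparing |z| with 1.96 is the same decision as comparing the p-value with 0.05.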

But We Don't Usually Know the Population Variance

$$t = \frac{-2.11 - 0}{0.36} = -5.86$$

Here 0.36 is the std error estimated from the sample of 20 schools. The sample is small, so we assume CR4. With N - K - 1 = 18 degrees of freedom, the statistic exceeds the 5% critical value in absolute value (|-5.86| > 2.10), so we still reject the null hypothesis.

But remember that we had to assume CR4 in order to perform this hypothesis test. If there's any reason to think that the population errors are not normally distributed (picture a nice bell curve), this analysis will be very hard to defend to your colleagues.
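The small-sample test can be sketched as a table lookup followed by a comparison. The dictionary below copies the 0.05 column of the Student t table shown earlier. The function `conservative_critical_value` is a hypothetical helper, and its rule (fall back to the largest tabulated degrees of freedom that does not exceed the actual value) is an assumption made for this sketch, not something the slides prescribe. It gives 2.13 for 18 degrees of freedom, slightly stricter than the 2.10 quoted on the slide.

```python
# 5% two-tailed critical values, copied from the Student t table in this chapter.
T_CRIT_5PCT = {
    1: 12.71, 2: 4.30, 3: 3.18, 4: 2.78, 5: 2.57, 6: 2.45, 7: 2.37,
    8: 2.31, 9: 2.26, 10: 2.23, 15: 2.13, 20: 2.09, 25: 2.06, 30: 2.04,
    40: 2.02, 50: 2.01, 60: 2.00, 80: 1.99, 100: 1.98, 150: 1.98,
}

def conservative_critical_value(df):
    """Use the largest tabulated df not exceeding df (never understates the cutoff)."""
    usable = [d for d in T_CRIT_5PCT if d <= df]
    if not usable:
        raise ValueError("degrees of freedom must be at least 1")
    return T_CRIT_5PCT[max(usable)]

n, k = 20, 1                      # 20 schools, one slope coefficient
df = n - k - 1                    # 18 degrees of freedom
t_stat = (-2.11 - 0.0) / 0.36     # estimate and estimated std error from the slide
crit = conservative_critical_value(df)
reject = abs(t_stat) > crit

print(f"t = {t_stat:.2f}, df = {df}, critical value = {crit}, reject: {reject}")
```

Because critical values fall as the degrees of freedom rise, rounding the degrees of freedom down can only make the test harder to pass, which is why the fallback is described as conservative.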

How Big a Sample is Big Enough?

Figure 6.1. Number of People per Household; US Census 2010 (horizontal axis: household size, 1 through 7 or more; vertical axis: 0 to 0.4). Source: https://www.census.gov/hhes/families/data/households.html

This distribution is certainly not normal.

Distribution of the t-statistic Looks More Normal the Bigger the Sample

Average household size, 2010 US Census: 2.52

$$t = \frac{b - 2.52}{s/\sqrt{N}}$$

Figure 6.2. Central Limit Theorem Implies t Statistic Gets More Normal as N Increases
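A small simulation can illustrate the idea behind Figure 6.2. The sketch below draws repeated samples from a skewed, discrete household-size distribution and records how often |t| exceeds 1.96 when the null hypothesis is true. The probabilities in `WEIGHTS` are hypothetical stand-ins chosen only to be skewed. They are NOT the Census figures, and the function name, seed, and replication count are likewise illustrative.

```python
import math
import random

# Hypothetical skewed distribution over household sizes 1..7 (not the Census data).
SIZES = [1, 2, 3, 4, 5, 6, 7]
WEIGHTS = [0.27, 0.34, 0.16, 0.13, 0.06, 0.02, 0.02]
TRUE_MEAN = sum(s * w for s, w in zip(SIZES, WEIGHTS))

def rejection_rate(n, reps, rng, crit=1.96):
    """Share of samples of size n whose |t| exceeds crit when the null is true."""
    rejections = 0
    for _ in range(reps):
        sample = rng.choices(SIZES, weights=WEIGHTS, k=n)
        mean = sum(sample) / n
        var = sum((v - mean) ** 2 for v in sample) / (n - 1)
        if var == 0.0:
            # All draws identical: the integer sample mean cannot equal TRUE_MEAN,
            # so |t| is infinite and the sample counts as a rejection.
            rejections += 1
            continue
        t = (mean - TRUE_MEAN) / math.sqrt(var / n)
        if abs(t) > crit:
            rejections += 1
    return rejections / reps

rng = random.Random(0)  # fixed seed so the run is repeatable
rate_small = rejection_rate(5, 2000, rng)
rate_large = rejection_rate(200, 2000, rng)
print(f"N=5: {rate_small:.3f}   N=200: {rate_large:.3f}   nominal: 0.050")
```

If the normal approximation is good, the rejection rate should sit near the nominal 5%. In this setup the small-sample rate would be expected to overshoot, while the rate for N = 200 should land much closer to 0.05.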

CA Schools Errors Look Close to Normal

Figure 6.3. Histogram of Errors from Population Regression Using All 5765 CA Schools (horizontal axis: -250 to 250)

How Big a Sample is Big Enough?

There is no single answer to this question, but:

- N > 100 can usually be considered large (unless your data are really weird)
- N < 20 is small
- 20 < N < 100 is the gray area

Confidence Interval

Range of values that we can reasonably expect the true population parameter to take on. It is the set of null hypotheses $\beta_1^*$ that you cannot reject.

In a large sample, this means that a 95% confidence interval is all the values of $\beta_1^*$ for which

$$-1.96 \le \frac{b_1 - \beta_1^*}{\text{est.s.e.}[b_1]} \le 1.96$$

More common way to express the confidence interval: the $100(1-\alpha)\%$ confidence interval is all the values in the range $[\underline{\beta}_1, \overline{\beta}_1]$, where

$$\underline{\beta}_1 = b_1 - c \cdot \text{est.s.e.}[b_1], \qquad \overline{\beta}_1 = b_1 + c \cdot \text{est.s.e.}[b_1]$$

and c is the critical value for a two-sided test.
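The interval formula can be tried on the California schools numbers quoted earlier. This is a sketch under stated assumptions: the estimate is taken as -2.11 (sign assumed negative), the estimated standard error as 0.36, and the critical value as the 2.10 used for the sample of 20 schools. The function name `confidence_interval` is hypothetical.

```python
def confidence_interval(b, est_se, crit):
    """Return (lower, upper) = b -/+ crit * est_se."""
    half_width = crit * est_se
    return b - half_width, b + half_width

# Small-sample interval using the critical value 2.10 quoted for 20 schools.
lower, upper = confidence_interval(-2.11, 0.36, 2.10)

# Large-sample version of the same interval, using 1.96 from the normal table.
lower_z, upper_z = confidence_interval(-2.11, 0.36, 1.96)

zero_is_plausible = lower <= 0.0 <= upper
print(f"95% CI: [{lower:.3f}, {upper:.3f}], contains 0: {zero_is_plausible}")
```

Zero lies outside the interval, which matches the earlier decision to reject the null hypothesis of no relationship: a value is rejected at 5% exactly when it falls outside the 95% interval.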

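Hypothesis Testing in Multiple Regression

$$t = \frac{b_k - \beta_k^*}{\text{est.s.e.}[b_k]}$$

$$b_k = \frac{\sum_{i=1}^{N} v_{ki}\, y_i}{\sum_{i=1}^{N} v_{ki}^2}, \qquad \text{s.e.}[b_k] = \sqrt{\frac{\sigma^2}{\sum_{i=1}^{N} v_{ki}^2}}$$

$$\text{est.s.e.}[b_k] = \frac{s}{\sqrt{\sum_{i=1}^{N} v_{ki}^2}}, \qquad s^2 = \frac{SSE}{N-K-1} = \frac{\sum_{i=1}^{N} e_i^2}{N-K-1}$$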

Do We Have a Model?

Compare the $R^2$ from two regressions: one with all the RHS variables in it, the other without any RHS variables (only an intercept, which will equal the mean of Y).

Form the Wald statistic:

$$W = \frac{R^2_{alt} - R^2_{null}}{(1 - R^2_{alt})/(N - K_{alt} - 1)}$$

- $K_{alt}$ in the denominator is the number of X variables in the main model.
- The more the RHS variables explain the variation in Y, the bigger this test statistic will be.
- Under the null hypothesis, the Wald statistic has a chi-square distribution with q degrees of freedom, where q is the number of RHS variables omitted under the null hypothesis.

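Example: Do We Have a Model to Predict API in CA Schools?

$$W = \frac{0.86 - 0}{(1 - 0.86)/(20 - 2 - 1)} = 104.43$$

Because 104.43 > 5.99 (the 5% critical value with 2 degrees of freedom), we reject the null hypothesis at 5% significance.

Chi-square critical values by significance level

| Degrees of Freedom | 0.10 | 0.05 | 0.01 |
|---|---|---|---|
| 1 | 2.71 | 3.84 | 6.64 |
| 2 | 4.61 | 5.99 | 9.21 |
| 3 | 6.25 | 7.82 | 11.35 |
| 4 | 7.78 | 9.49 | 13.28 |
| 5 | 9.24 | 11.07 | 15.09 |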
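The Wald calculation in this example can be reproduced with a short function. The name `wald_statistic` is hypothetical, and the dictionary simply copies the 0.05 column of the chi-square table for 1 through 5 degrees of freedom.

```python
# 5% chi-square critical values for 1 through 5 degrees of freedom, copied from the table.
CHI2_CRIT_5PCT = {1: 3.84, 2: 5.99, 3: 7.82, 4: 9.49, 5: 11.07}

def wald_statistic(r2_alt, r2_null, n, k_alt):
    """W = (R2_alt - R2_null) / ((1 - R2_alt) / (N - K_alt - 1))."""
    return (r2_alt - r2_null) / ((1.0 - r2_alt) / (n - k_alt - 1))

# Example values: R2 of 0.86 with two X variables and 20 schools,
# against an intercept-only model whose R2 is 0.
w = wald_statistic(0.86, 0.0, 20, 2)
q = 2  # both RHS variables are omitted under the null
reject = w > CHI2_CRIT_5PCT[q]

print(f"W = {w:.2f}, critical value = {CHI2_CRIT_5PCT[q]}, reject: {reject}")
```

Here q equals 2 because the null model drops both X variables, so the comparison uses the second row of the table.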

How to Present Regression Results 1

$$Y_i = \underset{(37.92)}{777.17} - \underset{(0.40)}{0.51}\,X_{1i} + \underset{(0.47)}{2.34}\,X_{2i} + e_i$$

Standard errors in parentheses. Sample size = 20, $R^2 = 0.84$

How to Present Regression Results 2

| Variable | Estimated Coefficient | Standard Error | t-Statistic |
|---|---|---|---|
| Free-lunch eligibility | -0.51 | 0.40 | -1.28 |
| Parents' education | 2.34 | 0.47 | 5.01 |
| Constant | 777.17 | 37.92 | 20.50 |
| Sample size | 20 | | |
| R2 | 0.84 | | |

What We Learned

- We reject the null hypothesis of zero relationship between free-lunch eligibility (FLE) and academic performance.
- Our result is the same whether we drop CR4 and invoke the central limit theorem (valid in large samples) or whether we impose CR4 (necessary in small samples).
- Confidence intervals are narrow when the sum of squared errors is small, the sample is large, or there's a lot of variation in X.
- How to present results from a regression model.