Enhancing FAIR Data Sharing and Credit Mechanisms in Scientific Communities

A joint meeting of three Research Data Alliance (RDA) groups in Helsinki focused on improving FAIR data practices, assessment criteria and reward mechanisms in scientific communities. Key goals included creating criteria to assess FAIRness, enhancing data-sharing mechanisms, and integrating FAIR principles into evaluation schemes at the institutional level.

Uploaded on Oct 07, 2024

Presentation Transcript

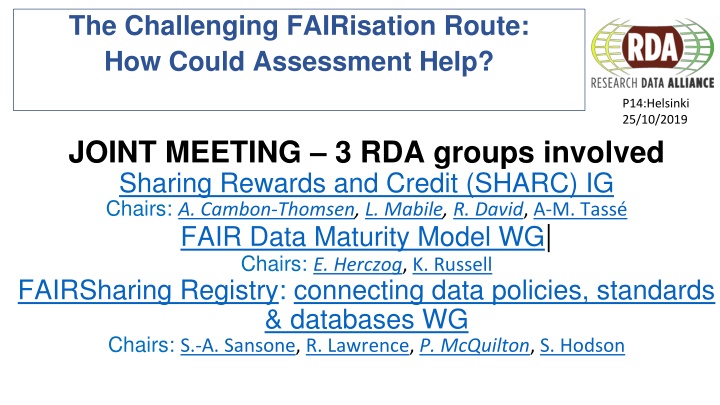

The Challenging FAIRisation Route: How Could Assessment Help? RDA P14, Helsinki, 25/10/2019. JOINT MEETING of 3 RDA groups: Sharing Rewards and Credit (SHARC) IG, chairs: A. Cambon-Thomsen, L. Mabile, R. David, A-M. Tass; FAIR Data Maturity Model WG, chairs: E. Herczog, K. Russell; FAIRsharing Registry: connecting data policies, standards & databases WG, chairs: S.-A. Sansone, R. Lawrence, P. McQuilton, S. Hodson.

Two parts, one objective. FAIR: Finding Adequate Innovative Routes to FAIR. 1st part: contributions from the groups and a challenger speaker: Goals of the SHARC group's project, towards recommendations/guidance (Laurence Mabile); FAIR criteria assessment survey and discussion results (Romain David); How can the FAIR criteria best be employed in guiding the researcher in the pre-FAIRification stage? (Edit Herczog); FAIRsharing and the FAIR evaluator: how to assess the right standards and the right repository for your data (Peter McQuilton); Potential of FAIR principles as tools in creating Open Science assessment frameworks: two examples (Heidi Laine). 2nd part: contributions from the audience through 3 discussion groups, on questions designed by the IG and WGs. Conclusion.

Welcome to all, including remote participants! Link to the collaborative session notes: open it. Attendees, please include your name on the Collaborative Notes page. The session is organised in 2 parts: presentations, then discussion (Chairs). Speakers: ACT, Laurence Mabile, Romain David, ...

SHARC IG objectives: unpack and improve crediting and rewarding mechanisms in the data/resources sharing process. Making resources available for community reuse means ensuring that data are findable and accessible on the web, and that they comply with international standards that make them interoperable and reusable by others (FAIR principles). Specifically: work out a list of criteria to assess FAIRness, in order to prepare the FAIRisation of data (see the FAIR Data Maturity Model WG) and of the community (training, planning, governance, evaluation...); review the existing rewarding mechanisms in various scientific communities, assess their limits and identify key factors to improve (e.g. tools, incentives, requirements); encourage the integration of data-sharing-related criteria, including FAIRness criteria, into the global scientific activity evaluation scheme at European and national institutional levels; plan processes for the stepwise adoption of the principles, with implementation measures tuned to national, local and institutional contexts.

What is needed? Guidance to enable credit/reward for various stakeholders; guidance to plan, train and build FAIRness literacy in order to increase sharing capacity. Some case studies: INRA, EC-IMI FAIRplus, Belmont Forum PARSEC communities. These are CHALLENGING PROCESSES that need to be planned early in the FAIRification route (pre-FAIRification steps)!

Need to assess FAIRness: which criteria? Which priorities? Before automation, stakeholders (humans) need to understand FAIRisation (AND how to plan and build its steps): what to do, and on what; why we have to do it; the means necessary for each action (short and long term); the necessary training and adapted teaching material.

First keys (feedback from a survey, case studies and the SHARC FAIR evaluation criteria subgroup): be realistic; use stepwise processes; make the first step reachable by all community members; organise the steps among all stakeholders; run an iterative and participative process that takes into account the progress of the community and stakeholders.

SHARC has worked on providing a list of criteria that is as understandable as possible for non-technical users such as scientists or evaluators. The FDMM (FAIR Data Maturity Model) WG is working on establishing a machine-actionable set of criteria. These need to be implemented gradually, but how? Could convergence be only partial? (A quality process and several levels of implementation depending on...?)
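To make "several levels of implementation" and "a first step reachable by all" concrete, here is a minimal sketch of a machine-actionable criteria grid with priority tiers. In this grid a resource only reaches the next level once every criterion of the earlier tiers is met. It is an illustrative assumption, not the SHARC grid or the FDMM indicator set. The tier names ("essential", "important", "useful"), the names `Criterion`, `maturity_level` and `missing`, and the assignment of criteria to tiers are all hypothetical. Only the identifiers and short descriptions follow the numbering of the FAIR principles.

```python
from dataclasses import dataclass

# Hypothetical priority tiers, ordered from the first step that every
# community member should be able to reach to the most demanding one.
TIERS = ("essential", "important", "useful")


@dataclass(frozen=True)
class Criterion:
    identifier: str   # label borrowed from the FAIR principles numbering
    description: str  # wording meant to be readable by non-specialists
    tier: str         # one of TIERS (assignment is illustrative only)


def maturity_level(criteria, passed):
    """Count consecutive tiers fully satisfied, starting from the first.

    A resource only moves up a tier when every criterion in that tier and in
    all earlier tiers is met. A tier with no criteria counts as satisfied.
    """
    level = 0
    for tier in TIERS:
        in_tier = [c for c in criteria if c.tier == tier]
        if all(c.identifier in passed for c in in_tier):
            level += 1
        else:
            break
    return level


def missing(criteria, passed, tier):
    """Return identifiers of unmet criteria in one tier, i.e. the next actions."""
    return [c.identifier for c in criteria
            if c.tier == tier and c.identifier not in passed]


CRITERIA = [
    Criterion("F1", "(Meta)data are assigned a globally unique and persistent identifier", "essential"),
    Criterion("F2", "Data are described with rich metadata", "essential"),
    Criterion("A1", "(Meta)data are retrievable by their identifier using a standardised protocol", "important"),
    Criterion("I2", "(Meta)data use vocabularies that follow FAIR principles", "important"),
    Criterion("R1.1", "(Meta)data are released with a clear and accessible data usage licence", "useful"),
]

if __name__ == "__main__":
    passed = {"F1", "F2", "A1"}  # example self-assessment of one dataset
    print(maturity_level(CRITERIA, passed))        # 1
    print(missing(CRITERIA, passed, "important"))  # ['I2']
```

In this example, `F1`, `F2` and `A1` are satisfied. That places the resource at level 1, with `I2` as the next action. Requiring whole tiers in order is one possible reading of a "stepwise" process. If only partial convergence is acceptable, a weighted score per tier would be an alternative design.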

SHARC participants have worked on a proposal for a 5-step process, to be adapted to different stakeholders: 1- Fostering the pre-FAIRification decision; 2- Planning pre-FAIRification training and support; 3- Structuring pre-FAIRification processes: planning a step-by-step process for each action of FAIRification/evaluation; 4- Ensuring all criteria are well understood in the pre-FAIRification process; 5- Enabling crediting/rewarding mechanisms from the start.

Next steps to go forward: collaborative and open work. Examples of aspects to keep in mind: kind of data/resources; community FAIRness literacy; quality of criteria compliance (rich metadata? curation?); researchers' means and time; minimum requirements for a real improvement in FAIRness; ...

DISCUSSION in 3 groups

Q1: What is the place of FAIR assessment with regard to other elements of scientific activities involved in data sharing? Chair: Anne. Q1.1: Some criteria of FAIR assessment can be considered part of data quality assessment and some cannot; give examples of each. Q1.2: Not all data should be FAIRified; give some examples. Q1.3: FAIR assessment criteria should be part of the scientific evaluation process (grant applications, calls for projects, evaluation of individual scientists' activities, recruitment and career steps, evaluation of teams or laboratories, assessment of institutional policies, other?); give some examples of criteria that could be used.

Q2: How can the FAIR criteria best be employed in guiding the researcher in the pre-FAIRification stage? Chair: Edit. Q2.1: Which stakeholders need to be involved in preparing researchers to make their data FAIR? Which aspect of FAIR will each stakeholder cover? (open question) Q2.2: How can researchers best be prepared in the pre-FAIRification stage for making their data FAIR? (open question) Is this a one-off, or will some aspects need to be revisited throughout the research lifecycle?

Q3: What is the role of repositories and standards providers (assessment for evaluation)? How can they ensure that their resources are visible and used by the community to enable FAIR data? How can FAIRsharing help? Chair: Pete. Q3.1: There is no agreed naming convention for classifying standards for reporting and sharing data, metadata and other digital objects. Do you think we need such a common classification? Q3.2: How can a repository increase its visibility to researchers and policymakers? Q3.3: Showing which repositories implement a standard is essential to measure its use and adoption, but how else can the adoption of standards be measured?

ADDITIONAL INFO: recruitment and promotion in scientific careers; allocation of research funding; provision of appropriate human, financial and infrastructural support within research institutions.

[Figure: The crediting/rewarding ecosystem (RDA-SHARC Interest Group recommendations). Elements shown: data repositories (long-term, trustworthy; storing/documenting with metadata); data and researcher IDs (identifying/linking/indexing; digital, global, persistent); licences (acquiring/protecting); publication and citation (data/resource papers, research articles; making discoverable/visible, promoting); support (trained personnel, technical and logistical); patents/IP (valuing); funding; career hiring and promotion; reputation; credit; reward; and a research evaluation scheme (semi-quantitative and qualitative criteria). Possible stakeholders in FAIR assessment: repository managers, funders, research institutions, data ID authorities/ID registrars, editors/publishers, citizens/society. Legend: tools/means; processes required; possible stakeholders in FAIR assessment.]

SHARC stands for SHAring Rewards and Credit. Observation: despite numerous statements and active promotion, sharing data and materials according to the FAIR principles is still not the practice in most communities. AIM: focus on a major obstacle, the lack of recognition for the effort required. SHARC aims to unpack and improve crediting and rewarding mechanisms in the data/resources sharing process. HOW? By providing practical recommendations that take into account, as much as possible, the challenges within existing academic infrastructures, in order to resolve these difficulties.

SO FAR: the group's activity has moved forward on 3 fronts: 1. a draft description of the landscape of crediting and rewarding processes for sharing activity in different scientific communities (so far mainly biomedical and life sciences, biodiversity and geospatial domains), identifying gaps; 2. draft recommendations for researchers and relevant stakeholders at national and international levels, to establish improved guidance for resource sharing and its recognition in research practices (research evaluation scheme); 3. ongoing development of FAIR assessment grids. Today's session is an opportunity to share our tools and get feedback from potential users and from other groups that have developed complementary tools.