Efficiency of Facebook’s Tectonic Filesystem for Exabyte-Scale Storage

"Facebook's Tectonic Filesystem improves efficiency for exascale storage, addressing challenges in data warehouse, blob storage, and operational complexity. By offering a generic solution scalable to exabyte-scale clusters, Tectonic simplifies operations, enhances resource utilization, and supports multitenancy while matching specialized system performance."

Uploaded on Sep 26, 2024


Presentation Transcript

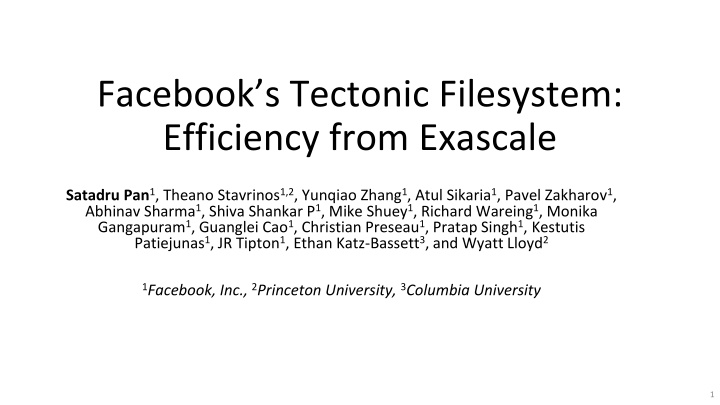

Facebook's Tectonic Filesystem: Efficiency from Exascale

Satadru Pan¹, Theano Stavrinos¹,², Yunqiao Zhang¹, Atul Sikaria¹, Pavel Zakharov¹, Abhinav Sharma¹, Shiva Shankar P¹, Mike Shuey¹, Richard Wareing¹, Monika Gangapuram¹, Guanglei Cao¹, Christian Preseau¹, Pratap Singh¹, Kestutis Patiejunas¹, JR Tipton¹, Ethan Katz-Bassett³, and Wyatt Lloyd²

¹Facebook, Inc., ²Princeton University, ³Columbia University

Exabyte-Scale Storage Use Cases at FB

| | Blob storage | Data warehouse |
|---|---|---|
| Contents | Photos and videos in Facebook, Messenger attachments | Hive tables for data analytics, machine learning |
| Volume | Exabytes of data | Exabytes of data |
| IO size | Several KBs to several MBs per blob | Reads on the order of multiple MBs; writes of 10s of MBs |
| Sensitivity | Latency sensitive | Throughput sensitive |

Storage Infrastructure Before Tectonic

Existing solutions were not generic enough:
- Operational complexity: each datacenter ran 3 different systems tailored to different workloads, HDFS for the data warehouse and Haystack and f4 for blob storage.
- HDFS (data warehouse): throughput-efficient but not suitable for small IO, and each instance does not scale beyond 10s of PBs, so the warehouse is spread across many HDFS instances.
- Haystack (blob storage): serves hot blobs, but is not storage efficient.
- f4 (blob storage): stores warm blobs, but has no support for uploads.
- Poor resource utilization: isolated systems could not share resources. IO-bound systems waste storage (surplus storage), while storage-bound systems waste IO (surplus IOPS).

Tectonic Overview

A single Tectonic cluster in the datacenter serves both blob storage and the data warehouse, pooling their IOPS and storage:
- Simpler operations: a single system to reason about, generic enough to handle all use cases
- Better utilization: no stranding of resources
- Scalability: support exabyte-scale clusters
- Multitenancy: isolate tenants and share resources
- Performance: match the performance of specialized systems

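Scalability: Support Exabyte-Scale Clusters

Tectonic is built from disaggregated, linearly scalable components:
- Metadata Store: linearly scalable metadata storage, built on a key-value store and split into three layers:
  - Name layer: maps each directory to its contents, e.g. / → [dir1, dir2] and dir1 → [file1, file2]
  - File layer: maps each file to its blocks, e.g. file1 → [block1, block2]
  - Block layer: maps each block to its chunks, e.g. block1 → [c1, …, c14]
- Chunk Store: linearly scalable data storage. In the example, block1 (80MB) is encoded with RS(10,4) into 10 data chunks (c1 to c10) and 4 parity chunks (c11 to c14).
- Background Services: garbage collectors, rebalancer, …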
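The RS(10,4) example can be checked with a little arithmetic. The Python sketch below assumes the 80MB block is split into equal-sized data chunks, which the slide does not state; `RSCode` and `chunk_layout` are hypothetical names for illustration, not part of any Tectonic API.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class RSCode:
    """A Reed-Solomon code RS(data, parity), as written on the slides."""
    data: int    # number of data chunks
    parity: int  # number of parity chunks

    @property
    def total_chunks(self) -> int:
        return self.data + self.parity

    @property
    def storage_overhead(self) -> Fraction:
        # Bytes stored on disk per byte of user data.
        return Fraction(self.total_chunks, self.data)

    @property
    def tolerated_losses(self) -> int:
        # Any `data` of the chunks suffice to rebuild the block,
        # so up to `parity` chunks can be lost.
        return self.parity


def chunk_layout(block_mb: int, code: RSCode) -> dict:
    """Split a block into equal data chunks and report its on-disk footprint."""
    if block_mb % code.data:
        raise ValueError("sketch assumes the block divides evenly into data chunks")
    chunk_mb = block_mb // code.data
    return {
        "chunk_mb": chunk_mb,
        "data_chunks": [f"c{i}" for i in range(1, code.data + 1)],
        "parity_chunks": [f"c{i}" for i in range(code.data + 1, code.total_chunks + 1)],
        "stored_mb": chunk_mb * code.total_chunks,
    }


rs = RSCode(10, 4)
layout = chunk_layout(80, rs)
print(layout["chunk_mb"], layout["stored_mb"])   # 8 112
print(layout["parity_chunks"])                   # ['c11', 'c12', 'c13', 'c14']
print(rs.storage_overhead, rs.tolerated_losses)  # 7/5 4
```

Under that assumption each chunk holds 8MB, the block occupies 112MB on disk (1.4x its size), and any 4 of its 14 chunks can be lost. For comparison, 3-way replication costs 3x and tolerates 2 lost copies.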

Scalability: Support Exabyte-Scale Clusters (Read)

To read a file path, the Client Library walks the metadata layers and then fetches the data:
1. Name layer: resolve FilePath to FileID.
2. File layer: resolve FileID to its list of blocks.
3. Block layer: resolve each block to its list of chunks.
4. Chunk Store: fetch the data from the chunks.
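The four read steps can be sketched as lookups through three maps followed by a chunk fetch. This is a minimal in-memory model, assuming each layer is a Python dict (on the slides the layers live in a key-value store) and that a block is just the concatenation of its data chunks, so parity, decoding, and locating the storage node that holds each chunk are left out. `MetadataStore`, `resolve`, and `read_file` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataStore:
    # Hypothetical stand-ins for the three metadata layers.
    names: dict = field(default_factory=dict)   # Name layer: dir ID -> {child name: child ID}
    files: dict = field(default_factory=dict)   # File layer: file ID -> [block IDs]
    blocks: dict = field(default_factory=dict)  # Block layer: block ID -> [chunk IDs]


def resolve(path: str, names: dict) -> str:
    """Step 1: walk the Name layer one path component at a time."""
    node = "/"
    for part in path.strip("/").split("/"):
        node = names[node][part]  # KeyError if a component does not exist
    return node


def read_file(path: str, meta: MetadataStore, chunk_store: dict) -> bytes:
    file_id = resolve(path, meta.names)         # 1. FilePath -> FileID
    data = bytearray()
    for block_id in meta.files[file_id]:        # 2. FileID -> list of blocks
        for chunk_id in meta.blocks[block_id]:  # 3. block -> list of chunks
            data += chunk_store[chunk_id]       # 4. fetch data from the chunk
    return bytes(data)


meta = MetadataStore(
    names={"/": {"dir1": "d1", "dir2": "d2"}, "d1": {"file1": "f1", "file2": "f2"}},
    files={"f1": ["b1", "b2"]},
    blocks={"b1": ["c1", "c2"], "b2": ["c3"]},
)
chunk_store = {"c1": b"hel", "c2": b"lo ", "c3": b"world"}
print(read_file("/dir1/file1", meta, chunk_store))  # b'hello world'
```

Each step touches only one layer, which is what lets every layer be partitioned and scaled on its own.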

Scalability: Support Exabyte-Scale Clusters (Add Block to File)

To add a block to a file, the Client Library:
1. Block layer: gets a list of suitable storage nodes.
2. Chunk Store: stores the chunks on those storage nodes.
3. Block layer: stores the block-to-chunk map.
4. File layer: adds the block to the file.
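A matching sketch of the four write steps, again using hypothetical in-memory stand-ins (`Cluster`, `pick_nodes`, `add_block`) rather than Tectonic's real interfaces. The naive placement policy (take the first n nodes) is an assumption made to keep the example deterministic.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    # Hypothetical stand-ins for the Chunk Store and two metadata layers.
    nodes: list
    chunk_store: dict = field(default_factory=dict)  # (node, chunk ID) -> bytes
    files: dict = field(default_factory=dict)        # File layer: file ID -> [block IDs]
    blocks: dict = field(default_factory=dict)       # Block layer: block ID -> [(node, chunk ID)]
    _ids: itertools.count = field(default_factory=itertools.count)

    def pick_nodes(self, n: int) -> list:
        # Step 1: choose n distinct storage nodes. Real placement
        # (load, failure domains, etc.) is not modeled here.
        if n > len(self.nodes):
            raise ValueError("not enough storage nodes")
        return self.nodes[:n]

    def add_block(self, file_id: str, chunks: list) -> str:
        block_id = f"b{next(self._ids)}"
        nodes = self.pick_nodes(len(chunks))                        # 1. suitable nodes
        placement = []
        for i, (node, payload) in enumerate(zip(nodes, chunks)):   # 2. store chunks
            chunk_id = f"{block_id}.c{i + 1}"
            self.chunk_store[(node, chunk_id)] = payload
            placement.append((node, chunk_id))
        self.blocks[block_id] = placement                           # 3. block -> chunks
        self.files.setdefault(file_id, []).append(block_id)         # 4. file -> block
        return block_id


cluster = Cluster(nodes=["n1", "n2", "n3", "n4"])
block_id = cluster.add_block("f1", [b"aa", b"bb", b"cc"])
print(cluster.files)             # {'f1': ['b0']}
print(cluster.blocks[block_id])  # [('n1', 'b0.c1'), ('n2', 'b0.c2'), ('n3', 'b0.c3')]
```

One plausible reason for this ordering, which is our reading rather than something the slide states: the Block and File layers are updated only after the chunks are stored, so a reader following the metadata never reaches a chunk that has not been written yet.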

Performance: Match Specialized Systems

Specialized storage systems optimize for a specific access pattern and its performance requirements. Tectonic instead uses tenant-specific optimizations to match the performance of specialized systems. These optimizations are enabled by the Client Library, which runs in the application binary. The Client Library allows Tectonic operations to be composed flexibly, and each tenant can configure that composition to suit its needs.
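To illustrate what configuring a per-tenant composition might look like, the sketch below encodes the two append strategies from the appends slide as configuration values and builds a step list from them. `TenantConfig` and `append_plan` are hypothetical; the slides do not describe the Client Library's actual configuration interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantConfig:
    """Hypothetical per-tenant knobs a client library could expose."""
    name: str
    block_mb: int             # seal and encode the block at this size
    replicas_while_open: int  # 0 means buffer in client memory instead
    rs_data: int
    rs_parity: int
    consistency: str          # "per-append" or "on-close"


def append_plan(cfg: TenantConfig) -> list[str]:
    """Compose generic write steps according to one tenant's config."""
    steps = []
    if cfg.replicas_while_open:
        steps.append(f"append to {cfg.replicas_while_open} replicas of partial block")
    else:
        steps.append("append to in-memory buffer")
    steps.append(f"block full at {cfg.block_mb}MB")
    if cfg.replicas_while_open:
        # Replicated data must be read back before it can be RS-encoded.
        steps.append("read block back")
    steps.append(f"RS({cfg.rs_data},{cfg.rs_parity})-encode block and store chunks")
    if cfg.consistency == "per-append":
        steps.append("read-after-write consistency on every append")
    else:
        steps.append("read-after-write consistency after file close")
    return steps


WAREHOUSE = TenantConfig("data warehouse", 72, 0, 9, 6, "on-close")
BLOBS = TenantConfig("blob storage", 80, 3, 10, 4, "per-append")
for cfg in (WAREHOUSE, BLOBS):
    print(cfg.name, append_plan(cfg))
```

The point of the design is that both tenants share the same building blocks (buffering or replication, sealing, encoding) and differ only in how the client library strings them together.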

Tenant-specific Optimizations: Appends

Data warehouse
- Files are large (100s of MBs) and are read only after the creator closes the file.
- Goal: minimize the bytes written to store a file, to improve overall throughput.
- Write path: append data to an in-memory buffer; when the block is full (72MB), RS-encode it and store the chunks, RS(9,6).
- Read-after-write consistency only after the file is closed.

Blob storage
- Blob sizes are small (100s of KBs), and blobs are appended to a log-structured file.
- Blobs need to be persisted before the upload is acknowledged, with read-after-write consistency on every append.
- Goal: minimize latency for blob uploads, and optimize storage later.
- Write path: append blob data to 3-way replicated copies of a partial block on storage nodes; when the block is full (80MB), read the block back, RS-encode it, and store the chunks, RS(10,4).
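A back-of-envelope count of the bytes each strategy writes shows why the data warehouse path favors throughput. The sketch assumes equal-sized chunks and counts only chunk and replica payloads, ignoring metadata, retries, and the later deletion of the temporary replicas; `bytes_written_mb` is a hypothetical helper.

```python
from fractions import Fraction


def bytes_written_mb(block_mb, rs_data, rs_parity, replicas_while_open=0):
    """MB written to storage nodes to persist one full block (rough sketch)."""
    chunk_mb = Fraction(block_mb, rs_data)
    encoded = chunk_mb * (rs_data + rs_parity)  # final RS data + parity chunks
    replicated = block_mb * replicas_while_open  # temporary replicas during appends
    return replicated + encoded


warehouse = bytes_written_mb(72, 9, 6)                     # buffer in memory, encode once
blob = bytes_written_mb(80, 10, 4, replicas_while_open=3)  # replicate, then re-encode
print(warehouse, warehouse / 72)  # 120 5/3
print(blob, blob / 80)            # 352 22/5
```

Under these assumptions the data warehouse writes about 1.67x the block size once, while blob storage writes 4.4x (3x for the temporary replicas plus 1.4x for the final RS(10,4) chunks) in exchange for low-latency, durable appends.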

Results
- Tectonic clusters are ~10x the size of HDFS clusters, which simplifies production operations.
- Blob storage latency in Tectonic is comparable to Haystack.
- In a multitenant cluster, the data warehouse uses surplus IO from blob storage to serve its peaks.

Efficiency From Storage Consolidation

[Figure: IOPS (thousands) vs. time (hours) over a ~70-hour window, annotated "Blob storage: surplus IO available"]

Efficiency From Storage Consolidation

[Figure: the same IOPS (thousands) vs. time (hours) plot, additionally annotated "Data warehouse: peaks need excess IO, use surplus from blob storage"]
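The consolidation argument comes down to provisioning for the peak of the combined load instead of the sum of each system's peaks. The IOPS values below are made up for illustration and are not the data plotted on the slide; the model also ignores the tenant isolation a real multitenant cluster needs. The function names are hypothetical.

```python
def isolated_capacity(*series):
    # Separate systems: each is provisioned for its own peak.
    return sum(max(s) for s in series)


def shared_capacity(*series):
    # One shared cluster: provisioned for the peak of the combined load.
    return max(sum(point) for point in zip(*series))


# Hypothetical hourly IOPS demand, in thousands (NOT the slide's data).
blob = [900, 1000, 800, 700]
warehouse = [300, 200, 600, 900]

print(isolated_capacity(blob, warehouse))  # 1900
print(shared_capacity(blob, warehouse))    # 1600
```

Because the two tenants peak at different times in this example, the shared cluster needs 1600K IOPS instead of 1900K; the difference is IO that isolated systems would have stranded.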

Tectonic Provides Datacenter-Scale Storage

Tectonic replaced the previous constellation of specialized storage systems, giving:
- Simpler operations
- Better resource utilization

Tectonic's design addresses the key challenges:
- Scalability: disaggregated, linearly scalable components
- Performance: tenant-specific optimizations via the Client Library

Thank You