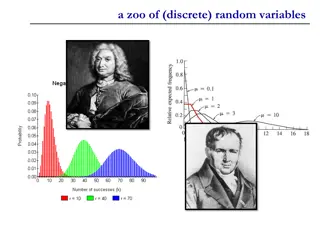

Discrete Optimization Methods Overview

Discrete optimization methods, such as total enumeration and constraint relaxations, are valuable techniques for solving problems with discrete decision variables. Total enumeration involves exhaustively trying all possibilities to find optimal solutions, while constraint relaxations offer a more tractable approach. Exponential growth can make total enumeration impractical for models with many variables. An example involving fundraising projects illustrates the application of these methods in real-world scenarios.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Chapter 12 Discrete Optimization Methods

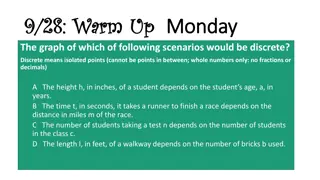

12.1 Solving by Total Enumeration If model has only a few discrete decision variables, the most effective method of analysis is often the most direct: enumeration of all the possibilities. [12.1] Total enumeration solves a discrete optimization by trying all possible combinations of discrete variable values, computing for each the best corresponding choice of any continuous variables. Among combinations yielding a feasible solution, those with the best objective function value are optimal. [12.2]

Swedish Steel Model with All-or-Nothing Constraints min 16(75)y1+10(250)y2 +8 x3+9 x4+48 x5+60 x6 +53 x7 s.t. 75y1+ 250y2+ x3+ x4+ x5+ x6 + x7 = 1000 0.0080(75)y1+ 0.0070(250)y2+0.0085x3+0.0040x4 0.0080(75)y1+ 0.0070(250)y2+0.0085x3+0.0040x4 0.180(75)y1+ 0.032(250)y2+ 1.0 x5 0.180(75)y1+ 0.032(250)y2+ 1.0 x5 0.120(75)y1+ 0.011(250)y2+ 1.0 x6 0.120(75)y1+ 0.011(250)y2+ 1.0 x6 0.001(250)y2+ 1.0 x7 0.001(250)y2+ 1.0 x7 x3 x7 0 y1, y2= 0 or 1 y1* = 1, y2* = 0, x3* = 736.44, x4* = 160.06 x5* = 16.50, x6* = 1.00, x7* = 11.00 (12.1) 6.5 7.5 30.0 30.5 10.0 12.0 11.0 13.0 Cost = 9967.06

Swedish Steel Model with All-or-Nothing Constraints Discrete Combination y1 0 0 1 1 Corresponding Continuous Solution Objective Value y2 0 1 0 1 x3 x4 x5 x6 x7 823.11 646.67 736.44 561.56 125.89 63.33 160.06 94.19 30.00 22.00 16.50 8.50 10.00 7.25 1.00 0.00 11.00 10.75 11.00 10.75 10340.89 10304.08 9967.06 10017.94

Exponential Growth of Cases to Enumerate Exponential growth makes total enumeration impractical with models having more than a handful of discrete decision variables. [12.3]

12.2 Relaxation of Discrete Optimization Models Constraint Relaxations Model ( ?) is a constraint relaxations of model (P) if every feasible solution to (P) is also feasible in ( ?) and both models have the same objective function. [12.4] Relaxation should be significantly more tractable than the models they relax, so that deeper analysis is practical. [12.5]

Example 12.1 Bison Booster The Boosters are trying to decide what fundraising projects to undertake at the next country fair. One option is customized T- shirts, which will sell for $20 each; the other is sweatshirts selling for $30. History shows that everything offered for sale will be sold before the fair is over. Materials to make the shirts are all donated by local merchants, but the Boosters must rent the equipment for customization. Different processes are involved, with the T-shirt equipment renting at $550 for the period up to the fair, and the sweatshirt equipment for $720. Display space presents another consideration. The Boosters have only 300 square feet of display wall area at the fair, and T-shirts will consume 1.5 square feet each, sweatshirts 4 square feet each. What plan will net the most income?

Bison Booster Example Model Decision variables: x1 number of T-shirts made and sold x2 number of sweatshirts made and sold y1 1 if T-shirt equipment is rented; =0 otherwise y1 1 if sweatshirt equipment is rented; =0 otherwise Max 20x1 + 30x2 550y1 720y2 (Net income) s.t. 1.5x1 + 4x2 300 x1 200y1 x2 75y2 x1, x2 0 y1, y2 = 0 or 1 x1* = 200, x2* = 0, y1* = 1, y2* = 0 (Display space) (T-shirt if equipment) (Sweatshirt if equipment) (12.2) Net Income = 3450

Constraint Relaxation Scenarios Double capacities 1.5x1 + 4x2 600 x1 400y1 x2 150y2 x1, x2 0 y1, y2 = 0 or 1 Dropping first constraint 1.5x1+ 4x2 300 x1 200y1 x2 75y2 x1, x2 0 y1, y2 = 0 or 1 Net Income = 7450 ?1* = 400, ?2* = 0, ?1* = 1, ?2 * = 0 Net Income = 4980 ?1* = 200, ?2* = 75, ?1* = 1, ?2 * = 1

Constraint Relaxation Scenarios Treat discrete variables as continuous 1.5x1 + 4x2 300 x1 200y1 x2 75y2 x1, x2 0 0 y1 1 0 y2 1 Net Income = 3450 ?1* = 200, ?2* = 0, ?1* = 1, ?2 * = 0

Linear Programming Relaxations Continuous relaxations (linear programming relaxations if the given model is an ILP) are formed by treating any discrete variables as continuous while retaining all other constraints. [12.6] LP relaxations of ILPs are by far the most used relaxation forms because they bring all the power of LP to bear on analysis of the given discrete models. [12.7]

Proving Infeasibility with Relaxations If a constraint relaxation is infeasible, so is the full model it relaxes. [12.8]

Solution Value Bounds from Relaxations The optimal value of any relaxation of a maximize model yields an upper bound on the optimal value of the full model. The optimal value of any relaxation of a minimize model yields an lower bound. [12.9] Feasible solutions in relaxation Feasible solutions in true model True optimum

Example 11.3 EMS Location Planning 8 2 9 1 6 4 5 10 3 7

Minimum Cover EMS Model 10 ??? ?? (12.3) 1 1 x4 + x6 x4 + x5 x4 + x5 + x6 1 x4 + x5 + x7 1 x8 + x9 x6 + x9 x5 + x6 x5 + x7 + x10 1 x8 + x9 x9 + x10 x10 ?=1 s.t. 1 x2 x1 + x2 1 x1 + x3 1 x3 x3 x2 x2 + x4 1 x3 + x4 1 x8 1 1 1 x2* = x3* = x4* = x6* = x8* = x10* =1, x1* = x5* = x7* = x9* = 0 1 1 1 1 1 1 xi = 0 or 1 j=1, ,10 1

Minimum Cover EMS Model with Relaxation 10 ??? ?? (12.4) 1 1 x4 + x6 x4 + x5 x4 + x5 + x6 1 x4 + x5 + x7 1 x8 + x9 x6 + x9 x5 + x6 x5 + x7 + x10 1 x8 + x9 x9 + x10 x10 ?=1 s.t. 1 x2 x1 + x2 1 x1 + x3 1 x3 x3 x2 x2 + x4 1 x3 + x4 1 x8 ?2* = ?3 * = ?8* = ?10* =1, ?4* = ?5* = ?6* = ?9* = 0.5 1 1 1 1 1 1 0 xj 1 j=1, ,10 1 1 1 1

Optimal Solutions from Relaxations If an optimal solution to a constraint relaxation is also feasible in the model it relaxes, the solution is optimal in that original model. [12.10]

Rounded Solutions from Relaxations Many relaxations produce optimal solutions that are easily rounded to good feasible solutions for the full model. [12.11] The objective function value of any (integer) feasible solution to a maximizing discrete optimization problem provides a lower bound on the integer optimal value, and any (integer) feasible solution to a minimizing discrete optimization problem provides an upper bound. [12.12]

Rounded Solutions from Relaxation: EMS Model Ceiling ?1 = ?1 = 0 = 0 ?2 = ?2 = 1 = 1 ?3 = ?3 = 1 = 1 ?4 = ?4 = 0.5 = 1 ?5 = ?5 = 0.5 = 1 ?6 = ?6 = 0.5 = 1 ?7 = ?7 = 0 = 0 ?8 = ?8 = 1 = 1 ?9 = ?9 = 0.5 = 1 ?10 = ?10 = 1 = 1 ?=1 Floor ?1 = ?1 = 0 = 0 ?2= ?2 = 1 = 1 ?3= ?3 = 1 = 1 ?4 = ?4 = 0.5 = 0 ?5 = ?5 = 0.5 = 0 ?6 = ?6 = 0.5 = 0 ?7= ?7 = 0 = 0 ?8= ?8 = 1 = 1 ?9 = ?9 = 0.5 = 0 ?10= ?10 = 1 = 1 ?=1 (12.5) 10 ?j= 8 10 ??= 4

12.3 Stronger LP Relaxations, Valid Inequalities, and Lagrangian Relaxation A relaxation is strong or sharp if its optimal value closely bounds that of the true model, and its optimal solution closely approximates an optimum in the full model. [12.13] Equally correct ILP formulations of a discrete problem may have dramatically different LP relaxation optima. [12.14]

Choosing Big-M Constants Whenever a discrete model requires sufficiently large big-M s, the strongest relaxation will result from models employing the smallest valid choice of those constraints. [12.15]

Bison Booster Example Model with Relaxation in Big-M Constants Max 20x1 + 30x2 550y1 720y2 1.5x1 + 4x2 300 x1 200y1 x2 75y2 x1, x2 0 y1, y2 = 0 or 1 (Net income) (Display space) (T-shirt if equipment) (Sweatshirt if equipment) Net Income = 3450 x1* = 200, x2* = 0, y1* = 1, y2* = 0 s.t. (12.2) Max 20x1 + 30x2 550y1 720y2 1.5x1 + 4x2 300 x1 10000y1 x2 10000y2 x1, x2 0 y1, y2 = 0 or 1 (Net income) (Display space) (T-shirt if equipment) (Sweatshirt if equipment) Net Income = 3989 ?1 = 200, ?2 = 0, ?1 = 0.02, ?2 = 0 s.t. (12.6)

Valid Inequalities A linear inequality is a valid inequality for a given discrete optimization model if it holds for all (integer) feasible solutions to the model. [12.16] To strengthen a relaxation, a valid inequality must cut off (render infeasible) some feasible solutions to the current LP relaxation that are not feasible in the full ILP model. [12.17]

Example 11.10 Tmark Facilities Location Fixed Cost 2400 7000 3600 1600 3000 4600 9000 2000 i 1 2 3 4 5 6 7 8 4 6 7 1 5 2 3 8

Example 11.10 Tmark Facilities Location Zone j 1 2 3 4 5 6 7 8 9 10 11 12 13 14 Possible Center Location, i 3 4 1.10 0.90 0.90 1.30 0.80 1.70 1.40 0.50 0.60 0.70 1.10 0.60 1.25 0.80 1.00 1.10 1.70 1.30 1.30 1.50 2.40 1.90 2.20 1.80 1.90 1.90 2.00 2.20 Call 1 2 5 6 7 8 Demand 250 150 1000 80 50 800 325 100 475 220 900 1500 430 200 1.25 0.80 0.70 0.90 0.80 1.10 1.40 1.30 1.50 1.35 2.10 1.80 1.60 2.00 1.40 0.90 0.40 1.20 0.70 1.70 1.40 1.50 1.90 1.60 2.90 2.60 2.00 2.40 1.50 1.40 1.60 1.55 1.45 0.90 0.80 0.70 0.40 1.00 1.10 0.95 1.40 1.50 1.90 2.20 2.50 1.70 1.80 1.30 1.00 1.50 0.80 1.20 2.00 0.50 1.00 1.20 2.00 2.10 2.05 1.80 1.70 1.30 1.00 1.50 0.70 1.10 0.80 2.00 0.90 1.10 2.10 1.80 1.60 1.40 1.30 1.40 1.10 1.00 0.80 0.70 1.20 1.00 0.80 0.80

Tmark Facilities Location Example with LP Relaxation 8 14 8 (12.8) ??? (??,???)??,?+ ???? ?=1 ?=1 ?=1 8 s.t. ??,?= 1 ??? ??? ? (????? ? ????) ?=1 14 1500?? ????,? 5000?? (???????? ?? ?) y4*=y8*= 1 y1*=y2*= y3*=y5*= y6*=y7*= 0 Total Cost = 10153 LP Relaxation ?1= 0.230, ?2= 0.000, ?3= 0.000, ?4= 0.301, ?5= 0.115, ?6= 0.000, ?7= 0.000, ?8= 0.650 Total Cost = 8036.60 ?=1 ??,? 0 ??? ??? ?,? ??= 0 ?? 1 ??? ??? ?

Tmark Facilities Location Example with LP Relaxation 8 14 8 (12.8) ??? (??,???)??,?+ ???? ?=1 ?=1 ?=1 8 s.t. ??,?= 1 ??? ??? ? (????? ? ????) ?=1 14 1500?? ????,? 5000?? (???????? ?? ?) LP Relaxation ?1= 0.000, ?2= 0.000, ?3= 0.000, ?4= 0.537, ?5= 0.000, ?6= 0.000, ?7= 0.000, ?8= 1.000 Total Cost = 10033.68 Insolvable by Excel. ?=1 ??,? 0 ??? ??? ?,? ??,? ?? ??? ??? ?,? ??= 0 ?? 1 ??? ??? ?

Lagrangian Relaxations Lagrangian relaxations partially relax some of the main, linear constraints of an ILP by moving them to the objective function as terms + ?? ?? ??,??? ? Here ?? is a Lagrangian multiplier chosen as the relaxation is formed. If the relaxed constraint has form ???,??? ??, multiplier ?? 0 for a maximize model and ?? 0 for a minimize. If the relaxed constraint has form ???,??? ??, multiplier ?? 0 for a maximize model and ?? 0 for a minimize model. Equality constraints ???,???= ?? have URS multiplier ??. [12.18]

Example 11.6 CDOT Generalized Assignment The Canadian Department of Transportation encountered a problem of the generalized assignment form when reviewing their allocation of coast guard ships on Canada s Pacific coast. The ships maintain such navigational aids as lighthouses and buoys. Each of the districts along the coast is assigned to one of a smaller number of coast guard ships. Since the ships have different home bases and different equipment and operating costs, the time and cost for assigning any district varies considerably among the ships. The task is to find a minimum cost assignment. Table 11.6 shows data for our (fictitious) version of the problem. Three ships-the Estevan, the Mackenzie, and the Skidegate--are available to serve 6 districts. Entries in the table show the number of weeks each ship would require to maintain aides in each district, together with the annual cost (in thousands of Canadian dollars). Each ship is available SG weeks per year.

Example 11.6 CDOT Generalized Assignment District, i 3 510 10 20 60 120 10 1 2 4 30 11 40 10 390 15 5 6 20 9 450 17 30 12 Ship, j 1.Estevan Cost Time Cost Time Cost Time 130 30 460 10 40 70 30 50 150 20 370 10 340 13 30 10 40 8 2.Mackenzie 3.Skidegate ??,? 1 if district i is assigned to ship j 0 otherwise

CDOT Assignment Model min 130x1,1+460x1,2 +40x1,3 +30x2,1+150x2,2 +370x2,3 +510x3,1+20x3,2 +120x3,3 +30x4,1+40x4,2 +390x4,3 +340x5,1+30x5,2 +40x5,3 +20x6,1+450x6,2 +30x6,3 s.t. x2,1 + x2,2 + x2,3 = 1 x3,1 + x3,2 + x3,3 = 1 x4,1 + x4,2 + x4,3 = 1 x5,1 + x5,2 + x5,3 = 1 x6,1 + x6,2 + x6,3 = 1 30x1,1 + 50x2,1 + 10x3,1 + 11x4,1 + 13x5,1 + 9x6,1 10x1,2 + 20x2,2 + 60x3,2 + 10x4,2 + 10x5,2 + 17x6,2 50 70x1,3 + 10x2,3 + 10x3,3 + 15x4,3 + 8x5,3 + 12x6,3 x1,1 + x1,2 + x1,3 = 1 x1,1*=x4,1*=x6,1*=x2,2*= x5,2*=x3,3*=1 Total Cost = 480 50 50 (12.12) Xi,j = 0 or 1 i=1, ,6; j=1, ,3

Largrangian Relaxation of the CDOT Assignment Example min 130x1,1+460x1,2 +40x1,3 +30x2,1+150x2,2 +370x2,3 +510x3,1+20x3,2 +120x3,3 +30x4,1+40x4,2 +390x4,3 +340x5,1+30x5,2 +40x5,3 +20x6,1+450x6,2 +30x6,3 +?1(1- x1,1 - x1,2 - x1,3 ) +?2(1- x2,1 - x2,2 - x2,3 ) +?3(1- x3,1 - x3,2 - x3,3 ) +?4(1- x4,1 - x4,2 - x4,3 ) +?5(1- x5,1 - x5,2 - x5,3 ) +?6(1- x6,1 + x6,2 + x6,3 ) s.t. 30x1,1 + 50x2,1 + 10x3,1 + 11x4,1 + 13x5,1 + 9x6,1 10x1,2 + 20x2,2 + 60x3,2 + 10x4,2 + 10x5,2 + 17x6,2 50 70x1,3 + 10x2,3 + 10x3,3 + 15x4,3 + 8x5,3 + 12x6,3 50 50 Xi,j = 0 or 1 i=1, ,6; j=1, ,3 (12.13)

More on Lagrangian Relaxations Constraints chosen for dualization in Lagrangian relaxations should leave a still-integer program with enough special structure to be relatively tractable. [12.19] The optimal value of any Lagrangian relaxation of a maximize model using multipliers conforming to [12.18] yields an upper bound on the optimal value of the full model. The optimal value of any valid Lagrangian relaxation of a minimize model yields a lower bound. [12.20] A search is usually required to identify Lagrangian multiplier values ?? defining a strong Lagrangian relaxation. [12.21]

Largrangian Relaxation of the CDOT Assignment Example min 130x1,1+460x1,2 +40x1,3 +30x2,1+150x2,2 +370x2,3 +510x3,1+20x3,2 +120x3,3 +30x4,1+40x4,2 +390x4,3 +340x5,1+30x5,2 +40x5,3 +20x6,1+450x6,2 +30x6,3 +?1(1- x1,1 - x1,2 - x1,3 ) +?2(1- x2,1 - x2,2 - x2,3 ) +?3(1- x3,1 - x3,2 - x3,3 ) +?4(1- x4,1 - x4,2 - x4,3 ) +?5(1- x5,1 - x5,2 - x5,3 ) +?6(1- x6,1 + x6,2 + x6,3 ) s.t. 30x1,1 + 50x2,1 + 10x3,1 + 11x4,1 + 13x5,1 + 9x6,1 10x1,2 + 20x2,2 + 60x3,2 + 10x4,2 + 10x5,2 + 17x6,2 50 70x1,3 + 10x2,3 + 10x3,3 + 15x4,3 + 8x5,3 + 12x6,3 50 50 (12.13) Xi,j = 0 or 1 i=1, ,6; j=1, ,3 Using ?1=300, ?2=200, ?3=200, ?4=45, ?5=45, ?6=30 x1,1*= x2,2*= x3,3*= x4,1*= x4,2*= x5,2*= x5,3*= x6,1 =1 Total Cost = 470

12.4 Branch and Bound Search Branch and bound algorithms combine a subset enumeration strategy with the relaxation methods. They systematically form classes of solutions and investigate whether the classes can contain optimal solutions by analyzing associated relaxations.

Example 12.2 River Power River Power has 4 generators currently available for production and wishes to decide which to put on line to meet the expected 700- MW peak demand over the next several hours. The following table shows the cost to operate each generator (in $1000/hr) and their outputs (in MW). Units must be completely on or completely off. Generator, j 2 12 600 1 7 3 5 4 14 1600 Operating Cost Output Power 300 500

Example 12.2 River Power The decision variables are defined as ?? 1 if generator j is turned on 0 otherwise min 7x1+ 12x2 + 5x3 + 14x4 s.t. 300x1 + 600x2 + 500x3 + 1600x4 xi = 0 or 1 j=1, ,4 700 x1* = x3* = 1 Cost = 12

Branch and Bound Search: Definitions A partial solution has some decision variables fixed, with others left free or undetermined. We denote free components of a partial solution by the symbol #. [12.22] The completions of a partial solution to a given model are the possible full solutions agreeing with the partial solution on all fixed components. [12.23]

Tree Search Branch and bound search begins at initial or root solution x(0)= (#, ,#) with all variables free. [12.24] Branch and bound searches terminate or fathom a partial solution when they either identify a best completion or prove that none can produce an optimal solution in the overall model. [12.25] When a partial solution cannot be terminated in a branch- and-bound search of a 0-1 discrete optimization model, it is branched by creating a 2 subsidiary partial solutions derived by fixing a previously free binary variable. One of these partial solutions matches the current except that the variable chosen is fixed =1, and the other =0. [12.26]

Tree Search Branch and bound search stops when every partial solution in the tree has been either branched or terminated. [12.27] Depth first search selects at each iteration an active partial solution with the most components fixed (i.e., one deepest in the search tree). [12.28]

LP-Based Branch and Bound Solution of the River Power Example x(0)=(#,#,#,#), ? = + ?(0)=(0,0,0,.4375); ?=6.125 0 x(1)=(#,#,#,1) ?(1)=(0,0,0,1) ?=14 x(2)=(#,#,#,0) ?(2)=(0,.33,1,0); ?=9 X4=0 X4=1 2 1 x(6)=(#,0,#,0) ?(6)=(.67,0,1,0); ?=9.67 X2=1 X2=0 Terminated by solving ? = 14 x(3)=(#,1,#,0) ?(3)=(0,1,.2,0); ?=13 6 3 ?(7)=(1,0,.8,0) ?=11 X1=0 X1=1 7 10 X3=0 X3=1 X3=1 ?(10)=(0,0,1,0) Infeasible 4 5 X3=0 ?(5)=(.33,1,1,0); ?=14.33 Terminated by bound ?(4)=(0,1,1,0); ?=17 Terminated by bound ?(8)=(1,0,1,0) ?=12 ?(9)=(1,0,0,0) Infeasible 9 8 T. by solving; ? = 12

Incumbent Solutions The incumbent solution at any stage in a search of a discrete model is the best (in terms of objective value) feasible solution known so far. We denote the incumbent solution ? and its objective function value ?. [12.29] If a branch and bound search stops as in [12.27], with all partial solutions having been either branched or terminated, the final incumbent solution is a global optimum if one exists. Otherwise, the model is infeasible. [12.30]

Candidate Problems The candidate problem associated with any partial solution to an optimization model is the restricted version of the model obtained when variables are fixed as in the partial solution. [12.31] The feasible completions of any partial solution are exactly the feasible solutions to the corresponding candidate problem, and thus the objective value of the best feasible completion is the optimal objective value of the candidate problem. [12.32]

Terminating Partial Solutions with Relaxations If any relaxation of a candidate problem proves infeasible, the associated partial solution can be terminated because it has no feasible completions. [12.33] If any relaxation of a candidate problem has optimal objective value no better than the current incumbent solution value, the associated partial solution can be terminated because no feasible completion can improve on the incumbent. [12.34] If an optimal solution to any constraint relaxation of a candidate problem is feasible in the full candidate, it is a best feasible completion of the associated partial solution. After checking whether a new incumbent has been discovered, the partial solution can be terminated. [12.35]

Algorithm 12A: LP-Based Branch and Bound (0-1 ILPs) Step 0: Initialization. Make the only active partial solution the one with all discrete variable free, and initialize solution index t 0. If any feasible solutions are known for the model, also choose the best as incumbent solution ? with objective value ?. Otherwise, set ? - if the model maximizes, and ? + if the model minimizes. Step 1: Stopping. If active partial solutions remain, select one as x(t), and proceed to Step 2. Otherwise, stop. If there is an incumbent solution ?, it is optimal, and if not, the model is infeasible. Step 2: Relaxation. Attempt to solve the LP relaxation of the candidate problem corresponding to x(t).

Algorithm 12A: LP-Based Branch and Bound (0-1 ILPs) Step 3: Termination by Infeasibility. If the LP relaxation proved infeasible, there are no feasible completions of the partial solution x(t). Terminate x(t),increment t t+1, and return to Step 1. Step 4: Termination by Bound. If the model maximizes and LP relaxation value ? satisfies ? ?, or it minimizes and ? ?, the best feasible completion of partial solution x(t) cannot improve on the incumbent. Terminate x(t),increment t t+1, and return to Step 1.

Algorithm 12A: LP-Based Branch and Bound (0-1 ILPs) Step 5: Termination by Solving. If the LP relaxation optimum ?(t) satisfies all binary constraints of the model, it provides the best feasible completion of partial solution x(t). After saving it as new incumbent solution ? ?(t); ? ? Terminate x(t),increment t t+1, and return to Step 1. Step 6: Branching. Choose some free binary-restricted component xp that was fractional in the LP relaxation optimum, and branch x(t) by creating two new actives. One is identical to x(t) except that xpis fixed = 0, and the other the same except that xpis fixed = 1. Then increment t t+1, and return to Step 1.

Branching Rules for LP-Based Branch and Bound LP-based branch and bound algorithms always branch by fixing an integer-restricted decision variable that had a fractional value in the associated candidate problem relaxation. [12.36] When more than one integer-restricted variable is fractional in the relaxation optimum, LP-based branch and bound algorithms often branch by fixing the one closest to an integer value. [12.37] Tie-breaker

LP-Based Branch and Bound Solution of the River Power Example The decision variables are defined as ?? 1 if generator j is turned on 0 otherwise min 7x1+ 12x2 + 5x3 + 14x4 s.t. 300x1 + 600x2 + 500x3 + 1600x4 xi = 0 or 1 j=1, ,4 700 x1* = x3* = 1 Cost = 12

LP-Based Branch and Bound Solution of the River Power Example x(0)=(#,#,#,#), ? = + ?(0)=(0,0,0,.4375); ?=6.125 0 x(1)=(#,#,#,1) ?(1)=(0,0,0,1) ?=14 x(2)=(#,#,#,0) ?(2)=(0,.33,1,0); ?=9 X4=0 X4=1 2 1 x(6)=(#,0,#,0) ?(6)=(.67,0,1,0); ?=9.67 X2=1 X2=0 Terminated by solving ? = 14 x(3)=(#,1,#,0) ?(3)=(0,1,.2,0); ?=13 6 3 ?(7)=(1,0,.8,0) ?=11 X1=0 X1=1 7 10 X3=0 X3=1 X3=1 ?(10)=(0,0,1,0) Infeasible 4 5 X3=0 ?(5)=(.33,1,1,0); ?=14.33 Terminated by bound ?(4)=(0,1,1,0); ?=17 Terminated by bound ?(8)=(1,0,1,0) ?=12 ?(9)=(1,0,0,0) Infeasible 9 8 T. by solving; ? = 12