Curvilinear Regression

Discover the nuances of curvilinear regression, polynomial modeling, and interactions in statistical analysis. Understand the challenges of collinearity, explore quadratic and cubic trends, and learn the sequence of tests to model curves effectively. Dive into the difference between linear and nonlinear models, and delve into the complexities of modeling continuous variable interactions. Expand your knowledge on moderators versus mediators and how to test for their presence. Unravel the power and flexibility of polynomial regression in fitting curves, from linear to quadratic to cubic equations. Learn how to leverage polynomial regression to capture intricate relationships in your data. Visualize the impact of changing weights in quadratic functions and the sharpness of bends in cubic functions. Be equipped with essential skills to enhance your regression modeling techniques.

Presentation Transcript

Curvilinear Regression: Modeling Departures from the Straight Line (Curves and Interactions)

Skill Set Why is collinearity likely to be a problem in using polynomial regression? How does polynomial regression model quadratic and cubic trends? Describe the sequence of tests used to model curves in polynomial regression.

More skills How do you model interactions of continuous variables with regression? What is the difference between a moderator and a mediator? How do you test for the presence of each?

Linear vs. Nonlinear Models. Typical linear model: $Y' = a + b_1 X_1 + b_2 X_2$. Typical nonlinear models: $Y' = a + b_1 X_1 + b_2 X_1^{2} + b_3 X_2$ and $Y' = a + b_1 \log(X_1)$. We don't use models like this: $Y' = a + b_1^{2} X_1 + b_2^{3} X^{2}$. "Nonlinear" here means nonlinear in the terms (the IVs), not in the coefficients.
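A minimal sketch of why a model with a squared term can still be fit with ordinary linear regression: the squared IV is just another column in the design matrix, and the weights enter linearly. The data and the "true" weights below are made up for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
x1 = rng.uniform(0, 10, size=50)
x2 = rng.uniform(0, 10, size=50)

# Hypothetical weights, chosen only for this illustration.
a, b1, b2, b3 = 1.0, 2.0, -0.25, 0.5
y = a + b1 * x1 + b2 * x1**2 + b3 * x2  # curved in X1, linear in the weights

# Design matrix: intercept, X1, X1 squared, X2.
X = np.column_stack([np.ones_like(x1), x1, x1**2, x2])
weights, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.round(weights, 3))
```

Because the example has no error term, least squares recovers the four weights that generated the data.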

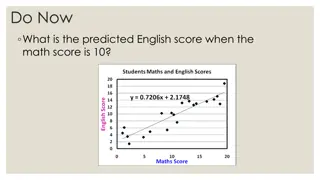

Curvilinear Regression. Uses polynomial regression to fit curves. Polynomials are formed by taking IVs to successive powers. A polynomial equation is referred to by its degree, which is determined by its highest exponent. Power terms introduce bends. Linear: $Y' = a + b_1 X_1$. Quadratic: $Y' = a + b_1 X_1 + b_2 X_1^{2}$. Cubic: $Y' = a + b_1 X_1 + b_2 X_1^{2} + b_3 X_1^{3}$.

Quadratic Function. Y = 2 + 2*X - .25*X*X. Note the bend. We can fit data with ceiling effects, sensation/perception as a function of stimulus intensity, performance as a function of practice, etc. [Figure: Y plotted against X for X from 0 to 6.]

Quadratic Function (2). Y = 2 + 2X + bX*X. The graph shows the effect of changing the b weight for the squared (quadratic) term. [Figure: Y plotted against X (0 to 6) for b = .125, b = -.125, b = -.25 (original curve), and b = -.5.]
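As a rough illustration of the effect of the b weight, the sketch below evaluates Y = 2 + 2X + bX*X for the four b values in the graph and reports where each curve's slope is zero. The helper names `quadratic` and `turning_point` are invented for this example.

```python
def quadratic(x, b):
    """Y = 2 + 2X + b*X*X, the curve from the slide."""
    return 2 + 2 * x + b * x * x


def turning_point(b):
    """X at which the slope, 2 + 2*b*X, equals zero."""
    return -2 / (2 * b)


for b in (0.125, -0.125, -0.25, -0.5):
    ys = [quadratic(x, b) for x in range(0, 7, 2)]
    print(f"b = {b:6.3f}  Y at X = 0, 2, 4, 6: {ys}  "
          f"slope is zero at X = {turning_point(b):.1f}")
```

A larger negative b pulls the peak toward smaller X, so the curve bends sooner and more sharply; a positive b bends the curve upward instead.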

Cubic Function. Y = 50*X - (5*X*X) + (.09*X*X*X). Note the two bends. We get a new bend for each new power term. The sharpness of a bend depends on the size of the b weights. [Figure: Y plotted against X for X from 0 to 50.]
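One way to check the "two bends" claim numerically is to find where the slope of the cubic is zero. This sketch uses NumPy's `Polynomial` class on the equation from the slide; it is an illustration, not part of the original analysis.

```python
import numpy as np

# Coefficients in increasing order of power: 0 + 50*X - 5*X^2 + .09*X^3
cubic = np.polynomial.Polynomial([0.0, 50.0, -5.0, 0.09])

# The bends are where the first derivative (the slope) is zero.
slope = cubic.deriv()
bends = np.sort(slope.roots().real)

for x in bends:
    print(f"slope is zero at X = {x:.2f}, where Y = {cubic(x):.1f}")
```

Both turning points fall inside the plotted range of X (0 to 50), which is why both bends are visible in the graph.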

Response Surfaces (1). With 1 IV, the relation between X and Y is shown as a line. With linear regression and 2 IVs, the response surface relating Y to the X variables will be a plane, e.g., Y = X1 + 2*X2. The response surface will be like a stiff sheet of paper in a cardboard box. [Figure: 3-D plot of Y against X1 and X2.]

Response Surface (2). The same surface from a different angle. [Figure: 3-D plot of Y against X1 and X2.]

Response Surface (3). Nonlinear relations: Y = X1 + X2 - .1*X1*X1. Relations between X2 and Y are linear; relations between X1 and Y are curved. The response surface is like a section of a coffee can. [Figure: 3-D plots of Y against X1 and X2.]

Response Surface (4). Nonlinear relations. In this graph, the relation between Y and both X variables is curved. The response surface is like a parachute. [Figure: 3-D plots of Y against X1 and X2, titled Y = X1+X2-.2X1*X1-.2X2*X2 and Y = X1+X2-.1*X1**2-.1*X2**2.]

Review What is polynomial regression? How does polynomial regression allow us to include one or more bends into lines and response surfaces?

Nonlinear Relations in Nonexperimental Research. In experimental research, you can fit curves with orthogonal polynomials, which have advantages; they are not covered here. Otherwise: create power terms (the IV taken to successive powers); test for increasing numbers of bends by adding terms; quit when adding a term does not increase the variance accounted for.

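Polynomials to model bends in nonexperimental research

| Rating (DV) | Time | Time**2 | Time**3 |
|---|---|---|---|
| 10 | 0 | 0 | 0 |
| 9 | 0 | 0 | 0 |
| 10 | 0 | 0 | 0 |
| 8 | 0 | 0 | 0 |
| 9 | 0 | 0 | 0 |
| 8 | 5 | 25 | 125 |
| 7 | 5 | 25 | 125 |
| 7 | 5 | 25 | 125 |
| 8 | 5 | 25 | 125 |
| 9 | 5 | 25 | 125 |
| 7 | 10 | 100 | 1000 |
| 6 | 10 | 100 | 1000 |
| 8 | 10 | 100 | 1000 |
| 5 | 10 | 100 | 1000 |
| 7 | 10 | 100 | 1000 |
| 5 | 15 | 225 | 3375 |
| 6 | 15 | 225 | 3375 |
| 7 | 15 | 225 | 3375 |
| 7 | 15 | 225 | 3375 |
| 8 | 15 | 225 | 3375 |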

Correlations among terms

| | Excite | Time | Time**2 | Time**3 |
|---|---|---|---|---|
| Excite | 1 | | | |
| Time | -.72 | 1 | | |
| Time**2 | -.62 | .96 | 1 | |
| Time**3 | -.55 | .91 | .99 | 1 |

Note that terms with higher exponents are VERY highly correlated. There WILL be problems with collinearity. Sequence of tests: start with time, then add time squared. If significant, add time cubed. Stop when adding a term doesn't help. Each power adds a bend: quadratic is one bend, cubic is two, and so forth.
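The collinearity among the power terms can be checked directly from the ratings and times listed earlier. The sketch below assumes the ratings are listed in the same order as the times (five ratings at each of times 0, 5, 10, and 15).

```python
import numpy as np

# Excitement ratings, in the order given on the data slide.
excite = np.array([10, 9, 10, 8, 9, 8, 7, 7, 8, 9,
                   7, 6, 8, 5, 7, 5, 6, 7, 7, 8], dtype=float)
time = np.repeat([0.0, 5.0, 10.0, 15.0], 5)

# Rows: Excite, Time, Time**2, Time**3.
r = np.corrcoef(np.vstack([excite, time, time**2, time**3]))
print(np.round(r, 2))
```

Rounded to two decimals, the matrix should agree with the correlations reported on the slide, including the .96, .91, and .99 among the power terms.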

Results of Polynomial Regression. Note that polynomial regression is a special case of hierarchical regression.

| Model | Terms | Intercept | b1 | b2 | b3 | R2 | R2 change |
|---|---|---|---|---|---|---|---|
| 1 | Time | 8.90 | -.18 | | | .52 | .52 |
| 2 | Time, Time**2 | 9.25 | -.39 | .014 | | .58 | .06 |
| 3 | Time, Time**2, Time**3 | 9.20 | -.23 | -.02 | .001 | .59 | .01 |
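The sequence of models can be sketched with ordinary least squares, adding one power term at a time and testing the change in R-square. This is an illustrative NumPy version, not the code used for the slides; `fit` is a helper name invented here, and the ratings are assumed to be listed in the same order as the times.

```python
import numpy as np

rating = np.array([10, 9, 10, 8, 9, 8, 7, 7, 8, 9,
                   7, 6, 8, 5, 7, 5, 6, 7, 7, 8], dtype=float)
time = np.repeat([0.0, 5.0, 10.0, 15.0], 5)


def fit(degree):
    """Least-squares fit of rating on Time**0 ... Time**degree."""
    X = np.column_stack([time**p for p in range(degree + 1)])
    b, *_ = np.linalg.lstsq(X, rating, rcond=None)
    resid = rating - X @ b
    r2 = 1 - (resid @ resid) / np.sum((rating - rating.mean()) ** 2)
    return b, r2


results = [fit(d) for d in (1, 2, 3)]

prev_r2 = 0.0
for degree, (b, r2) in enumerate(results, start=1):
    df_error = len(rating) - (degree + 1)
    # F test for the one term added at this step (1 numerator df).
    f_change = (r2 - prev_r2) / ((1 - r2) / df_error)
    print(f"degree {degree}: b = {np.round(b, 3)}, R2 = {r2:.2f}, "
          f"R2 change = {r2 - prev_r2:.2f}, F change = {f_change:.2f}")
    prev_r2 = r2
```

The R-square values should come out close to the .52, .58, and .59 in the table. Each F change has 1 and `df_error` degrees of freedom and would be compared with a critical value from an F table.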

Polynomial Results (2). Suppose it had happened that the term for time-squared had been significant. The regression equation is $Y' = 9.25 - .39X + .014X^{2}$. The results graphed: [Figure: "Roller Coaster Evaluations", Excitement plotted against Time.]

Interpreting Weights in Polynomial Regression. All power terms for an IV work together to define the curve relating Y to X. Do not interpret the b weights for polynomials; they change if you subtract the mean from the raw data. To estimate importance, look to the change in R-square for the block of variables that represent the IV. Never use polynomials in a variable selection algorithm (e.g., stepwise regression). There is a specialized literature on nonlinear terms in path analysis and SEM (hard to do).
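To see why the individual b weights should not be interpreted, the sketch below fits the quadratic model twice to the roller coaster ratings: once with raw time and once with time centered at its mean. The helper `quad_fit` is a name invented for this example, and the ratings are assumed to be in the same order as the times.

```python
import numpy as np

rating = np.array([10, 9, 10, 8, 9, 8, 7, 7, 8, 9,
                   7, 6, 8, 5, 7, 5, 6, 7, 7, 8], dtype=float)
time = np.repeat([0.0, 5.0, 10.0, 15.0], 5)


def quad_fit(x):
    """Fit rating = a + b1*x + b2*x**2 and return the weights and R-square."""
    X = np.column_stack([np.ones_like(x), x, x**2])
    b, *_ = np.linalg.lstsq(X, rating, rcond=None)
    resid = rating - X @ b
    r2 = 1 - (resid @ resid) / np.sum((rating - rating.mean()) ** 2)
    return b, r2


b_raw, r2_raw = quad_fit(time)
b_ctr, r2_ctr = quad_fit(time - time.mean())

print("raw time:      b1 = %.3f, b2 = %.3f, R2 = %.3f" % (b_raw[1], b_raw[2], r2_raw))
print("centered time: b1 = %.3f, b2 = %.3f, R2 = %.3f" % (b_ctr[1], b_ctr[2], r2_ctr))
```

The linear weight changes with centering while the highest-order weight and R-square do not, which is the reason to judge the block of power terms by its R-square change rather than by any single b weight.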

Review Why is collinearity likely to be a problem in using polynomial regression? Describe the sequence of tests used to model curves in polynomial regression. Review R code.

Interactions. An interaction means that the importance of one variable depends upon the value of another. In regression with a continuous IV, importance means slope. An interaction is also sometimes called moderation, as in "Z moderates the relation between X and Y." In regression, we look to see whether the slope relating the DV to the IV changes depending on the value of a second IV.

Example Interaction. For those with low cognitive ability, there is a small correlation between creativity and productivity. As cognitive ability increases, the relation between creativity and productivity becomes stronger. The slope of productivity on creativity depends on cognitive ability. [Figure: three scatterplots of Productivity against Creativity, for Low, Medium, and High Cognitive Ability.]

Interaction Response Surface. The slope of X1 depends on the value of X2 and vice versa. Regression is looking to fit this response surface and no other when we do the customary analysis for interactions with continuous IVs. This is more restrictive than ANOVA. [Figure: two 3-D views of the interaction surface, Y against X1 and X2.]
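A short worked rearrangement may help show why this surface makes each slope depend on the other variable. It assumes the customary product-term model, with $b_3$ as the weight for the product of the two IVs:

$$
Y' = a + b_1 X_1 + b_2 X_2 + b_3 X_1 X_2 = (a + b_2 X_2) + (b_1 + b_3 X_2)\,X_1
$$

At any fixed value of $X_2$, the slope of $Y'$ on $X_1$ is $b_1 + b_3 X_2$, so it changes by $b_3$ for each unit change in $X_2$. By symmetry, the slope on $X_2$ is $b_2 + b_3 X_1$. When $b_3 = 0$ the surface reduces to a plane.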

Significance Tests for Interactions. (1) Subtract the mean from each IV (optional). (2) Compute the product of the IVs. (3) Compute the significance of the change in R-square when the interaction term(s) are added. (4) If the R-square change is n.s., conclude that no interaction is present. (5) If the R-square change is significant, find the significant interaction(s). (6) Graph the interaction(s).

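Data to test for interaction between cognitive ability and creativity on performance.

| person | product | create | cog |
|---|---|---|---|
| 1 | 50 | 40 | 100 |
| 2 | 35 | 45 | 80 |
| 3 | 40 | 50 | 90 |
| 4 | 50 | 55 | 105 |
| 5 | 55 | 60 | 110 |
| 6 | 35 | 40 | 95 |
| 7 | 45 | 45 | 100 |
| 8 | 55 | 50 | 105 |
| 9 | 50 | 55 | 95 |
| 10 | 40 | 60 | 90 |
| 11 | 45 | 40 | 110 |
| 12 | 50 | 45 | 115 |
| 13 | 60 | 50 | 120 |
| 14 | 65 | 55 | 125 |
| 15 | 55 | 60 | 105 |
| 16 | 50 | 40 | 110 |
| 17 | 55 | 45 | 95 |
| 18 | 55 | 50 | 115 |
| 19 | 60 | 60 | 120 |
| 20 | 65 | 65 | 140 |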

Correlation Matrix. Pearson correlation coefficients, N = 20. Prob > |r| under H0: Rho = 0 is shown in parentheses.

| | person | product | create | cog | inter |
|---|---|---|---|---|---|
| person | 1.00000 | 0.65629 (0.0017) | 0.32531 (0.1616) | 0.66705 (0.0013) | 0.57538 (0.0079) |
| product | 0.65629 (0.0017) | 1.00000 | 0.50470 (0.0232) | 0.83568 (<.0001) | 0.78465 (<.0001) |
| create | 0.32531 (0.1616) | 0.50470 (0.0232) | 1.00000 | 0.38414 (0.0945) | 0.84954 (<.0001) |
| cog | 0.66705 (0.0013) | 0.83568 (<.0001) | 0.38414 (0.0945) | 1.00000 | 0.80732 (<.0001) |
| inter | 0.57538 (0.0079) | 0.78465 (<.0001) | 0.84954 (<.0001) | 0.80732 (<.0001) | 1.00000 |

Results for 2 IVs (Main Effects). The GLM Procedure. Dependent variable: product.

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 2 | 1080.151718 | 540.075859 | 23.93 | <.0001 |
| Error | 17 | 383.598282 | 22.564605 | | |
| Corrected Total | 19 | 1463.750000 | | | |

| R-Square | Coeff Var | Root MSE | product Mean |
|---|---|---|---|
| 0.737935 | 9.360042 | 4.750222 | 50.75000 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| create | 1 | 372.8504184 | 372.8504184 | 16.52 | 0.0008 |
| cog | 1 | 707.3012991 | 707.3012991 | 31.35 | <.0001 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| create | 1 | 57.9376107 | 57.9376107 | 2.57 | 0.1275 |
| cog | 1 | 707.3012991 | 707.3012991 | 31.35 | <.0001 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -11.31387459 | 9.26553773 | -1.22 | 0.2387 |
| create | 0.23848700 | 0.14883268 | 1.60 | 0.1275 |
| cog | 0.47077911 | 0.08408699 | 5.60 | <.0001 |

Result for interaction. Dependent variable: product.

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 3 | 1088.423539 | 362.807846 | 15.47 | <.0001 |
| Error | 16 | 375.326461 | 23.457904 | | |
| Corrected Total | 19 | 1463.750000 | | | |

| R-Square | Coeff Var | Root MSE | product Mean |
|---|---|---|---|
| 0.743586 | 9.543519 | 4.843336 | 50.75000 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| create | 1 | 372.8504184 | 372.8504184 | 15.89 | 0.0011 |
| cog | 1 | 707.3012991 | 707.3012991 | 30.15 | <.0001 |
| inter | 1 | 8.2718212 | 8.2718212 | 0.35 | 0.5609 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| create | 1 | 15.35701934 | 15.35701934 | 0.65 | 0.4303 |
| cog | 1 | 49.38823497 | 49.38823497 | 2.11 | 0.1661 |
| inter | 1 | 8.27182120 | 8.27182120 | 0.35 | 0.5609 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -45.48374780 | 58.31267387 | -0.78 | 0.4468 |
| create | 0.87233592 | 1.07813934 | 0.81 | 0.4303 |
| cog | 0.79009054 | 0.54451482 | 1.45 | 0.1661 |
| inter | -0.00587585 | 0.00989498 | -0.59 | 0.5609 |
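The test for the interaction can be sketched in a few lines: fit the main-effects model, add the product term, and test the change in R-square. This NumPy version is an illustration rather than the SAS code behind the output; it assumes the data columns are person, product, create, and cog (the variable order of the correlation matrix), and `r_squared` is a helper name invented here.

```python
import numpy as np

product = np.array([50, 35, 40, 50, 55, 35, 45, 55, 50, 40,
                    45, 50, 60, 65, 55, 50, 55, 55, 60, 65], dtype=float)
create = np.array([40, 45, 50, 55, 60, 40, 45, 50, 55, 60,
                   40, 45, 50, 55, 60, 40, 45, 50, 60, 65], dtype=float)
cog = np.array([100, 80, 90, 105, 110, 95, 100, 105, 95, 90,
                110, 115, 120, 125, 105, 110, 95, 115, 120, 140], dtype=float)


def r_squared(*predictors):
    """R-square for product regressed on an intercept plus the predictors."""
    X = np.column_stack([np.ones_like(product), *predictors])
    b, *_ = np.linalg.lstsq(X, product, rcond=None)
    resid = product - X @ b
    return 1 - (resid @ resid) / np.sum((product - product.mean()) ** 2)


inter = create * cog  # product term (uncentered)
r2_main = r_squared(create, cog)
r2_full = r_squared(create, cog, inter)

df_error = len(product) - 4  # intercept plus three predictors
f_change = (r2_full - r2_main) / ((1 - r2_full) / df_error)
print(f"R2 main effects = {r2_main:.4f}, R2 with interaction = {r2_full:.4f}, "
      f"F change (1, {df_error}) = {f_change:.2f}")
```

The two R-square values should be close to the .7379 and .7436 in the output, and the F for the change should match the F of about 0.35 reported for `inter`, which is not significant. Centering the IVs before forming the product would change the weights for `create` and `cog` but not this F.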

Moderator and Mediator. Moderator means interaction: the slope of one variable depends on the value of the other. Use moderated regression (test for an interaction) to test. Mediator means there is a causal chain of events. The mediating variable is the proximal cause of the DV; a more distal cause changes the mediator. Use path analysis* to test. In this graph, X2 is the mediator. [Figure: path diagrams for a mediator model and a moderator model involving X1, X2, and Y.] * Modern methods: Sobel test; Preacher & Hayes; bootstrap.
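A rough sketch of a mediation test of the Sobel type, in which the indirect effect is the product of the X1-to-X2 path and the X2-to-Y path. Everything here is an assumption made for illustration: the data are simulated, the path weights 0.5 and 0.7 are made up, `ols` is a helper written for this example, and the standard error is the first-order Sobel formula.

```python
import numpy as np


def ols(y, *predictors):
    """Return least-squares weights and their standard errors (intercept first)."""
    X = np.column_stack([np.ones_like(y), *predictors])
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ b
    sigma2 = (resid @ resid) / (len(y) - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return b, se


# Simulated causal chain X1 -> X2 -> Y (hypothetical path weights).
rng = np.random.default_rng(1)
n = 500
x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)
y = 0.7 * x2 + rng.normal(size=n)

b_m, se_m = ols(x2, x1)      # path a: mediator regressed on the distal cause
b_y, se_y = ols(y, x1, x2)   # path b: DV on the mediator, controlling for X1

a, sa = b_m[1], se_m[1]
b, sb = b_y[2], se_y[2]
indirect = a * b
sobel_z = indirect / np.sqrt(b**2 * sa**2 + a**2 * sb**2)
print(f"indirect effect = {indirect:.3f}, Sobel z = {sobel_z:.2f}")
```

With real data, a bootstrap confidence interval for the indirect effect is often preferred to the Sobel z, because the product of two weights is not normally distributed in small samples.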

Review How do you model interactions of continuous variables with regression? What is the difference between a moderator and a mediator? How do you test for the presence of each?