Deep Generative Models in Probabilistic Machine Learning

This presentation explores deep generative models used in probabilistic machine learning, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). It discusses how generative models are built from neural networks and Gaussian processes, with a focus on techniques like VAEs and …

11 views • 18 slides

Improving Qubit Readout with Autoencoders in Quantum Science Workshop

This workshop, led by Piero Luchi, discusses dispersive qubit readout, standard readout models, and the use of autoencoders to improve qubit readout. It covers qubit-cavity systems, the dispersive-regime equations, and the classification of qubit states through …

8 views • 22 slides

Machine Learning and Generative Models in Particle Physics Experiments

Explore how machine learning algorithms and generative models are used for accurate simulation in particle physics experiments. Understand supervised, unsupervised, and semi-supervised learning, along with generative models such as the Variational Autoencoder and Gaussian mixture models. …

4 views • 15 slides

Riemannian Normalizing Flow on Variational Wasserstein Autoencoder for Text Modeling

This study applies a Riemannian Normalizing Flow to a Variational Wasserstein Autoencoder (WAE) to address the KL-vanishing problem of Variational Autoencoders (VAEs) in text modeling. By leveraging Riemannian geometry, the normalizing flow aims to prevent the collapse of the posterior …

1 view • 20 slides

Time-Aware User Embeddings as a Service

Providing time-aware user/activity embeddings as a service to different teams within a company offers a centralized, task-independent solution. The approach learns universal, compact, time-aware user embeddings that preserve temporal dependencies and serve a variety of applications …

5 views • 15 slides

Cutting-edge Techniques in Graph Regression using Graph Autoencoders

Explore recent advances in graph regression using Graph Autoencoders, as presented at the IAPR Joint International Workshops on Statistical Techniques in Pattern Recognition (SPR 2022) and Structural and Syntactic Pattern Recognition (SSPR 2022). The methodology follows a two-step approach with …

0 views • 15 slides

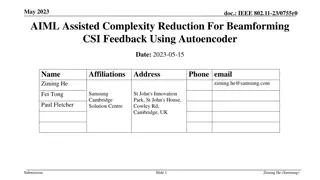

Complexity Reduction in Beamforming CSI Feedback Using Autoencoder

Explore how autoencoder-based schemes can reduce the complexity of beamforming channel state information (CSI) feedback, compared with legacy and existing AI/ML beamforming schemes. Learn about the advantages of the proposed autoencoder-based scheme and the system parameters considered for performance evaluation in the IEEE 802.11-23/0755r0 document …

3 views • 12 slides

Soft Sensor Design Using Autoencoder and Bayesian Methods

Explore how autoencoders and Bayesian methods are combined in soft sensor design for batch processes, focusing on viscosity estimation in complex liquid formulations. The methodology involves investigating process data, reducing dimensionality with an autoencoder, and developing nonlinear estimators for viscosity …

1 view • 17 slides

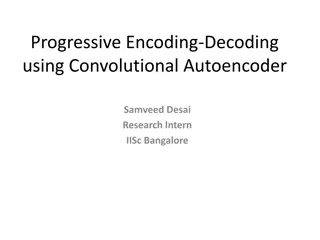

Progressive Encoding-Decoding Using Convolutional Autoencoder - Research Internship Insights

Explore research on neural-network image compression, specifically progressive encoding-decoding with a convolutional autoencoder. The approach uses a deep CNN-based encoder and decoder to reach different compression rates without retraining the entire network. Results show …

2 views • 7 slides

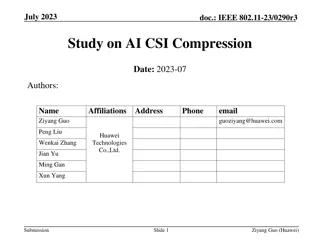

AI CSI Compression Study with VQ-VAE Method

Explore a study of AI-based CSI compression using a new vector-quantized variational autoencoder (VQ-VAE) method, covering existing work, performance evaluation, and future directions. The study focuses on reducing feedback overhead and improving throughput in wireless communication systems.

0 views • 10 slides
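What sets a VQ-VAE apart from an ordinary autoencoder is the quantization step: each encoder output is replaced by its nearest vector in a learned codebook, so only the integer index has to be fed back. The minimal sketch below shows that lookup in plain Python, assuming a hypothetical 4-entry codebook of 2-D vectors and Euclidean distance; the study's actual codebook size, latent dimension, and distance measure are not given here.

```python
import math

def quantize(z, codebook):
    """Return (index, codeword) of the codebook entry nearest to z (Euclidean)."""
    best = min(range(len(codebook)), key=lambda k: math.dist(z, codebook[k]))
    return best, codebook[best]

# Hypothetical 4-entry codebook of 2-D latent vectors (assumption, not from the study).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
bits_per_index = math.ceil(math.log2(len(codebook)))  # 2 bits for 4 entries

# Encoder side: hypothetical latent vectors are reduced to codebook indices,
# which are all that would need to be fed back.
latents = [(0.9, 0.2), (0.1, 0.8), (0.6, 0.7)]
indices = [quantize(z, codebook)[0] for z in latents]

# Decoder side: look the indices up in the same shared codebook.
reconstructed = [codebook[i] for i in indices]
print(indices, len(indices) * bits_per_index, "bits")  # prints: [1, 2, 3] 6 bits
```

Training a full VQ-VAE also requires learning the codebook itself (typically with codebook and commitment loss terms and a straight-through gradient estimator), which this sketch leaves out.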

Study on AI CSI Compression Using VQVAE for IEEE 802.11-23

Explore a study of AI CSI compression using a Vector Quantized Variational Autoencoder (VQVAE), presented in IEEE 802.11-23. The research examines model generalization, lightweight encoder design, and varying bandwidths to improve CSI compression performance. Learn about ML solutions, existing …

0 views • 21 slides

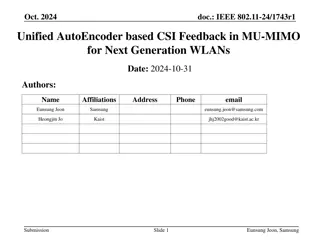

Unified AutoEncoder based CSI Feedback in MU-MIMO for Next Generation WLANs

Explore how a Deep Neural Network AutoEncoder (DNN-AE) can minimize CSI feedback overhead while improving MU-MIMO performance in future WLANs. The proposed scheme aims to reduce training effort and hardware complexity by applying a single, unified DNN-AE across various CSI types, bandwidths, antenna …

3 views • 19 slides

Cutting-Edge Progress in Autoencoder and Faceswapping Technology

Dive into recent developments in autoencoder technology, from face swapping and beyond. Explore how autoencoders and generative adversarial networks work, along with the hug.mp4 project, and find references for further reading.

2 views • 5 slides

AI/ML Model Sharing Feasibility and Challenges in IEEE 802.11-23

Explore the technical feasibility of sharing AI/ML models between stations (STAs) in the context of IEEE 802.11-23. Topics include the components of an ML model, use cases such as an autoencoder for CSI compression, and the challenges of sharing ML algorithms. Learn how to distribute and share AI/ML models effectively for enhanced …

0 views • 10 slides

Deep Neural Networks Workshop on Spins, Games, and Networks

Join the workshop on classification with deep neural networks at The Institute of Mathematical Sciences, Chennai. Explore the energy minima of restricted Boltzmann machine (RBM) architectures and insights from autoencoders, and learn about varying temperatures in state space and Indus symbols.

1 view • 6 slides

AI/ML Applications in WLAN: CSI Compression Use Case

Explore how AI/ML technologies can enhance WLAN performance through CSI compression, reducing feedback overhead and increasing network throughput. Potential solutions include unsupervised learning techniques such as K-means clustering and a Deep Neural Network Autoencoder. Additionally, consideration …

0 views • 9 slides
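One common way K-means is used for this kind of feedback compression (an assumption here, not necessarily the scheme in these slides) is for both ends to share a set of centroids, so a station reports only the index of the centroid nearest to its measured CSI. The sketch below runs plain Lloyd's-algorithm K-means in pure Python on hypothetical real-valued 2-D feature vectors; real CSI is complex-valued and much higher-dimensional, and the `kmeans` and `encode` helpers are illustrative names.

```python
import math
import random

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's algorithm on tuples of floats; returns k centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize from k distinct data points
    for _ in range(iters):
        # Assignment step: group each point with its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[j].append(p)
        # Update step: move each centroid to the mean of its cluster,
        # keeping the old centroid if its cluster ended up empty.
        centroids = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centroids[j]
            for j, cl in enumerate(clusters)
        ]
    return centroids

def encode(p, centroids):
    """Hypothetical feedback payload: index of the nearest centroid."""
    return min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))

# Hypothetical feature vectors forming two well-separated groups.
A = [(0.1, 0.0), (0.0, 0.2), (-0.1, 0.1)]
B = [(5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
centroids = kmeans(A + B, k=2)
codes = [encode(p, centroids) for p in A + B]
print(codes)
```

With k centroids, each report needs only ceil(log2 k) bits instead of the full vector; the trade-off is quantization error, which shrinks as k grows.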

Predicting Alzheimer's Disease with 3D Neural Networks

Explore a neuroimaging study that uses 3D convolutional neural networks to predict Alzheimer's disease. Learn about the methodology, the sparse autoencoder, and how the model was built in this research paper.

6 views • 21 slides

Variational Autoencoder (VAE) Overview

Discover the Variational Autoencoder (VAE), which uses a probabilistic encoder and decoder to learn distributions over the latent space rather than fixed latent codes. Learn about its key components (the VAE encoder and decoder), its loss function, and how it addresses the challenge of achieving a continuous latent representation …

1 view • 11 slides
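The probabilistic encoder and the loss function can be made concrete under the common textbook setup, which is an assumption here since the slides' exact formulation is not shown: a diagonal Gaussian encoder that outputs a mean and log-variance, a standard normal prior, and a fixed-variance Gaussian decoder whose reconstruction term reduces to a sum of squared errors (up to scale and constants). The sketch uses plain Python lists in place of a neural network, and the helper names are illustrative.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO under the assumptions above: squared-error reconstruction + KL."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)

# Hypothetical encoder outputs for one input.
rng = random.Random(0)
mu, log_var = [0.5, -0.2], [-1.0, 0.3]
z = reparameterize(mu, log_var, rng)  # latent sample that would be fed to the decoder
print(z, kl_to_standard_normal(mu, log_var))
```

The KL term pulls every input's latent distribution toward the shared prior, which encourages a continuous latent space; if it drops to zero for all inputs, the encoder has stopped carrying information about the input (posterior collapse).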

Unified AutoEncoder based CSI Feedback in MU-MIMO

Explore how unsupervised learning techniques such as the K-means algorithm and a Deep Neural Network AutoEncoder (DNN-AE) can be applied to CSI feedback compression in MU-MIMO for next-generation WLANs. The study aims to minimize CSI feedback overhead while maintaining packet error rate (PER) performance, with a focus …

1 view • 15 slides