The Digital Personal Data Protection Act 2023

The Digital Personal Data Protection Act of 2023 aims to regulate the processing of digital personal data, balancing individuals' right to protect their personal data with the need to process such data for lawful purposes. It covers topics such as the obligations of data fiduciaries, the rights of data principals, and the establishment…

28 slides

NCI Data Collections BARPA & BARRA2 Overview

NCI Data Collections BARPA & BARRA2 serve as critical enablers of big data science and analytics in Australia, offering a vast research collection of climate, weather, earth system, environmental, satellite, and geophysics data. These collections include around 8 PB of regional climate simulations…

22 slides

Revolutionizing with an NLP-Based Data Pipeline Tool

Integrating NLP into data pipelines marks a significant shift in data engineering, giving companies a way to rethink their data workflows and make fuller use of their data. By automating data processing tasks, handling diverse data sources, and fostering a data-driven…

2 slides

IMPACT INVESTING CHALLENGE 2024

Addressing the gender-climate nexus, our team's investment proposal focuses on empowering women through sustainable finance initiatives. We highlight the investment target, the business model, and how our solution tackles gender inequality within climate finance. Our implementation plan emphasizes…

6 slides

Achieving Student Success through ASAP Programs

Accelerated Study in Associate Programs (ASAP) provides comprehensive academic and wraparound support that removes barriers for students so they can focus on their education. With a track record of student success supported by data and research, ASAP offers benefits including tuition…

14 slides

Ask On Data for Efficient Data Wrangling in Data Engineering

In today's data-driven world, organizations rely on robust data engineering pipelines to collect, process, and analyze vast amounts of data efficiently. At the heart of these pipelines lies data wrangling, a critical process that involves cleaning, transforming, and preparing raw data for analysis.

2 slides

How Data Wrangling Is Reshaping IT Strategies in Depth

A data wrangling tool such as Ask On Data can reshape IT strategies by improving data quality, streamlining data preparation, easing data integration, empowering citizen data scientists, and supporting innovation and agility. As businesses continue to harness the power of data to…

2 slides

Data Wrangling Tools like Ask On Data Provide Accurate and Reliable Business Intelligence

In today's data-driven world, businesses depend on their ability to harness and interpret vast amounts of data. This data, however, often arrives raw and unstructured, riddled with inconsistencies and errors. To turn this chaotic data into meaningful insights, organizations need robust data wrangling…

2 slides

Bridging the Gap Between Raw Data and Insights with Data Wrangling Tool

In today's data-driven environment, organizations generate and gather enormous amounts of data from diverse sources. This raw data, often unstructured and messy, can drive insights and informed decision-making. However, transforming it into a usable format is a…

2 slides

Why Organizations Need a Robust Data Wrangling Tool

In today's data-centric landscape, a robust data wrangling tool such as Ask On Data is essential. By streamlining data preparation, improving productivity, ensuring data quality, and fostering collaboration, Ask On Data helps organizations unlock the full potential…

2 slides

The Role of Data Migration Tools in Big Data with Ask On Data

Data migration tools are essential for organizations looking to turn their big data into actionable insights. Ask On Data illustrates how such tools can streamline the migration process while ensuring data integrity, scalability, and security. By using Ask On Data, organizations can achieve…

2 slides

Data Wrangling: The Key to Accurate and Reliable Business Intelligence

Data wrangling is the cornerstone of effective business intelligence. Without clean, accurate, and well-organized data, the insights derived from analysis can be misleading or incomplete. Ask On Data addresses the challenges of data wrangling, enabling businesses to…

2 slides

Streamlining Data Migration with Ask On Data

In today's data-driven world, the ability to migrate and manage data smoothly is essential for businesses striving to stay competitive and agile. Data migration, the process of transferring data from one system to another, can be a daunting task fraught with challenges such as data loss…

2 slides

Understanding Consistency Protocols in Distributed Systems

Today's lecture covers consistency protocols in distributed systems, focusing on primary-based and replicated-write protocols, which keep multiple replicas consistent. One example discussed is the Remote-Write Protocol, which enforces strict…

35 slides
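As a rough illustration of the primary-based remote-write idea in the lecture summary above, the Python sketch below forwards every write to a single fixed primary, which updates its own copy, pushes the update to all backups, and only then reports completion. The names `Replica`, `RemoteWriteGroup`, and `write` are hypothetical; all replicas live in one process, and there is no networking or failure handling. It is not the lecture's own code.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Replica:
    """One copy of the data store (hypothetical in-memory model, no networking)."""
    name: str
    store: Dict[str, Any] = field(default_factory=dict)

    def apply(self, key: str, value: Any) -> bool:
        """Apply an update and return an acknowledgement."""
        self.store[key] = value
        return True

    def read(self, key: str) -> Any:
        """Reads are served locally by whichever replica the client contacts."""
        return self.store.get(key)


class RemoteWriteGroup:
    """Primary-based remote-write: all writes go through one fixed primary."""

    def __init__(self, primary: Replica, backups: List[Replica]) -> None:
        self.primary = primary
        self.backups = backups

    def write(self, key: str, value: Any) -> bool:
        # 1. The primary updates its own copy first.
        self.primary.apply(key, value)
        # 2. It pushes the update to every backup and collects the acknowledgements.
        acks = [backup.apply(key, value) for backup in self.backups]
        # 3. The client is told the write is done only after all backups have
        #    acknowledged (blocking variant), so a completed write is visible everywhere.
        return all(acks)


primary, b1, b2 = Replica("primary"), Replica("b1"), Replica("b2")
group = RemoteWriteGroup(primary, [b1, b2])
ok = group.write("x", 42)
```

The blocking wait in step 3 keeps the scheme easy to reason about; a non-blocking variant would acknowledge the client after step 1 and propagate in the background, trading consistency for lower write latency.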

Exploring Data Science: Grade IX Version 1.0

Delve into the world of data science with Grade IX Version 1.0! This educational material covers essential topics such as the definition of data, how data differs from information, the DIKW model, and how data influences various aspects of our lives. Discover the concept of data footprints, data…

31 slides

Design and Analysis of Engineering Experiments in Practice

Explore the fundamentals of engineering experiments, including blocking and confounding systems for two-level factorial designs. Learn about replicated and unreplicated designs, the importance of blocking in a replicated design, ANOVA for blocked designs, and considerations for confounding in blocks. Dive…

15 slides
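For the confounding-in-blocks topic above, one textbook case is a 2³ factorial run in two blocks with the highest-order interaction ABC confounded with blocks: each run goes into the block given by the sign of the product of its coded factor levels. The sketch below assumes that standard setup; `split_into_two_blocks` is a hypothetical helper, not taken from the slides.

```python
from itertools import product
from math import prod


def split_into_two_blocks(factors=("A", "B", "C")):
    """Assign the runs of a 2^k design to two blocks, confounding the
    highest-order interaction (e.g. ABC) with blocks."""
    blocks = {+1: [], -1: []}
    for levels in product((-1, +1), repeat=len(factors)):
        # Sign of the highest-order interaction = product of all coded levels.
        sign = prod(levels)
        # Standard run label: lowercase letters of the factors at their high level,
        # or "(1)" when every factor is at its low level.
        label = "".join(f.lower() for f, x in zip(factors, levels) if x == +1) or "(1)"
        blocks[sign].append(label)
    return blocks


blocks = split_into_two_blocks()
print("Block 1 (ABC = +):", sorted(blocks[+1]))
print("Block 2 (ABC = -):", sorted(blocks[-1]))
```

This places runs a, b, c, abc in one block and (1), ab, ac, bc in the other. Because every run in a block shares the same ABC sign, the ABC effect cannot be separated from the block effect, while the main effects and two-factor interactions remain estimable.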

Top 10 Dream11 Alternatives Generating Million Dollar Revenue

Numerous Dream11 clones have emerged in the fantasy sports market, earning substantial revenue through replicated gameplay mechanics and diverse sports offerings. These platforms attract a broad audience and monetize through entry fees and strategic…

1 slide

Understanding Data Governance and Data Analytics in Information Management

Data governance and data analytics play key roles in turning data into knowledge and insights that improve a range of operational systems. They bring together disparate datasets to extract insights and wisdom that drive informed decision-making. Managing data…

8 slides

Understanding Data Governance and Data Privacy in Grade XII Data Science

Data governance in Grade XII Data Science Version 1.0 covers data quality, security, architecture, integration, and storage. Ethical guidelines emphasize integrity, honesty, and accountability in handling data. Data privacy gives individuals control over how their personal information is collected and shared…

44 slides

Importance of Data Preparation in Data Mining

Data preparation, also known as data pre-processing, is a crucial step in the data mining process. It involves transforming raw data into a clean, structured format suitable for analysis. Proper data preparation makes the data accurate, complete, and free of errors, allowing mining…

37 slides
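The data preparation goals named above (accurate, complete, error-free data) can be sketched with the standard library alone. The field names `id` and `email`, the specific cleaning rules, and the `prepare` function are all hypothetical; real pipelines usually rely on a dataframe library and domain-specific rules.

```python
from typing import Any, Dict, Iterable, List


def prepare(records: Iterable[Dict[str, Any]],
            required=("id", "email")) -> List[Dict[str, Any]]:
    """Clean raw records: normalise values, drop incomplete rows, remove duplicates."""
    seen_ids = set()
    clean = []
    for rec in records:
        # Normalise: trim surrounding whitespace from every string field.
        row = {k: v.strip() if isinstance(v, str) else v for k, v in rec.items()}
        # Normalise e-mail addresses to lower case so duplicates compare equal.
        if isinstance(row.get("email"), str):
            row["email"] = row["email"].lower()
        # Completeness: skip rows where a required field is missing or empty.
        if any(row.get(f) in (None, "") for f in required):
            continue
        # Deduplicate on the record id, keeping the first occurrence.
        if row["id"] in seen_ids:
            continue
        seen_ids.add(row["id"])
        clean.append(row)
    return clean


raw = [
    {"id": 1, "email": "  Ana@Example.org "},
    {"id": 1, "email": "ana@example.org"},  # duplicate id
    {"id": 2, "email": ""},                 # missing e-mail
    {"id": 3, "email": "bo@example.org"},
]
cleaned = prepare(raw)
```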

Raft Consensus Algorithm Overview for Replicated State Machines

Raft is a consensus algorithm for building fault-tolerant replicated state machines that provide reliable service in distributed systems. It covers leader election, log replication, safety mechanisms, and client interaction, which together keep the servers consistent. The approach simplifies…

32 slides
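To make the leader-election and safety parts of the summary above concrete, the sketch below follows the RequestVote receiver rules from the Raft paper. It rejects stale terms, adopts newer terms, grants at most one vote per term, and votes only for a candidate whose log is at least as up-to-date as the voter's. The `RaftNode` class is a hypothetical single-process simplification with no timers, persistence, RPC layer, or role changes.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Entry = Tuple[int, str]  # (term, command)


@dataclass
class RaftNode:
    current_term: int = 0
    voted_for: Optional[str] = None
    log: List[Entry] = field(default_factory=list)

    def last_log_index_and_term(self) -> Tuple[int, int]:
        # Raft log indices start at 1; an empty log has index 0 and term 0.
        if not self.log:
            return 0, 0
        return len(self.log), self.log[-1][0]

    def handle_request_vote(self, term: int, candidate_id: str,
                            last_log_index: int, last_log_term: int) -> Tuple[int, bool]:
        """Return (current_term, vote_granted) for a RequestVote RPC."""
        if term < self.current_term:
            return self.current_term, False  # candidate is from a stale term
        if term > self.current_term:
            # Newer term: adopt it and clear the vote (a real node would also
            # step down to follower and persist this state before replying).
            self.current_term = term
            self.voted_for = None
        my_index, my_term = self.last_log_index_and_term()
        # Election restriction: the candidate's log must be at least as up-to-date,
        # comparing last terms first and log length only when the terms are equal.
        up_to_date = (last_log_term > my_term or
                      (last_log_term == my_term and last_log_index >= my_index))
        if self.voted_for in (None, candidate_id) and up_to_date:
            self.voted_for = candidate_id
            return self.current_term, True
        return self.current_term, False


node = RaftNode(current_term=2, log=[(1, "x"), (2, "y")])
reply = node.handle_request_vote(term=3, candidate_id="s3", last_log_index=2, last_log_term=2)
```

Comparing the last entry's term before the log length is what prevents a candidate with a long but outdated log from winning an election and overwriting committed entries.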

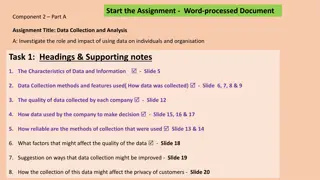

Understanding Data Collection and Analysis for Businesses

Explore how organizations use data by examining data collection methods and their reliability, data quality and the factors that affect it, decision-making processes, and privacy considerations. Two scenarios are presented: data collection…

24 slides

Analysis of Faculty-wide Educational Renewal Project Results

Results from a faculty-wide scan of courses in an educational renewal project show how emphasis on research domains, knowledge, literacy, and skills is distributed across faculty members. The analysis reports the percentage of courses covering research types, technical skills, communication…

7 slides

Understanding Data Life Cycle in a Collaborative Setting

Explore the journey of data from collection to preservation in a group setting. Participants arrange Post-its to represent stages such as Analyzing Data, Preserving Data, and Processing Data. Snippets cover tasks such as collecting data, migrating data, managing and storing data, and more…

4 slides

Enhancing Data Management in INDEPTH Network with iSHARE2 & CiB

The INDEPTH Network highlights iSHARE2 and CiB as ways to improve data sharing and management among member centers. iSHARE2 aims to streamline data provision in a standardized form, while CiB provides a comprehensive data management solution. The objectives of iSHARE2 include facilitating data…

17 slides

Stronger Semantics for Low-Latency Geo-Replicated Storage

Discusses why strong consistency and low latency matter in geo-replicated storage systems, both for user experience and for revenue. It explores storage dimensions, sharding techniques, and consistency models such as Causal+. The Eiger system is highlighted for ensuring low latency by…

24 slides
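Causal+, mentioned in the summary above, combines causal consistency with convergent conflict handling. One generic way to detect causal order between two versions is to compare vector clocks, as in the sketch below. The `compare` and `lww_pick` helpers are hypothetical, and this is not necessarily how Eiger tracks dependencies; the last-writer-wins rule for concurrent versions is just one possible convergent conflict handler.

```python
from typing import Dict, Tuple

VectorClock = Dict[str, int]  # replica or datacenter id -> counter


def compare(a: VectorClock, b: VectorClock) -> str:
    """Return 'equal', 'before', 'after', or 'concurrent' for clocks a and b."""
    keys = set(a) | set(b)
    a_le_b = all(a.get(k, 0) <= b.get(k, 0) for k in keys)
    b_le_a = all(b.get(k, 0) <= a.get(k, 0) for k in keys)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"      # a happened-before b
    if b_le_a:
        return "after"       # b happened-before a
    return "concurrent"      # neither dominates: a conflict to resolve


Version = Tuple[VectorClock, int, str, str]  # (clock, timestamp, writer id, value)


def lww_pick(x: Version, y: Version) -> Version:
    """Keep the causally later version; break concurrent ties by (timestamp, writer id)."""
    order = compare(x[0], y[0])
    if order == "before":
        return y
    if order == "after":
        return x
    # Concurrent (or identical clocks): every replica applies the same
    # deterministic rule, so all replicas converge on the same value.
    return max(x, y, key=lambda v: (v[1], v[2]))
```

Because the tie-break depends only on the versions themselves, two replicas that receive the same concurrent writes in different orders still pick the same winner.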

Verifying Functional Correctness in Conflict-Free Replicated Data Types

Explore why verifying the functional correctness of Conflict-Free Replicated Data Types (CRDTs) matters, with a focus on data consistency and on the program logic clients rely on. Learn about the importance of Strong Eventual Consistency (SEC) and why separate verification against atomic specifications is needed…

33 slides
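For readers new to CRDTs, the sketch below shows the classic grow-only counter (G-Counter) from the CRDT literature, not the verification approach the slides describe. Its merge takes a per-replica maximum, which is commutative, associative, and idempotent; that is what lets replicas that have received the same updates converge, as Strong Eventual Consistency requires. The class name and methods are illustrative.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: one non-decreasing slot per replica."""

    def __init__(self, replica_id: str) -> None:
        self.replica_id = replica_id
        self.counts: Dict[str, int] = {}

    def increment(self, n: int = 1) -> None:
        # Only non-negative increments keep the per-replica slots monotonic.
        if n < 0:
            raise ValueError("G-Counter only supports non-negative increments")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + n

    def value(self) -> int:
        # The counter's value is the sum of all replicas' slots.
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Element-wise maximum: commutative, associative, and idempotent.
        for rid, count in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), count)


a, b = GCounter("a"), GCounter("b")
a.increment(2)
b.increment(3)
a.merge(b)
b.merge(a)
```

After the two merges both replicas report the same value, and merging again changes nothing; a verification effort would aim to prove such properties for every possible interleaving rather than test a single run.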

An Introduction to Consensus with Raft: Overview and Importance

This document introduces consensus with the Raft algorithm and explains its key concepts, including the trade-off between availability and consistency in distributed systems, the importance of eliminating single points of failure, the need for consensus in building consistent storage systems, and…

20 slides

The Raft Consensus Algorithm: Simplifying Distributed Consensus

Consensus in distributed systems means getting multiple servers to agree on a shared state. The Raft consensus algorithm, designed by Diego Ongaro and John Ousterhout at Stanford University, aims to make consensus easier to understand and implement than alternatives such as Paxos. Raft uses a leader-based approach…

26 slides

Understanding the Raft Consensus Protocol

This presentation of the Raft consensus protocol, by Prof. Smruti R. Sarangi, describes Raft as a more understandable, easier-to-implement alternative to Paxos for reaching agreement in distributed systems. Key concepts include the replicated state machine model, leader election, and safety properties that ensure data consistency…

27 slides

Fault-Tolerant Replicated Systems in Computing

An overview of fault-tolerant replicated state machine systems in computing, covering primary-backup mechanisms, high-availability extensions, view changes on failure, leader election, and consensus protocols for replicated operations. The content emphasizes the importance of leaders in…

38 slides

Understanding DNA Replication Process in Living Organisms

DNA replication is a fundamental biological process in which one DNA molecule is copied to produce two identical molecules. The process has initiation, elongation, and termination stages and depends on replicator sequences and initiator proteins. The DNA is unwound and copied with the help of enzymes such as helicase…

16 slides

Understanding Data Protection Regulations and Definitions

Learn about the role of Data Protection Officers (DPOs), the Data Protection Act (DPA) of 2004 and its key elements, the definition of personal data, examples of personal data categories, and classifications of sensitive personal data. Explore how the DPO enforces privacy rights and safeguards personal…

33 slides

Understanding Data Awareness and Legal Considerations

This module covers types of data and their sensitivity, data access, legal aspects, and data classification. Explore aggregate data, microdata, methods of data collection, and identifiable, pseudonymised, and anonymised data. Learn to differentiate between individual health…

13 slides
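To illustrate the difference between identifiable and pseudonymised data from the module summary above, the sketch below replaces a direct identifier with a keyed hash (HMAC-SHA-256). Whoever holds the secret key can recompute the mapping and re-link records, which is why such data counts as pseudonymised rather than anonymised; anonymisation would also have to deal with the remaining quasi-identifiers. The field names, sample values, and the `pseudonymise` helper are hypothetical.

```python
import hashlib
import hmac
from typing import Any, Dict


def pseudonymise(record: Dict[str, Any], secret_key: bytes,
                 id_field: str = "patient_id") -> Dict[str, Any]:
    """Return a copy of record with its direct identifier replaced by a keyed hash."""
    out = dict(record)
    message = str(record[id_field]).encode("utf-8")
    # Same key + same identifier -> same pseudonym, so records can still be linked
    # across datasets; without the key the pseudonym cannot feasibly be reversed.
    out[id_field] = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return out


key = b"keep-this-key-separate-from-the-data"  # hypothetical secret key
row = {"patient_id": "P-0001", "age_band": "40-49", "diagnosis": "asthma"}
pseudo = pseudonymise(row, key)
```

Keeping the key under separate access control is what turns this into a workable pseudonymisation scheme; note that the age band and diagnosis are left untouched and could still help re-identify someone in a small population.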

Family Centered Services of CT: Empowering Youth through TOP Sessions & Community Service

Family Centered Services of CT, led by Executive Director Cheryl Burack, offers a nationally replicated youth development program focused on teen pregnancy prevention and academic success. Through TOP Sessions, 15- to 17-year-olds engage in guided discussions, community service learning, and individual…

8 slides

The Design and Implementation of the Warp Transactional Filesystem

The Warp Transactional Filesystem (WTF) is a new distributed filesystem design that focuses on strong guarantees and zero-copy interfaces. It introduces a file-slicing abstraction to improve application performance and scalability and to support transactional operations. The WTF architecture comprises…

16 slides

Quantum Distributed Proofs for Replicated Data

This research explores quantum distributed computing protocols for tasks such as leader election and Byzantine agreement. It introduces quantum dMA protocols for verifying the equality of replicated data on a network without shared randomness. The study discusses the need for efficient protocols with…

28 slides

Understanding Standard Deviation and Standard Error of the Means

Standard deviation measures the variability, or spread, of measurements in a data set, while the standard error of the means quantifies the precision of the mean of a set of means from replicated experiments. Variability is reflected in the range of data values, with a low standard deviation corresponding to…

7 slides
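The relationship in the summary above can be written as SEM = SD / √n, where SD is the sample standard deviation and n is the number of values being averaged (here, replicate means). The short sketch below computes both with the Python standard library; the replicate values are made up for illustration, and `sd_and_sem` is a hypothetical helper.

```python
import math
import statistics
from typing import Sequence, Tuple


def sd_and_sem(values: Sequence[float]) -> Tuple[float, float]:
    """Return (sample standard deviation, standard error of the mean)."""
    sd = statistics.stdev(values)      # uses the n - 1 denominator
    sem = sd / math.sqrt(len(values))  # SEM = SD / sqrt(n)
    return sd, sem


replicate_means = [4.0, 5.0, 6.0]      # illustrative values, not from the slides
sd, sem = sd_and_sem(replicate_means)
```

Because SEM shrinks with √n, adding replicates narrows the SEM even when the spread of the individual values (the SD) stays the same.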

Raft: A Consensus Algorithm for Replicated Logs Overview

Raft is a consensus algorithm developed by Diego Ongaro and John Ousterhout at Stanford University. Its consensus modules keep the replicated logs on all servers consistent, so that every server's state machine executes the same client commands in the same order. Raft uses leader election, normal operation for log replication…

31 slides
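The log-replication step mentioned in the summary above hinges on the AppendEntries consistency check. The sketch below follows the receiver rules in the Raft paper: reject stale terms, reject if the entry just before the new ones does not match, truncate on the first conflict, append, and advance the commit index. The `Follower` class is a hypothetical single-process simplification that omits election timers, vote state, persistence, and RPC plumbing.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Entry = Tuple[int, str]  # (term, command)


@dataclass
class Follower:
    current_term: int = 0
    log: List[Entry] = field(default_factory=list)  # Raft index i is log[i - 1]
    commit_index: int = 0

    def handle_append_entries(self, term: int, prev_log_index: int, prev_log_term: int,
                              entries: List[Entry], leader_commit: int) -> Tuple[int, bool]:
        """Return (current_term, success) for an AppendEntries RPC."""
        if term < self.current_term:
            return self.current_term, False  # message from a stale leader
        self.current_term = term
        # Consistency check: our log must contain an entry at prev_log_index
        # whose term equals prev_log_term (index 0 means "before the first entry").
        if prev_log_index > len(self.log):
            return self.current_term, False
        if prev_log_index > 0 and self.log[prev_log_index - 1][0] != prev_log_term:
            return self.current_term, False
        for offset, entry in enumerate(entries):
            pos = prev_log_index + offset  # 0-based list position of this entry
            if pos < len(self.log):
                if self.log[pos][0] == entry[0]:
                    continue  # already have this entry
                del self.log[pos:]  # conflict: drop it and everything after it
            self.log.append(entry)
        if leader_commit > self.commit_index:
            # Commit no further than the last entry this RPC covered.
            self.commit_index = min(leader_commit, prev_log_index + len(entries))
        return self.current_term, True


f = Follower(current_term=1, log=[(1, "a"), (1, "b"), (1, "stale")])
result = f.handle_append_entries(2, 2, 1, [(2, "c")], 3)
```

In this usage the follower's third entry came from an older term, so the leader's entry replaces it; a leader whose request is rejected would retry with a smaller `prev_log_index` until the logs match.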