Encoder and Decoder in Combinational Logic Circuits

In the world of digital systems, encoders and decoders play a crucial role in converting incoming information into appropriate binary forms for processing and output. Encoders transform data into binary codes suitable for display, while decoders ensure that binary data is correctly interpreted and u

8 views • 18 slides

Knowledge Distillation for Streaming ASR Encoder with Non-streaming Layer

The research introduces a novel knowledge distillation (KD) method for transitioning from non-streaming to streaming ASR encoders by incorporating auxiliary non-streaming layers and a special KD loss function. This approach enhances feature extraction, improves robustness to frame misalignment, and

4 views • 34 slides

Evolution of Neural Models: From RNN/LSTM to Transformers

Neural models have evolved from RNN/LSTM, designed for language processing tasks, to Transformers with enhanced context modeling. Transformers introduce features like attention, encoder-decoder architecture (e.g., BERT/GPT), and fine-tuning techniques for training. Pretrained models like BERT and GP

15 views • 11 slides

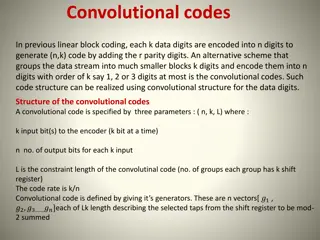

Convolutional Codes in Digital Communication

Convolutional codes provide an efficient alternative to linear block coding by grouping data into smaller blocks and encoding them into output bits. These codes are defined by parameters (n, k, L) and realized using a convolutional structure. Generators play a key role in determining the connections

2 views • 19 slides

ELECTRA: Pre-Training Text Encoders as Discriminators

ELECTRA efficiently learns an encoder that accurately classifies token replacements: instead of masking, it replaces some input tokens with samples from a generator. The key idea is to train a text encoder to distinguish input tokens from negative samples, resulting in bet

3 views • 12 slides

Decoding and NLG Examples in CSE 490U Section Week 10

This content delves into the concept of decoding in natural language generation (NLG) using RNN Encoder-Decoder models. It discusses decoding approaches such as greedy decoding, sampling from probability distributions, and beam search in RNNs. It also explores applications of decoding and machine tr

2 views • 28 slides

Comparing CLIP vs. LLaVA on Zero-Shot Classification by Misaki Matsuura

In this study by Misaki Matsuura, the effectiveness of CLIP (contrastive language-image pre-training) and LLaVA (large language-and-vision assistant) on zero-shot classification is explored. CLIP, with 63 million parameters, retrieves textual labels based on internet image-text pairs. On the other h

3 views • 6 slides

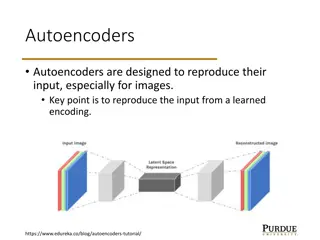

Variational Autoencoders (VAE) in Machine Learning

Autoencoders are neural networks designed to reproduce their input, with Variational Autoencoders (VAE) adding a probabilistic aspect to the encoding and decoding process. VAE makes use of encoder and decoder models that work together to learn probabilistic distributions for latent variables, enabli

10 views • 11 slides

Solar Energy Generator Design Rendering and Prototype Details

Solar Energy Generator design includes a prototype system mounted in a Pelican case with various peripherals. The system features a Laser Cut Delrin Panel covering all electronics with display, buttons, and a rotary encoder. External connections are facilitated through Souriau UTS circular connector

4 views • 7 slides

Generating Sense-specific Example Sentences with BART Approach

This work focuses on generating sense-specific example sentences using BART (Bidirectional and AutoRegressive Transformers) by conditioning on the target word and its contextual representation from another sentence with the desired sense. The approach involves two components: a contextual word encod

1 view • 19 slides

Optimized Colour Ordering for Grey to Colour Transformation

The research discusses the challenge of recovering a colour image from a grey-level image efficiently. It presents a solution involving parametric curve optimization in the encoder and decoder sides, minimizing errors and encapsulating colour data. The Parametric Curve maps grayscale values to colou

3 views • 19 slides

ZEN: Pre-training Chinese Text Encoder Enhanced by N-gram Representations

The ZEN model improves pre-training procedures by incorporating n-gram representations, addressing limitations of existing methods like BERT and ERNIE. By leveraging n-grams, ZEN enhances encoder training and generalization capabilities, demonstrating effectiveness across various NLP tasks and datas

2 views • 17 slides

Training wav2vec on Multiple Languages From Scratch

Large amounts of parallel speech-text data are unavailable for most languages, which led to the development of wav2vec for ASR systems. The training process involves self-supervised pretraining and low-resource finetuning. The model architecture includes a multi-layer convolutional feature encoder, qua

4 views • 10 slides

Transformer Neural Networks for Sequence-to-Sequence Translation

In the domain of neural networks, the Transformer architecture has revolutionized sequence-to-sequence translation tasks. This involves attention mechanisms, multi-head attention, transformer encoder layers, and positional embeddings to enhance the translation process. Additionally, Encoder-Decoder

4 views • 24 slides

OWSM-CTC: An Open Encoder-Only Speech Foundation Model

Explore OWSM-CTC, an innovative encoder-only model for diverse language speech-to-text tasks inspired by Whisper and OWSM. Learn about its non-autoregressive approach and implications for multilingual ASR, ST, and LID.

3 views • 39 slides

Efficient Video Encoder on CPU+FPGA Platform

Explore the integration of CPU and FPGA for a highly efficient and flexible video encoder. Learn about the motivation, industry trends, discussions, Xilinx Zynq architecture, design process, H.264 baseline profile, and more to achieve high throughput, low power consumption, and easy upgrading.

1 view • 27 slides

Neural Image Caption Generation: Show and Tell with NIC Model Architecture

This presentation delves into the intricacies of Neural Image Captioning, focusing on a model known as Neural Image Caption (NIC). The NIC's primary goal is to automatically generate descriptive English sentences for images. Leveraging the Encoder-Decoder structure, the NIC uses a deep CNN as the en

0 views • 13 slides

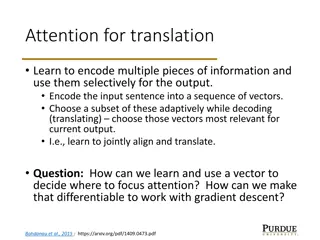

Attention Mechanism in Neural Machine Translation

In neural machine translation, attention mechanisms allow selective encoding of information and adaptive decoding for accurate output generation. By learning to align and translate, attention models encode input sequences into vectors, focusing on relevant parts during decoding. Utilizing soft atten

1 view • 17 slides

Convolutional Codes in Information Theory at University of Diyala

Concepts of convolutional codes and their application in error control coding within the Information Theory program at the University of Diyala's Communication Department. Understand the unique encoding process of convolutional encoders and the significance of parameters like coding rate and constra

2 views • 25 slides

Effective Approaches to Neural Machine Translation with Attention Mechanism

This research explores advanced techniques in neural machine translation with attention mechanisms, introducing new approaches and achieving state-of-the-art results in WMT English-French and English-German translations. The study delves into innovative models and examines variants of attention mech

1 view • 49 slides

Pointer Network and Sequence-to-Sequence in Machine Learning

Delve into the fascinating world of Pointer Network and Sequence-to-Sequence models in machine learning. Explore the interconnected concepts of encoder-decoder architecture, attention mechanisms, and the application of these models in tasks like summarization, machine translation, and chat-bot devel

1 view • 9 slides

Better Delivery. Better Exploits.

Delve into the world of exploit development with a focus on building an encoder for fun and knowledge. Discover the art of kits, understand the mindset of kit creators, and stay one step ahead of analysts. Learn about better obfuscation techniques, remain agile in your approach, and explore the nuan

0 views • 39 slides

Accurate Module Performance Predictions Using New IEC-61853 Model

Comprehensive details about a new model for accurate module performance predictions based on IEC-61853 data presented by Janine Freeman at the 7th PV Performance Modeling Workshop. The System Advisor Model (SAM) software enables detailed performance and economic analysis for renewable energy systems

17 views • 15 slides

Neural Machine Translation Overview

Explore the evolution of machine translation, from rule-based approaches to deep learning models like neural machine translation. Dive into the concepts of RNN encoder-decoder architecture and the mathematics behind statistical machine translation. Discover the power of deep learning in transforming

0 views • 13 slides

AESPrompt: Pre-Trained Language Models for Automated Essay Scoring

Learn about AESPrompt, a framework for automated essay scoring using pre-trained language models with prompt tuning. Discover how Prompt Encoder and self-supervised constraints enhance essay quality assessment. Explore the innovative prompt-based tuning method and self-supervised learning techniques

4 views • 11 slides

Encrypted Packet Classification Methodology using Deep Learning

Explore the methodology of encrypted packet classification by Fatemeh Mahdavi, utilizing techniques like Stacked Auto-Encoder (SAE) and 1D CNN. The study includes dataset analysis, pre-processing steps, experimental results, and challenges faced in the process.

6 views • 18 slides

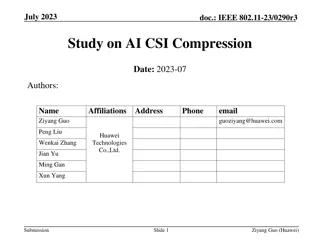

Study on AI CSI Compression in Wireless Communication

Explore the advancements in AI-driven Channel State Information (CSI) compression techniques for IEEE 802.11 standards. Discusses the use of neural networks for adapting to different channel conditions and bandwidths, achieving efficient compression with minimal overhead. Details the proposed lightw

3 views • 31 slides

Progressive Encoding-Decoding Using Convolutional Autoencoder - Research Internship Insights

Explore the innovative research on image compression using neural networks, specifically Progressive Encoding-Decoding with a Convolutional Autoencoder. The approach involves a Deep CNN-based encoder and decoder to achieve different compression rates without retraining the entire network. Results sh

2 views • 7 slides

Recurrent Encoder-Decoder Networks in Time-Varying Predictions

Explore the power of Recurrent Encoder-Decoder Networks for time-varying dense predictions, challenges with traditional RNNs, modifications like GRU and LSTM, bidirectional RNNs, and the fusion of CNN and RNN in CRNN for spatial-temporal data processing.

0 views • 10 slides

Study on AI CSI Compression Using VQVAE for IEEE 802.11-23

Explore the study on AI CSI compression utilizing Vector Quantized Variational Autoencoder (VQVAE) in IEEE 802.11-23. The research delves into model generalization, lightweight encoder development, and bandwidth variations for improved performance in CSI compression. Learn about ML solutions, existi

0 views • 21 slides

RNNs, Encoder-Decoder Models, and Machine Translation

Delve into the realm of Recurrent Neural Networks (RNNs) for sequence labeling and text categorization, explore the power of Encoder-Decoder models, attention mechanisms, and their role in machine translation. Unravel the complexities of mapping sequences to sequences, recall RNN equations, and gras

2 views • 43 slides

Sparse vs Dense Retrieval Models

Explore the differences between Sparse and Dense Retrieval models such as BM25, Bi-encoder, and Cross-encoder. Learn about lexical matching, vocabulary mismatch problems, and the use of Bi-Encoder vs Cross-Encoder in Dense Retrieval. Dive into the intricate details of cosine similarity scoring and r

5 views • 48 slides

Reducing Repeating Tokens in Encoder-Decoder Model

Explore how to reduce repeating tokens generated by an encoder-decoder model in natural language processing tasks. The model, comprising two neural networks, has shown rapid progress in tasks like machine translation and text summarization. By selecting words with the highest probability during trai

0 views • 40 slides

Cross-Modal Generative Error Correction Framework

A groundbreaking framework, Whispering LLaMA, is presented in this paper. It introduces a two-pass rescoring paradigm utilizing advanced models to enhance speech recognition accuracy and error correction. The method integrates Whisper and LLaMA models for improved transcription outcomes, highlightin

0 views • 15 slides

Titan 10.1.0 Change Log Highlights and Updates

Explore the latest changes in Titan 10.1.0 including bug fixes, C++ compiler improvements, standard uplift, PER encoder enhancements, standalone ASN.1 encoder support, WSL2 usage as a Windows alternative, and more. Stay informed about the important updates in the Titan toolset for efficient developm

0 views • 7 slides

Understanding Transformer Architecture for Students

Explore the workings of the Transformer model, including Encoder, Decoder, Multi-Head Attention, and more. Dive into examples and visual representations to grasp key concepts easily. Perfect for students eager to learn about advanced neural network structures.

3 views • 37 slides

End-to-End Speech Translation using XSTNet

A comprehensive overview of end-to-end speech translation leveraging the innovative XSTNet model. Discusses the challenges in training E2E ST models, introduces XSTNet functionalities like supporting audio/text input, utilizing Transformer module, self-supervised audio representation learning, and m

2 views • 28 slides

Advanced Speech Translation System Leveraging Large Language Models

Explore the enhanced end-to-end speech translation system empowered by large language models (LLMs). The system focuses on efficient utilization of LLMs for high-performance speech translation, covering model architecture design, training strategies, and data recipe. Dive into speech encoder techniq

3 views • 31 slides

Reducing Token Repetition in Encoder-Decoder Model

Explore the reduction of repeating tokens generated by an encoder-decoder model, in a study supervised by Okumura Manabu. Learn about the background, related research, proposed methods, experiments, and conclusions in this study. Discover how the encoder-decoder model is used for natural language processing tas

2 views • 40 slides

Efficient Conformers via Sharing Experts for Speech Recognition

Explore parameter-efficient Conformers employing Sparsely-Gated Experts for precise end-to-end speech recognition. Leveraging knowledge distillation and weight-sharing mechanisms, this approach achieves competitive performance with significantly reduced encoder parameters. Discover attention-based e

1 view • 19 slides