Enhancing Data Reception Performance with GPU Acceleration in CCSDS 131.2-B Protocol

Explore the utilization of Graphics Processing Unit (GPU) accelerators for high-performance data reception in a Software Defined Radio (SDR) system following the CCSDS 131.2-B protocol. The research, presented at the EDHPC 2023 Conference, focuses on implementing a state-of-the-art GP-GPU receiver t

0 views • 33 slides

Understanding Parallelism in GPU Computing by Martin Kruli

This content covers the types of parallelism in GPU computing, such as task parallelism and data parallelism. It also discusses problems unsuitable for GPUs and solutions such as iterative kernel execution and mapping irregular structures to regular grids. The article also touc

1 view • 39 slides

Overview of GPU Architecture and Memory Systems in NVIDIA Tegra X1

Dive into the intricacies of GPU architecture and memory systems with a detailed exploration of the NVIDIA Tegra X1 die photo, instruction fetching mechanisms, SIMT core organization, cache lockup problems, and efficient memory management techniques highlighted in the provided educational materials.

7 views • 62 slides

Network Function Abstraction: A delicate question of (CPU) affinity?

Exploring the delicate balance of CPU affinity in network function abstraction, including challenges, benefits, and solutions like CPU pinning for network workloads. Learn about the impact on performance and scalability, as well as the importance of proper configuration in virtual and physical envir

3 views • 40 slides

Understanding Computer Architecture: CPU Structure and Function

Delve into the intricate world of computer architecture with Prof. Dr. Nizamettin AYDIN as your guide. Explore topics such as CPU structure, registers, instruction cycles, data flow, pipelining, and handling conditional branches. Gain insights into the responsibilities of a CPU, internal structures,

0 views • 39 slides

Parallel Implementation of Multivariate Empirical Mode Decomposition on GPU

Empirical Mode Decomposition (EMD) is a signal processing technique used for separating different oscillation modes in a time series signal. This paper explores the parallel implementation of Multivariate Empirical Mode Decomposition (MEMD) on GPU, discussing numerical steps, implementation details,

1 view • 15 slides

GPU Scheduling Strategies: Maximizing Performance with Cache-Conscious Wavefront Scheduling

Explore GPU scheduling strategies including Loose Round Robin (LRR) for maximizing performance by efficiently managing warps, Cache-Conscious Wavefront Scheduling for improved cache utilization, and Greedy-then-oldest (GTO) scheduling to enhance cache locality. Learn how these techniques optimize GP

0 views • 21 slides

Understanding Modern GPU Computing: A Historical Overview

Delve into the fascinating history of Graphics Processing Units (GPUs), from the era of CPU-dominated graphics computation to the introduction of 3D accelerator cards, and the evolution of GPU architectures like NVIDIA Volta-based GV100. Explore the peak performance comparison between CPUs and GPUs,

5 views • 20 slides

Redesigning the GPU Memory Hierarchy for Multi-Application Concurrency

This presentation delves into the innovative reimagining of GPU memory hierarchy to accommodate multiple applications concurrently. It explores the challenges of GPU sharing with address translation, high-latency page walks, and inefficient caching, offering insights into a translation-aware memory

1 view • 15 slides

Understanding CPU Scheduling in Operating Systems

In a single-processor system, processes take turns running on the CPU. The goal of multiprogramming is to keep the CPU busy at all times. CPU scheduling relies on the alternating CPU and I/O burst cycles of processes. The CPU scheduler selects processes from the ready queue to execute when the CPU i

1 view • 26 slides

GPU-Accelerated Delaunay Refinement: Efficient Triangulation Algorithm

This study presents a novel approach for computing Delaunay refinement using GPU acceleration. The algorithm aims to generate a constrained Delaunay triangulation from a planar straight line graph efficiently, with improvements in termination handling and Steiner point management. By leveraging GPU

0 views • 23 slides

vFireLib: Forest Fire Simulation Library on GPU

Dive into Jessica Smith's thesis defense on vFireLib, a forest fire simulation library implemented on the GPU. The research focuses on real-time GPU-based wildfire simulation for effective and safe wildfire suppression efforts, aiming to reduce costs and mitigate loss of habitat, property, and life.

0 views • 95 slides

Understanding GPU Programming Models and Execution Architecture

Explore the world of GPU programming with insights into GPU architecture, programming models, and execution models. Discover the evolution of GPUs and their importance in graphics engines and high-performance computing, as discussed by experts from the University of Michigan.

0 views • 28 slides

Microarchitectural Performance Characterization of Irregular GPU Kernels

GPUs are widely used for high-performance computing, but irregular algorithms pose challenges for parallelization. This study delves into the microarchitectural aspects affecting GPU performance, emphasizing best practices to optimize irregular GPU kernels. The impact of branch divergence, memory co

0 views • 26 slides

Advanced GPU Performance Modeling Techniques

Explore cutting-edge techniques in GPU performance modeling, including interval analysis, resource contention identification, detailed timing simulation, and balancing accuracy with efficiency. Learn how to leverage both functional simulation and analytical modeling to pinpoint performance bottlenec

0 views • 32 slides

Deep Learning with Theano: Installation, Neurons, and Exploration

Delve into the world of deep learning with Peter Podolski's comprehensive guide on utilizing Theano for neural network development. Explore topics such as installation on various systems, working with neurons, and unlocking the potential for CPU and GPU optimization. Discover insights on hidden node

0 views • 20 slides

Communication Costs in Distributed Sparse Tensor Factorization on Multi-GPU Systems

This research paper presented an evaluation of communication costs for distributed sparse tensor factorization on multi-GPU systems. It discussed the background of tensors, tensor factorization methods like CP-ALS, and communication requirements in RefacTo. The motivation highlighted the dominance o

0 views • 34 slides

GPU Acceleration in ITK v4 Overview

This presentation by Won-Ki Jeong from Harvard University at the ITK v4 winter meeting in 2011 discusses the implementation and advantages of GPU acceleration in ITK v4. Topics covered include the use of GPUs as co-processors for massively parallel processing, memory and process management, new GPU

0 views • 33 slides

Understanding GPU-Accelerated Fast Fourier Transform

Today's lecture delves into the realm of GPU-accelerated Fast Fourier Transform (cuFFT), exploring the frequency content present in signals, Discrete Fourier Transform (DFT) formulations, roots of unity, and an alternative approach for DFT calculation. The lecture showcases the efficiency of GPU-bas

0 views • 40 slides

GPU Computing and Synchronization Techniques

Synchronization in GPU computing is crucial for managing shared resources and coordinating parallel tasks efficiently. Techniques such as __syncthreads() and atomic instructions help ensure data integrity and avoid race conditions in parallel algorithms. Examples requiring synchronization include Pa

0 views • 22 slides

Understanding GPU Performance for NFA Processing

Hongyuan Liu, Sreepathi Pai, and Adwait Jog delve into the challenges of GPU performance when executing NFAs. They address data movement and utilization issues, proposing solutions and discussing the efficiency of processing large-scale NFAs on GPUs. The research explores architectures and paralleli

0 views • 25 slides

Energy-Efficient Query Processing on Embedded CPU-GPU Architectures

This study explores the energy efficiency of query processing on embedded CPU-GPU architectures, focusing on the utilization of embedded GPUs and the potential for co-processing with CPUs. The research evaluates the performance and power consumption of different processing approaches, considering th

0 views • 22 slides

Maximizing GPU Throughput with HTCondor in 2023

Explore the integration of GPUs with HTCondor for efficient throughput computing in 2023. Learn how to enable GPUs on execution platforms, request GPUs for jobs, and configure job environments. Discover key considerations for jobs with specific GPU requirements and how to allocate GPUs effectively.

0 views • 22 slides

ZMCintegral: Python Package for Monte Carlo Integration on Multi-GPU Devices

ZMCintegral is an easy-to-use Python package designed for Monte Carlo integration on multi-GPU devices. It offers features such as random sampling within a domain, adaptive importance sampling using methods like Vegas, and leveraging TensorFlow-GPU backend for efficient computation. The package prov

0 views • 7 slides

GPU Acceleration in ITK v4: Overview and Implementation

This presentation discusses the implementation of GPU acceleration in ITK v4, focusing on providing a high-level GPU abstraction, transparent resource management, code development status, and GPU core classes. Goals include speeding up certain types of problems and managing memory effectively.

0 views • 32 slides

Improvements and Performance Analysis of GATE Simulation on HPC Cluster

This report covers the status of GATE-related projects presented in May 2017 by Liliana Caldeira, Mirjam Lenz, and Uwe Pietrzyk at the Helmholtz-Gemeinschaft. It focuses on running GATE on a high-performance computing (HPC) cluster, particularly on the JURECA supercomputer at the Juelich Supercomp

0 views • 8 slides

Efficient Parallelization Techniques for GPU Ray Tracing

Dive into the world of real-time ray tracing with part 2 of this series, focusing on parallelizing your ray tracer for optimal performance. Explore the essentials needed before GPU ray tracing, handle materials, textures, and mesh files efficiently, and understand the complexities of rendering trian

0 views • 159 slides

Understanding CPU Structure and Function in Computer Organization and Architecture

Exploring the intricate details of CPU architecture, this content delves into the essential tasks of fetching, interpreting, processing, and writing data. It discusses the significance of registers, user-visible registers, general-purpose registers, and condition code registers in CPU operations. Ad

0 views • 83 slides

Understanding CPU Architecture in Computing for GCSE Students

Explore the fundamental concepts of CPU architecture, including the Von Neumann Architecture, common CPU components like ALU and CU, and how characteristics such as Clock Speed and Cache Size impact performance. Learn about the Fetch-Execute Cycle and the essential hardware components of a computer

0 views • 18 slides

Intel CPU Architectures Overview: Evolution and Features

Explore the evolution and key features of various Intel CPU architectures including Pentium, Core, and Pentium 4 series. Learn about the pipeline stages, instruction issue capabilities, branch prediction mechanisms, cache designs, and memory speculation techniques employed in these processors. Gain

0 views • 11 slides

Understanding the Basics of Multi-Stage Architecture in CPU Design

The article explains the fundamentals of a multi-stage digital processing system in computer organization, focusing on the central processing unit (CPU). It covers topics such as instruction execution, processor building blocks, and the benefits of pipelined operation. Concepts like fetching, decodi

0 views • 42 slides

Synchronization and Shared Memory in GPU Computing

Synchronization and shared memory play vital roles in optimizing parallelism in GPU computing. __syncthreads() enables thread synchronization within blocks, while atomic instructions ensure serialized access to shared resources. Examples like Parallel BFS and summing numbers highlight the need for s

0 views • 21 slides

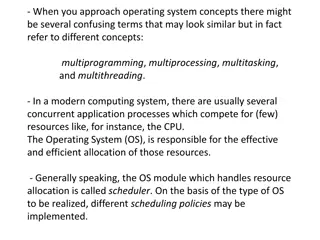

Understanding Operating System Concepts: Multiprogramming, Multiprocessing, Multitasking, and Multithreading

In the realm of operating systems, terms like multiprogramming, multiprocessing, multitasking, and multithreading can often be confusing due to their similar appearance but distinct meanings. These concepts play a crucial role in efficiently managing resources in a computing system, particularly in

0 views • 6 slides

Understanding CPU Scheduling Concepts at Eshan College of Engineering, Mathura

Dive into the world of CPU scheduling at Eshan College of Engineering in Mathura with Associate Professor Vyom Kulshreshtha. Explore topics such as CPU utilization, I/O burst cycles, CPU burst distribution, and more. Learn about the CPU scheduler, dispatcher module, scheduling criteria, and the impl

0 views • 18 slides

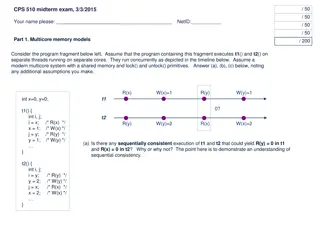

Multicore Memory Models and CPU Protection in Operating Systems

This content covers topics related to multicore memory models, synchronization, CPU protection levels in Dune-enabled Linux systems, and concurrency control in multithreaded programs. The material includes scenarios, questions, and diagrams to test understanding of these concepts in the context of t

0 views • 10 slides

Understanding Barrel Shifter in CPU Design

The barrel shifter is a vital component in CPU architecture, enabling shift and rotate operations on data inputs based on control signals. The shifter consists of two main blocks: the Shift-and-Rotate Array (SARA) and the Control Logic. SARA, designed with multiple stages of cells, executes shift and rota

0 views • 12 slides

Fast Noncontiguous GPU Data Movement in Hybrid MPI+GPU Environments

This research focuses on enabling efficient and fast noncontiguous data movement between GPUs in hybrid MPI+GPU environments. The study explores techniques such as MPI-derived data types to facilitate noncontiguous message passing and improve communication performance in GPU-accelerated systems. By

0 views • 18 slides

Understanding CPU Virtualization and Execution Control in Operating Systems

Explore the concepts of CPU virtualization, direct execution, and control mechanisms in operating systems illustrated through a series of descriptive images. Learn about efficient CPU virtualization techniques, managing restricted operations, system calls, and a limited direct execution protocol for

0 views • 18 slides

Enhancing gem5's GPUFS Support for Improved Simulation Speed

Addressing challenges in application scaling, this project focuses on enhancing gem5's GPUFS support to improve simulation speed by functionally simulating memory copies and adding KVM CPU-GPU support. The introduction covers prior CPU-GPU support in gem5, ML support, and the introduction of GPUFS s

0 views • 19 slides

Illustrated Design of a Simplified CPU with 16-bit RAM

This presentation demonstrates the design of a basic CPU with 11 instructions and a RAM of 4096 16-bit words, showcasing the assembly of a general-purpose computer using gates and registers. The CPU comprises 8 key registers for various functions, employing a sequential circuit for instruction execution. The machine language pr

0 views • 31 slides