CS 404/504 Special Topics

Adversarial machine learning techniques in text and audio data involve generating manipulated samples to mislead models. Text attacks often involve word replacements or additions to alter the meaning while maintaining human readability. Various strategies are used to create adversarial text examples

1 view • 57 slides

Practically Adopting Human Activity Recognition

Cutting-edge research in Human Activity Recognition (HAR) focuses on practical adoption at scale, leveraging labeled and unlabeled data for inference and adaptation. Prior works explore models like LIMU-BERT and address challenges in combating data heterogeneity for feature extraction.

2 views • 35 slides

Mysterious Jungle Expedition

Amidst the dense jungle, two explorers, Simon and Bert, embark on an expedition filled with danger and intrigue. As they uncover hidden artifacts and face unexpected challenges, a sense of foreboding lingers in the air, hinting at a thrilling adventure ahead.

0 views • 12 slides

Transforming NLP for Defense Personnel Analytics: ADVANA Cloud-Based Platform

The Defense Personnel Analytics Center (DPAC) is enhancing its NLP capabilities by implementing a transformer-based platform on the Department of Defense's cloud system ADVANA. The platform focuses on topic modeling and sentiment analysis of open-ended survey responses from various DoD populations. Le

0 views • 13 slides

Deep Learning Applications in Biotechnology: Word2Vec and Beyond

Explore the intersection of deep learning and biotechnology, focusing on Word2Vec and its applications in protein structure prediction. Understand the transformation from discrete to continuous space, the challenges of traditional word representation methods, and the implications for computational l

0 views • 28 slides

Evolution of Neural Models: From RNN/LSTM to Transformers

Neural models have evolved from RNN/LSTM, designed for language processing tasks, to Transformers with enhanced context modeling. Transformers introduce features like attention, encoder and decoder stacks (e.g., the encoder-only BERT and the decoder-only GPT), and fine-tuning techniques for training. Pretrained models like BERT and GP

1 view • 11 slides

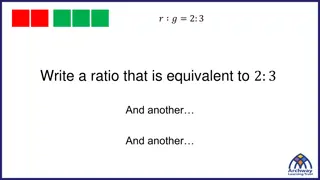

Equivalent Ratios and Linear Sequences for Problem Solving

Learn how to identify and work with equivalent ratios, spot linear sequences, and solve problems using ratios. Practice simplifying ratios, correcting work, and applying ratios in real-life scenarios. Explore scenarios like sharing sweets between Alice and Bert to strengthen your understanding of ra

1 view • 19 slides

Exploring Transformer Models for Text Mining and Beyond

Delve into the world of transformer models, from foundational concepts to practical applications like predicting words, sentences, and even generating complex content. Discover examples like BERT, GPT-2, and ChatGPT, showcasing how these models can handle diverse tasks beyond traditional language pr

0 views • 26 slides

A Comprehensive Overview of BERT and Contextualized Encoders

This informative content delves into the evolution and significance of BERT - a prominent model in NLP. It discusses the background history of text representations, introduces BERT and its pretraining tasks, and explores the various aspects and applications of contextualized language models. Delve i

0 views • 85 slides

UN-REDD Support on Safeguards and Safeguard Information Systems

The UN-REDD Programme assists countries in addressing social and environmental issues through the development of national approaches to safeguards. The programme's approach emphasizes the importance of safeguarding national REDD+ systems to manage potential negative impacts and enhance benefits. Str

0 views • 12 slides

Platform Support for Developing Analysis and Testing Plugins

This presentation discusses the platform support for developing plugins that aid in program analysis and software testing in IDEs. It covers IDE features, regression testing processes, traditional regression testing methods, and a case study on BEhavioral Regression Testing (BERT). The talk also del

0 views • 20 slides

ZEN: Pre-training Chinese Text Encoder Enhanced by N-gram Representations

The ZEN model improves pre-training procedures by incorporating n-gram representations, addressing limitations of existing methods like BERT and ERNIE. By leveraging n-grams, ZEN enhances encoder training and generalization capabilities, demonstrating effectiveness across various NLP tasks and datas

0 views • 17 slides

Extending Multilingual BERT to Low-Resource Languages

This study focuses on extending Multilingual BERT to low-resource languages through cross-lingual zero-shot transfer. It addresses the challenges of limited annotations and the absence of language models for low-resource languages. By proposing methods for knowledge transfer and vocabulary accommoda

0 views • 21 slides

Webinar on Value of FAIR Evaluators in EOSC-Nordic Step 5

Discover the importance of FAIR Evaluators in the EOSC-Nordic Step 5 webinar series. Learn about the assessment process, key takeaways, and insights on FAIR data and its implications. Join Bert Meerman, Director of GO FAIR Foundation, as he delves into the significance of machine actionability and t

0 views • 5 slides

Feedback on UN-REDD Programme's SEPC and BeRT in Sudan

Feedback on the implementation of the UN-REDD Programme's Social and Environmental Principles and Criteria (SEPC) and the Benefit and Risks Tool (BeRT) in Sudan. The workshop aimed to identify the benefits of the SEPC tool for designing the Sudan National REDD programme, explore its usefulness on th

1 view • 15 slides

Corporate Climate Assessment Using NLP Clustering

This work explores a novel approach in corporate climate assessment through applied NLP clustering, highlighting the relationship between climate risk and financial implications. The use of advanced techniques like BERT embedding for topic representation and clustering in corporate reports is discus

0 views • 33 slides

ATC Advanced Technologies Committee Overview 2023-2024 Activities

The ATC Advanced Technologies Committee, led by Bert van Duijn, focuses on sharing image data for developing imaging technologies for seed quality testing. With members from 13 countries, the committee engages in various working groups exploring X-ray, molecular, and mathematical technologies. They

0 views • 10 slides

Mediation in the Netherlands: Towards Peaceful Solutions Conference Interaction

Bert Maan, an international expert, discussed the background and overview of mediation in the Netherlands, highlighting the purpose of law, legal culture, the arrival of mediation, and the current situation. The legal culture in the Netherlands emphasizes a solution-oriented approach, with a traditi

0 views • 20 slides

Decoding Communication in NLP Models

Large-scale language models are the current trend in NLP, emphasizing end-to-end learning for tasks like machine translation. Pre-trained language models, such as ELMo and BERT, have shown impressive results. The focus is on understanding and interpreting the internal structure of these models to i

0 views • 54 slides