Building Bridges and Integrating Approaches in Research for Efficiency and Interoperability

Despite different starting points, the core documents of DFIG and the Publishing Workflow IG overlap significantly. The session focuses on integrating the two approaches to address these overlapping issues. It emphasizes building bridges across differences in research culture and terminology. Key themes include configuration building, the data fabric cycle, dimensions of testing, and compositions of components that make the research enterprise more efficient and interoperable.

- Research Integration

- Interdisciplinary Collaboration

- Data Fabric Cycle

- Configuration Building

- Efficiency

Presentation Transcript

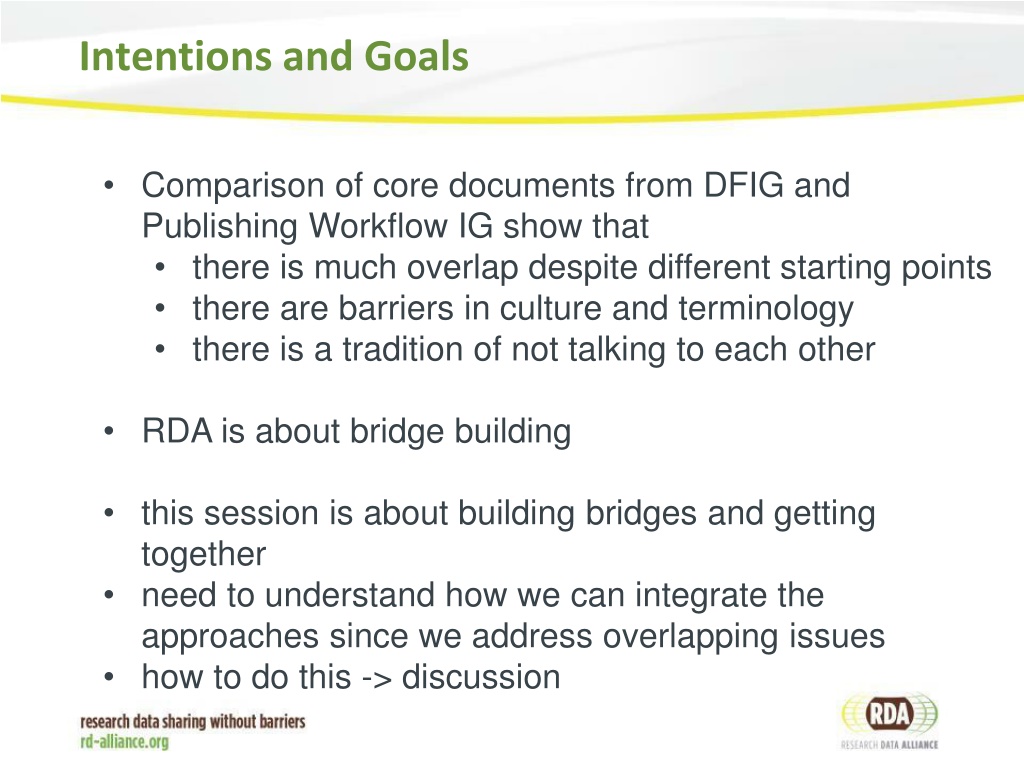

Intentions and Goals: A comparison of the core documents from DFIG and the Publishing Workflow IG shows much overlap despite different starting points. There are barriers in culture and terminology, and there is a tradition of not talking to each other. RDA is about bridge building, and this session is about building bridges and getting together. We need to understand how to integrate the approaches, since we address overlapping issues. How to do this is the subject of the discussion.

Configuration Building: The entire research enterprise now relies on software. Much of this software has been either home-brewed or borrowed from other domains. Reusable components are needed for efficiency and interoperability. Publishing, in the broadest sense, is the clear and obvious outcome of research. We need to look for common components and approaches across all research areas.

Data Fabric Cycle: Observations, experiments, simulations, etc. The slide depicted the continuous cycle of creating raw data, or derived data based on collections of existing data. The aim is to identify components that could improve this cycle, step by step.
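The cycle can be made concrete with a small provenance model. This is a minimal sketch under stated assumptions. The `DataObject` class, the `capture` and `derive` helpers, and the `obj-N` identifiers are all hypothetical. They stand in for whatever PID and repository components a real fabric would use. The sketch shows only the point of the slide: raw data enter from observations, experiments or simulations. Derived data are then built from collections of existing objects and rejoin the pool.

```python
import itertools
from dataclasses import dataclass

# Hypothetical local counter; a real data fabric would mint persistent identifiers instead.
_counter = itertools.count(1)


@dataclass(frozen=True)
class DataObject:
    pid: str
    kind: str                            # "raw" or "derived"
    origin: str                          # "observation", "experiment", "simulation", or "derivation"
    derived_from: tuple[str, ...] = ()   # PIDs of the inputs; empty for raw data


def capture(origin: str) -> DataObject:
    """Create a raw data object from a primary source."""
    return DataObject(pid=f"obj-{next(_counter)}", kind="raw", origin=origin)


def derive(collection: list[DataObject]) -> DataObject:
    """Create a derived object from a collection of existing objects, recording provenance."""
    if not collection:
        raise ValueError("a derived object needs at least one input")
    return DataObject(
        pid=f"obj-{next(_counter)}",
        kind="derived",
        origin="derivation",
        derived_from=tuple(obj.pid for obj in collection),
    )


# One turn of the cycle: raw data in, derived data out, and the result rejoins the pool.
pool = [capture("observation"), capture("simulation")]
pool.append(derive(pool))
pool.append(derive(pool[-2:]))  # derived data can themselves be inputs to further derivation
for obj in pool:
    print(obj.pid, obj.kind, obj.derived_from)
```

Each component the slide asks to identify (registration, collection building, and so on) would replace one of these toy helpers.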

Dimensions of Testing (Getting to Data Fabrics): RDA produces RDA Recommendations (i.e., outputs). Some technically oriented RDA Recommendations come with reference software, and these are a starting point. Many technically oriented RDA Recommendations have no reference software, yet they are machine actionable (a schema, for instance). These are also of immediate interest to data fabric composition. Other Recommendations play important but background roles in early composition.

[Diagram: RDA Recommendations grouped as: with reference software; machine actionable; human consumption needed before being actionable; purely human consumption and action.]
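The grouping above works as a triage rule for deciding what to test first. The following is a minimal sketch, assuming the four kinds in the diagram. The enum names, the `composition_candidates` helper and the example recommendation names are hypothetical. The code keeps the recommendations the slide calls a starting point or of immediate interest, and lists those with reference software first.

```python
from enum import Enum


class RecommendationKind(Enum):
    """Kinds of RDA Recommendations as distinguished on the slide."""
    REFERENCE_SOFTWARE = "comes with reference software"
    MACHINE_ACTIONABLE = "machine actionable (e.g. a schema), no reference software"
    HUMAN_FIRST = "needs human consumption before being actionable"
    HUMAN_ONLY = "purely human consumption and action"


# Kinds the slide treats as a starting point or of immediate interest for fabric composition.
IMMEDIATE_INTEREST = {RecommendationKind.REFERENCE_SOFTWARE, RecommendationKind.MACHINE_ACTIONABLE}


def composition_candidates(catalog: dict[str, RecommendationKind]) -> list[str]:
    """Return recommendations to test first; those with reference software come first."""
    candidates = [name for name, kind in catalog.items() if kind in IMMEDIATE_INTEREST]
    # sorted() is stable, so ties keep catalog order; False (has software) sorts before True.
    return sorted(candidates, key=lambda name: catalog[name] is not RecommendationKind.REFERENCE_SOFTWARE)


# Hypothetical catalog entries for illustration only.
catalog = {
    "rec-schema": RecommendationKind.MACHINE_ACTIONABLE,
    "rec-guidelines": RecommendationKind.HUMAN_ONLY,
    "rec-service": RecommendationKind.REFERENCE_SOFTWARE,
    "rec-model": RecommendationKind.HUMAN_FIRST,
}
print(composition_candidates(catalog))
```

Recommendations in the two human-oriented groups are not discarded. They remain in the catalog for the background roles the slide assigns them.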

Compositions of Components: [Diagram: Composition (or Fabric) A and Composition B, with core components & services and specific components & services.]

Compositions of Components: Given the nature of data (you can't do much without understanding it), a successful data fabric will likely:

1. Run on possibly distributed e-infrastructure (EUDAT, NDS, …)
2. Serve a scholarly domain as domain infrastructure
3. Support multiple projects within that domain
4. Eventually result in cross-domain research

For 3 and 4 to be realized, the composition of 2 must be shared across projects.
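The condition "the composition of 2 must be shared across projects" can be stated as a subset check. Each project's composition must contain the whole domain core. A minimal sketch, modeling compositions as sets of component names. The names are hypothetical, loosely borrowed from the components listed on the final slide, and the helper functions are illustrative only.

```python
# Hypothetical component names for illustration.
domain_core = {"PID system", "MD schemas", "vocabularies"}

compositions = {
    "project_a": domain_core | {"simulation workflow"},
    "project_b": domain_core | {"MD editors", "instrument ingest"},
}


def shared_core(comps: dict[str, set[str]]) -> set[str]:
    """Components that every composition in the domain has in common (needs at least one)."""
    return set.intersection(*comps.values())


def core_is_shared(core: set[str], comps: dict[str, set[str]]) -> bool:
    """True if every project composition includes the whole domain core."""
    return all(core <= comp for comp in comps.values())


print(sorted(shared_core(compositions)))
print(core_is_shared(domain_core, compositions))
```

If one project swaps in its own PID system, `core_is_shared` becomes false. That is the situation in which, per the slide, cross-project and cross-domain research would be hard to realize.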

Testbed (US; funding proposal under review): to facilitate community evaluation of the DTR and PIT services, and to test complex types.

WCRP CMIP6 Data Scenario:

- WCRP: World Climate Research Programme
- CMIP6: Coupled Model Intercomparison Project, Phase 6
- Ultimately informs the IPCC assessment reports
- Big community effort: Earth system simulations run internationally at multiple sites
- Estimated data volume of ca. 50 PB over the next 5 years
- A PID for every file: ca. 250 million Handles
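The two figures on this slide imply a rough scale for the registration service. This is a back-of-envelope sketch under three assumptions. It uses decimal SI units (1 PB = 10^15 bytes), spreads the load evenly over 5 × 365 days, and counts exactly one Handle per file.

```python
# Back-of-envelope sizing for the CMIP6 scenario (SI units and an even spread are assumptions).
PB = 10**15   # bytes per petabyte (SI)
MB = 10**6    # bytes per megabyte (SI)

data_volume_bytes = 50 * PB            # ca. 50 PB over the next 5 years
handle_count = 250_000_000             # ca. 250 million Handles, one per file
period_seconds = 5 * 365 * 24 * 3600   # 5 years, ignoring leap days

avg_file_size_mb = data_volume_bytes / handle_count / MB
avg_registrations_per_second = handle_count / period_seconds

print(f"average file size: {avg_file_size_mb:.0f} MB")
print(f"average registration rate: {avg_registrations_per_second:.2f} Handles/s")
```

Under these assumptions the average file is about 200 MB. The average rate is under two registrations per second. The averages are modest, so the harder problem is peaks well above them, such as the burst events named on the scalability slide.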

The publishing process is elaborate and takes a lot of real time. Requirement: put a Handle in every file header, but files may not be changed after the production phase.

[Diagram: the CMIP6 publication workflow. Labels include Production Phase, Project / Community Phase, LTA step, and Bibliometric Phase. Other labels are CMOR; ESGF publication, replication, and project storage; IPCC DDC archive; DOI; metadata checks M1 to M4; and data checks D1 to D3.]
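The requirement has a direct consequence: the Handle has to be written into the header while the file is still in the production phase. A minimal sketch of that rule as a guarded state machine follows. The `Phase` and `DataFile` classes are hypothetical. The phase names come from the diagram labels, but their strict ordering here is an assumption.

```python
import uuid
from enum import IntEnum


class Phase(IntEnum):
    # Names taken from the workflow diagram; the strict ordering is an assumption.
    PRODUCTION = 1
    PROJECT_COMMUNITY = 2
    LTA = 3
    BIBLIOMETRIC = 4


class DataFile:
    """A data file whose header may only be written during the production phase."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.header: dict[str, str] = {}
        self.phase = Phase.PRODUCTION

    def set_header(self, key: str, value: str) -> None:
        if self.phase is not Phase.PRODUCTION:
            raise PermissionError(f"{self.name}: files may not change after the production phase")
        self.header[key] = value

    def advance(self) -> None:
        """Move to the next phase; the Handle must already be embedded in the header."""
        if "tracking_id" not in self.header:
            raise ValueError(f"{self.name}: embed the tracking_id before leaving production")
        if self.phase < Phase.BIBLIOMETRIC:
            self.phase = Phase(self.phase + 1)


f = DataFile("example.nc")                                   # hypothetical file name
f.set_header("tracking_id", f"hdl:21.14100/{uuid.uuid4()}")  # Handle embedded during production
f.advance()                                                  # file is now read-only
print(f.phase.name)
```

Putting the check in `advance` makes the tracking_id a precondition for leaving production. Otherwise a file could reach the publication phases without its embedded Handle.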

More Details on the Registration Workflow:

- Requirement: put a Handle in every file header, but files may not be changed after the production phase
- `tracking_id = hdl:21.14100/<UUID>`
- A lot of time was spent on agreements that ensure the sanity of the PID record
- Each object gets a PID, and no object outside our control carries an embedded PID
- PIDs are not citable: the required metadata is not ready
- Still, some file header attributes are extracted and put into the PID record
- PIDs are a new development
- Handle registration is not allowed to interrupt the publication process
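The `tracking_id` pattern and the header-to-PID-record step can be sketched in a few lines. This is an illustration under stated assumptions, not the project's tooling. The code assumes the suffix is a random UUID (version 4). The helper names are hypothetical. The header attributes `experiment_id` and `variable_id` are used only as examples, because the slide does not say which headers were extracted.

```python
import uuid

HANDLE_PREFIX = "21.14100"  # prefix shown on the slide


def new_tracking_id() -> str:
    """Mint a tracking_id of the form hdl:21.14100/<UUID> (random UUID4 assumed)."""
    return f"hdl:{HANDLE_PREFIX}/{uuid.uuid4()}"


def parse_tracking_id(tracking_id: str) -> tuple[str, uuid.UUID]:
    """Split a tracking_id into Handle prefix and UUID suffix; raise ValueError if malformed."""
    scheme, _, handle = tracking_id.partition(":")
    prefix, sep, suffix = handle.partition("/")
    if scheme != "hdl" or not sep:
        raise ValueError(f"not of the form hdl:<prefix>/<UUID>: {tracking_id!r}")
    return prefix, uuid.UUID(suffix)  # uuid.UUID raises ValueError for a malformed suffix


def pid_record_from_header(header: dict[str, str], fields: list[str]) -> dict[str, str]:
    """Copy selected header attributes into a PID record (the field selection is hypothetical)."""
    return {name: header[name] for name in fields if name in header}


header = {
    "tracking_id": new_tracking_id(),
    "experiment_id": "historical",   # example values for illustration
    "variable_id": "tas",
}
record = pid_record_from_header(header, ["experiment_id", "variable_id"])
print(parse_tracking_id(header["tracking_id"])[0], record)
```

Because the UUID is generated locally, the identifier can be embedded in the file header immediately. The Handle itself can be registered later.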

Make it scalable: deal with burst events and provide high availability.
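Two constraints from these slides fit together. Handle registration must not interrupt publication, and the service must absorb bursts. One common way to meet both is to decouple the two with a buffer. A minimal sketch, assuming an in-process queue and a single worker thread. `AsyncRegistrar` and the `register` callback are hypothetical stand-ins, not a real Handle service API.

```python
import queue
import threading
from typing import Callable


class AsyncRegistrar:
    """Buffers Handle registrations so that publication never waits for the Handle service."""

    def __init__(self, register: Callable[[str, dict], None]) -> None:
        self._register = register                  # hypothetical call into a Handle service
        self._pending: queue.Queue = queue.Queue() # unbounded, so submit() never blocks
        self.failed: list[tuple[str, Exception]] = []
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def submit(self, pid: str, record: dict) -> None:
        """Called on the publication path; returns immediately."""
        self._pending.put((pid, record))

    def wait_until_drained(self) -> None:
        """Block until every submitted registration has been attempted."""
        self._pending.join()

    def _drain(self) -> None:
        while True:
            pid, record = self._pending.get()
            try:
                self._register(pid, record)
            except Exception as exc:  # keep failures for a later retry; never raise into publication
                self.failed.append((pid, exc))
            finally:
                self._pending.task_done()


registered: dict[str, dict] = {}


def fake_register(pid: str, record: dict) -> None:
    """Stand-in for the Handle service: just remember the record."""
    registered[pid] = record


registrar = AsyncRegistrar(fake_register)
for i in range(1000):  # a simulated burst
    registrar.submit(f"hdl:21.14100/burst-{i}", {"n": i})
registrar.wait_until_drained()
print(len(registered), len(registrar.failed))
```

The queue smooths bursts, but it lives in memory. Pending registrations are lost if the process dies. High availability would additionally need a persistent queue and redundant workers or Handle servers.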

Does the idea of common components work in this case? Yes.

What are the common components in this case?

- Object registration
- Object management (copy, move, delete)
- Collection builder
- Classifier (DTR): register a specific community PID profile; register file data types (netCDF, variables)
- Object information (end user): landing page, link to further information, enable browsing
- Information tool
- External addendum (processing; feedback)
- Policy provider and enforcer (preliminary objects, LTA)
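One of these components, the classifier, pairs registered data types with a community PID profile. A record can then be checked for completeness. A minimal in-memory sketch follows. `TypeRegistry` is a hypothetical stand-in and does not reproduce the actual DTR interface. The type and profile names in the example are also illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class TypeRegistry:
    """In-memory stand-in for a Data Type Registry (not the real DTR API)."""
    types: dict[str, str] = field(default_factory=dict)           # type name -> description
    profiles: dict[str, list[str]] = field(default_factory=dict)  # profile name -> required types

    def register_type(self, name: str, description: str) -> None:
        self.types[name] = description

    def register_profile(self, name: str, required: list[str]) -> None:
        """A profile may only reference types that are already registered."""
        unknown = [t for t in required if t not in self.types]
        if unknown:
            raise ValueError(f"profile {name!r} uses unregistered types: {unknown}")
        self.profiles[name] = list(required)

    def missing_fields(self, profile: str, record: dict[str, str]) -> list[str]:
        """Return the profile's required types that the PID record does not provide."""
        return [t for t in self.profiles[profile] if t not in record]


reg = TypeRegistry()
reg.register_type("file_format", "data format of the file, e.g. netCDF")
reg.register_type("variable_id", "name of the variable stored in the file")
reg.register_type("checksum", "checksum of the file content")
reg.register_profile("community_file_profile", ["file_format", "variable_id", "checksum"])

missing = reg.missing_fields("community_file_profile", {"file_format": "netCDF", "variable_id": "tas"})
print(missing)
```

Requiring types to exist before a profile can use them mirrors the order on the slide: register data types, then the community PID profile built from them.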

Address Every Object on the Net: two documents are in preparation, a report from the workshop and a document on the Digital Object Cloud.

The DO Cloud: [Diagram: identifier services and repositories, with objects identified by IDs such as 123, 987/ and 843.] End users, developers, and automated processes deal with persistently identified, consistently structured digital objects. These objects are securely and redundantly managed and stored in the Internet, in an overlay on existing or future information storage systems.
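The diagram pairs identifier services with repositories. The sketch below shows how a client could find an object without knowing where it is stored: it routes the identifier to the service for its prefix, which returns the repositories holding copies. The sketch assumes Handle-style `prefix/suffix` identifiers. The classes are hypothetical. The prefixes 123, 987 and 843 are borrowed from the diagram purely for illustration, and the object and repository names are invented.

```python
from dataclasses import dataclass, field


@dataclass
class IdentifierService:
    """Resolves identifiers under one prefix to the repositories holding copies (hypothetical)."""
    prefix: str
    locations: dict[str, list[str]] = field(default_factory=dict)

    def register(self, identifier: str, repositories: list[str]) -> None:
        if not identifier.startswith(self.prefix + "/"):
            raise ValueError(f"{identifier!r} is not under prefix {self.prefix!r}")
        self.locations[identifier] = list(repositories)

    def resolve(self, identifier: str) -> list[str]:
        return list(self.locations.get(identifier, []))


class DOCloudResolver:
    """Routes 'prefix/suffix' identifiers to the identifier service that owns the prefix."""

    def __init__(self, services: list[IdentifierService]) -> None:
        self._by_prefix = {service.prefix: service for service in services}

    def resolve(self, identifier: str) -> list[str]:
        prefix, sep, _ = identifier.partition("/")
        service = self._by_prefix.get(prefix)
        if not sep or service is None:
            raise KeyError(f"no identifier service for {identifier!r}")
        return service.resolve(identifier)


services = [IdentifierService("123"), IdentifierService("987"), IdentifierService("843")]
services[1].register("987/example-object", ["repository-1", "repository-2"])  # redundant copies
cloud = DOCloudResolver(services)
print(cloud.resolve("987/example-object"))
```

Returning every known location reflects the redundant storage in the slide's definition. A client can fall back to the next repository if the first is unavailable.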

From Abstract Fabrics to Solutions: [Diagram: common components & services and specific components & services, leading to a Global Digital Object Cloud.] Closing urgent gaps: t-repositories, PID system, MD schemas, MD editors, vocabularies, etc.