Understanding Game Abstraction and Equilibrium

Extensive-Form Game Abstraction with Bounds delves into the complexities of game abstraction, exploring theoretical guarantees, algorithmic challenges, and equilibrium-finding processes. The difficulty of game abstraction is examined, highlighting issues such as pathologies and the struggle to optimize abstraction quality. Real versus abstracted games are compared, shedding light on equilibrium-finding algorithms and Nash equilibrium. The essence of extensive-form games, counterfactual value, regret, and the goal of abstraction - reducing variables while managing regret - are thoroughly discussed.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Extensive-Form Game Abstraction with Bounds

Outline Motivation Abstractions of extensive-form games Theoretical guarantees on abstraction quality Computing abstractions Hardness Algorithms Experiments

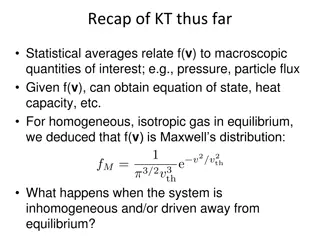

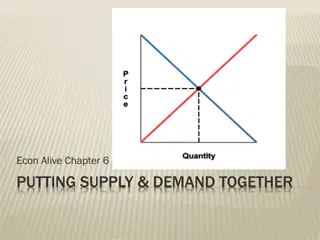

Real game Abstracted game (automated) Abstraction Equilibrium-finding algorithm Map to real game -Nash equilibrium Nash equilibrium

Why is game abstraction difficult? Abstraction pathologies Sometimes, refining an abstraction can be worse Every equilibrium can be worse Tuomas presented numerous papers on game abstraction without solution quality bounds Lossless abstractions are too large Particularly difficult to analyze in extensive-form games Information sets cut across subtrees A player might be best responding to nodes in different part of game tree

Extensive-form games - definition Game tree. 1 3 2 3 C Branches denote actions. Information sets. P1 P1 Payoff at leaves. r L R l Perfect recall. Players remember past actions. 0 1.5 P2 P2 c c d d Imperfect recall. Players might forget past actions. -3 P1 P1 P1 e e e f f f 8 0 0 -6 0 -6

Counterfactual value Defined for each information set ?. 1 3 2 3 C Expected value from information set. Assumes that player plays to reach ?. Rescales by probability of reaching ?. P1 P1 r L R l Example: Uniform distribution everywhere. Bottom information set. Reach probability: 1 0 1.5 P2 P2 c c d d 6. 1 2,1 Conditional node distribution: ? ? =1 2. -3 6 P1 P1 2 1 2 8 1 2 1 2 6 = 0.5. e e f f 8 0 0 -6

Regret Defined for each action ?. 1 3 2 3 C Change in expected value when taking ?. Holds everything else constant. P1 P1 r L R l Example: Uniform distribution everywhere. Bottom information set. ? ? =1 0 1.5 P2 P2 c c d d 2 1 2 8 1 2 1 ? = 1. 2 6 = 0.5. To get ?(?) set ?1 ? ? = ??? ? ? = 3.5. -3 6 P1 P1 e e f f 8 0 0 -6

Abstraction Goal: reduce number of decision variables, maintain low regret 1 3 2 3 C Method: merge information sets/remove actions P1 P1 Constraints: Must define bijection between nodes Nodes in bijection must have same ancestors sequences over other players Must have same descendant sequences over all players r L R l 0 1.5 P2 P2 c c d d Might create imperfect recall Worse bounds P1 P1 P1 P1 e e e e f f f f 8 0 0 -6 9 0 0 -7

Abstraction payoff error Quantify error in abstraction. 1 3 2 3 C Measure similarity of abstraction and full game. Based on bijection. P1 P1 Maximum difference between leaf nodes: First mapping: 1 Second mapping: 2 r L R l 0 1.5 P2 P2 c c Formally: Leaf node: ??? = |? ? ?(? )| Player node: ??? = max d d ??(?) P1 P1 P1 P1 ? Nature node: ??? = ?? ? ??(?) e e e e f f f f 8 0 0 -6 9 0 0 -8

Abstraction chance node error Quantify error in abstraction. P2 Measure similarity of abstraction and full game. Based on bijection. P1 P1 Maximum difference between leaf nodes: First mapping: 1 Second mapping: 2 r L R l 0 1.5 C C 1 3 2 3 1 3 2 3 Formally: Player node: ?0? = max ?0(?) ? P1 P1 P1 P1 Nature node: ?0? = ?|? ? ? (? )| e e e e f f f f 8 0 0 -6 9 0 0 -8

Abstraction chance distribution error Quantify error in abstraction. P2 Measure similarity of abstraction and full game. Based on bijection. P1 P1 Maximum difference between leaf nodes: First mapping: 1 Second mapping: 2 r L R l 0 1.5 C C 1 3 2 3 1 3 2 3 Formally: Infoset node: ?0? = |? ? ? ?(? |? ) Infoset: ?0? = ? ??0(?) P1 P1 P1 P1 e e e e f f f f 8 0 0 -6 9 0 0 -8

Bounds on abstraction quality Given: Original perfect-recall game Abstraction that satisfies our constraints Abstraction strategy with bounded regret on each action We get: An ?-Nash equilibrium in full game Perfect recall abstraction error for player ?: 0? + ? ?2?? 0? + ?? ?+ ? 0?? 2?? Imperfect-recall abstraction error: Same as for perfect recall, but ? times Linearly worse in game depth

Complexity and structure Complexity: NP-hard to minimize our bound (both for perfect and imperfect recall) Determining whether two trees are topologically mappable is graph isomorphism complete Decomposition: Level-by-level is, in general, impossible There might be no legal abstractions identifiable through only single-level abstraction

Algorithms Single-level abstraction: Assumes set of legal-to-merge information sets Equivalent to clustering with weird objective function Forms a metric space Immediately yields 2-approximation algorithm for chance-free abstraction Chance-only abstractions gives new objective function not considered in clustering literature Weighted sum over elements, with each taking the maximum intra-cluster distance Integer programming for whole tree: Variables represent merging nodes and/or information sets #variables quadratic in tree size

Perfect recall IP experiments 5 cards 2 kings 2 jacks 1 queen Limit hold em 2 players 1 private card dealt to each 1 public card dealt Betting after cards are dealt in each round 2 raises per round

Signal tree Tree representing nature actions that are independent of player actions Actions available to players must be independent of these Abstraction of signal tree leads to valid abstraction of full game tree

Imperfect-recall single-level experiments Game: Die-roll poker. Poker-like game that uses dice. Correlated die rolls (e.g. P1 rolls a 3, then P2 is more likely to roll a number close to 3). Game order: Each player rolls a private 4-sided die. Betting happens. Each player rolls a second private 4-sided die. Another round of betting. Model games where players get individual noisy and/or shared imperfect signals.

Experimental setup Abstraction: Compute bound-minimizing abstraction of the second round of die rolls. Relies on integer-programming formulation. Apply counterfactual regret minimization (CFR) algorithm. Gives solution with bounded regret on each action. Compute actual regret in full game. Compare to bound from our theoretical result.

Comparison to prior results Lanctot, Gibson, Burch, Zinkevich, and Bowling. ICML12 Bounds also for imperfect-recall abstractions Only for CFR algorithm Allow only utility error Utility error exponentially worse (?(? ) vs. ?( )) Do not take chance weights into account Very nice experiments for utility-error only case Kroer and Sandholm. EC14 Bounds only for perfect-recall abstractions Do not have linear dependence on height Imperfect-recall work builds on both papers The model of abstraction is an extension of ICML12 paper Analysis uses techniques from EC14 paper Our experiments are for the utility+chance outcome error case