Understanding MANOVA: Mechanics and Applications

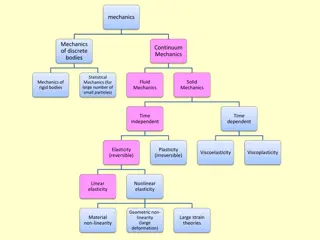

MANOVA is a multivariate generalization of ANOVA that examines the relationship between several dependent variables and one or more factors simultaneously. It tests the effects of the independent variables on linear combinations of the dependent variables. The process partitions the total variation into between-groups and within-groups parts, handles interactions among factors, and, in MANCOVA, adjusts for covariates. Sums-of-squares-and-cross-products (SSCP) matrices play the role that sums of squares play in ANOVA.


Presentation Transcript

MANOVA Mechanics

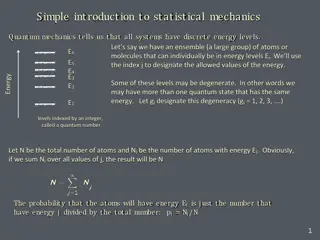

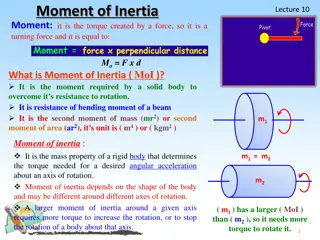

MANOVA is a multivariate generalization of ANOVA, so there are analogous parts to the simpler ANOVA equations. First, let's revisit ANOVA. ANOVA tests the null hypothesis $H_0: \mu_1 = \mu_2 = \dots = \mu_k$. How do we determine whether to reject?

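The total sum of squares splits into a treatment (between-groups) part and an error (within-groups) part:

$$SS_{total} = SS_{treatment} + SS_{error} = SS_{bg} + SS_{wg}$$

$$SS_{total} = \sum_{i}\sum_{j} (X_{ij} - \bar{X}_{..})^2$$

$$SS_{bg} = \sum_{j} n_j (\bar{X}_{j} - \bar{X}_{..})^2$$

$$SS_{wg} = \sum_{i}\sum_{j} (X_{ij} - \bar{X}_{j})^2$$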

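Steps to MANOVA

When you have more than one IV, $SS_{bg}$ breaks down into main effects and an interaction, which look something like this:

$$SS_A = \sum_{i} n_i (\bar{Y}_{i.} - \bar{Y}_{..})^2$$

$$SS_B = \sum_{j} n_j (\bar{Y}_{.j} - \bar{Y}_{..})^2$$

$$SS_{AB} = \sum_{i}\sum_{j} n_{ij} (\bar{Y}_{ij} - \bar{Y}_{i.} - \bar{Y}_{.j} + \bar{Y}_{..})^2$$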

With one-way ANOVA, our F statistic is derived from the following formula:

$$F_{k-1,\,N-k} = \frac{SS_b/(k-1)}{SS_w/(N-k)}$$
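The one-way F ratio can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not part of the original slides: the scores and the function name `one_way_f` are made up for the example.

```python
import numpy as np

# Hypothetical scores for k = 3 groups (illustrative only, not from the slides).
groups = [
    np.array([2.0, 3.0, 5.0, 2.0]),
    np.array([4.0, 5.0, 6.0]),
    np.array([7.0, 8.0, 9.0]),
]

def one_way_f(groups):
    """Return (SS_bg, SS_wg, F) for a list of 1-D arrays, one array per group."""
    all_scores = np.concatenate(groups)
    grand_mean = all_scores.mean()
    k, n_total = len(groups), all_scores.size
    # Between groups: group size times squared distance of group mean from grand mean.
    ss_bg = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    # Within groups: squared distance of each score from its own group mean.
    ss_wg = sum(((g - g.mean()) ** 2).sum() for g in groups)
    f_stat = (ss_bg / (k - 1)) / (ss_wg / (n_total - k))
    return ss_bg, ss_wg, f_stat

ss_bg, ss_wg, f_stat = one_way_f(groups)
all_scores = np.concatenate(groups)
ss_total = ((all_scores - all_scores.mean()) ** 2).sum()
print(ss_bg, ss_wg, ss_total, f_stat)
```

The two parts add up to the total sum of squares, which is the identity the multivariate version extends to matrices.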

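Steps to MANOVA

The multivariate test considers not just $SS_b$ and $SS_w$ for the dependent variables, but also the relationship between the variables. Our null hypothesis also becomes more complex. Now it is that the groups have equal vectors of population means on the DVs: $H_0: \boldsymbol{\mu}_1 = \boldsymbol{\mu}_2 = \dots = \boldsymbol{\mu}_k$.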

With MANOVA we are now dealing with matrices of response values. Each subject has multiple scores, so there is a matrix, as opposed to a vector, of responses in each cell.

Matrices of difference scores are calculated and each matrix is squared (multiplied by its own transpose). When the squared differences are summed, you get a sum-of-squares-and-cross-products matrix (S), which is the matrix counterpart to the sums of squares.

The determinants of the various S matrices are found, and ratios between them are used to test hypotheses about the effects of the IVs on linear combination(s) of the DVs. In MANCOVA, the S matrices are adjusted for one or more covariates.

Now consider the matrix product X'X, where we start with a data matrix X. The result (product) is a square matrix. The diagonal values are sums of squares and the off-diagonal values are sums of cross products. The matrix is an SSCP (Sums of Squares and Cross Products) matrix. So any time you see the matrix notation X'X, D'D, or Z'Z, the resulting product will be an SSCP matrix.
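As a small numerical illustration, the sketch below forms X'X and D'D in Python with NumPy for the four group 1 cases from the dataset used later in the walkthrough. The variable names are chosen for the example; D denotes the scores expressed as deviations from their column means.

```python
import numpy as np

# Group 1 scores from the walkthrough dataset: columns are Y1 and Y2.
X = np.array([
    [2.0, 3.0],
    [3.0, 4.0],
    [5.0, 4.0],
    [2.0, 5.0],
])

# Raw SSCP: diagonal = sums of squared scores, off-diagonal = sums of cross products.
raw_sscp = X.T @ X

# Deviation SSCP: subtract the column means first, then form D'D.
D = X - X.mean(axis=0)
dev_sscp = D.T @ D

print(raw_sscp)  # [[42. 48.] [48. 66.]]
print(dev_sscp)  # [[6. 0.] [0. 2.]]
```

The deviation version is the one that plays the role of a sum of squares: its diagonal holds the within-group sum of squares for each variable.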

MANOVA

Now our sums of squares go something like this:

T = B + W

Total SSCP matrix = Between SSCP + Within SSCP

Wilks' lambda equals |W|/|T|, so smaller values are better: they mean that less of the total variation is attributable to error.

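We'll use the following dataset. We'll start by calculating the W matrix for each group, then add them together: W = W1 + W2 + W3. For group 1, the mean of Y1 is 3 and the mean of Y2 is 4.

| Group | Y1 | Y2 |
|-------|------|------|
| 1 | 2 | 3 |
| 1 | 3 | 4 |
| 1 | 5 | 4 |
| 1 | 2 | 5 |
| 2 | 4 | 8 |
| 2 | 5 | 6 |
| 2 | 6 | 7 |
| 3 | 7 | 6 |
| 3 | 8 | 7 |
| 3 | 9 | 5 |
| 3 | 7 | 6 |
| Means | 5.67 | 5.75 |

Each group's matrix has the sums of squares on the diagonal and the sums of cross products off the diagonal:

$$W_1 = \begin{bmatrix} ss_1 & ss_{12} \\ ss_{21} & ss_2 \end{bmatrix}$$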

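So

$$W_1 = \begin{bmatrix} 6 & 0 \\ 0 & 2 \end{bmatrix}$$

We do the same for the other groups:

$$W_2 = \begin{bmatrix} 6.8 & 2.6 \\ 2.6 & 5.2 \end{bmatrix} \qquad W_3 = \begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix}$$

Adding all 3 gives us

$$W = \begin{bmatrix} 14.8 & 1.6 \\ 1.6 & 9.2 \end{bmatrix}$$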
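The addition can be checked numerically. The sketch below uses Python with NumPy and takes the three group matrices as given; the off-diagonal entries of W3 are entered as -1, which is what the reported off-diagonal sum of 1.6 requires. The determinant is included because it is the quantity that enters Wilks' lambda.

```python
import numpy as np

# Within-group SSCP matrices as reported in the walkthrough.
W1 = np.array([[6.0, 0.0], [0.0, 2.0]])
W2 = np.array([[6.8, 2.6], [2.6, 5.2]])
W3 = np.array([[2.0, -1.0], [-1.0, 2.0]])  # off-diagonal sign inferred from the sum

# Pooled within-groups SSCP matrix.
W = W1 + W2 + W3

# Determinant of W, the numerator of Wilks' lambda.
det_W = np.linalg.det(W)

print(W)      # [[14.8  1.6] [ 1.6  9.2]]
print(det_W)  # approximately 133.6
```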

Now the between-groups part. The diagonals of the B matrix are the sums of squares from the univariate approach for each variable, calculated as:

$$b_{ii} = \sum_{j=1}^{k} n_j (\bar{Y}_{ij} - \bar{Y}_{i..})^2$$

where $n_j$ is the number of subjects in group j, $\bar{Y}_{ij}$ is the mean for variable i in group j, and $\bar{Y}_{i..}$ is the grand mean for variable i.

The off-diagonals involve those same mean differences but are the products of the differences found for each DV:

$$b_{mi} = b_{im} = \sum_{j=1}^{k} n_j (\bar{Y}_{ij} - \bar{Y}_{i..})(\bar{Y}_{mj} - \bar{Y}_{m..})$$
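Both formulas for B can be computed at once as a sum of outer products of the group mean differences. The following Python/NumPy sketch is an illustration under stated assumptions: the function name `sscp_decomposition` and the nine-case data set are made up for the example and are not the slide's data.

```python
import numpy as np

def sscp_decomposition(Y, groups):
    """Return the between (B), within (W), and total (T) SSCP matrices."""
    Y = np.asarray(Y, dtype=float)
    groups = np.asarray(groups)
    grand_mean = Y.mean(axis=0)
    p = Y.shape[1]
    B = np.zeros((p, p))
    W = np.zeros((p, p))
    for g in np.unique(groups):
        Yg = Y[groups == g]
        mean_g = Yg.mean(axis=0)
        # n_j times the outer product of (group mean - grand mean):
        # diagonal gives b_ii, off-diagonal gives b_im.
        d = (mean_g - grand_mean).reshape(-1, 1)
        B += Yg.shape[0] * (d @ d.T)
        # Deviations of each case from its own group mean.
        Dg = Yg - mean_g
        W += Dg.T @ Dg
    # Deviations of each case from the grand mean.
    Dt = Y - grand_mean
    T = Dt.T @ Dt
    return B, W, T

# Hypothetical data: 3 groups of 3 cases, 2 DVs (not the slide's data set).
Y = [[1, 2], [2, 4], [3, 3], [4, 6], [5, 5], [6, 7], [7, 9], [8, 8], [9, 10]]
groups = [1, 1, 1, 2, 2, 2, 3, 3, 3]

B, W, T = sscp_decomposition(Y, groups)
print(B)
print(W)
print(np.allclose(B + W, T))
```

For these made-up scores the last line confirms that the between and within matrices add up to the total matrix.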

Again, T = B + W, and now we can compute a chi-square statistic* to test for significance.

Same as we did with canonical correlation (now we have p = number of DVs and k = number of groups)
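The transcript does not preserve the chi-square formula itself. One common choice, and the one assumed in the sketch below, is Bartlett's approximation: chi-square = -[N - 1 - (p + k)/2] ln(Lambda) with p(k - 1) degrees of freedom. The function name `wilks_bartlett` and the W and B matrices are hypothetical values for illustration, not results from the slides.

```python
import numpy as np

def wilks_bartlett(W, B, n_total, k):
    """Wilks' lambda with Bartlett's chi-square approximation (assumed formula).

    W, B    : within and between SSCP matrices (p x p)
    n_total : total number of cases N
    k       : number of groups
    """
    W = np.asarray(W, dtype=float)
    B = np.asarray(B, dtype=float)
    p = W.shape[0]                      # number of DVs
    T = B + W                           # total SSCP matrix
    lam = np.linalg.det(W) / np.linalg.det(T)
    chi2 = -(n_total - 1 - (p + k) / 2) * np.log(lam)
    df = p * (k - 1)
    return lam, chi2, df

# Hypothetical SSCP matrices for 9 cases in 3 groups with 2 DVs.
W_demo = [[6.0, 3.0], [3.0, 6.0]]
B_demo = [[54.0, 54.0], [54.0, 54.0]]

lam, chi2, df = wilks_bartlett(W_demo, B_demo, n_total=9, k=3)
print(lam, chi2, df)
```

The statistic would then be compared with a chi-square distribution on `df` degrees of freedom; a small lambda produces a large chi-square.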