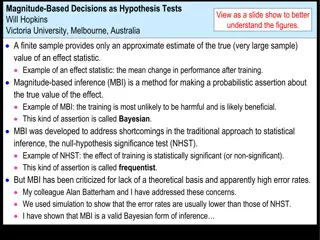

Understanding Magnitude-Based Inference (MBI) - A New Approach to Analyzing Study Results

Magnitude-Based Inference (MBI) offers a novel perspective on interpreting study outcomes by focusing on confidence intervals rather than solely relying on p-values. This approach helps researchers make more informed decisions, especially in studies with small samples where p-values may lead to misleading conclusions. By considering confidence limits and effect sizes, MBI provides a sophisticated way to assess the magnitude and significance of treatment effects.

Presentation Transcript

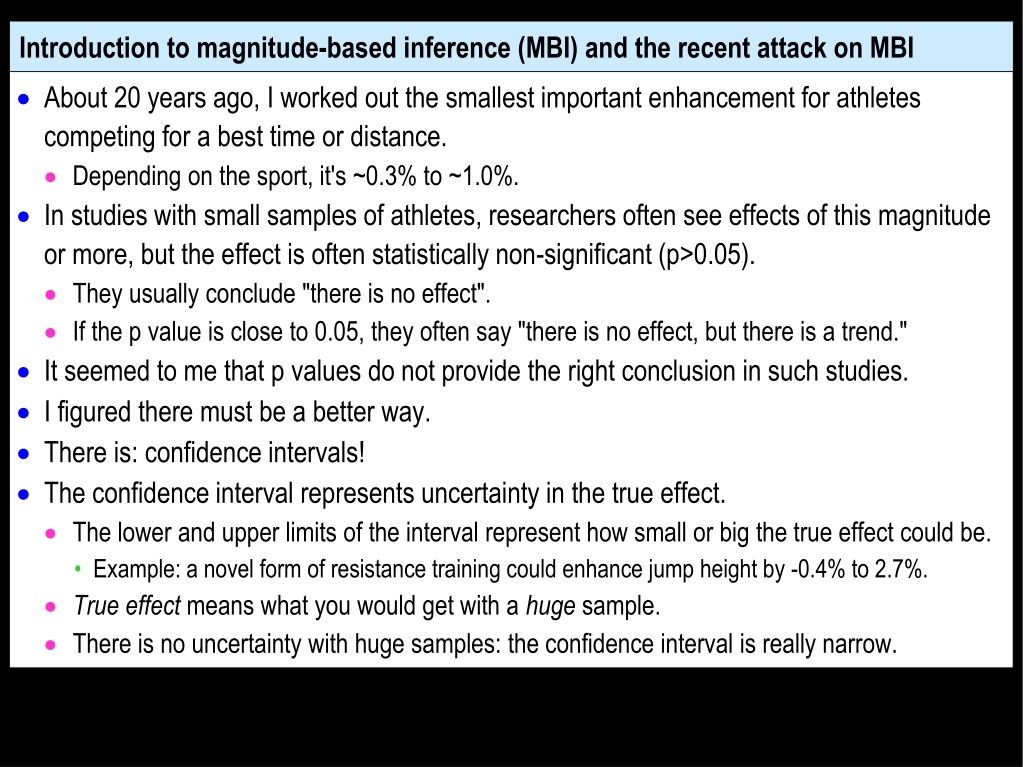

Introduction to magnitude-based inference (MBI) and the recent attack on MBI

About 20 years ago, I worked out the smallest important enhancement for athletes competing for a best time or distance. Depending on the sport, it's ~0.3% to ~1.0%. In studies with small samples of athletes, researchers often see effects of this magnitude or more, but the effect is often statistically non-significant (p > 0.05). They usually conclude "there is no effect". If the p value is close to 0.05, they often say "there is no effect, but there is a trend." It seemed to me that p values do not provide the right conclusion in such studies. I figured there must be a better way. There is: confidence intervals! The confidence interval represents uncertainty in the true effect: its lower and upper limits represent how small or big the true effect could be. Example: a novel form of resistance training could enhance jump height by -0.4% to 2.7%. The true effect means what you would get with a huge sample. With a huge sample there is practically no uncertainty: the confidence interval is really narrow.
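To make "huge sample" concrete, here is a minimal sketch (not from the presentation) of how a 90% confidence interval for a difference in means between two groups narrows as the sample grows. It assumes a known standard deviation and a normal (z) approximation; real analyses would usually use the t distribution. The helper `ci_two_groups` and the numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ci_two_groups(mean_diff, sd, n_per_group, level=0.90):
    """Hypothetical helper: normal-approximation confidence interval for a
    difference in means between two independent groups of equal size,
    assuming both groups share a known standard deviation `sd`."""
    se = sd * sqrt(2 / n_per_group)            # standard error of the difference
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for a 90% interval
    return mean_diff - z * se, mean_diff + z * se


# Same observed effect (1.2%) and SD (2.5%), small vs huge samples (made-up numbers).
for n in (10, 10_000):
    lower, upper = ci_two_groups(1.2, 2.5, n)
    print(f"n = {n:>6} per group: {lower:.2f}% to {upper:.2f}%")
```

With 10 per group the interval runs from about -0.64% to 3.04%; with 10,000 per group it shrinks to about 1.14% to 1.26%, because the standard error falls with the square root of the sample size.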

Examples with huge samples and small samples

[Figure: confidence intervals for the effect of a treatment, plotted on an axis marked with the smallest important harmful value, zero and the smallest important beneficial value, which divide it into substantial harm, trivial and substantial benefit.]

Huge samples: if the treatment is beneficial, use it! If it is trivial, don't use it! If it is harmful, don't use it!

Small samples: if the treatment could be beneficial or trivial, use it! When p < 0.05, that's OK with p values too. But a treatment can also be beneficial or trivial when p > 0.05 (the interval overlaps zero without reaching the smallest important harmful value): use it, even though the p value says otherwise.

P values can obviously lead to the wrong decision with small samples. The right decision depends on interpretation of the confidence limits, not p values. MBI is simply a sophisticated way to interpret the confidence limits.
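The contrast on this slide can be sketched in a few lines: label where each confidence limit falls relative to the smallest important harmful and beneficial values, and compute the usual two-sided p value for the same estimate. This is an illustrative sketch assuming a normal sampling distribution with a known standard error; the thresholds (±0.5), the estimates, and the names `region` and `interval_summary` are all hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def region(value, harmful, beneficial):
    """Label a value relative to the smallest important harmful and beneficial
    values (assumes harmful < 0 < beneficial)."""
    if value <= harmful:
        return "harmful"
    if value >= beneficial:
        return "beneficial"
    return "trivial"


def interval_summary(estimate, se, harmful=-0.5, beneficial=0.5, level=0.90):
    """Regions of the lower and upper confidence limits, plus the p value vs zero."""
    z = STD_NORMAL.inv_cdf(0.5 + level / 2)
    lower, upper = estimate - z * se, estimate + z * se
    p = 2 * (1 - STD_NORMAL.cdf(abs(estimate) / se))  # two-sided p value
    return region(lower, harmful, beneficial), region(upper, harmful, beneficial), p


# Two small-sample results: both intervals run from trivial to beneficial,
# but only the first is "statistically significant".
print(interval_summary(1.0, 0.4))
print(interval_summary(0.9, 0.55))
```

Both intervals support the same decision (could be beneficial, couldn't be harmful), yet a p-value rule would accept the first and reject the second.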

Effects where you compare something in two groups of different subjects, such as the mean for females vs males

MBI uses 90% confidence limits to decide how big the difference could be. If the difference could be a substantial increase and could also be a substantial decrease, the effect is described as unclear: the sample size isn't big enough to make a useful conclusion. Get more data! Otherwise the effect is clear, and you can describe it in several ways. You can state the range of the confidence interval: for example, trivial to a substantial increase. Or (more popular) you can describe the clear effect as possibly, likely, very likely or most likely trivial, a substantial increase or a substantial decrease. If you describe a clear effect as possibly a substantial increase, don't forget that it is also possibly a trivial difference and very unlikely a substantial decrease. A clear, possibly substantial increase does not mean it is definitely an increase, but it does mean that you don't have to worry about it being a decrease.
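A minimal sketch of the non-clinical rule, assuming the sampling distribution of the effect is normal with a known standard error (the same assumption that links the chances to a 90% confidence interval): compute the chances that the true effect is a substantial decrease, trivial, or a substantial increase, and call the effect unclear when both substantial chances exceed 5%. The function names, the smallest important value of 0.2 and the example numbers are assumptions for illustration.

```python
from statistics import NormalDist


def nonclinical_chances(estimate, se, smallest):
    """Chances (0-1) that the true effect is a substantial decrease, trivial,
    or a substantial increase, for a smallest important value `smallest` > 0."""
    dist = NormalDist(mu=estimate, sigma=se)
    p_decrease = dist.cdf(-smallest)
    p_increase = 1 - dist.cdf(smallest)
    p_trivial = 1 - p_decrease - p_increase
    return p_decrease, p_trivial, p_increase


def is_unclear(estimate, se, smallest):
    """Unclear if the effect could be (>5% chance) a substantial increase AND
    a substantial decrease, i.e. the 90% interval spans both thresholds."""
    p_decrease, _, p_increase = nonclinical_chances(estimate, se, smallest)
    return p_decrease > 0.05 and p_increase > 0.05


print(nonclinical_chances(0.35, 0.25, 0.2))  # clear: decrease has only ~1.4% chance
print(is_unclear(0.35, 0.25, 0.2), is_unclear(0.05, 0.4, 0.2))
```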

Effects representing treatments or other interventions that could enhance or impair performance or health

You would implement a treatment that could be beneficial, provided it could not be harmful. You can use confidence limits to make this decision, but it's tricky: in MBI, "could be beneficial" implies using a 50% confidence interval, but "could not be harmful" implies a 99% confidence interval. It's easier to work directly with the probabilities (chances) for "could be" and "could not be". If the treatment could be, or is possibly, beneficial (>25% chance), you would use it, provided it could not be, or is most unlikely to be, harmful (<0.5% chance). If the treatment could be beneficial (>25% chance) and could be harmful (>0.5% chance), you would like to use it, but you daren't. The effect of the treatment is unclear. Get more data!
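The clinical rule stated with probabilities can be sketched as below. This is a hedged illustration, not the Sportscience spreadsheets: it assumes a normal sampling distribution with known standard error and that higher values are better; `clinical_decision` and the example thresholds (±0.5) are hypothetical.

```python
from statistics import NormalDist


def clinical_decision(estimate, se, smallest_beneficial, smallest_harmful,
                      max_harm=0.005, min_benefit=0.25):
    """Clinical MBI decision (higher = better). `smallest_beneficial` > 0 and
    `smallest_harmful` < 0 are the smallest important values."""
    dist = NormalDist(mu=estimate, sigma=se)
    p_benefit = 1 - dist.cdf(smallest_beneficial)  # chance true effect is beneficial
    p_harm = dist.cdf(smallest_harmful)            # chance true effect is harmful
    could_benefit = p_benefit > min_benefit        # possibly beneficial (>25%)
    could_harm = p_harm > max_harm                 # not most unlikely harmful (>0.5%)
    if could_benefit and not could_harm:
        decision = "use it"
    elif could_benefit and could_harm:
        decision = "unclear: don't use it, get more data"
    else:
        decision = "don't use it"
    return p_benefit, p_harm, decision


print(clinical_decision(1.0, 0.4, 0.5, -0.5)[2])  # use it
print(clinical_decision(0.3, 0.5, 0.5, -0.5)[2])  # unclear
print(clinical_decision(0.0, 0.1, 0.5, -0.5)[2])  # don't use it
```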

Both forms of MBI allow researchers to report and interpret the uncertainty in plain language. With small studies, non-significant effects are often clear, so the researcher can often make useful publishable conclusions for such effects with MBI. Clear effects do not have spurious magnitudes in small studies: on average the magnitude is not substantially different from the true magnitude. So publication of clear effects does not lead to so-called publication bias. Publication of significant effects does produce bias. So what's the problem with MBI?

There are two problems, both of which are explained in full in the article "The Vindication of MBI" in the 2018 issue of Sportscience (sportsci.org). See also the post-publication comments. The first problem: two statisticians (Welsh in 2015, Sainani in 2018) have claimed that the confidence interval does not represent the likely range of the true effect. This claim is correct, but only according to the strict "frequentist" definition of the 90% confidence interval, which is too long and involved to show here! They say such statements about the true effect are Bayesian. This claim is also correct. Bayesian statisticians make probabilistic statements about the magnitude of the true effect, like MBI. But they then claim that MBI is not Bayesian. This claim is false. The confidence interval and probabilities of the true magnitude in MBI are identical to those of a legitimate form of Bayesian inference, known as reference Bayesian inference with a dispersed uniform prior. This complicated term refers to the kind of Bayesian inference in which you assume no information or belief about the magnitude of the effect prior to doing the analysis. As such, MBI is arguably the most objective and therefore best form of inference. A meta-analysis could provide an informative objective prior, but that is an impractical option.
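The equivalence claimed here can be checked numerically for the simplest case. The sketch below assumes a normal likelihood with known standard error and stands in for a "dispersed uniform prior" with a uniform prior on [-50, 50]; it computes the posterior chance that the true effect exceeds a threshold on a fine grid and compares it with the chance used by MBI. The function names and numbers are hypothetical.

```python
import math
from statistics import NormalDist


def flat_prior_posterior_prob(estimate, se, threshold, lo=-50.0, hi=50.0, step=0.001):
    """Posterior P(true effect > threshold) on a grid, using a uniform prior on
    [lo, hi] and a normal likelihood centred on `estimate` with SD `se`."""
    n = int(round((hi - lo) / step))
    grid = [lo + (k + 0.5) * step for k in range(n)]  # cell midpoints
    # The prior is constant, so the posterior is proportional to the likelihood.
    weights = [math.exp(-0.5 * ((theta - estimate) / se) ** 2) for theta in grid]
    total = sum(weights)
    above = sum(w for theta, w in zip(grid, weights) if theta > threshold)
    return above / total


def sampling_prob(estimate, se, threshold):
    """The chance MBI reports: 1 - Phi((threshold - estimate) / se)."""
    return 1 - NormalDist(estimate, se).cdf(threshold)


print(flat_prior_posterior_prob(1.0, 0.6, 0.5), sampling_prob(1.0, 0.6, 0.5))
```

Both numbers come out at about 0.798: with a flat prior the posterior is simply a normal distribution centred on the estimate.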

This problem is solved if we identify MBI as this legitimate form of Bayesian inference. It might also help to refer to plausibility or credibility intervals rather than confidence intervals. It is astonishing that these statisticians did not regard MBI as Bayesian. But there is method in their madness. Bayesians don't have to calculate error rates. So if MBI is not Bayesian, frequentists can dismiss MBI using error rates based on p values.

The second problem: these statisticians claim that the error rates with MBI are unacceptable. This claim is also false, when you understand what it means to make an error in MBI. Alan Batterham and I detailed the errors and the error rates in a 2016 publication in the journal Sports Medicine. The statisticians misinterpreted and redefined the errors to make MBI seem inferior to p values. Details of how MBI works, and how errors can occur, are explained in the next video.

The key points of this video: MBI is simply a sophisticated way to interpret the usual confidence intervals. MBI is a legitimate form of Bayesian inference that assumes no prior information or belief about the true effect. MBI allows researchers to report and interpret the uncertainty in plain language. MBI provides better inferences than p values, especially with small samples. MBI does not lower standards, whereas p values do.

How MBI works, and how you can make errors with MBI

The following slides show in detail how to make a conclusion or decision about the magnitude of an effect using magnitude-based inference (MBI). The slides refer to confidence intervals, but from a Bayesian perspective these could be called plausibility or credibility intervals. See the previous video. The slides then show how you can make errors with MBI. In her MSSE article and in a YouTube video, Kristin Sainani used p values or her own modified definition of errors to get the apparently high error rates with MBI. The first few slides are about non-clinical inferences and errors. Non-clinical MBI is used to compare something in two groups of different subjects, such as the mean for females vs males. The remaining slides are about clinical inferences and errors. Clinical MBI is used for an effect representing a treatment or other intervention that could enhance or impair performance or health.

Non-clinical magnitude-based inference: how it works with confidence intervals

[Figure: 90% confidence intervals for an effect statistic, plotted against the smallest important negative value, zero and the smallest important positive value (regions: substantial negative, trivial, substantial positive), each interpreted with NHST and with non-clinical MBI.]

Non-clinical MBI outcomes for the intervals shown:
Could only be +ive.
Could be +ive or trivial.
Could only be trivial.
Could be -ive, trivial or +ive: unclear, get more data! (two intervals)

NHST labels the same intervals only as p < 0.05 or p > 0.05. MBI works! P values fail!

"Could only be": >95% chance (very likely). "Could be": 5-95% chance (75-95%, likely; 25-75%, possibly; 5-25%, unlikely). These probabilities imply a 90% confidence interval.
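The qualitative scale and its link to the 90% interval can be sketched as follows, assuming a normal sampling distribution. The 5%, 25%, 75% and 95% cut-offs come from this slide and the 0.5% "most unlikely" cut-off from the clinical slides; the 99.5% cut-off for "most likely" is an assumption that mirrors 0.5%. `describe` and `chance_above` are hypothetical names.

```python
from statistics import NormalDist

# Upper bound (exclusive) of each qualitative band; anything above 99.5% is "most likely".
SCALE = [
    (0.005, "most unlikely"),
    (0.05, "very unlikely"),
    (0.25, "unlikely"),
    (0.75, "possibly"),
    (0.95, "likely"),
    (0.995, "very likely"),  # 99.5% cut-off assumed, mirroring 0.5%
]


def describe(chance):
    """Map a chance (0-1) to the qualitative MBI term."""
    for upper, label in SCALE:
        if chance < upper:
            return label
    return "most likely"


def chance_above(estimate, se, threshold):
    """Chance that the true effect exceeds `threshold` (normal approximation)."""
    return 1 - NormalDist(estimate, se).cdf(threshold)


# If the lower 90% confidence limit sits exactly on a threshold, the chance of
# exceeding that threshold is 95%: the boundary of "very likely".
estimate, se = 1.0, 0.3                 # hypothetical numbers
z90 = NormalDist().inv_cdf(0.95)        # a 90% two-sided interval uses the 95th percentile
lower_limit = estimate - z90 * se
print(round(chance_above(estimate, se, lower_limit), 3))
print(describe(0.30), describe(0.80), describe(0.97))
```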

Non-clinical magnitude-based inference: Type-I error: the true effect is trivial, but you conclude it is not trivial

[Figure: 90% confidence intervals against the smallest important negative and positive values (regions: substantial negative, trivial, substantial positive), for a true effect that is trivial.]

Could only be +ive: Type-I error.
Could be +ive or trivial: no error.
Could only be trivial: no error.
Could be +ive, trivial or -ive (unclear, get more data!): no error.

Non-clinical magnitude-based inference: Type-II error: the true effect is substantial (+ive/-ive), but you conclude it is not (+ive/-ive)

[Figure: 90% confidence intervals against the smallest important negative and positive values (regions: substantial negative, trivial, substantial positive), for a true effect that is substantially positive.]

Could only be +ive: no error.
Could be +ive or trivial: no error.
Could only be trivial: Type-II error.
Could be +ive, trivial or -ive (unclear, get more data!): no error.
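The non-clinical error definitions on these two slides can be written as a small classifier over the 90% confidence limits. This is a sketch: the slides show only positive effects, so treating substantial negative effects as the mirror image is an assumption, and `nonclinical_error`, the smallest important value of 0.2 and the intervals are hypothetical.

```python
def nonclinical_error(true_effect, lower, upper, smallest):
    """Classify a non-clinical MBI inference, given its 90% confidence limits
    [lower, upper] and the smallest important value `smallest` (> 0).
    Returns "Type I", "Type II" or None (no error)."""
    if -smallest < true_effect < smallest:
        # True effect trivial: Type I if the interval rules out trivial values
        # ("could only be +ive" or, by assumption, "could only be -ive").
        if lower >= smallest or upper <= -smallest:
            return "Type I"
    elif true_effect >= smallest:
        # True effect substantially positive: Type II if the interval rules out
        # substantial positive values (e.g. "could only be trivial").
        if upper < smallest:
            return "Type II"
    else:
        # True effect substantially negative: assumed mirror image.
        if lower > -smallest:
            return "Type II"
    return None  # includes every unclear interval


# The four kinds of interval on the slides (made-up limits, smallest important value 0.2).
intervals = {
    "could only be +ive": (0.3, 0.9),
    "could be +ive or trivial": (0.1, 0.9),
    "could only be trivial": (-0.1, 0.15),
    "unclear": (-0.5, 0.6),
}
for true_effect in (0.0, 0.5):
    for label, (lo, hi) in intervals.items():
        print(true_effect, label, nonclinical_error(true_effect, lo, hi, 0.2))
```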

Clinical magnitude-based inference: how it works with confidence intervals

[Figure: confidence intervals for an effect statistic, plotted against the smallest important harmful value and the smallest important beneficial value (regions: substantial harm, trivial, substantial benefit).]

Could be beneficial, couldn't be harmful: use it!
Couldn't be beneficial, couldn't be harmful: don't use it!
Couldn't be beneficial, could be harmful: don't use it!
Could be beneficial and could be harmful: unclear, don't use it, get more data!

You may also use a treatment if the chances or odds of benefit sufficiently outweigh the risk or odds of harm (odds ratio > 66).

"Could be beneficial": >25% chance (possibly). "Couldn't be harmful": <0.5% chance (most unlikely). "Couldn't be beneficial": <25% chance (unlikely). "Could be harmful": >0.5% chance (0.5-5%, very unlikely; 5-25%, unlikely; etc.). These probabilities imply a 50% confidence interval on the benefit side and a 99% confidence interval on the harm side. It's easier to work with the probabilities!
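Two details on this slide can be checked with a short sketch, assuming a normal sampling distribution with known standard error. First, ">25% chance of benefit" is the same condition as "the upper limit of a 50% interval exceeds the smallest beneficial value", and "<0.5% chance of harm" is the same as "the lower limit of a 99% interval exceeds the smallest harmful value". Second, reading the slide's ratio as odds of benefit divided by odds of harm (an assumption about its exact form), the ratio at the boundary of the basic rule works out to about 66. All names and numbers are hypothetical.

```python
from statistics import NormalDist


def clinical_chances(estimate, se, beneficial, harmful):
    """Chances of benefit and harm (normal approximation); beneficial > 0 > harmful."""
    dist = NormalDist(estimate, se)
    return 1 - dist.cdf(beneficial), dist.cdf(harmful)


def interval_checks(estimate, se, beneficial, harmful):
    """The same two criteria stated with confidence limits."""
    z50 = NormalDist().inv_cdf(0.75)   # 50% two-sided interval
    z99 = NormalDist().inv_cdf(0.995)  # 99% two-sided interval
    could_be_beneficial = estimate + z50 * se > beneficial
    couldnt_be_harmful = estimate - z99 * se > harmful
    return could_be_beneficial, couldnt_be_harmful


def odds(p):
    return p / (1 - p)


def benefit_harm_odds_ratio(p_benefit, p_harm):
    """Odds of benefit divided by odds of harm (assumed form of the slide's ratio)."""
    return odds(p_benefit) / odds(p_harm)


# Probability and interval versions agree (hypothetical estimates, thresholds +/-0.5).
for estimate in (-0.2, 0.3, 0.9, 1.5):
    p_benefit, p_harm = clinical_chances(estimate, 0.4, 0.5, -0.5)
    print(estimate, (p_benefit > 0.25, p_harm < 0.005), interval_checks(estimate, 0.4, 0.5, -0.5))

# At the boundary of the basic rule (25% benefit, 0.5% harm) the odds ratio is about 66.
print(round(benefit_harm_odds_ratio(0.25, 0.005), 1))
```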

Clinical magnitude-based inference: Type-I error: the true effect is trivial, but you decide it could be used

[Figure: confidence intervals against the smallest important harmful and beneficial values (regions: substantial harm, trivial, substantial benefit), for a true effect that is trivial.]

Could be beneficial, couldn't be harmful (use it!): Type-I error.
Couldn't be beneficial, couldn't be harmful (don't use it!): no error.
Couldn't be beneficial, could be harmful (don't use it!): no error.
Could be beneficial or harmful (unclear, don't use it, get more data!): no error.

A Type-I error also occurs if the confidence interval is entirely in the harmful region. For this case we use a 90% confidence interval and call it a non-clinical Type-I error.

Clinical magnitude-based inference: Type-II error: the true effect is beneficial, but you decide not to use it

[Figure: confidence intervals against the smallest important harmful and beneficial values (regions: substantial harm, trivial, substantial benefit), for a true effect that is beneficial.]

Could be beneficial, couldn't be harmful (use it!): no error.
Couldn't be beneficial, couldn't be harmful (don't use it!): Type-II error.
Couldn't be beneficial, could be harmful (don't use it!): Type-II error.
Could be beneficial or harmful (unclear, don't use it, get more data!): no error.

Clinical magnitude-based inference: Type-II error: the true effect is harmful, but you decide to use it (also called a Type-I clinical error in the sample-size spreadsheet at Sportscience)

[Figure: confidence intervals against the smallest important harmful and beneficial values (regions: substantial harm, trivial, substantial benefit), for a true effect that is harmful.]

Could be beneficial, couldn't be harmful (use it!): Type-II error.
Couldn't be beneficial, couldn't be harmful (don't use it!): no clinical error, because the decision is correct. The interval is incorrect about the magnitude, but that is not relevant to this clinical decision: it is a non-clinical Type-II error.
Couldn't be beneficial, could be harmful (don't use it!): no error.
Could be beneficial or harmful (unclear, don't use it, get more data!): no error.
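The three clinical error definitions on these slides can be collected into one function. This is a sketch assuming higher values are better and that the decision has already been made by the clinical rule; `clinical_error`, the decision labels and the thresholds (±0.2) are hypothetical. It covers only clinical errors, not the non-clinical errors that the slides mention alongside them.

```python
USE, DONT_USE, UNCLEAR = "use it", "don't use it", "unclear"


def clinical_error(true_effect, decision, beneficial, harmful):
    """Classify a clinical MBI decision as "Type I", "Type II" or None,
    following the slide definitions (higher values = better)."""
    if harmful < true_effect < beneficial:
        # Truly trivial: deciding to use it is a Type-I error.
        return "Type I" if decision == USE else None
    if true_effect >= beneficial:
        # Truly beneficial: a clear decision not to use it is a Type-II error;
        # an unclear outcome is not counted as an error.
        return "Type II" if decision == DONT_USE else None
    # Truly harmful: deciding to use it is a Type-II error
    # (a "Type-I clinical error" in the Sportscience sample-size spreadsheet).
    return "Type II" if decision == USE else None


for true_effect in (0.0, 0.5, -0.5):
    print(true_effect, {d: clinical_error(true_effect, d, 0.2, -0.2) for d in (USE, DONT_USE, UNCLEAR)})
```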

The next two slides show outcomes and errors for multiple repeats of a controlled trial. The data were generated with the simulation Alan Batterham and I used for our article in Sports Medicine. A colleague, Ken Quarrie, used the data to make graphics similar to those in Kristin Sainani's YouTube video and in the online magazine FiveThirtyEight. Sainani used the simulation with a small sample size: 10 in the control group and 10 in the experimental group. The "optimal" sample size would be much larger: 50 in each group for MBI and 144 for p values. She showed large error rates based on her misinterpretation of MBI. The slides here show the outcomes and error rates with the correct interpretation of MBI. The first slide shows outcomes and Type-I errors for 80 repeats when the true effect is 0. The second slide shows outcomes and Type-II errors for 20 repeats when the true effect is a range of small harmful values and 20 repeats with a range of small beneficial values. The final slide summarizes the outcomes with MBI.
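A Monte Carlo sketch in the spirit of the simulation described here (not the Batterham and Hopkins simulation itself): repeat a controlled trial with 10 subjects per group and a true effect of zero, apply the clinical rule (>25% chance of benefit, <0.5% chance of harm), and count how often it says "use it", which is a clinical Type-I error. The known SD, the normal (z) sampling distribution and the smallest important value of 0.2 SD are simplifying assumptions, so the rate it produces should not be expected to match the figures on the slides.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulate_clinical_type1(n_per_group=10, sd=1.0, smallest=0.2, trials=20_000, seed=1):
    """Fraction of simulated trials (true effect = 0) in which clinical MBI
    decides to use the treatment. Assumes a known SD and a z approximation."""
    rng = random.Random(seed)
    se = sd * sqrt(2 / n_per_group)  # known-SD standard error of the difference
    errors = 0
    for _ in range(trials):
        control = [rng.gauss(0.0, sd) for _ in range(n_per_group)]
        treated = [rng.gauss(0.0, sd) for _ in range(n_per_group)]  # true effect = 0
        estimate = sum(treated) / n_per_group - sum(control) / n_per_group
        dist = NormalDist(estimate, se)
        p_benefit = 1 - dist.cdf(smallest)
        p_harm = dist.cdf(-smallest)
        if p_benefit > 0.25 and p_harm < 0.005:  # "use it" when the effect is trivial
            errors += 1
    return errors / trials


print(f"Clinical Type-I error rate: {simulate_clinical_type1():.1%}")
```

Under these assumptions the rate should come out close to 1.7%; a similar loop with a non-zero true effect would count Type-II errors instead.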

Clinical MBI Type-I errors with 80 controlled trials, true effect = 0

[Figure: the MBI outcome of each of the 80 simulated trials. Most are "Unclear; get more data" or "Possibly trivial; possibly harmful"; others include "Possibly trivial; unlikely harmful", "Likely trivial; unlikely harmful", "Unlikely trivial; possibly harmful", "Unlikely trivial; likely harmful", "Likely beneficial; unlikely trivial", "V. likely beneficial; v. unlikely trivial" and "V. unlikely trivial; v. likely harmful".]

Two Type-I errors (error rate = 2.7%).
One non-clinical Type-I error (error rate = 0.8%).

Clinical MBI Type-II errors with 20 controlled trials

[Figure: the MBI outcomes of simulated trials in two panels, "True effects = small harmful" and "True effects = small beneficial". Outcomes range from "M. likely harmful; m. unlikely trivial" through "Possibly harmful; possibly trivial", "Possibly trivial; unlikely harmful", "Possibly trivial; possibly harmful" and "Unclear; get more data" to "M. likely beneficial; m. unlikely trivial".]

No Type-II errors (error rate = 0.2%).
Two Type-II errors (error rate = 12%).

In conclusion

Alan Batterham and I quantified the error rates in our Sports Medicine article in 2016. We found that error rates with MBI were generally lower and often much lower than with p values. We also quantified publication rates with MBI and with p values. We assumed only clear effects with MBI, and only statistically significant effects with p values, would be publishable. We found that non-significant effects with small sample sizes were often clear, so researchers can publish more useful findings with MBI. Finally, we quantified the magnitudes of clear effects and significant effects. We found that clear effects do not have spurious magnitudes in small studies. On average, the magnitude is not substantially different from the true magnitude. Significant effects in such studies do have spurious magnitudes. On average, they differ substantially from the true magnitude. So, there is no "publication bias" with MBI, but there is such bias with p values. Problems solved: researchers can use and trust magnitude-based inference!

Updated November 2018

The editor of Medicine and Science in Sports and Exercise has now banned MBI: "MSSE considers it unethical to use the MBI approach and label it as a Bayesian approach, since MBI is not universally accepted as Bayesian." But the articles cited to support this assertion were by authors who have not responded to the evidence that MBI is Bayesian with a "flat" (uninformative) prior. "Universal acceptance" is a rather strict criterion for inference. Do frequentists universally accept Bayesians, and vice versa? Also: "Sainani demonstrates mathematically that the MBI approach is not an acceptable method of statistical analysis." But "mathematically" refers to her error rates based on misuse of MBI. Correct use has low error rates. Reviewers could easily ensure that "possibly substantial" or "likely substantial" is not presented as definitively substantial in manuscripts accepted for publication. Authors seem to think that getting a clear effect means that the effect is "real".

The editorial board of the Journal of Sports Sciences has now stated that "until MBI receives formal endorsement from academic and medical statisticians, MBI should be used with caution." Batterham and I have already shown that MBI is Bayesian and is superior to NHST in respect of error rates, publication rates, and publication bias. But statisticians who accept Sainani's flawed critique will simply assert that MBI is not Bayesian and has unacceptable Type-I error rates. If they accept that MBI is Bayesian, there is another problem: most Bayesians favor informative priors. They do not acknowledge the qualities of uninformative priors: they are objective and computationally straightforward. But informative priors bias the outcome by causing "shrinkage" towards the prior. And it is difficult to convert a prior belief into a trustworthy numerical prior. A trustworthy numerical prior could be provided by meta-analysis, but it is far better to perform the meta-analysis after your study, not before. In summary, MBI combines the best of both inferential worlds: the error rates of hypothesis testing with Bayesian estimation of uncertainty in the magnitude of effects. Can we get any influential statisticians to support this view? Meantime, some good news: ESSA, BASES and ACSM have endorsed Victoria University's postgraduate course on applied sports statistics based on MBI.
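The "shrinkage" mentioned above can be illustrated with the textbook normal-normal conjugate update, a standard result rather than anything specific to MBI. The posterior mean is a precision-weighted average of the estimate and the prior mean, so an informative prior pulls the estimate towards it, whereas a flat prior leaves the estimate and its standard error unchanged. `normal_posterior` and the numbers are hypothetical.

```python
def normal_posterior(estimate, se, prior_mean=None, prior_sd=None):
    """Posterior mean and SD for a normal likelihood (estimate, se) combined
    with a normal prior; prior_sd=None stands in for a flat (uninformative) prior."""
    if prior_sd is None:
        return estimate, se                 # flat prior: posterior = likelihood
    w_data = 1 / se ** 2                    # precision of the data
    w_prior = 1 / prior_sd ** 2             # precision of the prior
    post_var = 1 / (w_data + w_prior)
    post_mean = post_var * (w_data * estimate + w_prior * prior_mean)
    return post_mean, post_var ** 0.5


print(normal_posterior(1.0, 0.5))                               # (1.0, 0.5)
print(normal_posterior(1.0, 0.5, prior_mean=0.0, prior_sd=0.5))  # mean shrunk to 0.5
```

With a prior as precise as the data, the observed effect of 1.0 is pulled halfway to the prior mean of zero; a more precise prior would pull it further.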