Understanding Levels of Object Recognition in Computational Models

Explore the levels of object recognition in computational models, from single-object recognition to recognizing local configurations. Discover how minimizing variability aids in interpreting complex scenes and the challenges faced by deep neural networks in achieving human-level recognition on minimal images.

Presentation Transcript

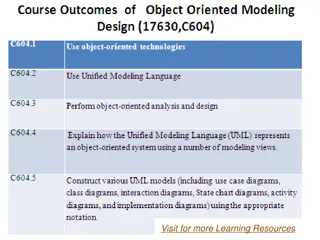

Below the object level: recognizing local configurations

Minimizing variability: useful for the interpretation of complex scenes.

Searching for minimal images: over 15,000 Mechanical Turk subjects ("Atoms of Recognition", PNAS 2016).

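Pairs: parent MIRC (minimal recognizable configuration) and child sub-MIRC.

Figure: example parent/child pairs, with recognition rates shown as 0.79, 0.0, 0.14, and 0.88.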
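The parent MIRC / child sub-MIRC relation suggests a simple search procedure: keep reducing a recognized patch, and mark it as minimal when none of its reductions is still recognized. The sketch below is a minimal illustration under stated assumptions: each patch has a list of reduced "children" (for example corner crops and a lower-resolution copy), a hypothetical `recognition_rate` function stands in for the crowd-sourced human measurements, and the 0.5 threshold, the `find_mircs` name, and the toy data are illustrative, not the study's protocol or results.

```python
from collections import deque
from typing import Callable, List, Set


def find_mircs(
    root: str,
    children: Callable[[str], List[str]],
    recognition_rate: Callable[[str], float],
    threshold: float = 0.5,  # assumed recognition criterion
) -> Set[str]:
    """Return patches that are recognized while none of their reductions are.

    Assumption: the search only descends from patches that are themselves
    recognized (rate >= threshold).
    """
    mircs: Set[str] = set()
    seen: Set[str] = {root}
    queue = deque([root])
    while queue:
        patch = queue.popleft()
        if recognition_rate(patch) < threshold:
            continue  # not recognized: do not descend further
        recognized_kids = [k for k in children(patch) if recognition_rate(k) >= threshold]
        if not recognized_kids:
            mircs.add(patch)  # recognized, but every reduction drops below threshold
        for kid in recognized_kids:
            if kid not in seen:
                seen.add(kid)
                queue.append(kid)
    return mircs


# Hypothetical toy hierarchy and rates, for illustration only (not data from the study).
TREE = {
    "full": ["crop_tl", "crop_tr", "crop_bl", "crop_br", "lowres"],
    "crop_tl": ["crop_tl/a", "crop_tl/b"],
    "lowres": ["lowres/a"],
}
RATES = {
    "full": 0.95, "crop_tl": 0.80, "crop_tr": 0.30, "crop_bl": 0.10,
    "crop_br": 0.20, "lowres": 0.60,
    "crop_tl/a": 0.05, "crop_tl/b": 0.20, "lowres/a": 0.55,
}

mircs = find_mircs("full", lambda p: TREE.get(p, []), RATES.__getitem__)
print(sorted(mircs))
```

In this toy tree, `crop_tl` qualifies because both of its reductions fall below the threshold, which mirrors the sharp parent-to-child drop the slide illustrates.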

Cover: an average of 16.9 MIRCs per class, so the cover is highly redundant. Each MIRC, however, is non-redundant: every feature in it is important.

Testing computational models

DNNs do not reach human-level recognition on minimal images. Recognition of minimal images does not emerge from training any of the existing models tested: the large gap at the minimal level is not reproduced, and recognition accuracy is lower than humans'. The representations used by existing models do not capture differences to which human recognition is sensitive.
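One way to make the "large gap at the minimal level" concrete is to compare the drop in recognition from a MIRC to its sub-MIRCs for humans and for a model. This is a minimal sketch only: the definition of the gap used here (parent rate minus mean child rate), the function name `recognition_gap`, and all scores below are hypothetical and are not taken from the study.

```python
from statistics import mean
from typing import List


def recognition_gap(parent_rate: float, child_rates: List[float]) -> float:
    """Drop in recognition from a parent patch to its reduced children.

    Assumption: the gap is measured against the mean child rate.
    """
    return parent_rate - mean(child_rates)


# Hypothetical scores for one MIRC and its sub-MIRCs (illustrative only).
human = {"parent": 0.80, "children": [0.05, 0.10, 0.00, 0.05]}
model = {"parent": 0.60, "children": [0.50, 0.45, 0.55, 0.50]}

for name, scores in [("human", human), ("model", model)]:
    gap = recognition_gap(scores["parent"], scores["children"])
    print(f"{name}: gap = {gap:.2f}")
```

With these made-up numbers, the human scores show a sharp drop while the model's scores barely change between parent and children, which is the pattern the slide describes as "not reproduced".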

Minimal images: internal interpretation. Humans can interpret detailed sub-structures within the atomic MIRC; this cannot be done by current feed-forward models.

Internal interpretation: the internal interpretations perceived by humans cannot be produced by existing feed-forward models.

Current recognition models are feed-forward only, which is likely to limit their ability to provide interpretations of fine details. A model that can produce interpretations of MIRCs uses a top-down stage (back to V1): Ben-Yossef et al., "A model for full local image interpretation", CogSci 2015.

Automatic caption (2017): A brown horse standing next to a building.

Automatic caption: a man is standing in front woman in white shirt.

Human description: Two women sitting at restaurant table while a man in a black shirt takes one of their purses off the chair, while they are not looking

Components: man, woman, purse, chair. Properties: young, dark-haired, red. Relations: man grabs purse, purse hanging off chair, woman sitting on chair.

Figure: scene graph with the nodes Man, Woman, Purse, and Chair, labeled Dark, Red, Grabbing, Sitting, Hanging-off, and Not-looking. This is what vision produces, and from it we identify the event as stealing.

Figure: the abstract "stealing" structure, with Person A, Person B, and Object X linked by Grabbing, Owner, and Not-looking. This structure is abstract and cognitive (shared with non-sighted people) and supports broad generalization.

Figure: the same scene graph with an added Owns relation. Ownership is added to the structure, and this cognitive addition contributes to identifying the event as stealing.
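The last slides describe a structured scene representation (components, properties, relations) and an abstract "stealing" structure matched against it. The toy sketch below assumes scene facts are encoded as (subject, relation, object) triples and the abstract structure as triples with `?`-prefixed variables; the encoding, relation names, and the `match` / `find_stealing` helpers are hypothetical illustrations, not the model presented in the talk. It shows why the ownership relation matters: the abstract structure cannot match until `owns` is added.

```python
from typing import Dict, Iterator, List, Set, Tuple

Triple = Tuple[str, str, str]

# Scene facts read off the slides (toy encoding; relation names are our own).
SCENE: Set[Triple] = {
    ("man", "grabs", "purse"),
    ("purse", "hanging_off", "chair"),
    ("woman", "sitting_on", "chair"),
    ("woman", "is", "not_looking"),
}

# Abstract "stealing" structure: A grabs X, B owns X, B is not looking.
STEALING: List[Triple] = [
    ("?a", "grabs", "?x"),
    ("?b", "owns", "?x"),
    ("?b", "is", "not_looking"),
]


def match(pattern: List[Triple], facts: Set[Triple],
          binding: Dict[str, str] = None) -> Iterator[Dict[str, str]]:
    """Yield every variable binding under which all pattern triples are facts."""
    binding = binding or {}
    if not pattern:
        yield binding
        return
    first, rest = pattern[0], pattern[1:]
    for fact in facts:
        new = dict(binding)
        ok = True
        for p, f in zip(first, fact):
            if p.startswith("?"):
                if new.setdefault(p, f) != f:  # variable already bound elsewhere
                    ok = False
                    break
            elif p != f:  # constant term must match exactly
                ok = False
                break
        if ok:
            yield from match(rest, facts, new)


def find_stealing(facts: Set[Triple]) -> List[Dict[str, str]]:
    # The grabber and the owner must be different people.
    return [b for b in match(STEALING, facts) if b["?a"] != b["?b"]]


print(find_stealing(SCENE))  # no match: vision alone supplies no ownership relation
print(find_stealing(SCENE | {("woman", "owns", "purse")}))
```

The design choice here is deliberately simple backtracking over triples; it makes the point that "stealing" is defined by relations between roles rather than by any particular visual appearance.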