Understanding Latent Class Analysis: Estimation and Model Optimization

Latent Class Analysis (LCA) is a person-centered approach in which individuals are assigned to categories (classes) on the basis of observed behaviors that reflect underlying categorical differences. Estimating an LCA model means estimating unobservable parameters with maximum-likelihood approaches such as the EM algorithm, which search for the parameter values that maximise the likelihood of the observed data. Latent class affiliations are therefore determined with a degree of uncertainty. To avoid local maxima, check that the best likelihood value is replicated, and re-run the model with more random sets of starting values and more optimizations in the EM algorithm. The goal is to reach the global maximum of the likelihood, so that the latent class solution is stable.

Presentation Transcript

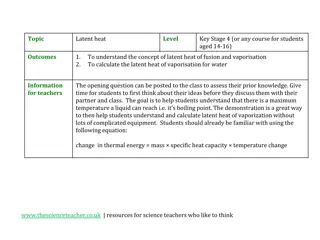

Introduction to Latent Class Analysis (III) Dr Oliver Perra o.perra@qub.ac.uk

Summary: Latent Class Analysis (LCA). Person-centred approach. A mixture of individuals are assigned to different categories (classes). Measurement model: observed behaviours are causally related to underlying categorical differences. Probability-based model: the association between indicators and the latent variable is estimated with error. Latent class affiliations have a degree of uncertainty.

Outline: Estimation of the model. Deciding on the number of classes. Including covariates and distal outcomes in the model.

Estimating the Latent Class model

Latent Class Analysis (LCA): Estimation. Problem: estimating unobservable parameters using observed information. Iterative Maximum-Likelihood (ML) approaches, e.g. Maximum Likelihood with Robust Standard Errors (MLR): identify the parameters that maximise the likelihood that the process described by the model produced the observed data. Commonly used: Expectation-Maximization (EM) algorithm.
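To make the estimation step concrete, here is a minimal sketch of an EM algorithm for a latent class model. It assumes binary (0/1) indicators and local independence within classes. The function names `e_step` and `fit_lca_em`, the simulated data, and the stopping rule are illustrative assumptions, not the presenter's implementation or any package's API.

```python
import numpy as np

def e_step(X, pi, theta):
    """Posterior class probabilities and log-likelihood for binary indicators."""
    # log P(x_i, class c) = log pi_c + sum_j [x_ij log theta_cj + (1 - x_ij) log(1 - theta_cj)]
    log_joint = np.log(pi) + X @ np.log(theta).T + (1 - X) @ np.log(1 - theta).T
    m = log_joint.max(axis=1, keepdims=True)              # log-sum-exp for stability
    log_lik_i = m[:, 0] + np.log(np.exp(log_joint - m).sum(axis=1))
    return np.exp(log_joint - log_lik_i[:, None]), log_lik_i.sum()

def fit_lca_em(X, n_classes, max_iter=500, tol=1e-8, seed=0):
    """One EM run from a single random start (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    pi = np.full(n_classes, 1.0 / n_classes)               # class prevalences
    theta = rng.uniform(0.2, 0.8, size=(n_classes, X.shape[1]))  # P(item = 1 | class)
    prev_ll = -np.inf
    for _ in range(max_iter):
        post, ll = e_step(X, pi, theta)                    # E-step
        if ll - prev_ll < tol:                             # log-likelihood stopped improving
            break
        prev_ll = ll
        pi = np.clip(post.mean(axis=0), 1e-12, None)       # M-step: prevalences
        pi /= pi.sum()
        theta = np.clip(post.T @ X / post.sum(axis=0)[:, None], 1e-6, 1 - 1e-6)
    post, ll = e_step(X, pi, theta)                        # posteriors for the final parameters
    return pi, theta, post, ll

# Hypothetical simulated data: 2 classes, 4 binary indicators
rng = np.random.default_rng(1)
true_theta = np.array([[0.9, 0.8, 0.85, 0.9],
                       [0.1, 0.2, 0.15, 0.1]])
z = rng.integers(0, 2, size=400)
X = (rng.random((400, 4)) < true_theta[z]).astype(float)
pi, theta, post, ll = fit_lca_em(X, n_classes=2)
print(pi.round(2), round(float(ll), 2))
```

The rows of `post` are each person's posterior class probabilities, which is where the uncertainty in class affiliation shows up.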

[Figure: likelihood plotted against sets of parameter values, showing a global maximum and a local maximum.]

Latent Class Analysis (LCA): Estimation. Avoid local maxima: check that the best likelihood (or log-likelihood) is replicated; re-run the model increasing the number of random sets of starting values and of optimizations in the EM algorithm; increase the number of iterations in the initial stage; reduce the threshold at which convergence is considered achieved.
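The replication check can be sketched as follows: run EM from many random starts and count how many runs reach the best log-likelihood. This is a simplified illustration for binary indicators. The names `em_loglik` and `replicate_best`, the tolerance `atol`, and the simulated data are assumptions made for this example.

```python
import numpy as np

def em_loglik(X, n_classes, rng, max_iter=500, tol=1e-10):
    """Run EM once from a random start and return the final log-likelihood."""
    pi = rng.dirichlet(np.ones(n_classes))
    theta = rng.uniform(0.1, 0.9, size=(n_classes, X.shape[1]))
    prev = -np.inf
    for _ in range(max_iter):
        lj = np.log(pi) + X @ np.log(theta).T + (1 - X) @ np.log(1 - theta).T
        m = lj.max(axis=1, keepdims=True)
        li = m[:, 0] + np.log(np.exp(lj - m).sum(axis=1))
        ll = li.sum()
        if ll - prev < tol:                    # smaller tol = stricter convergence
            break
        prev = ll
        post = np.exp(lj - li[:, None])
        pi = np.clip(post.mean(axis=0), 1e-12, None)
        pi /= pi.sum()
        theta = np.clip(post.T @ X / (post.sum(axis=0)[:, None] + 1e-12), 1e-6, 1 - 1e-6)
    return ll

def replicate_best(X, n_classes, n_starts=30, seed=0, atol=1e-4):
    """Best log-likelihood over random starts and how many starts reached it."""
    rng = np.random.default_rng(seed)
    lls = np.array([em_loglik(X, n_classes, rng) for _ in range(n_starts)])
    best = lls.max()
    n_replicated = int(np.sum(np.abs(lls - best) < atol))
    return best, n_replicated, lls

# Hypothetical simulated data: 2 true classes, fitted with 3 classes
rng = np.random.default_rng(2)
true_theta = np.array([[0.9, 0.8, 0.85, 0.9], [0.1, 0.2, 0.15, 0.1]])
z = rng.integers(0, 2, size=500)
X = (rng.random((500, 4)) < true_theta[z]).astype(float)
best, n_rep, lls = replicate_best(X, n_classes=3, n_starts=30)
print(f"best log-likelihood {best:.3f} reached by {n_rep} of {len(lls)} starts")
```

If only one start reaches the best value, the solution may be a local maximum, and increasing `n_starts` or `max_iter`, or lowering `tol`, is the natural next step.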

Deciding the number of classes

Deciding the number of latent classes. [Diagram labels: Depression; No Depression; Low mood; Sleep problems; Lack of pleasure; Fatigue.]

Deciding the number of latent classes. Several statistics to consider! Fit indices: Pearson χ² and Likelihood-Ratio χ², which compare the expected cell frequency counts provided by the model with the observed cell frequencies. If the expected frequencies equal the observed ones, χ² = 0. If the expected frequencies differ significantly from the observed ones, χ² > 0, p < .05.
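As an illustration of these two fit indices, the sketch below computes the Pearson χ² and the likelihood-ratio χ² (often written G²) from observed and model-expected response-pattern counts. The function names and the toy counts are hypothetical. The degrees-of-freedom helper assumes binary indicators only.

```python
import numpy as np

def pearson_and_lr_chi2(observed, expected):
    """Pearson chi-square and likelihood-ratio chi-square (G^2) for cell counts."""
    observed = np.asarray(observed, dtype=float)
    expected = np.asarray(expected, dtype=float)
    pearson = np.sum((observed - expected) ** 2 / expected)
    nz = observed > 0                      # empty observed cells contribute 0 to G^2
    g2 = 2.0 * np.sum(observed[nz] * np.log(observed[nz] / expected[nz]))
    return pearson, g2

def lca_degrees_of_freedom(n_items, n_classes):
    """df = (number of response patterns - 1) - free parameters, binary items only."""
    n_params = (n_classes - 1) + n_classes * n_items
    return 2 ** n_items - 1 - n_params

# Toy counts for four response patterns (made-up numbers)
pearson, g2 = pearson_and_lr_chi2([30, 20, 25, 25], [25, 25, 25, 25])
print(round(pearson, 3), round(g2, 3), lca_degrees_of_freedom(4, 2))
```

With many indicators the table of response patterns becomes sparse, and these statistics may no longer follow a χ² distribution closely. That is one reason the other criteria on the following slides are also consulted.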

Deciding the number of latent classes. Several statistics to consider! Fit indices. Tests of models with n and n-1 classes: compare the fit of a model with n classes (e.g. 4) with one with n-1 classes (e.g. 3). Bootstrap Likelihood-Ratio Test; Vuong-Lo-Mendell-Rubin Likelihood-Ratio Test.
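The logic of a bootstrap likelihood-ratio test can be sketched as follows: fit the n-1 and n class models, simulate data sets from the fitted n-1 class model, refit both models to each, and see how often the simulated likelihood-ratio statistic is at least as large as the observed one. This is a rough sketch for binary indicators with few starts and few bootstrap samples. The function names, the simulated data, and the `+1` p-value convention are assumptions made here, not the procedure of any particular package.

```python
import numpy as np

def fit(X, C, rng, n_starts=3, max_iter=200, tol=1e-8):
    """Best (log-likelihood, prevalences, item probabilities) over random EM starts."""
    best = (-np.inf, None, None)
    for _ in range(n_starts):
        pi = np.full(C, 1.0 / C)
        theta = rng.uniform(0.1, 0.9, size=(C, X.shape[1]))
        prev = -np.inf
        for _ in range(max_iter):
            lj = np.log(pi) + X @ np.log(theta).T + (1 - X) @ np.log(1 - theta).T
            m = lj.max(axis=1, keepdims=True)
            li = m[:, 0] + np.log(np.exp(lj - m).sum(axis=1))
            ll = li.sum()
            if ll - prev < tol:
                break
            prev = ll
            post = np.exp(lj - li[:, None])
            pi = np.clip(post.mean(axis=0), 1e-12, None)
            pi /= pi.sum()
            theta = np.clip(post.T @ X / (post.sum(axis=0)[:, None] + 1e-12), 1e-6, 1 - 1e-6)
        if ll > best[0]:
            best = (ll, pi, theta)
    return best

def simulate(n, pi, theta, rng):
    """Draw binary responses from a fitted latent class model."""
    z = rng.choice(len(pi), size=n, p=pi)
    return (rng.random((n, theta.shape[1])) < theta[z]).astype(float)

def blrt(X, C, n_boot=20, seed=0):
    """Bootstrap LRT of C classes against C - 1 classes (illustrative only)."""
    rng = np.random.default_rng(seed)
    ll0, pi0, theta0 = fit(X, C - 1, rng)
    ll1 = fit(X, C, rng)[0]
    lr_obs = 2 * (ll1 - ll0)
    lr_boot = np.empty(n_boot)
    for b in range(n_boot):
        Xb = simulate(len(X), pi0, theta0, rng)      # data generated under C - 1 classes
        lr_boot[b] = 2 * (fit(Xb, C, rng)[0] - fit(Xb, C - 1, rng)[0])
    p_value = (1 + np.sum(lr_boot >= lr_obs)) / (n_boot + 1)
    return lr_obs, p_value

# Hypothetical simulated data with 2 well-separated classes: test 2 classes against 1
rng = np.random.default_rng(3)
true_theta = np.array([[0.9, 0.8, 0.85, 0.9], [0.1, 0.2, 0.15, 0.1]])
z = rng.integers(0, 2, size=300)
X = (rng.random((300, 4)) < true_theta[z]).astype(float)
lr_obs, p_value = blrt(X, C=2)
print(round(float(lr_obs), 2), round(float(p_value), 3))
```

A small p-value suggests the n class model fits better than the n-1 class model. In practice many more bootstrap samples and random starts would be used than in this sketch.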

Deciding the number of latent classes. Several statistics to consider! Fit indices. Tests of models with n and n-1 classes. Information Criteria: take into account the Likelihood-Ratio χ² but penalise more complex models. Akaike Information Criterion (AIC); Bayesian Information Criterion (BIC); Sample-size Adjusted Bayesian Information Criterion (aBIC).
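For reference, the three criteria can be computed from a model's log-likelihood, its number of free parameters, and the sample size, using their usual definitions. The function name and the example numbers below are made up for illustration. Lower values indicate a better trade-off between fit and complexity.

```python
import math

def information_criteria(log_lik, n_params, n_obs):
    """AIC, BIC and sample-size adjusted BIC from a model's log-likelihood."""
    aic = -2 * log_lik + 2 * n_params
    bic = -2 * log_lik + n_params * math.log(n_obs)
    # The adjusted BIC replaces n with (n + 2) / 24 in the penalty term
    abic = -2 * log_lik + n_params * math.log((n_obs + 2) / 24)
    return {"AIC": aic, "BIC": bic, "aBIC": abic}

# Made-up example: 2-class model, 4 binary items (9 free parameters), n = 500
ic = information_criteria(log_lik=-1000.0, n_params=9, n_obs=500)
print({k: round(v, 2) for k, v in ic.items()})
```

Models with different numbers of classes are typically compared by computing these values for each and looking for the minimum, or for the point where the improvement levels off.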

Decide the number of latent classes. Several statistics to consider! Fit indices. Tests of models with n and n-1 classes. Information Criteria. Entropy: index of the precision of individuals' categorisation into classes (range 0 to 1).
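The entropy index is commonly computed as a relative entropy of the posterior class probabilities, rescaled so that 1 means perfectly certain classification and 0 means classification no better than chance. A minimal sketch under that usual definition follows. The function name and the example matrices are illustrative.

```python
import numpy as np

def relative_entropy(post):
    """1 - sum_i sum_c(-p_ic * ln p_ic) / (n * ln C), for an n x C posterior matrix."""
    post = np.asarray(post, dtype=float)
    n, n_classes = post.shape
    safe = np.clip(post, 1e-300, 1.0)          # avoid log(0); 0 * log(tiny) is 0
    uncertainty = -(post * np.log(safe)).sum()
    return 1.0 - uncertainty / (n * np.log(n_classes))

certain = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])
chance = np.full((3, 2), 0.5)
mixed = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]])
print(relative_entropy(certain), relative_entropy(chance), round(relative_entropy(mixed), 3))
```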

Decide the number of latent classes. Several statistics to consider! Fit indices. Tests of models with n and n-1 classes. Information Criteria. Entropy. Substantive knowledge.

Including Covariates and Distal Outcomes

LCA with Covariates and Distal Outcomes. Can you spot the problem? [Diagram labels: Age; Retirement; Latent class estimation and distal outcome; Latent class estimation.]

LCA with Covariates and Distal Outcomes. The latent classes estimated here take into account the heterogeneity in the indicators and in the distal outcome. Can you spot the problem? [Diagram labels: Age; Retirement; Latent class estimation and distal outcome; Latent class estimation.]

LCA with Covariates and Distal Outcomes. The latent classes estimated represent the heterogeneity in the responses to indicator items and in the distal outcome. Can you spot the problem? Measurement parameter estimates will shift! [Diagram labels: Age; Retirement; Latent class estimation and distal outcome; Latent class estimation.]

LCA with Covariates and Distal Outcomes. When using latent class affiliation as a predictor, take uncertainty into account. Multiple pseudo-class draws: participants are randomly classified into latent classes multiple times, based on their distributions of posterior probabilities. Results are combined across draws using the same methods as multiple imputation.
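A minimal sketch of pseudo-class draws is given here, estimating class-specific means of a distal outcome. It assumes a posterior probability matrix from an already fitted model, assumes every class receives at least two members in every draw, and pools with the standard multiple-imputation rules (mean of the estimates, within-draw variance plus inflated between-draw variance). The function name and the toy data are hypothetical.

```python
import numpy as np

def pseudo_class_means(post, y, n_draws=20, seed=0):
    """Class-specific means of y, pooled over pseudo-class draws."""
    post = np.asarray(post, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    n, n_classes = post.shape
    cum = post.cumsum(axis=1)
    est = np.empty((n_draws, n_classes))
    var = np.empty((n_draws, n_classes))
    for d in range(n_draws):
        # Inverse-CDF sampling: classify each person according to their posteriors
        u = rng.random(n)
        drawn = np.minimum((u[:, None] > cum).sum(axis=1), n_classes - 1)
        for c in range(n_classes):
            yc = y[drawn == c]                 # assumes at least 2 members per class
            est[d, c] = yc.mean()
            var[d, c] = yc.var(ddof=1) / yc.size
    pooled = est.mean(axis=0)
    within = var.mean(axis=0)
    between = est.var(axis=0, ddof=1)
    total_var = within + (1 + 1 / n_draws) * between   # multiple-imputation pooling
    return pooled, np.sqrt(total_var)

# Toy example with fully certain posteriors: the result equals the plain class means
post = np.array([[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]], dtype=float)
y = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
means, ses = pseudo_class_means(post, y)
print(means, ses.round(3))
```

When posteriors are less certain, the draws differ from one another, and the between-draw variance widens the pooled standard errors accordingly.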

LCA with Covariates and Distal Outcomes. When using latent class affiliation as a predictor, take uncertainty into account: multiple pseudo-class draws, or the 3-Step Approach. Step 1: Estimate the model and assign individuals to their most likely class. Step 2: Estimate measurement error (i.e. uncertainty in class allocation). Step 3: Impose relationships between classes and covariates/distal outcomes, while controlling for measurement error in class assignment.

LCA with Covariates and Distal Outcomes: 3-Step Approach. Step 1: Estimate the model and assign individuals to their most likely class. [Diagram labels: Depression; No Depression; Low mood; Sleep problems; Lack of pleasure; Fatigue.]

LCA with Covariates and Distal Outcomes: 3 Step Approach Step 2: Estimate measurement error (i.e. uncertainty in class allocation)
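One common way to quantify the uncertainty in Step 2 is a classification-error matrix: for each latent class, the probability of being assigned to each class under most-likely (modal) assignment, estimated from the posterior probabilities. The sketch below assumes this definition. The function name and the toy posteriors are illustrative.

```python
import numpy as np

def classification_error(post):
    """Modal assignment and D[c, s] = P(assigned to s | latent class c)."""
    post = np.asarray(post, dtype=float)
    n_classes = post.shape[1]
    modal = post.argmax(axis=1)                    # Step 1: most likely class
    assigned = np.eye(n_classes)[modal]            # one-hot modal assignment, n x C
    # Weight each person's assignment by their posterior probability of class c
    D = (post.T @ assigned) / post.sum(axis=0)[:, None]
    return modal, D

# Toy posteriors for four people and two classes (made-up numbers)
post = np.array([[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.1, 0.9]])
modal, D = classification_error(post)
print(modal, D.round(3))
```

Each row of `D` sums to 1, and off-diagonal entries close to 0 indicate little misclassification. Quantities of this kind are what Step 3 holds fixed when relating the classes to covariates or distal outcomes.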

LCA with Covariates and Distal Outcomes: 3-Step Approach. Step 3: Impose structural relationships between classes and covariates/distal outcomes, while controlling for measurement error in class assignment. [Diagram labels: No Depression; Depression; * Fixed according to classification uncertainty.]

LCA with Covariates and Distal Outcomes: 3-Step Approach. Step 3: Impose structural relationships between classes and covariates/distal outcomes, while controlling for measurement error in class assignment. [Diagram labels: No Depression; Depression; Gender; SES; * Fixed.]

LCA with Covariates and Distal Outcomes: 3-Step Approach. [Diagram labels: Gender; SES; No Depression; Depression.]

Summary. Check that solutions are stable: the best likelihood is replicated. Decide the number of classes: consider different statistics. When considering latent classes as outcomes, predictors, or moderators, use advanced methods that control for uncertainty.