Understanding Decision Trees: A Visual Guide

Explore the concept of Decision Trees through a rule-based approach. Learn how to predict outcomes based on a set of rules and visualize the rule sets as trees. Discover different types of Decision Trees and understand which features to use for breaking datasets effectively.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Lesson 5 Decision Tree (Rule Based Approach)

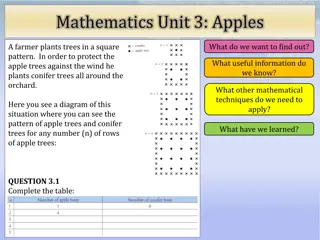

Example Features

Example Class

Example Given : <sunny, cool, high, true> Predict, if there will be a match?

Example Given : <sunny, cool, high, true> Predict, if there will be a match? Assume that I have a set of rules: - If ((lookout=sunny) and ( humudity=high) and (windy=false)) then (yes) else (no) - If (lookout=overcast) then (yes) If ((lookout=sunny) and ( humudity=high)) then (yes) else (no) so on .. - -

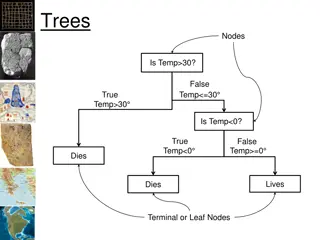

Set of rules can be visualized as a tree. Rule 1: If ((lookout=sunny) and ( humudity=high)) then (yes) else (no)

Set of rules can be visualized as a tree. Rule 1: If ((lookout=sunny) and ( humudity=high)) then (yes) else (no) Rule 2: If (lookout=overcast) then (yes)

Set of rules can be visualized as a tree. Rule 1: If ((lookout=sunny) and ( humudity=high)) then (yes) else (no) Rule 2: If (lookout=overcast) then (yes) Rule 3: If ((lookout=rain) and ( windy=true)) then (no) else (yes)

Many possible Trees Which Tree is the Best?

Which feature should be used to break the dataset? Types of DT ID3 (Iterative Dichotomiser 3) C4.5 (Successor of ID3) CART (Classification and Regression Tree) Random Forest

ID3 1. Calculate the entropy of the total dataset => H(S)=0.9911 we get a database with single class p+nlog2( p+n) p+nlog2( p+n) p p n n Entropy(S)= Entropy(4F,5M) = -(4/9)log2(4/9) - (5/9)log2(5/9) = 0.9911

ID3 1. Calculate the entropy of the total dataset 2. Choose and attribute and Split the dataset by an attribute we get a database with single class

ID3 Calculate the entropy of the total dataset Choose and attribute and Split the dataset by an attribute Calculate the entropy of each branch get a database with single class 1. 2. 3. Entropy(3F,2M) = -(3/5)log2(3/5) - (2/5)log2(2/5) = 0.9710 Entropy(1F,3M) = -(1/4)log2(1/4) - (3/4)log2(3/4) = 0.8113

ID3 Calculate the entropy of the total dataset Choose and attribute and Split the dataset by an attribute Calculate the entropy of each branch Calculate Information Gain of the split 1. 2. 3. 4. get a database with single class ??(?1) = ? ? [? ?1? ?1 + ? ?2? ?2] Gain(Hair Length <= 5) = 0.9911 (4/9 * 0.8113 + 5/9 * 0.9710 ) = 0.0911 Entropy(3F,2M) = -(3/5)log2(3/5) - (2/5)log2(2/5) = 0.9710 Entropy(1F,3M) = -(1/4)log2(1/4) - (3/4)log2(3/4) = 0.8113

What is information gain? Reduction in uncertainty of the parent dataset after the split. ??(?1) = ? ? [? ?1? ?1 + ? ?2? ?2]

ID3 Calculate the entropy of the total dataset Choose and attribute and Split the dataset by an attribute Calculate the entropy of each branch Calculate Information Gain of the split 1. 2. 3. 4. get a database with single class ??(?1) = ? ? [? ?1? ?1 + ? ?2? ?2] Gain(Hair Length <= 5) = 0.9911 (4/9 * 0.8113 + 5/9 * 0.9710 ) = 0.0911 Entropy(3F,2M) = -(3/5)log2(3/5) - (2/5)log2(2/5) = 0.9710 Entropy(1F,3M) = -(1/4)log2(1/4) - (3/4)log2(3/4) = 0.8113

ID3 Calculate the entropy of the total dataset Choose and attribute and Split the dataset by an attribute Calculate the entropy of each branch 1. 2. 3. Calculate Information Gain of the split 4. Repeat 2, 3, 4 for all Attributes The attribute that yields the largest IG is chosen for the decision node. 5. 6. Gain(Hair Length <= 5) = 0.0911 Gain(Weight <= 160) = 0.5900 Gain(Age <= 40) = 0.0183

ID3 Calculate the entropy of the total dataset Choose and attribute and Split the dataset by an attribute Calculate the entropy of each branch 1. 2. 3. Calculate Information Gain of the split 4. Repeat 2, 3, 4 for all Attributes The attribute that yields the largest IG is chosen for the decision node. 5. 6.

ID3 Calculate the entropy of the total dataset 1. Choose and attribute and Split the dataset by an attribute 2. Calculate the entropy of each branch 3. Calculate Information Gain of the split 4. Repeat 2, 3, 4 for all Attributes 5. The attribute that yields the largest IG is chosen for the decision node. 6. Repeat 1 to 6 for all sub-databases till we get sub- databases with single class 7.

ID3 Calculate the entropy of the total dataset 1. Choose and attribute and Split the dataset by an attribute 2. Calculate the entropy of each branch 3. Calculate Information Gain of the split 4. Repeat 2, 3, 4 for all Attributes 5. The attribute that yields the largest IG is chosen for the decision node. 6. Repeat 1 to 6 for all sub-databases till we get sub- databases with single class 7.