Understanding Attribute Selection Measures in Decision Trees

Decision trees are popular in machine learning for classification tasks. This content discusses the importance of attribute selection measures such as Information Gain, Gain Ratio, and Gini Index in constructing accurate decision trees. These measures help in selecting the most informative attributes for splitting the data, improving the classification accuracy. Understanding these measures is crucial for efficient decision tree construction and predictive modeling in data analysis.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

SEEM4630 2015-2016 Tutorial 1 : Decision tree Siyuan Zhang, syzhang@se.cuhk.edu.hk

Classification: Definition Given a collection of records (training set contains a set of attributes attributes, one of the attributes is the class training set ), each record class. Find a model other attributes. Decision tree Na ve bayes k-NN model for class class attribute as a function of the values of Goal: previously unseen previously unseenrecords should be assigned a class as accurately as possible. 2

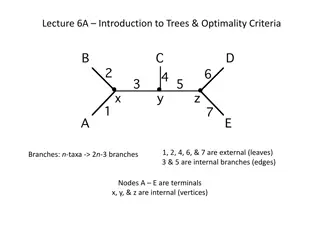

Decision Tree Goal Construct a tree so that instances belonging to different classes should be separated Basic algorithm (a greedy algorithm) Tree is constructed in a top-down recursive manner At start, all the training examples are at the root Test attributes are selected on the basis of a heuristics or statistical measure (e.g., information gain) Examples are partitioned recursively based on selected attributes 3

Attribute Selection Measure 1: Information Gain Let pi be the probability that a tuple belongs to class Ci, estimated by |Ci,D|/|D| Expected information (entropy) needed to classify a tuple in D: m = ( ) log ( ) Info D p p 2 i i = 1 i Information needed (after using A to split D into v partitions) to classify D: | | D v = j ( ) ( ) Info D Info D A j | | D = 1 j Information gained by branching on attribute A: Gain(A) = Info(D) Info (D) A 4

Attribute Selection Measure 2: Gain Ratio Information gain measure is biased towards attributes with a large number of values C4.5 (a successor of ID3) uses gain ratio to overcome the problem (normalization to information gain): | | | | D D v = j j ( ) log ( ) SplitInfo D 2 A | | | | D D = 1 j GainRatio(A) = Gain(A)/SplitInfo(A) 5

Attribute Selection Measure 3: Gini index If a data set D contains examples from n classes, gini index, gini(D) is defined as: gini(D) is defined as: If a data set D contains examples from n classes, gini index, n 2 = ( ) 1 gini D pj = j where pj is the relative frequency of class j in D If a data set D is split on A into two subsets D1 and D2, the gini index gini(D) is defined as index gini(D) is defined as where pj is the relative frequency of class j in D If a data set D is split on A into two subsets D1 and D2, the gini 1 | | | | | | | | D D D D = = + + ( ( ) ) ( ( ) ) ( ( ) ) D D gini gini gini gini 1 1 2 2 giniA giniA D D D D 1 1 2 2 | | | | | | | | D D D D gini = ( ) ( ) ( ) A gini D gini D Reduction in Impurity: Reduction in Impurity: A 6

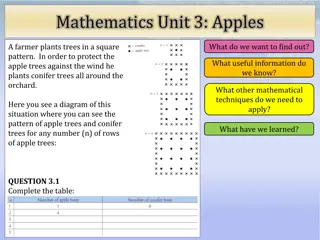

Example Outlook Temperature Humidity Wind Play Tennis Sunny Sunny Overcast Rain Rain Rain Overcast Sunny Sunny Rain Sunny Overcast Overcast Rain >25 >25 >25 15-25 <15 <15 <15 15-25 <15 15-25 15-25 15-25 >25 15-25 High High High High Normal Normal Normal High Normal Normal Normal High Normal High Weak Strong Weak Weak Weak Strong Strong Weak Weak Weak Strong Strong Weak Strong No No Yes Yes Yes No Yes No Yes Yes Yes Yes Yes No 7

Tree induction example Entropy of data S Info(S) = Info(S) = - -9/14(log 9/14(log2 2(9/14)) (9/14))- -5/14(log 5/14(log2 2(5/14)) = 0.94 (5/14)) = 0.94 Split data by attribute Outlook S[9+, 5 S[9+, 5- -] ] Outlook Outlook Sunny [2+,3 Sunny [2+,3- -] ] Overcast [4+,0 Overcast [4+,0- -] ] Rain [3+,2 Rain [3+,2- -] ] 5/14[- -2/5(log 2/5(log2 2(2/5)) 4/14[- -4/4(log 4/4(log2 2(4/4)) 5/14[- -3/5(log 3/5(log2 2(3/5)) = 0.94 0.69 = 0.25 0.69 = 0.25 Gain(Outlook) = 0.94 Gain(Outlook) = 0.94 5/14[ 4/14[ 5/14[ = 0.94 (2/5))- -3/5(log 3/5(log2 2(3/5))] (4/4))- -0/4(log 0/4(log2 2(0/4))] (3/5))- -2/5(log 2/5(log2 2(2/5))] (3/5))] (0/4))] (2/5))] 8

Tree induction example Split data by attribute Temperature <15 [3+,1 <15 [3+,1- -] ] 15 15- -25 [4+,2 25 [4+,2- -] ] >25 [2+,2 >25 [2+,2- -] ] S[9+, 5 S[9+, 5- -] ] Temperature Temperature Gain(Temperature) = 0.94 Gain(Temperature) = 0.94 4/14[ 6/14[ 4/14[ = 0.94 4/14[- -3/4(log 3/4(log2 2(3/4)) 6/14[- -4/6(log 4/6(log2 2(4/6)) 4/14[- -2/4(log 2/4(log2 2(2/4)) = 0.94 0.91 = 0.03 0.91 = 0.03 (3/4))- -1/4(log 1/4(log2 2(1/4))] (4/6))- -2/6(log 2/6(log2 2(2/6))] (2/4))- -2/4(log 2/4(log2 2(2/4))] (1/4))] (2/6))] (2/4))] 9

Tree induction example Split data by attribute Humidity Humidity High [3+,4 High [3+,4- -] ] S[9+, 5 S[9+, 5- -] Humidity ] Humidity Normal [6+, 1 Normal [6+, 1- -] ] Gain(Humidity) = 0.94 Gain(Humidity) = 0.94 7/14[ 7/14[ = 0.94 Split data by attribute Wind 7/14[- -3/7(log 3/7(log2 2(3/7)) 7/14[- -6/7(log 6/7(log2 2(6/7)) = 0.94 0.79 = 0.15 0.79 = 0.15 Wind (3/7))- -4/7(log 4/7(log2 2(4/7))] (6/7))- -1/7(log 1/7(log2 2(1/7))] (4/7))] (1/7))] Weak [6+, 2 Weak [6+, 2- -] ] S[9+, 5 S[9+, 5- -] Wind ] Wind Strong [3+, 3 Strong [3+, 3- -] ] Gain(Wind) = 0.94 Gain(Wind) = 0.94 8/14[ 6/14[ = 0.94 = 0.94 0.89 = 0.05 8/14[- -6/8(log 6/8(log2 2(6/8)) 6/14[- -3/6(log 3/6(log2 2(3/6)) 0.89 = 0.05 (6/8))- -2/8(log 2/8(log2 2(2/8))] (3/6))- -3/6(log 3/6(log2 2(3/6))] (2/8))] (3/6))] 10

Tree induction example Outlook Outlook Temperat Temperat ure ure Humidity Humidity Wind Wind Play Play Tennis Tennis Sunny Sunny Sunny Sunny Overcast Overcast >25 >25 >25 >25 >25 >25 High High High High High High Weak Weak Strong Strong Weak Weak No No No No Yes Yes Gain(Outlook) = 0.25 Gain(Temperature)=0.03 Gain(Humidity) = 0.15 Gain(Wind) = 0.05 Rain Rain Rain Rain Rain Rain Overcast Overcast 15 15- -25 <15 <15 <15 <15 <15 <15 25 High High Normal Normal Normal Normal Normal Normal Weak Weak Weak Weak Strong Strong Strong Strong Yes Yes Yes Yes No No Yes Yes Outlook Outlook Sunny Sunny Sunny Sunny Rain Rain Sunny Sunny Overcast Overcast 15 15- -25 <15 <15 15 15- -25 15 15- -25 15 15- -25 25 High High Normal Normal Normal Normal Normal Normal High High Weak Weak Weak Weak Weak Weak Strong Strong Strong Strong No No Yes Yes Yes Yes Yes Yes Yes Yes 25 25 25 Sunny Sunny Overcast Overcast Rain Rain ?? ?? Yes Yes ?? ?? Overcast Overcast >25 >25 Normal Normal Weak Weak Yes Yes Rain Rain 15 15- -25 25 High High Strong Strong No No 11

Entropy of branch Sunny Info(Sunny) = Info(Sunny) = - -2/5(log 2/5(log2 2(2/5)) (2/5))- -3/5(log 3/5(log2 2(3/5)) = 0.97 (3/5)) = 0.97 Split Sunny branch by attribute Temperature <15 [1+,0 <15 [1+,0- -] ] 15 15- -25 [1+,1 >25 [0+,2 >25 [0+,2- -] ] Gain(Temperature) Gain(Temperature) = 0.97 = 0.97 1/5[ 1/5[- -1/1(log 1/1(log2 2(1/1)) 2/5[ 2/5[- -1/2(log 1/2(log2 2(1/2)) 2/5[ 2/5[- -0/2(log 0/2(log2 2(0/2)) = 0.97 = 0.97 0.4 = 0.57 0.4 = 0.57 Sunny[2+,3 Sunny[2+,3- -] ] Temperature Temperature 25 [1+,1- -] ] (1/1))- -0/1(log 0/1(log2 2(0/1))] (1/2))- -1/2(log 1/2(log2 2(1/2))] (0/2))- -2/2(log 2/2(log2 2(2/2))] (0/1))] (1/2))] (2/2))] Split Sunny branch by attribute Humidity Gain(Humidity) Gain(Humidity) = 0.97 = 0.97 3/5[ 3/5[- -0/3(log 2/5[ 2/5[- -2/2(log = 0.97 = 0.97 0 = High [0+,3 High [0+,3- -] ] Sunny[2+,3 Sunny[2+,3- -] ] Humidity Humidity 0/3(log2 2(0/3)) 2/2(log2 2(2/2)) 0 = 0.97 0.97 (0/3))- -3/3(log 3/3(log2 2(3/3))] (2/2))- -0/2(log 0/2(log2 2(0/2))] (3/3))] (0/2))] Normal [2+, 0 Normal [2+, 0- -] ] Split Sunny branch by attribute Wind Gain(Wind) Gain(Wind) = 0.97 = 0.97 3/5[ 3/5[- -1/3(log 2/5[ 2/5[- -1/2(log = 0.97 = 0.97 0.95= 0.02 0.95= 0.02 Weak [1+, 2 Weak [1+, 2- -] ] Sunny[2+, 3 Sunny[2+, 3- -] ] Wind 1/3(log2 2(1/3)) 1/2(log2 2(1/2)) (1/3))- -2/3(log 2/3(log2 2(2/3))] (1/2))- -1/2(log 1/2(log2 2(1/2))] (2/3))] (1/2))] Wind Strong [1+, 1 Strong [1+, 1- -] ] 12

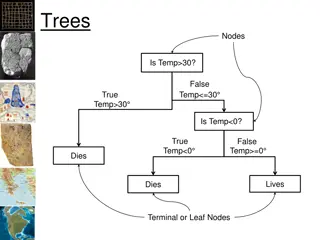

Tree induction example Outlook Sunny Overcast Rain Humidity Yes ?? High Normal No Yes 13

Entropy of branch Rain Info(Rain) = Info(Rain) = - -3/5(log 3/5(log2 2(3/5)) (3/5))- -2/5(log 2/5(log2 2(2/5)) = 0.97 (2/5)) = 0.97 Split Rain branch by attribute Temperature Temperature Gain(Outlook) Gain(Outlook) = 0.97 = 0.97 2/5[ 2/5[- -1/2(log 3/5[ 3/5[- -2/3(log 0/5[ 0/5[- -0/0(log = 0.97 = 0.97 0.95 = 0.02 <15 [1+,1 <15 [1+,1- -] ] 15 15- -25 [2+,1 25 [2+,1- -] ] >25 [0+,0 >25 [0+,0- -] ] Rain[3+,2 Rain[3+,2- -] ] Temperature Temperature 1/2(log2 2(1/2)) 2/3(log2 2(2/3)) 0/0(log2 2(0/0)) 0.95 = 0.02 (1/2))- -1/2(log 1/2(log2 2(1/2))] (2/3))- -1/3(log 1/3(log2 2(1/3))] (0/0))- -0/0(log 0/0(log2 2(0/0))] (1/2))] (1/3))] (0/0))] Split Rain branch by attribute Humidity Humidity Gain(Humidity) Gain(Humidity) = 0.97 = 0.97 2/5[ 2/5[- -1/2(log 3/5[ 3/5[- -2/3(log = 0.97 = 0.97 0.95 = 0.02 0.95 = 0.02 Wind High [1+,1 High [1+,1- -] ] Rain[3+,2 Rain[3+,2- -] ] Humidity Humidity 1/2(log2 2(1/2)) 2/3(log2 2(2/3)) (1/2))- -1/2(log 1/2(log2 2(1/2))] (2/3))- -1/3(log 1/3(log2 2(1/3))] (1/2))] (1/3))] Normal [2+, 1 Normal [2+, 1- -] ] Split Rain branch by attribute Wind Gain(Wind) Gain(Wind) = 0.97 = 0.97 3/5[ 3/5[- -3/3(log 2/5[ 2/5[- -0/2(log = 0.97 = 0.97 0 = Weak [3+, 0 Weak [3+, 0- -] ] Rain[3+,2 Rain[3+,2- -] ] Wind Wind 3/3(log2 2(3/3)) 0/2(log2 2(0/2)) 0 = 0.97 0.97 (3/3))- -0/3(log 0/3(log2 2(0/3))] (0/2))- -2/2(log 2/2(log2 2(2/2))] (0/3))] (2/2))] Strong [0+, 2 Strong [0+, 2- -] ] 14

Tree induction example Outlook Sunny Overcast Rain Humidity Yes Wind High Normal Weak Strong No No Yes Yes 15