Taming Tail Latencies for Web Search Optimization

This research focuses on predictive parallelization to optimize web search performance by reducing tail latencies. The goal is to improve query response time and quality without compromising user experience. By speeding up index search processes and addressing slow servers, the study aims to enhance the efficiency of query processing stages and overall response time in web search systems.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

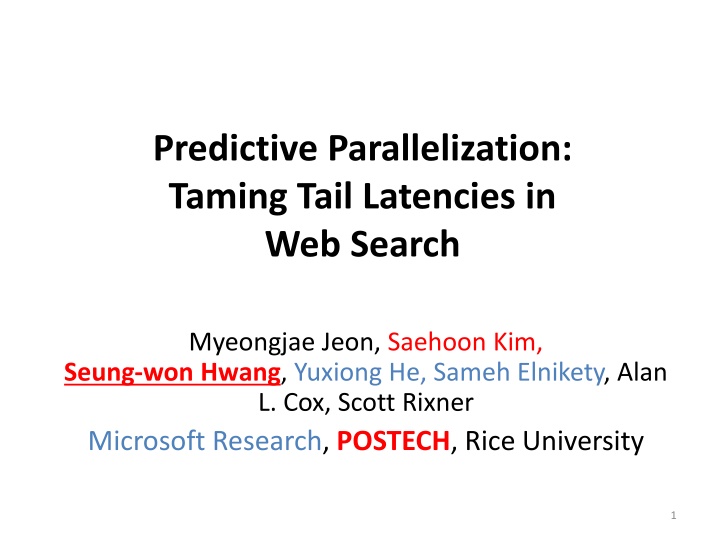

Predictive Parallelization: Taming Tail Latencies in Web Search Myeongjae Jeon, Saehoon Kim, Seung-won Hwang, Yuxiong He, Sameh Elnikety, Alan L. Cox, Scott Rixner Microsoft Research, POSTECH, Rice University 1

Performance of Web Search 1) Query response time Answer quickly to users (e.g., in 300 ms) 2) Response quality (relevance) Provide highly relevant web pages Improve with resources and time consumed Focus: Improving response time without compromising quality 2

Background: Query Processing Stages Focus: Stage 1 Query 100s 1000s of good matching docs doc Doc. index search For example: 300 ms latency SLA 10s of the best matching docs 2nd phase ranking Few sentences for each doc Snippet generator Response 3

Goal Speeding up index search (stage 1) without compromising result quality Improve user experience Larger index serving Sophisticated 2nd phase Query doc Doc. index search For example: 300 ms latency SLA 2nd phase ranking Snippet generator Response 4

How Index Search Works Partition all web pages across index servers (massively parallel) Distribute query processing (embarrassingly parallel) Aggregate top-k relevant pages Query Pages Aggregator Top-k pages Top-k pages Top-k pages Top-k pages Top-k pages Top-k pages Index server Index server Index server Index server Index server Index server Problem: Partition A slow server makes the entire cluster slow Partition All web pages Partition Partition Partition Partition 5

Observation Query processing on every server. Response time is determined by the slowest one. We need to reduce its tail latencies Latency 6

Examples Fast response Slow response Aggregator Aggregator Index servers Index servers Terminate long query in the middle of processing Fast response, but quality drop Long query (outlier) 7

Parallelism for Tail Reduction Opportunity Available idle cores CPU-intensive workloads Challenge Tails are few Tails are very long Percentile Latency Scale Breakdown Latency 50%tile 7.83 ms x1 Network 4.26 ms 75%tile 12.51 ms x1.6 Queueing 0.15 ms 95%tile 57.15 ms x7.3 I/O 4.70 ms 99%tile 204.06 ms x26.1 CPU 194.95 ms Latency distribution Latency breakdown for the 99%tile. 8

Predictive Parallelism for Tail Reduction Short queries Many Almost no speedup Long queries Few Good speedup 10 6 200 6 < 30 ms > 80 ms 169 Exec. Time (ms) Exec. Time (ms) 5 5 8 150 Speedup Speedup 4 4 6 5.2 4.5 3 100 3 4 2 2 41 50 2 1 1 0 0 0 0 1 2 3 4 5 6 1 2 3 4 5 6 Parallelism Degree Parallelism Degree 10

Predictive Parallelization Workflow Index server Execution time predictor query Predict (sequential) execution time of the query with high accuracy 11

Predictive Parallelization Workflow Index server Execution time predictor query long Resource manager short Using predicted time, selectively parallelize long queries 12

Predictive Parallelization Focus of Today s Talk 1. Predictor: of long query through machine learning 2. Parallelization: of long query with high efficiency 13

Brief Overview of Predictor Accuracy High recall for guaranteeing 99%tile reduction Cost Lowprediction overhead and misprediction cost In our workload, 4% queries with > 80 ms Prediction overhead of 0.75ms or less and high precision At least 3% must be identified (75% recall) Existing approaches: Lower accuracy and higher cost 14

Accuracy: Predicting Early Termination Lowest Docs sorted by static rank Highest Web Doc 1 Doc 2 Doc 3 . Doc N-2 Doc N-1 Doc N documents . . Inverted index for SIGIR Processing Not evaluated Only some limited portion contributes to top-k relevant results Such portion depends on keyword (or score distribution more exactly) 15

Space of Features Term Features [Macdonald et al., SIGIR 12] IDF, NumPostings Score (Arithmetic, Geometric, Harmonic means, max, var, gradient) Query features NumTerms (before and after rewriting) Relaxed Language

New Features: Query Rich clues from queries in modern search engines <Fields related to query execution plan> rank=BM25F enablefresh=1 partialmatch=1 language=en location=us . <Fields related to search keywords> SIGIR (Queensland or QLD)

Space of Features Term Features [Macdonald et al., SIGIR 12] IDF, NumPostings Score (Arithmetic, Geometric, Harmonic means, max, var, gradient) Query features NumTerms (before and after rewriting) Relaxed Language

Space of Features All features cached to ensure responsiveness (avoiding disk access) Term features require 4.47GB memory footprint (for 100M terms) Category Feature Term feature (14) AMeanScore GMeanScore HMeanScore MaxScore EMaxScore VarScore NumPostings GAvgMaxima MaxNumPostings In5%Max NumThres ProK IDF Query feature (6) English NumAugTerm Complexity RelaxCount NumBefore NumAfter

Feature Analysis and Selection Accuracy gain from boosted regression tree, suggesting cheaper subset 0.85 0.8 0.75 Recall 0.7 All features Sorted features 0.65 0.6 0 5 10 15 # features (sorted by importance) 20

Prediction Performance Precision (|A P|/|P|) Recall A = actual long queries P = predicted long queries 80 ms Thresh. Cost (|A P|/|A|) Keyword features 0.76 0.64 High All features 0.89 0.84 High Cheap features 0.86 0.80 Low Query features are important Using cheap features is advantageous IDF from keyword features + query features Much smaller overhead (90+% less) Similarly high accuracy as using all features 22

Algorithms Classification vs. Regression Comparable accuracy Flexibility Algorithms Linear regression Gaussian process regression Boosted regression tree

Accuracy of Algorithms Summary 80% long queries (> 80 ms) identified 0.6% short queries mispredicted 0.55 ms for prediction time with low memory overhead

Predictive Parallelism Key idea Parallelize only long queries Use a threshold on predicted execution time Evaluation Compare Predictive to other baselines Sequential Fixed Adaptive

99%tile Response Time Response Time (ms) 200 50% throughput increase 150 Sequential Degree=3 Predictive Adaptive 100 50 50 600 100 150 200 250 300 350 400 450 500 550 650 700 750 800 850 900 950 Query Arrival Rate (QPS) Outperforms Parallelize all 26

Related Work Search query parallelism Fixed parallelization [Frachtenberg, WWWJ 09] Adaptive parallelization using system load only [Raman et al., PLDI 11] High overhead due to parallelizing all queries Execution time prediction Keyword-specific features only [Macdonald et al., SIGIR 12] Lower accuracy and high memory overhead for our target problem 29

Thank You! Your query to Bing is now parallelized if predicted as long. Execution time predictor query long Resource manager short