Struggling with Data Accuracy? Implement These Data Cleansing Techniques in Your BI Software

Explore essential data cleansing techniques that can significantly improve the accuracy and reliability of your Business Intelligence software. This guide offers practical advice on implementing normalization, deduplication, validation, and more, ensuring that your data is not just voluminous but truly valuable.


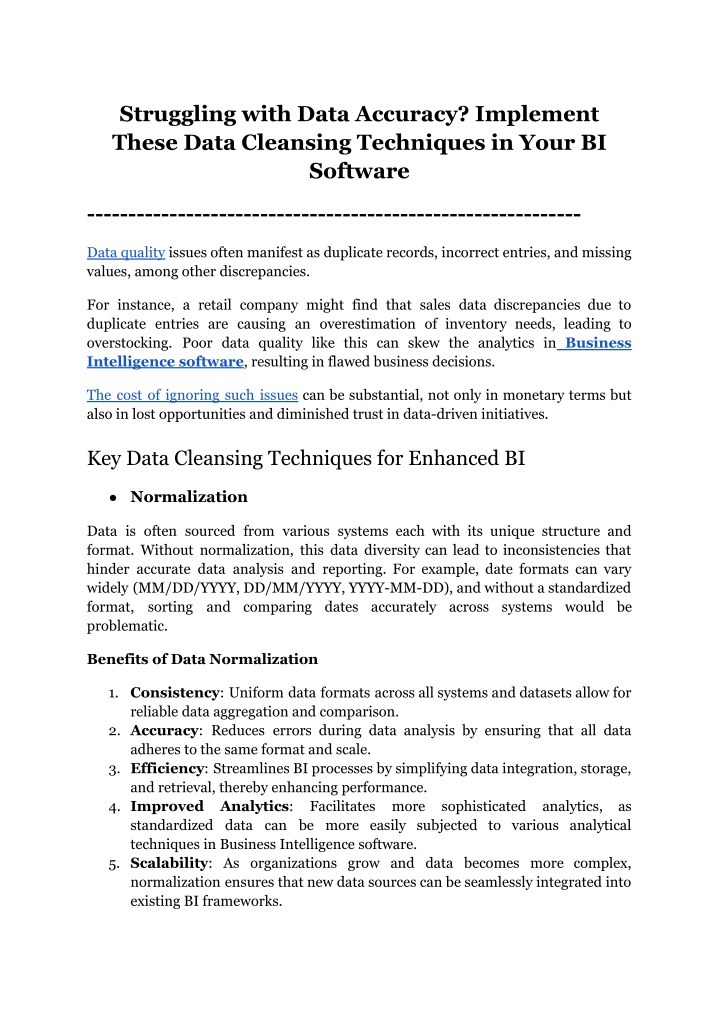

Struggling with Data Accuracy? Implement These Data Cleansing Techniques in Your BI Software
------------------------------------------------------------

Data quality issues often manifest as duplicate records, incorrect entries, and missing values, among other discrepancies. For instance, a retail company might find that duplicate entries in its sales data are causing it to overestimate inventory needs, leading to overstocking. Poor data quality like this can skew the analytics in Business Intelligence software, resulting in flawed business decisions. The cost of ignoring such issues can be substantial, not only in monetary terms but also in lost opportunities and diminished trust in data-driven initiatives.

Key Data Cleansing Techniques for Enhanced BI
---------------------------------------------

1. Normalization
----------------

Data is often sourced from various systems, each with its own structure and format. Without normalization, this diversity can lead to inconsistencies that hinder accurate analysis and reporting. For example, date formats vary widely (MM/DD/YYYY, DD/MM/YYYY, YYYY-MM-DD), and without a standardized format, sorting and comparing dates accurately across systems is problematic.

Benefits of Data Normalization
------------------------------

1. Consistency: Uniform data formats across all systems and datasets allow for reliable data aggregation and comparison.
2. Accuracy: Reduces errors during data analysis by ensuring that all data adheres to the same format and scale.
3. Efficiency: Streamlines BI processes by simplifying data integration, storage, and retrieval, thereby enhancing performance.
4. Improved Analytics: Facilitates more sophisticated analytics, as standardized data can be more easily subjected to various analytical techniques in Business Intelligence software.
5. Scalability: As organizations grow and data becomes more complex, normalization ensures that new data sources can be seamlessly integrated into existing BI frameworks.
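As a rough illustration of the date-format problem described in this section, the sketch below converts dates from three sources into a single YYYY-MM-DD format in Python. The source names (`crm`, `erp`, `warehouse`), the `SOURCE_DATE_FORMATS` mapping, and the `normalize_date` helper are hypothetical. The sketch assumes each source system is known to use one fixed format, since a string like 03/04/2024 cannot be disambiguated on its own.

```python
from datetime import datetime

# Hypothetical mapping: which date format each source system is assumed to emit.
SOURCE_DATE_FORMATS = {
    "crm": "%m/%d/%Y",        # MM/DD/YYYY
    "erp": "%d/%m/%Y",        # DD/MM/YYYY
    "warehouse": "%Y-%m-%d",  # YYYY-MM-DD
}


def normalize_date(raw: str, source: str) -> str:
    """Parse a date string using its source's format and return YYYY-MM-DD."""
    fmt = SOURCE_DATE_FORMATS[source]
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


if __name__ == "__main__":
    rows = [
        ("03/04/2024", "crm"),        # March 4 in MM/DD/YYYY
        ("03/04/2024", "erp"),        # April 3 in DD/MM/YYYY
        ("2024-03-04", "warehouse"),  # already in the target format
    ]
    normalized = [normalize_date(raw, source) for raw, source in rows]
    print(normalized)
    # With a single format, plain sorting is also chronological.
    print(sorted(normalized))
```

Once every date is in YYYY-MM-DD form, ordinary string sorting matches chronological order, which is what makes comparison across systems reliable.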

Normalization Techniques
------------------------

The approach to normalization typically involves several key steps, each tailored to the specific requirements of the data and its intended use within the BI system:

1. Assessment of Data Format Variability: Begin by cataloging the various data formats present in your datasets. These could include different units of measurement, date formats, or categorical labels that vary across sources.
2. Development of Standardization Rules: Define clear rules for how each data type should be formatted. This includes deciding on standard units of measure, date formats, text casing (e.g., all caps, title case), and numerical representations (e.g., decimal places).
3. Application of Transformation Functions: Use functions within your BI tools to convert data to the chosen formats. For instance, SQL functions like CAST or CONVERT can change data types or formats, and string functions can adjust text data.
4. Automating Normalization Processes: Implement automation scripts or use built-in features in BI software to apply these transformations routinely as new data loads into the system.
5. Continuous Monitoring and Updating: Regularly review and refine the normalization rules to accommodate new data types or sources, ensuring ongoing consistency and accuracy.

2. Deduplication
----------------

Duplicate records often arise during data collection and integration from multiple sources, such as merging customer databases from different departments or importing historical data into new BI software. These duplicates can distort analytics, for example by inflating customer counts or misrepresenting sales figures, leading to erroneous business insights and decisions.

Benefits of Implementing Deduplication
--------------------------------------

1. Improved Data Accuracy: By eliminating redundancies, deduplication enhances the precision of the data analytics performed by Business Intelligence tools.
2. Enhanced Decision Making: Clean, non-redundant data ensures that business decisions are based on accurate and reliable data insights.
3. Increased Efficiency: Reduces the data volume that BI solutions need to process, which can speed up analysis and reduce the load on system resources.
4. Cost Savings: Lowers storage requirements by eliminating unnecessary data duplication, thus optimizing infrastructure costs.
5. Better Customer Insights: In scenarios involving customer data, deduplication helps provide a unified view of each customer, which is crucial for effective customer relationship management and personalized marketing.

Deduplication Techniques and Methodologies
------------------------------------------

Implementing deduplication involves a series of strategic steps within BI environments:

1. Data Identification: Use algorithms to scan through the dataset and identify potential duplicates. This might involve matching records based on key attributes such as names, addresses, or unique identifiers.
2. Record Linkage: Establish rules for how records are linked. This might include exact match criteria or more complex fuzzy matching techniques that can identify non-identical duplicates (e.g., 'Jon Smith' vs. 'Jonathan Smith').
3. Review and Resolution: Once potential duplicates are identified, data managers must decide whether to delete redundant records or merge them. Merging involves consolidating multiple entries into a single record while preserving important unique data from each.
4. Automation of Deduplication Processes: Implement automated tools and scripts within your BI software that continuously perform deduplication. This is especially important in dynamic environments where new data is constantly added. Read Data Deduplication With AI.
5. Continuous Monitoring and Improvement: Regularly update deduplication rules and processes to adapt to new data sources and changing business requirements.

3. Validation and Verification
------------------------------

Validation and verification are essential for maintaining high data quality by confirming that data inputs and outputs meet predefined standards and rules. In BI systems, where decision-making is heavily reliant on data, even minor inaccuracies can lead to significant financial losses, strategic missteps, and erosion of trust in data systems. Ensuring data integrity through rigorous validation and verification is, therefore, a key concern for business users, data analysts, and BI professionals.

Key Benefits of Robust Validation and Verification
--------------------------------------------------

1. Enhanced Accuracy: Ensures that data used in BI analytics is correct and reliable, leading to more accurate business forecasts and strategies.
2. Improved Compliance: Meets regulatory and internal standards, which is crucial for industries such as finance and healthcare where data handling is heavily regulated.
3. Increased User Confidence: Builds trust among users in the reliability of the BI software, encouraging data-driven decision-making across the organization.

4. Risk Reduction: Minimizes the risks associated with data errors, such as incorrect customer data leading to poor customer service experiences.

Effective Techniques for Validation and Verification in BI Systems
------------------------------------------------------------------

Implementing validation and verification within BI tools involves several strategic and technical measures designed to ensure data integrity at various stages of the data lifecycle:

1. Data Input Validation: Ensures that data entering a BI solution adheres to specific format, type, and value constraints. This can be achieved by:
   - Setting up data type checks (e.g., ensuring that numeric fields reject text values).
   - Implementing format validations (e.g., dates in YYYY-MM-DD format).
   - Applying range validations (e.g., discount rates between 0% and 50%).
2. Data Output Verification: Verifies that outputs from Business Intelligence tools are consistent and accurate relative to the input data. This is particularly important after data transformation processes. Techniques include:
   - Cross-referencing outputs with external trusted datasets to confirm accuracy.
   - Using checksums and hashes to verify large datasets without needing to compare all data manually.
3. Automated Testing: Incorporates automated tests that regularly validate and verify data within the Business Intelligence software. Automation ensures ongoing compliance and integrity, significantly reducing the manual effort required and the potential for human error.
4. Data Quality Audits: Conduct regular audits of data quality within BI tools, checking for consistency, accuracy, and adherence to business rules and standards.

4. Missing Data Handling: Managing Incompleteness in BI Systems
---------------------------------------------------------------

Missing data can arise from various sources, such as errors in data entry, differences in data collection methods, or integration issues between disparate systems. In BI contexts, incomplete data can lead to biased decisions, misinformed strategies, and ultimately financial losses. Hence, robust handling of missing data is essential to leverage the full potential of Business Intelligence tools in driving organizational success.

Benefits of Effective Missing Data Management
---------------------------------------------

1. Improved Data Quality: Filling or properly handling missing data reduces biases and errors in BI reports.
2. Enhanced Decision Making: Accurate and complete data ensures that decisions are based on the most comprehensive view of available information.
3. Increased Reliability: BI software that reliably handles missing data builds confidence among users in the insights it generates.
4. Regulatory Compliance: Adequate handling of gaps in data can also help meet compliance standards, which often require complete and accurate reporting.

Techniques for Managing Missing Data in BI Software
---------------------------------------------------

Effective management of missing data involves selecting the right strategy based on the nature of the data and the analytical goals of the BI solution. Here are several approaches commonly used in Business Intelligence software:

1. Deletion:
   - Listwise Deletion: Removes all data records that contain any missing values. Used when the missing data is random and sparse.
   - Pairwise Deletion: Uses the available data in each analysis without deleting entire records. Suitable for correlations where missing data is not pervasive.
2. Imputation:
   - Mean/Median/Mode Imputation: Replaces missing values with the mean, median, or mode of the column. Ideal for numerical data where additional data nuances are less critical.
   - Regression Imputation: Estimates missing values using linear regression. Useful in scenarios where data relationships are strong and predictable.
   - K-Nearest Neighbors (KNN): Imputes values based on the 'k' closest neighbors to a given record. Effective in more complex datasets where patterns can indicate missing data points.
3. Using Algorithmic Approaches:
   - Multiple Imputation: Generates multiple versions of the dataset with different imputations, providing a comprehensive analysis of possible outcomes.
   - Maximum Likelihood Techniques: Use a statistical model to estimate the most likely values of missing data, optimizing the overall dataset's statistical properties.

Also, read Solving Data Inconsistencies: How Coalesce Transform Enhances BI Reporting

5. Data Enrichment
------------------

Data enrichment is a crucial process in Business Intelligence (BI) that involves augmenting internal data with external data sources. This technique enhances the depth and breadth of datasets, providing more detailed insights that are critical for sophisticated analysis and decision-making. Effective data enrichment can transform BI software from a simple data reporting tool into a powerful decision support system.

Benefits of Data Enrichment in BI Software
------------------------------------------

1. Enhanced Decision-Making: Enriched data provides a more complete view of the business landscape, enabling more informed decisions.
2. Improved Customer Insights: Adding demographic and psychographic data to customer records helps in creating more targeted marketing campaigns and personalized customer experiences.
3. Increased Data Value: External data can reveal trends and patterns not visible with internal data alone, adding value to existing BI analytics.
4. Risk Mitigation: Incorporating external data such as market trends and economic indicators can help companies anticipate changes and adjust strategies accordingly.

Strategies for Data Enrichment in BI Tools
------------------------------------------

Implementing data enrichment involves several key steps to ensure that the external data enhances the existing BI system effectively:

1. Identifying Relevant Data Sources:
   - Determine what external data could complement the internal datasets. Common sources include social media feeds, economic reports, demographic information, and third-party databases.
   - Evaluate the credibility and reliability of these sources to ensure they meet the organization's standards for data quality.
2. Integrating Data:
   - Use APIs or data integration tools to automate the flow of external data into the BI solution. This integration must be secure to protect data integrity and privacy.
   - Match the external data with internal datasets accurately, which might involve techniques like record linkage, entity resolution, or fuzzy matching.
3. Maintaining Data Quality:
   - Regularly validate and cleanse the integrated data to ensure it remains accurate and relevant. This is crucial as external data sources can change, impacting the quality and reliability of insights derived from Business Intelligence tools.
   - Monitor the external data sources for changes in data provision policies or quality, and adjust the integration processes as necessary.

Conclusion
----------

The accuracy and integrity of your data can make or break the insights derived from BI software. As we've explored throughout this blog, implementing robust data cleansing techniques is not just an option but a necessity for any business that relies on data to inform its decisions. From normalization and deduplication to validation, verification, missing data handling, and data enrichment, these strategies ensure that your data is not only accurate but also actionable.

If you're ready to take your BI capabilities to the next level and significantly reduce data accuracy issues, why not start with a tool designed to facilitate and streamline this process? Grow offers a comprehensive BI solution that integrates seamlessly with your existing systems, equipped with advanced features to help automate and manage data cleansing tasks efficiently. Start your journey towards cleaner data and clearer insights today with Grow's 14-day free trial. Experience firsthand how easy and impactful cleaning your data can be when using the right tools.

Don't just take our word for it; check out the Grow.com Reviews & Product Details on G2 to see how other businesses like yours have transformed their data handling practices using Grow. Enhance your decision-making process, ensure data accuracy, and push your business forward with confidence.

Take action now to harness the full potential of your BI software with Grow. Sign up for your free trial and begin exploring the possibilities of a cleaner, more efficient data environment. Your data deserves the best treatment, and with the right techniques and tools, you can ensure it supports your business effectively. Let Grow help you make the most of your data, starting today!
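To make some of the techniques covered in this post more concrete, here is a minimal Python sketch that chains three of them: input validation (a YYYY-MM-DD format check and a 0% to 50% discount range check), deduplication with fuzzy name matching, and mean imputation. It is an illustration under stated assumptions, not how any particular BI product implements these steps. The record fields (`name`, `email`, `order_date`, `discount_pct`), the helper names, the 0.75 similarity threshold, and the rule that duplicates must share an email address are all hypothetical choices made for the example.

```python
from datetime import datetime
from difflib import SequenceMatcher
from statistics import mean


def is_valid(record: dict) -> bool:
    """Input validation: date format, then type and range of the discount."""
    try:
        datetime.strptime(record["order_date"], "%Y-%m-%d")  # format check
    except (KeyError, TypeError, ValueError):
        return False
    discount = record.get("discount_pct")
    if discount is None:
        return True  # missing values are allowed here and imputed later
    # Type check (bool is a subclass of int, so it is excluded explicitly).
    if isinstance(discount, bool) or not isinstance(discount, (int, float)):
        return False
    return 0 <= discount <= 50  # range check


def similar(a: str, b: str, threshold: float = 0.75) -> bool:
    """Fuzzy match on names using difflib's similarity ratio."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def deduplicate(records: list, threshold: float = 0.75) -> list:
    """Merge records that share an email (assumed rule) and have similar names."""
    merged = []
    for rec in records:
        for kept in merged:
            if kept["email"] == rec["email"] and similar(
                kept["name"], rec["name"], threshold
            ):
                # Resolution by merging: keep existing values, fill gaps only.
                for key, value in rec.items():
                    if kept.get(key) is None:
                        kept[key] = value
                break
        else:
            merged.append(dict(rec))  # no duplicate found, keep as new record
    return merged


def impute_mean(records: list, field: str) -> list:
    """Mean imputation: replace missing values with the mean of known values."""
    known = [r[field] for r in records if r.get(field) is not None]
    if not known:
        return records
    fill = mean(known)
    return [
        {**r, field: fill if r.get(field) is None else r[field]} for r in records
    ]


if __name__ == "__main__":
    raw = [
        {"name": "Jon Smith", "email": "jsmith@example.com",
         "order_date": "2024-03-04", "discount_pct": None},
        {"name": "Jonathan Smith", "email": "jsmith@example.com",
         "order_date": "2024-03-04", "discount_pct": 10},
        {"name": "Bo Chen", "email": "bo@example.com",
         "order_date": "05/03/2024", "discount_pct": 20},  # wrong date format
        {"name": "Cy Young", "email": "cy@example.com",
         "order_date": "2024-03-06", "discount_pct": 30},
        {"name": "Ana Lopez", "email": "ana@example.com",
         "order_date": "2024-03-05", "discount_pct": None},
    ]
    valid = [r for r in raw if is_valid(r)]
    unique = deduplicate(valid)
    for row in impute_mean(unique, "discount_pct"):
        print(row)
```

Validation runs first so that malformed records never reach the matching step, and imputation runs last so that the mean is computed over deduplicated records rather than being skewed by repeated entries.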