Semi-Automatic Analysis of Spontaneous Language for Dutch

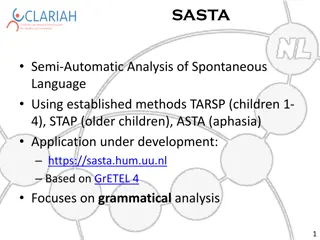

The SASTA project develops an application that semi-automatically analyses transcripts of spontaneous Dutch language, as used in the assessment of language development (disorders) and aphasia. The goal is to automate the manual grammatical analysis with language technology, following the methods TARSP, STAP and ASTA. The application takes a transcript of a spontaneous language session as input and produces a generic score form plus method-specific score forms. It is a web application: a public version is planned, alongside a server version for use in clinics and a desktop application, with the Sastadev software used for internal development. SASTA is based on GrETEL, a treebank query application, but is optimized for applying multiple queries to a small treebank.

Presentation Transcript

Towards Semi-Automatic Analysis of Spontaneous Language for Dutch. Jan Odijk, CLARIN Annual Conference, 2020-10-05.

Overview
- Problem and research question
- SASTA application
- Results
- Deviant language
- Concluding remarks
- Outlook for future work

Problem
- Language development (disorders), aphasia
- Assessment includes spontaneous language sessions
- Manual analysis takes a lot of effort and time and is not paid for, so it is often not done or done only partially
- Can we automate this using language technology?
- Goal: develop an application to semi-automatically analyse spontaneous language transcripts grammatically
- Methods: TARSP, STAP and ASTA

Problem
- TARSP (children 1-4), STAP (older children) and ASTA (aphasia patients)
- Define language measures, e.g.
  - occurrence of certain word classes: # nouns, # compound nouns, # diminutives, # verbs, # finite verbs, # copulas, ...
  - constructions: auxiliary + infinitive, adjective used attributively, inversion, subordinate clauses, coordination, ...
- Derive scores and compare these to normative figures

SASTA Application (SASTA project)
- Input: transcript of a spontaneous language session
  - Formats: SASTA-specific (.docx, .txt) or CHAT
  - Or: annotated transcript
- Parameter: select a method (TARSP, ASTA, STAP)
- Output:
  - Generic score form
  - Method-specific score forms (currently: TARSP, ASTA)
  - Annotated transcript, format based on the Schlichting 2005 Appendix

SASTA Application
- Web application
- Public version soon here: https://sasta.hum.uu.nl
- Server version for use in clinics
- Desktop application
- Internal development: Sastadev software

SASTA Application: how does it work?
- Based on GrETEL, a treebank query application
- Crucial components:
  - Upload facility (upload one's own text or corpus)
  - Parse facility (Alpino parser)
  - Query facilities (XPath query language)
- Different arrangement of these components:
  - GrETEL: optimized for one query applied to a large treebank
  - SASTA: optimized for multiple queries applied to a small treebank

SASTA Application: how does it work?
- Input text in CHAT format
- CHAT is processed:
  - Metadata
  - CHAT annotations (result of the AnnCor project)
- Each utterance is automatically parsed by the Alpino parser, which yields a treebank
- The method is defined (in Excel format)
- The method is read in
- Its queries are applied to the treebank
- The queries generate scores and annotations
- Illustration
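The step "queries applied to the treebank, queries generate scores" can be sketched as below. This is a minimal illustration, not the SASTA implementation: the two trees are hand-written, heavily simplified stand-ins for Alpino parses, the measure names and the XPath expressions in `METHOD` are hypothetical, and Python's `xml.etree.ElementTree` only supports a subset of XPath, so the "auxiliary + infinitive" query is reduced to "an infinitival verbal complement".

```python
import xml.etree.ElementTree as ET

# Hand-written, simplified stand-ins for Alpino parses (assumption: real
# Alpino output has many more attributes and a richer structure).
TREEBANK = {
    1: ET.fromstring(  # "ik wil spelen"
        '<alpino_ds><node cat="top" rel="top"><node cat="smain" rel="--">'
        '<node rel="su" pt="vnw" word="ik"/>'
        '<node rel="hd" pt="ww" wvorm="pv" word="wil"/>'
        '<node rel="vc" cat="inf">'
        '<node rel="hd" pt="ww" wvorm="inf" word="spelen"/>'
        '</node></node></node></alpino_ds>'
    ),
    2: ET.fromstring(  # "de poes slaapt"
        '<alpino_ds><node cat="top" rel="top"><node cat="smain" rel="--">'
        '<node rel="su" cat="np">'
        '<node rel="det" pt="lid" word="de"/>'
        '<node rel="hd" pt="n" word="poes"/>'
        '</node>'
        '<node rel="hd" pt="ww" wvorm="pv" word="slaapt"/>'
        '</node></node></alpino_ds>'
    ),
}

# Hypothetical method definition: one query per language measure.
METHOD = {
    "nouns": ".//node[@pt='n']",
    "finite_verbs": ".//node[@pt='ww'][@wvorm='pv']",
    "aux_plus_inf": ".//node[@rel='vc'][@cat='inf']",
}


def apply_method(treebank, method):
    """Run every query on every utterance; return counts and matching utterances."""
    scores = {}
    for measure, xpath in method.items():
        hits = {utt: len(tree.findall(xpath)) for utt, tree in treebank.items()}
        scores[measure] = {
            "count": sum(hits.values()),
            "utterances": sorted(utt for utt, n in hits.items() if n),
        }
    return scores


scores = apply_method(TREEBANK, METHOD)
for measure, result in scores.items():
    print(measure, result["count"], result["utterances"])
```

The loop runs all queries over a handful of small trees, which mirrors the "multiple queries, small treebank" arrangement described for SASTA, as opposed to one query over a large treebank.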

Results (4-sep)
- Bronze reference: the data as provided
- Silver reference: the provided data, adapted
  - by the developers, after applying SASTA
  - currently being checked independently by the original data providers

Results (4-sep)
- Comparisons:
  - Results v. Bronze: indicator for quality (independent reference)
  - Results v. Silver: indicator for quality (partially dependent reference)
  - Bronze v. Silver: indicator for the quality of human annotation
- Measures: recall, precision, F1-score
- Results reflect the defined core queries
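For reference, recall, precision and F1 can be computed as in the sketch below. It assumes that annotations are compared as multisets of (utterance number, measure code) pairs; whether SASTA matches annotations exactly this way is an assumption, and the toy data is made up for illustration.

```python
from collections import Counter


def prf(result, reference):
    """Return (recall, precision, F1) in percent for two multisets of annotations."""
    res, ref = Counter(result), Counter(reference)
    # True positives: annotations present in both, respecting multiplicity.
    tp = sum((res & ref).values())
    precision = 100 * tp / sum(res.values()) if res else 0.0
    recall = 100 * tp / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return recall, precision, f1


# Made-up annotations: (utterance number, measure code).
result = [(1, "Hww i"), (1, "N"), (2, "N"), (3, "V")]
reference = [(1, "Hww i"), (2, "N"), (2, "N"), (3, "V"), (4, "N")]

recall, precision, f1 = prf(result, reference)
print(f"R={recall:.1f} P={precision:.1f} F1={f1:.1f}")
```

Three of the four system annotations are found in the reference, which contains five annotations, so precision is 3/4 and recall is 3/5.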

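ASTA Scores (4-sep)

Column groups: Results v. Bronze (RvB), Results v. Silver (RvS), Bronze v. Silver (BvS). R = recall, P = precision.

| Sample | RvB R | RvB P | RvB F1 | RvS R | RvS P | RvS F1 | BvS R | BvS P | BvS F1 |
|---|---|---|---|---|---|---|---|---|---|
| ASTA 01 | 62.9 | 73.5 | 67.8 | 79.6 | 92.7 | 85.7 | 82.5 | 82.2 | 82.3 |
| ASTA 02 | 72.2 | 71.8 | 72.0 | 76.7 | 89.3 | 82.5 | 84.5 | 98.9 | 91.1 |
| ASTA 03 | 80.9 | 80.9 | 80.9 | 83.3 | 95.2 | 88.8 | 87.1 | 99.6 | 92.9 |
| ASTA 04 | 72.5 | 78.2 | 75.2 | 75.4 | 90.8 | 82.4 | 88.7 | 99.1 | 93.6 |
| ASTA 05 | 52.0 | 51.2 | 51.6 | 65.6 | 87.4 | 74.9 | 71.4 | 96.6 | 82.1 |
| ASTA 06 | 95.2 | 78.3 | 85.9 | 95.9 | 92.4 | 94.1 | 85.0 | 99.6 | 91.7 |
| ASTA 07 | 80.5 | 73.9 | 77.1 | 83.7 | 85.1 | 84.4 | 86.1 | 95.5 | 90.6 |
| ASTA 08 | 89.4 | 75.0 | 81.6 | 92.2 | 98.3 | 95.2 | 77.6 | 98.7 | 86.9 |
| ASTA 09 | 88.7 | 82.9 | 85.7 | 90.1 | 92.0 | 91.0 | 90.6 | 98.9 | 94.6 |
| ASTA 10 | 88.4 | 77.7 | 82.7 | 90.1 | 92.7 | 91.4 | 84.8 | 99.2 | 91.4 |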

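TARSP (1-sep)

Column groups: Results v. Bronze (RvB), Results v. Silver (RvS), Bronze v. Silver (BvS). R = recall, P = precision.

| RvB R | RvB P | RvB F1 | RvS R | RvS P | RvS F1 | BvS R | BvS P | BvS F1 |
|---|---|---|---|---|---|---|---|---|
| 85.1 | 73.1 | 78.6 | 87.5 | 94.2 | 90.7 | 79.8 | 100.0 | 88.7 |
| 82.3 | 63.7 | 71.8 | 88.5 | 92.7 | 90.6 | 70.8 | 95.8 | 81.4 |
| 92.6 | 81.1 | 86.4 | 94.4 | 100.0 | 97.1 | 82.1 | 99.3 | 89.9 |
| 73.5 | 56.8 | 64.1 | 83.4 | 95.5 | 89.0 | 66.2 | 98.0 | 79.1 |
| 86.2 | 66.7 | 75.2 | 90.2 | 98.7 | 94.3 | 70.7 | 100.0 | 82.9 |
| 75.9 | 63.3 | 69.0 | 85.2 | 98.8 | 91.5 | 69.4 | 96.5 | 80.7 |
| 89.4 | 69.4 | 78.2 | 92.3 | 97.8 | 95.0 | 73.2 | 100.0 | 84.5 |
| 79.2 | 69.1 | 73.8 | 85.3 | 98.2 | 91.3 | 74.7 | 98.6 | 85.0 |
| 76.8 | 64.4 | 70.0 | 83.1 | 95.8 | 89.0 | 72.8 | 100.0 | 84.3 |
| 82.1 | 69.7 | 75.4 | 86.3 | 95.2 | 90.5 | 76.4 | 99.3 | 86.3 |

Samples listed on the slide: Tarsp_01.xml, Tarsp_02.xml, Tarsp_04.xml, Tarsp_06.xml, Tarsp_07.xml, Tarsp_08.xml, Tarsp_09.xml, Tarsp_10.xml, Tarsp_03.xml, Tarsp_05.xml.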

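STAP scores (25-sep): complexity analysis (STAP-form sheet 5)

Column groups: Results v. Bronze (RvB), Results v. Silver (RvS), Bronze v. Silver (BvS). R = recall, P = precision.

| Sample | RvB R | RvB P | RvB F1 | RvS R | RvS P | RvS F1 | BvS R | BvS P | BvS F1 |
|---|---|---|---|---|---|---|---|---|---|
| STAP_02 | 73.2 | 80.4 | 76.6 | 75.1 | 88.9 | 81.4 | 92.3 | 99.4 | 95.7 |
| STAP_03 | 84.2 | 83.5 | 83.8 | 84.5 | 90.0 | 87.2 | 88.6 | 95.2 | 91.8 |
| STAP_04 | 88.8 | 93.6 | 91.1 | 89.1 | 96.6 | 92.7 | 96.8 | 99.5 | 98.2 |
| STAP_05 | 86.8 | 89.8 | 88.3 | 87.1 | 92.4 | 89.7 | 96.7 | 99.0 | 97.8 |
| STAP_06 | 92.3 | 91.8 | 92.1 | 92.7 | 97.3 | 95.0 | 94.8 | 100.0 | 97.3 |
| STAP_07 | 82.7 | 88.6 | 85.6 | 83.8 | 95.3 | 89.1 | 93.8 | 99.6 | 96.6 |
| STAP_08 | 86.7 | 80.6 | 83.5 | 92.1 | 88.6 | 90.3 | 85.7 | 88.8 | 87.2 |
| STAP_09 | 91.2 | 91.2 | 91.2 | 91.7 | 96.5 | 94.0 | 95.0 | 100.0 | 97.4 |
| STAP_10 | 93.0 | 82.8 | 87.6 | 93.8 | 93.1 | 93.4 | 89.6 | 100.0 | 94.5 |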

Deviant Language
- SASTA mainly does grammatical analysis; there is no analysis or correction of deviant language (which occurs a lot)
- Partially manual annotation (Examples), but it is incomplete, inconsistent and often absent
- Deviant language negatively affects the grammatical analysis (Examples)
- Can we (partially) automate this too?

Deviant Language: examples
- Deviant transcriptions (Examples), usually to indicate a specific pronunciation*
- (Regional) informal spoken language
  - ie-diminutives* (Examples)
  - Incorrect possessive pronouns (Examples)
- Wrong pronunciation indicated in the transcript (Examples)
- Legend: * = first version has been tested independently; + = first version is available

Deviant Language
- False starts, repetitions, incomplete utterances+ (Examples)
- Grammatical errors
  - (Wrong) overgeneralisations* of verbal inflection (Examples)
  - Wrong determiner+ (Examples)
  - Wrong auxiliary (Examples)

Other matters
- Limitations of Alpino
  - Insufficient information on compounds* (Examples)
  - Insufficient information on verbless utterances (Examples)
  - Insufficient information on V1-sentences+ (Examples)
  - Analysis with words the child does not know at its age (Examples)
  - Analysis as a construction the child does not know at its age (Examples)

Small experiment
- Schlichting 2005 appendix
- Automatic corrections (marked with *) improve recall (2 percentage points) and precision (0.4 percentage points)
- Not integrated in SASTA yet; we are currently working on this

Schlichting % scores:

| Eval. method | Corrections | R | P | F1 |
|---|---|---|---|---|
| Sastadev | No | 86.5 | 80.8 | 83.6 |
| Sastadev | Yes | 88.5 | 81.2 | 84.7 |

Concluding remarks
- Automating analysis of spontaneous language:
  - Promising results, though not perfect
  - Potential for further improvements
  - Its actual usefulness must be tested in a clinical setting
- If successful:
  - May have great societal impact: enables such assessments, reduces their costs, improves their quality
  - May have scientific impact: many of the linguistic problems require sophisticated solutions
  - May benefit the CLARIN research infrastructure: derivative program CHAMP-NL, CHAT iMProver for Dutch
- Here applied to Dutch, but the approach can be followed for other languages for which there is a parser and a query system to query the structures generated by the parser

Outlook
- SASTA project phase 2: is ASTA good enough to be useful / efficient / workable in the clinical setting?
  - Proposal prepared, meeting VKL in October
- Cooperation with other partners: Auris, Hogeschool Utrecht, Itslanguage
  - Extend speech recognition, combine with speech recognition, alternative annotation interfaces

Outlook: SASTA+ (funded by Utrecht University)
- Deal with deviant language
  - Generate multiple alternatives
  - Parse these alternatives
  - Compare the resulting parses and select the best, e.g. by degree of grammatical cohesion
- Automatic replacement of deviant pronunciation based on
  - Context
  - Other documents of the patient / the clinic / CHILDES corpora
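The "generate alternatives, parse, select the best" idea could look roughly like the sketch below. Everything in it is hypothetical: the correction table is built from a few examples on the slides, and `toy_score` is a stand-in that counts known word forms. In the approach described above, each alternative would instead be parsed and the parses compared, for instance on grammatical cohesion.

```python
from itertools import product

# Hypothetical correction table; each deviant form maps to its candidates,
# always including the original form itself.
ALTERNATIVES = {
    "me": ["me", "mijn"],
    "gevalt": ["gevalt", "gevallen"],
    "stukkies": ["stukkies", "stukjes"],
}

# Stand-in for a parser-based quality measure (assumption, not Alpino).
KNOWN_FORMS = {"mijn", "broer", "is", "gevallen", "stukjes"}


def alternatives(utterance):
    """Expand an utterance into all combinations of candidate corrections."""
    options = [ALTERNATIVES.get(token, [token]) for token in utterance.split()]
    return [" ".join(combo) for combo in product(*options)]


def toy_score(utterance):
    """Count tokens that are known word forms (placeholder for parse quality)."""
    return sum(token in KNOWN_FORMS for token in utterance.split())


def select_best(utterance, score=toy_score):
    """Return the highest-scoring alternative for the utterance."""
    return max(alternatives(utterance), key=score)


candidates = alternatives("me broer is gevalt")
best = select_best("me broer is gevalt")
print(len(candidates), "candidates; best:", best)
```

Passing the scoring function as a parameter keeps the candidate generation independent of how alternatives are ranked, so a parser-based score could replace `toy_score` without touching the rest.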

SASTA+
- And the derivative product: CHAMP-NL (CHAT iMProver for Dutch)
  - Suggests improvements of CHAT files
  - Especially important since we also offer MOR and GRA analysis for CHAT files

References & more info
- Schlichting, Liesbeth (2005). TARSP. Taalontwikkelingsschaal van Nederlandse kinderen van 1-4 jaar. Amsterdam: Pearson.
- http://portal.clarin.nl, http://www.clariah.nl
- Augustinus, L. et al. (2017). GrETEL: A Tool for Example-Based Treebank Mining. In: Odijk, J. and van Hessen, A. (eds.) (2017). CLARIN in the Low Countries, pp. 269-280. London: Ubiquity Press. DOI: https://doi.org/10.5334/bbi.22. License: CC-BY 4.0.
- Odijk, J., van der Klis, M., and Spoel, S. (2018). Extensions to the GrETEL treebank query application. Proceedings of the 16th International Workshop on Treebanks and Linguistic Theories (TLT16), pp. 46-55. Prague. http://aclweb.org/anthology/W/W17/W17-7608.pdf
- Odijk, J. and van Hessen, A. (eds.) (2017). CLARIN in the Low Countries. London: Ubiquity Press. (Open Access). DOI: http://dx.doi.org/10.5334/bbi
- Odijk, J. et al. (2018). The AnnCor CHILDES Treebank. In Nicoletta Calzolari et al. (eds.), Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, pp. 2275-2283. Paris, France: ELRA.

TARSP Experiment
- A method specifies language measures
- Example (TARSP), illustrated in GrETEL: Hww i (auxiliary with infinitive) query
- Applied to the Schlichting 2005 Appendix 5
  - Results: utterances 10, 11, 13, 22, 28
  - Schlichting's analysis: 10, 11, 13, 22, 28

CHAT Annotation Examples

| Actually said | Intended | CHAT annotation | Translation |
|---|---|---|---|
| tactor | tractor | `t(r)actor` | |
| nerke | nergens | `nerke [: nergens]` | nowhere |
| taat | staat | `taat [: staat]` | stands |
| Die kan je die kan je | Die kan je | `<die kan je> [/] die kan je` | These you can |
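The replacement annotations in this table follow the CHAT pattern `said [: intended]`. A minimal sketch of producing them from a correction table is shown below; the function name, the correction table and the test utterance are made up for illustration and are not part of SASTA or CHAMP-NL.

```python
def annotate_replacements(utterance, corrections):
    """Add CHAT replacement annotations 'said [: intended]' to known deviant tokens."""
    annotated = []
    for token in utterance.split():
        intended = corrections.get(token)
        if intended and intended != token:
            annotated.append(f"{token} [: {intended}]")
        else:
            annotated.append(token)
    return " ".join(annotated)


# Hypothetical correction table, taken from two rows of the examples.
CORRECTIONS = {"nerke": "nergens", "taat": "staat"}

annotated = annotate_replacements("die taat nerke", CORRECTIONS)
print(annotated)  # die taat [: staat] nerke [: nergens]
```

Keeping the actually spoken form and adding the intended form next to it preserves what was said while giving the parser a standard word to work with.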

Deviant Language: wrong analysis

| Expression | Analysed as | Should be | Translation |
|---|---|---|---|
| stukkies* | N, sg, compound stuk + kies ("broken tooth") | N, pl, dim, lemma=stuk | small pieces |
| isse | N, sg | V, fin, lemma=is | is |
| Varkens zit | Two utterances | One utterance with agreement error | Pigs is |

Deviant Transcription

| Transcription | Should be | Reason | Translation |
|---|---|---|---|
| Mouwe* | mouwen | Final n not pronounced | sleeves |
| Gaatie* | gaat ie | Pronounced as one word | goes he |
| zie-ken-huis* | ziekenhuis | Syllables pronounced separately | hospital |

Ie-Diminutives

| Transcription | Should be | Translation |
|---|---|---|
| stukkies* | stukjes | little pieces |
| poppie* | popje | little doll |
| beessie* | beestje | little animal |
| cluppie* | clubje | favourite club |

Possessive pronouns

| Transcription | Should be | Translation |
|---|---|---|
| ze zus | zijn zus | she sister (his sister) |
| me broer | mijn broer | me brother (my brother) |

Wrong pronunciation

| Transcription | Should be | Translation |
|---|---|---|
| gaap | schaap | sheep |
| goot | groot | big |
| vaketje | varkentje | little pig |

False starts, repetitions, incomplete utterances

| Utterance | Gloss |
|---|---|
| ik werd niet meer ik werd afge afge afge afgedech afgedech+ | I was no more I was offtha offtha offtha offthank offthank |
| ik heb nog euh de half euh de euh van de euh voor de euh+ | I have still eh the half eh the eh of the eh for the eh |
| O oke uhm wat wat er is gebeurd+ | Oh OK eh what what there is happened |
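Immediate repetitions like those above can be marked with the CHAT repetition code `[/]`, with angle brackets around repeated stretches of more than one word. The sketch below is a simple heuristic under stated assumptions: it only detects exact, directly adjacent repeats of up to `max_len` words, and the function name and parameter are hypothetical. It does not handle false starts or filled pauses such as "euh".

```python
def mark_repetitions(utterance, max_len=4):
    """Mark exact, directly adjacent repeats with the CHAT repetition code [/]."""
    tokens = utterance.split()
    marked = []
    i = 0
    while i < len(tokens):
        # Try the longest repeated stretch first.
        for n in range(max_len, 0, -1):
            chunk = tokens[i:i + n]
            if len(chunk) == n and chunk == tokens[i + n:i + 2 * n]:
                text = " ".join(chunk)
                marked.append(f"<{text}> [/]" if n > 1 else f"{text} [/]")
                i += n
                break
        else:
            # No repeat starts here; copy the token unchanged.
            marked.append(tokens[i])
            i += 1
    return " ".join(marked)


print(mark_repetitions("die kan je die kan je"))
print(mark_repetitions("O oke uhm wat wat er is gebeurd"))
```

The first call reproduces the annotation `<die kan je> [/] die kan je` from the CHAT examples; the second marks the single repeated word as `wat [/] wat`.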

(Wrong) Overgeneralisations

| Transcription | Should be | Translation |
|---|---|---|
| uitgekijken* | uitgekeken | watched out |
| gekeekt* | gekeken | watched |
| gevalt* | gevallen | fallen |

Wrong Det / Aux

| Transcription | Should be | Translation |
|---|---|---|
| de kopje thee | het kopje thee | The cup of tea |
| de ponyautootje | het ponyautootje | The pony car |
| Dat heeft gebeurd | Dat is gebeurd | That has happened |

Compounds

| Transcription | Should be analysed as | Translation |
|---|---|---|
| tafelkleed | tafel+kleed | table cloth |
| kinderboerderij | kinder+boerderij | children's farm |

V1 Utterances

| Type | V1-expression | Translation |
|---|---|---|
| dat-drop | Is kipje | Is little-chicken |
| Imperative | Kijk eens | Look |
| daar-drop | Gaat ie | (There) he goes |
| wat-drop | Is dat? | (What) is that? |
| yes-no question | Blijft ie wel staan | Stays it standing |
| ik-drop | Moet deze lepel | (I) must have this spoon |

Wrong word / construction analysis

| Transcription | Analysed as | Translation |
|---|---|---|
| Emmer mee | emmer / verb v. emmer / noun (child age: 3;7) | "whine on with" v. "bucket with" |
| Die dicht | dicht / verb v. dicht / adj (child age: 3;7) | "he writes poetry" v. "it (is) closed" |
| De kippen hier | hier mod kippen v. hier as predicate (child age: 4;6) | the chickens here |

Verbless utterances

| Transcription | Analysed as | Should be | Translation |
|---|---|---|---|
| Ik naar omie | PP naar omie mod ik | PP pred ik | I to uncle |
| Ik varken | Two independent utterances | Ik subject, varken object | I (want) pig |
| Ladder hier | Hier mod ladder | Hier pred ladder | ladder (is) here |

SASTA Project Phase I
- SASTA project: Semi-Automatic Analysis of Spontaneous Language
- Funded by CLARIAH, Stichting Taaltechnologie, Vereniging voor Klinische Linguïstiek (VKL), Vogellanden
- Team:
  - Utrecht University: Jan Odijk, Jelte van Boheemen, Sjoerd Eilander
  - VKL: Rob Zwitserlood, Margo Zwitserlood
  - Vogellanden & VKL: Nina Blom, Elsbeth Boxum